Our growth in assessment! - East Carolina University

advertisement

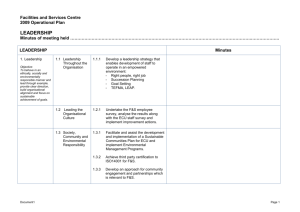

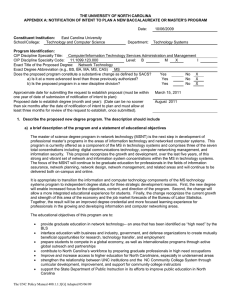

A Perspective on Student Learning at ECU
It's working!
Marilyn Sheerer
Provost and Senior Vice Chancellor

Introduction
• Policymakers, accrediting bodies, and association leaders continue to focus on assessing student learning outcomes
• What is happening on the ground at ECU?
  – Where does assessment rank in importance on our action agenda?
  – To what extent are faculty involved in assessment activities?
  – To what extent are faculty using the results to improve student learning?

Themes of this Conversation
• Assessment has taken root at ECU: we can demonstrate best practices
• Planning and assessment are complementary work, demonstrating that we are effectively accomplishing our mission
• The UNC Performance Funding Initiative and Strategic Goals

Best Practices of Assessment
• Review of Report Components for Improvement Opportunities
• Dissemination of Results
• Data-Driven Decisions

Best Practices of Assessment
• Review of Report Components
  – Biology Foundations: "This meeting came to the conclusion that we needed to: 1) re-examine our assessment instrument to ensure that the data collected was meaningful and could be used for effective course redesign, and 2) come up with a method to ensure higher completion rates of the assessment tools."

Best Practices of Assessment
• Review of Report Components (Cont'd)
  – Counseling & Student Development: "The center created therapy groups that focus on the top presenting issues. Knowing the usage patterns has enabled the center to be more prepared during peak times with backup services and support. This data has influenced how crisis counselor rotations are assigned."

Best Practices of Assessment
• Dissemination of Results
  – Economics Foundations: "Results were reported to instructors in courses surveyed and to the department faculty for use to improve course content and presentation."

Best Practices of Assessment
• Dissemination of Results (Cont'd)
  – Academic Advising (Starfish Outcome): "Results of the surveys have been communicated to administrators and faculty. Individual meetings with departmental chairs were scheduled for the purpose of sharing survey results, receiving feedback for improvements to Starfish and training, and inquiring about faculty interest in collaborative research using Starfish."

Assessment Best Practices
• Data-Driven Decisions
  – CON Dean's Office: "Reorganized business office to more efficiently administer grants. Frequent meetings with PIs to review budget progress. Continue to utilize university systems to ensure compliance with internal controls."

Assessment Best Practices
• Data-Driven Decisions (Cont'd)
  – COE Center for Science, Math & Technology (Professional Development Outcome): The data collected from the meeting helped to direct the Center's summer professional development offerings. Based on the requests, the sessions mainly addressed short-term needs of teachers in mathematics and science. Over 20 sessions were offered and over 60 teachers were trained during the summer in areas ranging from Algebra 1 and discrete mathematics to scientific assessment and digital literacy.

Assessment Best Practices
• Data-Driven Decisions (Cont'd)
  – Office of Grants & Contracts (Training Outcome): "As requested on the survey, the training schedule was revamped to provide more meeting, webinar, and online-style sessions. Additional training PowerPoint presentations were developed and posted to the OGC website."

Our growth in assessment!
2007
• Unknown number of plans/reports from educational programs & support services
• No centralized reporting system
• No infrastructure for review of assessment reports
• IE of research units not assessed
• IE of service units not assessed
2012
• 400+ assessment units in a centralized reporting system, including:
  – 302 educational program units
  – 43 administrative support service units
  – 81 academic and student support service units
  – 25 research units
  – 22 service units

Improvements in Institutional Effectiveness (IE) Reported from SACS Working Groups
• Rubric developed and used for review of assessment reports
• Formation of the Institutional Effectiveness Council
• SharePoint workflow constructed for the review of all academic assessment reports; the process includes department chairs, associate deans for assessment, deans, and a university working group
• Established formal reporting processes for all research and service units
• Formation of Public Service and Community Relations, Chancellor's Division

Improvements in IE
• Established procedures for benchmarking materials expenditures and staffing against peers using ASERL data
• Faculty training and peer review of online instruction based on the new Distance Education Policy
• Established procedures for benchmarking information literacy results against peers and aspirational peers using LibQUAL+ survey data

Importance of this Work
• Assessment of student learning is the centerpiece of SACS accreditation.
• Authentic assessment is our top priority.
• Expect close scrutiny of distance education (DE): how we monitor quality, training of faculty, and peer review of instruction.
Importance of this Work
Not simply seeking SACS reaffirmation:
"We are committed to changing the way in which we assess our teaching and learning, and thus, to continuous improvement of our entire educational paradigm."
(Provost's Update, January 11, 2011)

ECU Official Assessment Cycle 2012-2013
• Units will receive feedback in the form of a rubric
• IPAR will assist in integrating the assessment and strategic planning processes
• Units will reflect on the feedback and use it to make changes in TracDat
• Units will continue ongoing assessment and strategic planning efforts

Tying Planning to Assessment
• Align learning outcomes with the institutional mission
• Reduce duplication of effort
• Evaluate Strategic Directions using assessment techniques
• Ensure congruent linking to optimize the accomplishment of annual and long-term goals
• Integrate planning and assessment into existing organizational reporting efforts and timelines
• Provide assessment and planning training to the university and implement a communication plan
• Build a culture of continuous improvement that is centralized and based on data and the use of results

UNC: Our Time, Our Future (DRAFT - 2012)
1. Set Degree Attainment Goals Responsive to State Needs
2. Strengthen Academic Quality
3. Serve the People of North Carolina
4. Maximize Efficiencies
5. Ensure an Accessible and Financially Stable University

UNC Model for Access & Student Success

Performance Based Funding Metrics
• ECU Performance Based Funding Metrics
  – Freshman-to-Sophomore Retention Rate
  – Six-Year Graduation Rate
  – NCCCS Transfer Student Retention Rate
  – Degrees Awarded to Pell Recipients
  – Degrees Awarded in STEM and Health Disciplines

United States Department of Education Strengthening Institutions Program: Title III
• Student Learning Outcomes: development of department-specific and University-wide written, comprehensive plans for using institutional effectiveness planning and assessment mechanisms to enhance student learning
• Think, Value, Communicate & Lead (TVCL) Framework: a tool to support a faculty-driven approach to defining what an ECU graduate should look like