Chapter 5 Producing Data

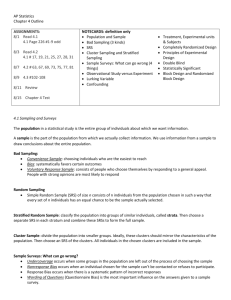

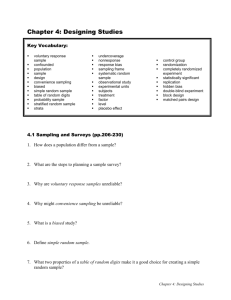

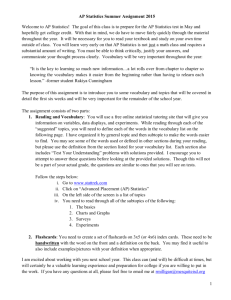

A. Mann, adapted from Hamilton
AP Stats

Introduction

5.1 Designing Samples

HOMEWORK: 5.1, 5.3, 5.7, 5.9, 5.10, 5.11, 5.13, 5.16, 5.19, 5.20

Questions We Want to Answer

A political scientist wants to know what percentage of voting-age individuals consider themselves conservatives.

Economists want to know the average household income.

An automaker wants to know what percent of adults ages 18 to 35 recall seeing television advertisements for a new sport utility vehicle.

How could we answer these questions?

What’s the Population and What’s the Sample?

The population is the entire group of individuals we want information about. A sample is the part of the population we actually examine in order to gather that information. Identify the population and the sample in each case:

1. We are going to survey 3,000 high school student athletes at random to determine if they are planning to attend college.
2. We are going to survey 3,000 high school male student athletes at random to determine if they are planning to attend college.
3. We are going to survey 100 property owners in Charlotte County to determine their opinions about property taxes.

A census attempts to contact every individual in the entire population. A census is very time-consuming and expensive, and often we need information next week, not next year. For this reason, sampling is usually preferable to taking a census. We always want to know about characteristics of a population; if we could easily ask everyone, we would. Since we cannot, we rely on samples. As a result, the central issue is: how do we get a sample that is representative of the population?

Sampling Method

The sampling method is the process used to choose a sample from the population. Poor sampling methods produce misleading conclusions.

Example – Nightline once conducted a viewer call-in poll and asked, “Should the U.N. continue to have its headquarters in the U.S.?” Over 186,000 people responded, and 67% said no! A properly designed survey later found that 72% of adults want the U.N. to stay in the U.S. Why were the results so different? The Nightline poll was inaccurate because it was a voluntary response sample: people who felt strongly about the U.N. called in, and others did not.

Voluntary Response Sample

A voluntary response sample consists of people who choose themselves by responding to a general appeal. Examples (look at Ex. 5.3 on p. 331, Same-sex marriage): TV call-in polls, Internet polls, mailed surveys or questionnaires, and anything else a person has a choice of responding to.

Convenience Sample

A convenience sample chooses the individuals who are easiest to reach. For example, if I wanted to know high school students’ opinions about smoking, I might conduct a survey at R-HHS. This would be a convenience sample because I have ready access to students at R-HHS. Would it be representative of all high school students? Look at Example 5.4 on p. 332, Interviewing at the Mall.

Bias

Voluntary response samples and convenience samples choose samples that are almost guaranteed not to represent the entire population. Hence they display bias: the design of a study is biased if it systematically favors certain outcomes. How was my convenience sample of R-HHS students biased? Because of bias, we want to avoid voluntary response and convenience samples.

What’s the Answer?

In a voluntary response sample, people choose whether to respond. In a convenience sample, the interviewer chooses whom to interview. In both cases, human choice leads to bias. The remedy is to let chance choose the sample. Chance allows neither favoritism by the interviewer nor self-selection by the respondent. Choosing by chance attacks bias by making all members of the population equally likely to be chosen: rich and poor, young and old, black and white, etc.

Simple Random Sample (SRS)

A simple random sample (SRS) of size n consists of n individuals from the population chosen in such a way that every set of n individuals has an equal chance to be the sample actually selected. So an SRS not only gives each individual an equal chance of being selected, but also gives every possible sample an equal chance of being selected. The idea of an SRS is to draw names from a hat.

Computers and calculators can be used to select an SRS. If you don’t use these, use a table of random digits; Table B at the back of the book is one. In a table of random digits, each entry is equally likely to be any of the ten digits 0 to 9, the entries are independent of each other, each pair of entries is equally likely to be any of the 100 possible pairs (00 to 99), and so on. Because every label is equally likely, random digit tables are useful for choosing an SRS. Look at p. 336, Example 5.5, Choosing an SRS.

Choose a simple random sample of 5 letters from the alphabet using the random digit table.

Probability Sample

An SRS is one kind of probability sample: a sample chosen by chance, where we know which samples are possible and what chance each has. In an SRS, each member of the population has an equal chance of being selected; this is not always true in more elaborate sampling methods. In any event, using chance to select the sample is the essential principle of statistical sampling.

Stratified Random Sample

Sampling from a large population spread out over a wide area requires more complex sampling methods than an SRS. To choose a stratified random sample, first divide the population into groups of similar individuals, called strata. Then choose a separate SRS in each stratum and combine these SRSs to form the full sample. Define the strata using information available before choosing the sample, so that each stratum groups individuals that are likely to be similar. For example, you might divide high schools into public schools, Catholic schools, and other private schools. A stratified sample can give good information about each stratum as well as about the overall population. Look at Example 5.6 on p. 339. Look at 5.11 on p. 342.

Cluster Sample

Cluster sampling breaks the population into groups, or clusters, then randomly selects some clusters and includes every individual in the chosen clusters. This method is used because some populations are naturally grouped, which makes the clusters easy to use. For example, high schools provide clusters of U.S. students. As long as we select the high schools at random, we should still get a representative sample. Look at Example 5.7 on p. 340.

[Diagram: stratified random sampling (the population is divided into strata #1 to #4, and individuals are randomly selected from each stratum to form the sample) compared with cluster sampling (clusters are randomly selected from the population to form the sample).]

Cautions about Sample Surveys

Random selection eliminates bias in choosing the sample, but getting accurate information requires much more than good sampling technique. To begin with, we need an accurate and complete list of the population, which rarely if ever exists. As a result, most samples suffer from undercoverage. Another, more serious source of bias is nonresponse, which occurs when a selected individual cannot be contacted or refuses to cooperate.
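The SRS, stratified, and cluster designs described in this section can be sketched in Python as a way to see the differences side by side. This is a minimal illustration under stated assumptions: the function names are hypothetical, the digit string is an illustrative line of random digits (not claimed to be any particular line of Table B), and Python's `random.Random.sample` stands in for a calculator or for drawing names from a hat.

```python
import random
import string
from collections import defaultdict


def srs_from_digit_table(digits, population, n):
    """Choose an SRS of size n by reading digits from a random digit table.

    Units are labeled 1..N with labels of equal width (01-26 for the
    alphabet). The digits are read in groups of that width; labels outside
    1..N and labels already chosen are skipped.
    """
    digits = "".join(ch for ch in digits if ch.isdigit())  # drop spaces between digit groups
    width = len(str(len(population)))
    chosen = []
    for start in range(0, len(digits) - width + 1, width):
        label = int(digits[start:start + width])
        if 1 <= label <= len(population) and label not in chosen:
            chosen.append(label)
            if len(chosen) == n:
                return [population[k - 1] for k in chosen]
    raise ValueError("ran out of digits before the sample was complete")


def stratified_random_sample(population, stratum_of, n_per_stratum, rng):
    """Take a separate SRS of n_per_stratum units within each stratum, then combine them."""
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for units in strata.values():
        sample.extend(rng.sample(units, n_per_stratum))
    return sample


def cluster_sample(clusters, n_clusters, rng):
    """Randomly choose n_clusters whole clusters and include every unit in them."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for name in chosen for unit in clusters[name]]


alphabet = list(string.ascii_uppercase)
print(srs_from_digit_table("19223 95034 05756 28713 96409 12531", alphabet, 5))
# -> ['S', 'V', 'E', 'M', 'Y']

rng = random.Random(42)
# Hypothetical schools labeled (type, id); the school type is the stratum.
schools = ([("public", i) for i in range(20)]
           + [("catholic", i) for i in range(6)]
           + [("private", i) for i in range(4)])
print(stratified_random_sample(schools, lambda s: s[0], 2, rng))

# Hypothetical clusters: each school is a cluster of its students.
clusters = {f"school {k}": [f"student {k}-{j}" for j in range(3)] for k in range(10)}
print(cluster_sample(clusters, 2, rng))
```

Reading the digits in pairs gives 19, 22, 39, 50, 34, 05, ...; labels above 26 are skipped, so the letters chosen are S (19), V (22), E (05), M (13), and Y (25).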
Undercoverage

Undercoverage occurs when some groups in the population are left out of the process of choosing the sample. A survey of households will miss homeless people, prison inmates, and college students living in dormitories. An opinion poll conducted by phone will miss the 7-8% of Americans who do not have residential phones.

Nonresponse

Nonresponse occurs when a selected individual cannot be contacted or will not cooperate (for example, someone who has Caller ID and will not answer the phone, or who does not complete the survey).

Response Bias

The behavior of the respondent or of the interviewer may cause response bias. An individual may lie, especially if asked about illegal or unpopular behavior. The race or sex of the interviewer can also influence responses to questions about race or gender equity. Answers to questions that ask respondents to recall past events are often inaccurate because of faulty memory.

Look at Example 5.9 on p. 344, Effect of Interview Method. The question “Have you visited a dentist in the past six months?” will often get a “yes” even if the last dentist visit was 8 months ago. Look at Example 5.10 on p. 345, Effect of Perception.

Wording of Questions

How questions are worded is the most important influence on the answers given to a survey. Confusing or leading questions can introduce strong bias. Example of a leading question: “Don’t you believe that killing babies is wrong? Isn’t abortion wrong, then?” Look at Examples 5.11 and 5.12 on p. 346.

Before Believing a Poll

Insist on knowing the exact questions asked, the rate of nonresponse, and the date and method of the survey before you trust a poll result.

Inference about a Population

Even though we choose a random sample to eliminate bias, the sample results are unlikely to be exactly the same as the results for the entire population. Sample results are only estimates of the truth about the population. For example, if we draw two different samples from the same population, the results will almost certainly differ somewhat.
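Sampling variability can be seen with a short simulation. The sketch below assumes a hypothetical population of 10,000 people in which 60% would answer "yes" (coded 1); the function names are illustrative, and Python's `random` module stands in for choosing SRSs by hand. It draws two SRSs of the same size to show that their results differ, then compares how much the sample proportion varies across many SRSs of size 100 versus size 1000.

```python
import random
import statistics


def sample_proportion(population, n, rng):
    """Proportion of 1s (e.g. 'yes' answers) in one SRS of size n."""
    return sum(rng.sample(population, n)) / n


def spread_of_sample_proportion(population, n, reps, rng):
    """Standard deviation of the sample proportion across `reps` independent SRSs."""
    results = [sample_proportion(population, n, rng) for _ in range(reps)]
    return statistics.stdev(results)


# Hypothetical population: 6,000 "yes" (1) and 4,000 "no" (0).
population = [1] * 6000 + [0] * 4000
rng = random.Random(2024)

# Two SRSs of the same size from the same population usually give different results.
print(sample_proportion(population, 100, rng), sample_proportion(population, 100, rng))

# Larger SRSs vary less from sample to sample.
print(spread_of_sample_proportion(population, 100, 500, rng))
print(spread_of_sample_proportion(population, 1000, 500, rng))
```

With these assumed numbers, the spread for samples of 100 should come out near 0.05 and the spread for samples of 1000 near 0.015. Note that the simulation only measures chance variation: a larger sample drawn by a biased method (such as a call-in poll) would still be off target.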
The point is that we can eliminate bias by using random sampling, but the results are rarely exactly correct and will vary from sample to sample.

Inference about a Population

We can improve our results by knowing a very simple fact: larger random samples give more precise results than smaller random samples. With a large sample, we can be confident that the sample result is very close to the truth about the population. This is only true for probability samples, though! A larger sample does not remove bias caused by a poor sampling method. One way to think about the gain in precision: a few unusual individuals (outliers) have less impact on the result of a large sample.

5.2 Designing Experiments

HMWK QUESTIONS: 5.33, 5.36, 5.38, 5.39, 5.41 – 5.43, 5.45 – 5.47, 5.49. DUE FRIDAY!!!

Experiment

A study is an experiment when we actually do something to individuals in order to observe the response.

Other Experimental Vocabulary

Explanatory Variable – helps to explain or influence changes in the response variable. This is often referred to as the independent variable (x).

Response Variable – measures an outcome of a study. This is often referred to as the dependent variable (y).

The idea behind the language is that the response variable depends on the explanatory variable.

Example – To study the effect of alcohol on body temperature, researchers give different amounts of alcohol to mice and measure the change in body temperature after 15 minutes. Identify the explanatory and response variables.

Explanatory Variable – amount of alcohol

Response Variable – change in body temperature after 15 minutes

More Experimental Vocabulary

Lurking Variable – a variable that is not among the explanatory or response variables in a study and yet may influence the interpretation of relationships among those variables.

Confounding – two variables are confounded when their effects on a response variable cannot be distinguished from each other. The confounded variables may be either explanatory variables or lurking variables.
Example – Many studies have shown that people who are active in their religion live longer than those who are nonreligious. Does this mean that attending church makes you healthier? No! People who attend church tend to be more health-conscious: they are less likely to smoke, more likely to exercise, etc. These habits are lurking variables whose effects are confounded with church attendance.

More Experimental Vocabulary: Lurking Variables and Confounding

Example – People who have more education make more money. That makes sense, because many high-paying professions require more education (like teaching!). We are ignoring other variables, though. People who go to college tend to have high ability or come from wealthy families. If you have a lot of potential or start off wealthy, you are probably more likely to end up wealthy with a well-paying job. So ability and family wealth are lurking variables whose effects are confounded with education.

More Experimental Vocabulary

Factors – the explanatory variables in an experiment.

Level – a specific value of a factor.

For example, in an experiment on how the type of schedule affects students’ grades, the factor is the type of schedule. The levels could be 7 periods a day (50-minute classes), 8 periods a day (40-minute classes), semester block schedule (90 minutes every day for 90 days), alternating block (90 minutes every other day for the entire year), etc.

Explanatory Variable – type of schedule

Response Variable – grades

Purpose of an Experiment

An experiment’s purpose is to reveal the response of one variable to changes in other variables. Look at Example 5.13 on p. 354. The big advantage of experiments is that they provide good evidence for causation (A causes B). This is true because we study the factors we are interested in while controlling the effects of lurking variables. In Example 5.13, all students in the schools still followed the same curriculum and were assigned to different class types within their own school, which controlled for differences in school resources and family incomes.
Therefore, class size was the only systematic difference among the groups that could affect the results.

Effects of Several Factors

If we want to study more than one factor, we must make sure that every combination of the factors’ levels is included. Think about TV commercials: a commercial’s length and how often it is shown both affect its impact. Studying the two factors separately, however, may not tell us how they interact. For example, longer commercials and more frequent showings may each increase interest in a product, but if both are done, viewers may get annoyed and be turned off to the product. Look at Example 5.14 on p. 355.

Control

Many scientific experiments have a simple design with only a single treatment, which is applied to all of the experimental units. This design is Treatment → Observe Response. This works well in science because the experiment is conducted in a controlled lab environment. When we must conduct experiments outside a lab or with living subjects (which introduce other issues), such simple designs can yield invalid data: we cannot tell whether the response was due to the treatment or to a lurking variable. See Example 5.15 on p. 356.

Placebo Effect

A placebo is a dummy treatment. Many patients will respond favorably to any treatment, even a placebo. For example, giving someone a sugar pill for a headache will often make them feel better because they believe they actually received a pill for pain. The placebo effect is the response to a dummy treatment. An experiment can give misleading results because the effect of the explanatory variable can be confounded with the placebo effect. We need ways to combat lurking variables and confounding.

Avoiding Lurking Variables and Confounding

To eliminate the problem of the placebo effect and other lurking variables and confounding, we use a control group. A control group is similar to the group that receives the treatment, but its members do not receive the actual treatment.
In some cases, they are allowed to believe that they received the treatment, which controls for the placebo effect. Using a control group allows us to trust our results more.

Control

Control is the first principle of experimental design. Comparing several treatments in the same environment is the simplest form of control. Without control, experimental results can be dominated by such influences as the details of the experimental arrangement, the selection of subjects, and the placebo effect. Well-designed experiments tend to compare several treatments. More broadly, control refers to the overall effort to limit variability in the way the experimental units are obtained and treated.

Replication

Even with control, there is still natural variability among experimental units (we would never expect two different samples to provide exactly the same information). Our hope is that units within one treatment group will respond similarly to each other but differently from units in other treatment groups. If each group contained only one subject, we would not trust the experiment. As we increase the number of subjects in each group, we have more reason to trust the experiment, because chance variation tends to average out and the groups become more alike. This is replication: using enough subjects to reduce chance variation.

Randomization

Randomization is the use of chance to assign experimental units to treatments. Comparing the effects of several treatments is valid only when all treatments are applied to similar groups of experimental units. For example, if one corn variety is planted on more fertile ground, or if one cancer drug is given to more seriously ill patients, the comparisons are meaningless. Systematic differences among the groups of experimental units in a comparative experiment cause bias. Experimenters often attempt to match groups by hand: placing so many smokers in each group, so many people of the same age group, gender, and race in each group, etc. This is not good enough. Why?
The experimenter, no matter how hard he or she tries, could never think of or account for every possible variable involved. Some variables, such as how sick a cancer patient is, are too difficult to measure because of their subjective nature. Our remedy is to let chance (random assignment) make assignments that are not based on any characteristic of the experimental units and do not rely on the judgment of the experimenter. Look at Example 5.17 on p. 360 and Example 5.18 on p. 360, The Physicians’ Health Study.

Randomized Comparative Experiment

Logic behind a Randomized Comparative Experiment

1. Randomization produces two groups of subjects that we expect to be similar in all respects before the treatments are applied.
2. Comparative design helps to ensure that influences other than the treatment operate (act) equally on both groups.
3. Therefore, any differences in the responses must be due either to the treatment or to the random assignment of subjects to the two groups.

We hope to see a difference in the responses that is large enough that it is unlikely to happen because of chance variation alone. We can use the laws of probability to learn whether the differences in treatment effects are larger than what we would expect to see if only chance were operating. If they are, we call them statistically significant.

You often see the phrase “statistically significant” in reports of investigations in many fields of study. It tells you that the investigators found good evidence for the effect they were seeking. For example, the Physicians’ Health Study reported statistically significant evidence that aspirin reduces the number of heart attacks compared with a placebo.

Completely Randomized Design

When all experimental units are allocated at random among all treatments, the experiment is said to have a completely randomized design. Completely randomized designs can compare any number of treatments, and the treatments can be formed from the levels of a single factor or from combinations of levels of more than one factor.
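A completely randomized design can be sketched in Python as follows. This is a minimal sketch, not a textbook procedure: the function name is hypothetical, and the level labels "short" and "long" are assumed stand-ins for two commercial lengths. Each combination of factor levels is one treatment; the labeled units are shuffled and dealt into equal-size groups. In effect this is the same as drawing names from a hat, or picking random integers and discarding repeats, since every way of splitting the units into groups is equally likely.

```python
import itertools
import random


def completely_randomized_design(units, factors, rng):
    """Allocate all experimental units at random among all treatments.

    `factors` maps each factor name to its list of levels; each combination
    of levels (one level per factor) is one treatment. The units are
    shuffled and dealt into equal-size groups, one group per treatment.
    """
    names = list(factors)
    treatments = [dict(zip(names, combo)) for combo in itertools.product(*factors.values())]
    units = list(units)
    if len(units) % len(treatments) != 0:
        raise ValueError("number of units must be a multiple of the number of treatments")
    rng.shuffle(units)  # every ordering is equally likely, like drawing names from a hat
    size = len(units) // len(treatments)
    return [(treatment, units[i * size:(i + 1) * size]) for i, treatment in enumerate(treatments)]


rng = random.Random(1)

# One factor with two levels: 40 subjects, 20 per treatment.
for treatment, group in completely_randomized_design(range(1, 41), {"treatment": [1, 2]}, rng):
    print(treatment, sorted(group))

# Two factors: 2 lengths x 3 numbers of showings = 6 treatments; 150 subjects gives 25 each.
tv_factors = {"length": ["short", "long"], "showings": [1, 3, 5]}
for treatment, group in completely_randomized_design(range(1, 151), tv_factors, rng):
    print(treatment, len(group))
```

Building the treatments with `itertools.product` guarantees that every combination of levels appears, which is what lets a two-factor experiment reveal how the factors interact.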
Example 5.17 on p. 360 is a completely randomized design with only one factor. Example 5.18 on p. 360 is a completely randomized design with two factors.

Completely Randomized Design for TV Commercials

We have 150 students who are willing to participate in our study. We have two different lengths of commercial and can show the commercial 1, 3, or 5 times. What are the explanatory variables? How would we set up a completely randomized design to test the effect of commercial length and the number of times the commercial is shown?

Choosing Random Groups with the Calculator

We have 40 students and want to select 20 for treatment 1 and 20 for treatment 2.

1. Label the students 1 to 40.
2. Use the random integer function on the calculator to select 20.
3. Check for repeats. If any repeat, replace them by selecting one random integer at a time.
4. The remaining 20 form the other group.

We have 75 students and want to select 25 for treatment 1, 25 for treatment 2, and 25 for treatment 3.

1. Label the students 1 to 75.
2. Use the random integer function on the calculator to select 25.
3. Check for repeats. If any repeat, replace them by selecting one random integer at a time.
4. Select another 25 the same way, checking again for repeats (including students already chosen for treatment 1).
5. The remaining 25 form the third group.

Blocking

Suppose you want to test a strength-training regimen for upper-body strength by seeing how many push-ups subjects can do after going through the training. We expect some variation in the number of push-ups done. We try to control this variability by placing subjects in groups of similar individuals. One way to accomplish this is to separate men and women. We would expect women to do fewer push-ups because they tend to have less upper-body strength. By separating the subjects by gender, we reduce the effect of variation in strength on the number of push-ups. This is the idea behind a block design. A block is a group of experimental units known before the experiment to be similar in some way that is expected to affect the response to the treatments; in a block design, the random assignment of units to treatments is carried out separately within each block. Look at Examples 5.21 and 5.22 on pp. 367-368.

The progress of a certain kind of cancer differs in men and women.
A clinical experiment to compare three therapies for this cancer therefore treats gender as a blocking variable. We randomly assign the men to the three therapy groups, and separately we randomly assign the women to the three therapy groups. The design is outlined in a diagram.

Blocking

Blocking allows us to reach separate conclusions about each block. So if we block by gender, we can draw conclusions about women and separate conclusions about men. Wise experimenters form blocks based on the most important unavoidable sources of variability among the experimental units. The mantra is: control what you can, block what you can’t control, and randomize the rest.

Matched Pairs Design

Matching the subjects in various ways can produce more precise results than simple randomization. A matched pairs design is the simplest use of matching. The subjects are matched in pairs; for example, an experiment to compare two advertisements for the same product might use pairs of subjects with the same age, sex, and income. The idea is that matched subjects are more similar than unmatched subjects, so comparing responses within a number of pairs is more efficient than comparing responses of groups. We still randomly decide which subject in each pair sees which commercial. See Examples 5.23 and 5.24 on pp. 368-369.

Cautions about Experimentation

Randomized comparative experiments depend on our ability to treat all experimental units identically in every way except for the treatments being compared. Good experiments require careful attention to detail. In particular, we must try to avoid researcher and subject bias. In a double-blind experiment, neither the subjects nor the people who interact with them and measure the response know which treatment a subject received. If only one of these groups is kept unaware, the study is single-blind. Knowing the treatment causes bias because a researcher may want a certain result and look for it, or a subject may have a certain idea about what a treatment is supposed to do and make sure it happens.
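The randomized block and matched pairs designs described above can be sketched in Python. This is a minimal sketch under assumptions: the function names, subject labels, and therapy names are hypothetical, and "A"/"B" simply stand for the two advertisements being compared. In the block design, the random assignment is done separately inside each block; in the matched pairs design, a fair coin decides which member of each pair gets which treatment.

```python
import random


def randomized_block_design(blocks, treatments, rng):
    """Carry out the random assignment separately within each block.

    `blocks` maps a block name to its subjects. Within each block the
    subjects are shuffled and dealt round-robin into the treatments, so
    group sizes within a block differ by at most one.
    """
    plan = {}
    k = len(treatments)
    for block, subjects in blocks.items():
        shuffled = list(subjects)
        rng.shuffle(shuffled)
        plan[block] = {t: shuffled[i::k] for i, t in enumerate(treatments)}
    return plan


def matched_pairs_design(pairs, rng):
    """Within each matched pair, a fair coin decides which subject gets treatment A."""
    assignment = []
    for first, second in pairs:
        if rng.random() < 0.5:
            assignment.append({"A": first, "B": second})
        else:
            assignment.append({"A": second, "B": first})
    return assignment


rng = random.Random(5)

# Hypothetical subjects: block by gender, then randomize to three therapies within each block.
blocks = {"men": [f"M{i}" for i in range(1, 13)], "women": [f"W{i}" for i in range(1, 10)]}
plan = randomized_block_design(blocks, ["therapy 1", "therapy 2", "therapy 3"], rng)
for block, groups in plan.items():
    print(block, groups)

# Hypothetical pairs matched on age, sex, and income.
pairs = [("Ann", "Beth"), ("Carl", "Dan"), ("Eve", "Fay")]
print(matched_pairs_design(pairs, rng))
```

Because the men are never mixed with the women during assignment, each therapy group within a block is compared only with similar subjects, which is what lets us draw separate conclusions for each block.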
Cautions about Experimentation

Most experiments have some weaknesses in detail. For example, the environment can have a major impact on the outcomes. Even though experiments are the gold standard for evidence of cause and effect, really convincing evidence requires that a number of studies in different places with different details produce similar results.

Lack of realism is the most serious potential weakness of experiments. The subjects, treatments, or setting of an experiment may not realistically duplicate the conditions we really want to study. Look at Examples 5.25, 5.26, and 5.27 on pp. 370-371.

Cautions about Experimentation

Lack of realism limits our ability to apply the conclusions of an experiment to the settings of greater interest. Statistical analysis of an experiment cannot tell us how far the results will generalize to other settings. Still, because randomized comparative experiments can give convincing evidence for causation, they are one of the most important ideas in statistics.