1 Hour

advertisement

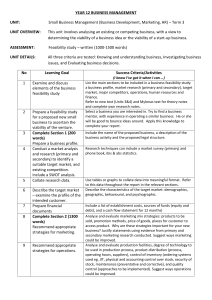

Oracle – Big Data THE INTELLIGENCE LIFE-CYCLE and Schema-Last Approach Dr Neil Brittliff PhD A little about myself… Awarded a PhD at the University of Canberra in March this year for my work in the Big Data space Currently employed as Data Scientist within the Australian Government Have been employed by 5 law enforcement agencies Developed Cryptographic Software to support the Australian Medicare System First used Oracle products back in 1986 Worked in the IT industry since 1982 Resides in Canberra (capital of Australia) Canberra is the only capital city in Australia that is not named after a person Interests Tennis (play) / Cricket (watch) Bushwalking and camping Piano Playing (very bad) Making stuff out of wood Enjoys the art of Programming (prefers the ‘C’ language) Pushing the limits of the Raspberry Pi 2 Talk Structure Motivation Principles and Constraints Intelligence Life-Cycle Collect & Collate Analyse & Produce Report & Disseminate Motivation Research What is a Schema The Problem with ETL Data Cleansing verses Data Triage A New Architecture Oracle Big Data The Schema-Last Approach Indexing Technologies and Exploitation User Reaction Observations and Opportunities 3 National Criminal Intelligence 4 The Law Enforcement community are also in the business of collecting and analysing criminal intelligence and information… data, and where possible, sharing that resulting To do this, they need rich, contemporary, and comprehensive criminal intelligence… The National Criminal Intelligence Fusion Capability, which brings together subject big data matter experts, analysts, technology and to identify previously unknown criminal entities, criminal methodologies, and patterns of crime. Fusion capability identifies the threats and vulnerabilities through the data. data use of It brings together, monitors and analyses and information from Customs, other law enforcement, Government agencies and industry to build an intelligence picture of serious and organised crime in Australia. Australian Institute of Criminology 5 • While many of the challenges posed by the volume of data are addressed in part by new developments in technology, the underlying issue has not been adequately resolved. • Over many years, there have been a variety of different ideas put forward in relation to addressing the increasing volume of data, such as data mining. Darren Quick and Kim-Kwang Raymond Choo Australian Institute of Criminology September 2014 Objectives Support the Australian Intelligence Criminal Model Simple Interface to exploit the data Data ingestion must be simple to do and minimise transformation Support the large variety of data sources Fast ingestion and retrieval times Enable exact and fuzzy searching 6 Support ‘Identity Resolution’ Support metadata Main the data’s integrity Preserve Data-Lineage/Provenance Reproduce the ingested data source exactly! We don’t want this! The Intelligence Life-Cycle 7 Plan, prioritise & direct Evaluate & review Report & disseminate Collect & collate Analyse & produce Intelligence – Data Source Classification DATA SOURCE CLASSIFICATION Analyse & produce Collect & collate Low High High 5% Low 95% 8 Some Definitions: 9 Collect & Collate Schema is from the Greek word meaning ‘form' or ‘figure' and is a formal representation of data model which has integrity constraints controlling permissible data values. Data munging or sometimes referred to as data wrangling means taking data that’s stored in one format and changing it into another format. That a major problem for the data scientist is to flatten the bumps as a result of the heterogeneity of data. Jimmy Lin and Dmitriy Ryaboy. Scaling big data mining infrastructure: The twitter experience. Schema First Schema Application 10 Schema Raw Data Cleanse Storage Analyse Schema Last Schema Raw Data Triage Storage Analyse Data Cleansing … 11 Data cleaning, also called data cleansing or scrubbing, deals with detecting Collect & Collate and removing errors and inconsistencies from data in order to improve the quality of data. “Data cleansing is the process of analysing the quality of data in a data source, manually approving/rejecting the suggestions by the system, and thereby making changes to the data. Data cleansing in Data Quality Services (DQS) includes a computer-assisted process that analyses how data conforms to the knowledge in a knowledge base, and an interactive process that enables the data steward to review and modify computer-assisted process results to ensure that the data cleansing is exactly as they want to be done.” Microsoft: 2012 Data Sources – Always Increasing 12 Collect & Collate Gap Data Cleansing - Doesn’t WORK 13 “Data cleansing can be time-consuming and tedious, but robust estimators are not a substitute for careful examination of the data for clerical errors and other problems. ” Collect & Collate David Ruppert. Inconsistency of resampling algorithms for high-breakdown regression estimators and a new algorithm. Journal of the American Statistical Association, 97: 148–149, 2002. “Formal data cleansing can easily overwhelm any human or perhaps the computing capacity of an organization.” N. Brierley, T. Tippetts, and P. Cawley. Data fusion for automated non-destructive inspection. Proceedings of the RSPA, 2014. “that the data volume may overwhelm the Extract Transform Load process and that data cleansing may introduce unintentional errors.” Vincent McBurney, 17 mistakes that ETL designers make with very large data, 2007. Collect & Collate Data Cleansing – Loss of Format 14 Input Date Cleansed Date Comment 20 July 2014 20-07-2014 Australian Date July-20-2014 20-07-2014 American Format (mmm-dd-yyyy) 2014-20-07 20-07-2014 Arabic Format (right to left) 20-07-14 20-07-2014 Data Ambiguity July 2014 01-07-2014 Imputed Value "If you torture the data long enough, it will confess.“ Clifton R. Musser ETL vs Triage Initiate Initiate Extract Triage Determine Load n Collect & Collate 15 n Suitability? Suitability? Transform Application Assessment ? n n Verify? Load Fuse Report Resolve Complete Complete We did our research … 16 Oracle’s BDA Collect & Collate (Big Data Appliance) 17 Data Storage/Collation Collect & Collate 18 Store the Data Semantically Built on an defined taxonomy/ontology Perfect to capture metadata Searched for the perfect Triple-Store Graph List Subject Predicate Triple Object The Architecture Analyse & Produce Index Data Exploitation IIR Semantic Store Data Exploration Feeds RDF / Modelling Historical Data Apache PIG Index SPARQL R Language Hbase SOLR BDA Data Flow Search Assistant Set Store Disseminate Palantir Collect & Collate New Data 19 Schema Last … Collect & Collate ‘Triaged’ Data Schema First Name Middle Name Last Name Full-Name Street Number Street Name Suburb State Postcode Full-Address Models 20 ACC Search Engines – ‘Smackdown’ Feature SOLR IIR License Apache License Commercial Storage Inverted List Third-party Database Next Release Inverse Document Frequency Normalized Score Support Google Like search Score Model Collect & Collate 21 Result Pagination Homophone Support Can use synonym support Phoneme Search Spread indexes across multiple nodes Schema-less Support Programming Interface Geo-spatial Rest SOAP - API Collect & Collate Collect & Collation Tool 22 Analyse & Produce Bongo – Exploration 23 Report & Disseminate Palantir – Semantic Interface 24 User Reaction Time to Triage < 1 Hour > 1 Hour < 24 Hour > 24 Hours General Size % Megabytes <1 > 1 < 100 > 100 < 1000 > 1000 25 • Developed a Palantir Plugin to search the Fusion Data Holding • Bulk Matching was a great success • In general, user reaction has been positive • Time to Triage was usually under an hour where cleansing could take weeks!!! Collect & Collate Ingestion Rate – The Improvement 26 Observations… The Bulk Matcher Performance and Reliability Interaction with Palantir Configuration over Customisation Search for the ‘Single Source of Truth’ Golden Record Acceptance of the Schema Last Approach Overwhelmed by Search Results 27 Further Reading and Contacts Strategic Thinking in Criminal Intelligence Jerry H Ratcliffe The Federation Press – 2009 ISBN 978 186287 734-4 Intelligence-Led Policing Jerry Ratcliffe Routledge – 2008 ISBN 978-1-843292-339-8 Data Matching Concepts and Techniques and Record Linkage, Entity Resolution, and Duplicate Detection Peter Christen Springer – 2012 ISBN 978-3-642-31163-5 Foundations of Semantic Web Technologies Pascal Hitzler, Markus Krötzsch, Sebastian Rudolph CRC Press – 2010 ISBN 978-1-4200-9050-5 Big Data – A revolution that will transform how we live, work, and think Viktor Mayer-Schönberger and Kenneth Cukier HMH – 2013 ISBN 978-0-544-00269-2 Sharma The Schema Last Approach to Data Fusion Neil Brittliff and Dharmendra Sharma The Schema Last Approach to Data Fusion AusDM 2014 A Triple Store Implementation to support Tabular Data Neil Brittliff and Dharmendra Sharma AusDM 2014 28 University of Canberra http://www.Canberra.edu.au Australian Institute of Criminology http://www.aic.gov.au