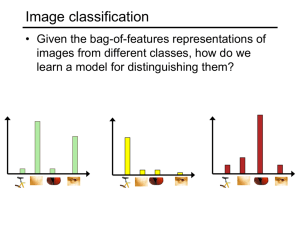

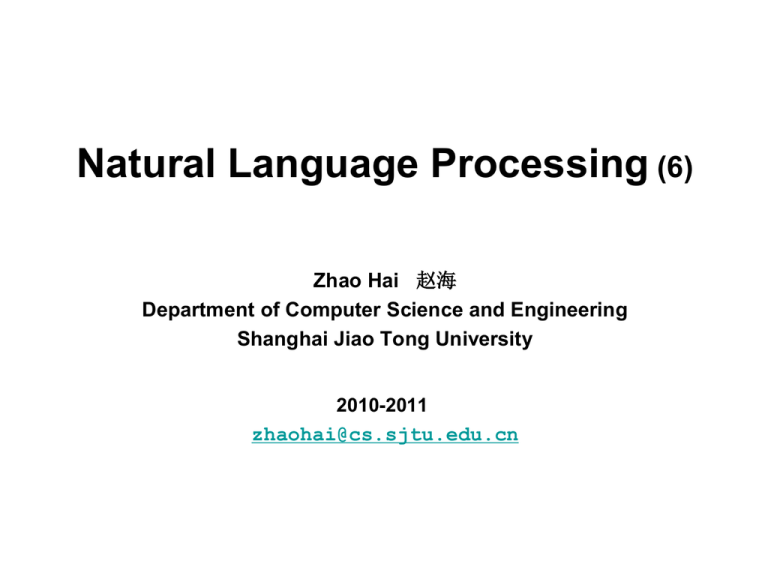

Basic Machine Learning


Natural Language Processing (6)

Zhao Hai 赵海

Department of Computer Science and Engineering

Shanghai Jiao Tong University

2010-2011

zhaohai@cs.sjtu.edu.cn

Outline

Machine Learning Approaches for Natural Language Processing

k-Nearest Neighbor

Support Vector Machine

Maximum Entropy (log-linear) Model

Global Linear Model

What’s Machine Learning

• Learning from known data in order to make predictions on unknown data.

• Typically, the task is classification.

• Types

– Supervised learning: labeled data are necessary.

– Unsupervised learning: only unlabeled data are used, but some heuristic rules are necessary.

– Semi-supervised learning: both labeled and unlabeled data are used.

What’s Machine Learning

• This lecture is concerned with supervised machine learning.

• Natural language processing often calls for structured learning: predicting structures such as tag sequences rather than single labels.

Data

• Real data: the data we ultimately want predictions for; nothing is known about their labels.

• Training data: used for learning.

• Test data: used for evaluation.

• Development data: used for parameter tuning.

Classification

• Basic operation in machine learning

– Binary classification

– Multi-class classification can be decomposed into a group of binary classification decisions.

• Learning produces a model.

• Predictions are made with that model.


k-Nearest Neighbor (k-NN)

This part is largely adapted from the slides by Xia Fei.

Instance-based (IB) learning

• No training: store all training instances (“lazy learning”).

• Examples:

– k-NN

– Locally weighted regression

– Radial basis functions

– Case-based reasoning

– …

• The most well-known IB method: k-NN

k-NN

[Figure: a query instance “?” among training instances of two classes, “+” and “o”.]

k-NN

• For a new instance d,

– find the k training instances that are closest to d;

– perform majority voting or weighted voting over their labels.

• Properties:

– A “lazy” classifier: no training.

– Feature selection and the distance measure are crucial.

The algorithm

1. Determine the parameter k.

2. Calculate the distance between the query instance and all the training instances.

3. Sort the distances and determine the k nearest neighbors.

4. Gather the labels of the k nearest neighbors.

5. Use simple majority voting or weighted voting.
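The five steps can be sketched in plain Python as follows. This is a minimal illustration assuming Euclidean distance, simple majority voting and a small in-memory training set; `knn_predict` and the toy points are hypothetical, not part of any library.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Euclidean distance between two equal-length numeric sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_predict(train, query, k):
    """Classify `query` by majority vote among its k nearest training instances.

    train: list of (feature_vector, label) pairs.
    """
    # Step 2: distance from the query to every training instance.
    dists = [(euclidean(x, query), label) for x, label in train]
    # Step 3: sort by distance and keep the k nearest neighbors.
    nearest = sorted(dists, key=lambda pair: pair[0])[:k]
    # Steps 4-5: gather their labels and take a simple majority vote.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

train = [((1.0, 1.0), "+"), ((1.2, 0.8), "+"), ((0.9, 1.1), "+"),
         ((4.0, 4.0), "o"), ((4.2, 3.9), "o"), ((3.8, 4.1), "o")]
print(knn_predict(train, (1.1, 1.0), k=3))  # +
```

Every query scans the whole training set, which is the test-time cost listed among the weaknesses of k-NN.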

Picking k

• Use N-fold cross validation: pick the k that minimizes the cross-validation error.
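A sketch of picking k by N-fold cross validation around a brute-force k-NN classifier. The round-robin fold assignment (`data[fold::n_folds]`) and the helper names are assumptions made for illustration; any split into N disjoint folds would do.

```python
import math
from collections import Counter

def knn_predict(train, query, k):
    """Majority vote among the k training instances closest (Euclidean) to query."""
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def cv_error(data, k, n_folds):
    """Fraction of instances misclassified by k-NN under n_folds-fold cross validation."""
    errors = 0
    for fold in range(n_folds):
        test = data[fold::n_folds]                 # every n_folds-th instance is held out
        train = [d for i, d in enumerate(data) if i % n_folds != fold]
        errors += sum(knn_predict(train, x, k) != y for x, y in test)
    return errors / len(data)

def pick_k(data, candidates, n_folds=5):
    """Return the candidate k with the lowest cross-validation error (ties: first listed)."""
    return min(candidates, key=lambda k: cv_error(data, k, n_folds))

data = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"), ((1, 1), "a"),
        ((10, 10), "b"), ((10, 11), "b"), ((11, 10), "b"), ((11, 11), "b")]
print(pick_k(data, candidates=[1, 3, 5], n_folds=4))  # 1 (all candidates reach zero error here)
```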

Normalizing attribute values

• Distance could be dominated by attributes with large values:

– Ex: features: age, income

– Original data: x1 = (35, 76K), x2 = (36, 80K), x3 = (70, 79K)

– Assume: age ∈ [0, 100], income ∈ [0, 200K]

– After normalization: x1 = (0.35, 0.38), x2 = (0.36, 0.40), x3 = (0.70, 0.395)

– Before normalization, income dominates and x3 appears closer to x1 than x2 does; after normalization, x2 is the nearest neighbor of x1.
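A sketch of min-max scaling with the assumed attribute ranges, checking both the normalized values on the slide and the change in nearest neighbor.

```python
import math

def min_max_normalize(x, ranges):
    """Scale each attribute into [0, 1] using its known (low, high) range."""
    return tuple((v - lo) / (hi - lo) for v, (lo, hi) in zip(x, ranges))

ranges = [(0, 100), (0, 200_000)]  # age in [0, 100], income in [0, 200K]
x1, x2, x3 = (35, 76_000), (36, 80_000), (70, 79_000)

n1, n2, n3 = (min_max_normalize(x, ranges) for x in (x1, x2, x3))
print(n1, n2, n3)  # (0.35, 0.38) (0.36, 0.4) (0.7, 0.395)

# Raw distances: income dominates, so x3 looks closer to x1 than x2 does.
print(math.dist(x1, x2) > math.dist(x1, x3))   # True
# Normalized distances: age now counts, so x2 is the nearer neighbor.
print(math.dist(n1, n2) < math.dist(n1, n3))   # True
```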

The Choice of Features

• Imagine there are 100 features, and only 2 of them are relevant to the target label.

• k-NN is easily misled in such a high-dimensional space.

Remedy: feature weighting or feature selection.

Feature weighting

• Stretch the j-th axis by weight wj.

• Use cross-validation to choose the weights w1, …, wn automatically.

• Setting wj to zero eliminates this dimension altogether.

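Similarity measure

• Euclidean distance: $d(x, z) = \sqrt{\sum_j (x_j - z_j)^2}$

• Weighted Euclidean distance: $d_w(x, z) = \sqrt{\sum_j w_j (x_j - z_j)^2}$

• Similarity measure: cosine, $\cos(x, z) = \frac{x \cdot z}{\|x\| \, \|z\|}$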
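The three measures in Python. The weighted form assumes $d_w(x, z) = \sqrt{\sum_j w_j (x_j - z_j)^2}$ with $w_j \ge 0$ (some texts square the weights instead). Note that cosine is a similarity, so larger means closer.

```python
import math

def euclidean(x, z):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, z)))

def weighted_euclidean(x, z, w):
    # w[j] >= 0 stretches axis j; w[j] = 0 drops that dimension entirely.
    return math.sqrt(sum(wj * (a - b) ** 2 for a, b, wj in zip(x, z, w)))

def cosine(x, z):
    dot = sum(a * b for a, b in zip(x, z))
    return dot / (math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in z)))

print(euclidean((0, 0), (3, 4)))                    # 5.0
print(weighted_euclidean((0, 0), (3, 4), (1, 0)))   # 3.0 (second axis ignored)
print(cosine((1, 0), (0, 1)))                       # 0.0 (orthogonal vectors)
```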

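Voting to determine the label

• Majority voting:

$c^* = \arg\max_c \sum_i \delta(c, f_i(x))$

• Weighted voting: each neighbor gets its own weight

$c^* = \arg\max_c \sum_i w_i \, \delta(c, f_i(x))$, with $w_i = 1 / \mathrm{dist}(x, x_i)$

Here $f_i(x)$ is the label of the i-th neighbor $x_i$, and $\delta(c, c') = 1$ if $c = c'$, 0 otherwise.

• With distance weighting, we can even use all the training examples instead of only the k nearest.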
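A sketch of distance-weighted voting with $w_i = 1/\mathrm{dist}(x, x_i)$. Returning the label of an exact match when the distance is zero is an assumption, since the rule above leaves $1/0$ undefined. In the example, plain majority voting over the 3 neighbors would pick "b", but the single much closer "a" neighbor wins the weighted vote.

```python
import math
from collections import defaultdict

def weighted_vote(train, query, k=None):
    """Neighbor i adds w_i = 1 / dist(query, x_i) to the score of its label.

    train: list of (feature_vector, label); k=None lets every training example vote.
    """
    neighbors = sorted(train, key=lambda pair: math.dist(pair[0], query))
    if k is not None:
        neighbors = neighbors[:k]
    scores = defaultdict(float)
    for x, label in neighbors:
        d = math.dist(x, query)
        if d == 0:                 # exact match: 1/0 is undefined, so use its label
            return label
        scores[label] += 1.0 / d
    return max(scores, key=scores.get)

train = [((0, 0), "a"), ((5, 0), "b"), ((6, 0), "b")]
print(weighted_vote(train, (1, 0), k=3))   # a  (1/1 = 1.0 beats 1/4 + 1/5 = 0.45)
```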

Summary of kNN

• Strengths:

– Simplicity (conceptual)

– Efficiency at training: no training

– Handling multi-class problems

– Stability and robustness: averaging over k neighbors

– Prediction accuracy: good when the training data are large

• Weaknesses:

– Efficiency at test time: need to calculate all distances

– Theoretical validity

– It is not clear which types of distance measure and features to use.


Support Vector Machines

This part is mainly adapted from the slides by Constantin F. Aliferis & Ioannis Tsamardinos.

Support Vector Machines

• The decision surface is a hyperplane (a line in 2D) in feature space (similar to the Perceptron).

• Arguably, the most important recent discovery in machine learning.

• In a nutshell:

– Map the data to a predetermined very high-dimensional space via a kernel function.

– Find the hyperplane that maximizes the margin between the two classes.

– If the data are not separable, find the hyperplane that maximizes the margin and minimizes (a weighted average of) the misclassifications.

Support Vector Machines

• Three main ideas:

1. Define what an optimal hyperplane is (in a way that can be identified in a computationally efficient way): maximize the margin.

2. Extend the above definition to non-linearly separable problems: add a penalty term for misclassifications.

3. Map the data to a high-dimensional space where it is easier to classify with linear decision surfaces: reformulate the problem so that the data are mapped implicitly to this space.

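Which Separating Hyperplane to Use?

[Figure: two classes of instances plotted against Var1 and Var2, with several candidate separating hyperplanes.]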

Maximizing the Margin

[Figure: a separating hyperplane in the (Var1, Var2) plane with its margin width marked.]

IDEA 1: Select the separating hyperplane that maximizes the margin!

Support Vectors

[Figure: the support vectors lie on the boundaries of the margin; axes Var1 and Var2, margin width marked.]

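Setting Up the Optimization Problem

[Figure: the parallel hyperplanes $w \cdot x + b = k$, $w \cdot x + b = 0$ and $w \cdot x + b = -k$ in the (Var1, Var2) plane.]

The width of the margin is:

$\frac{2k}{\|w\|}$

So, the problem is:

$\max \frac{2k}{\|w\|}$

s.t. $w \cdot x + b \ge k$, for all x of class 1

$w \cdot x + b \le -k$, for all x of class 2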

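Setting Up the Optimization Problem

[Figure: the hyperplanes $w \cdot x + b = 1$, $w \cdot x + b = 0$ and $w \cdot x + b = -1$ in the (Var1, Var2) plane.]

There is a scale and unit for the data such that k = 1. Then the problem becomes:

$\max \frac{2}{\|w\|}$

s.t. $w \cdot x + b \ge 1$, for all x of class 1

$w \cdot x + b \le -1$, for all x of class 2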

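Setting Up the Optimization Problem

• If class 1 corresponds to +1 and class 2 corresponds to −1, we can rewrite

$w \cdot x_i + b \ge 1$, for $x_i$ with $y_i = 1$

$w \cdot x_i + b \le -1$, for $x_i$ with $y_i = -1$

• as

$y_i (w \cdot x_i + b) \ge 1, \ \forall x_i$

• So the problem becomes:

$\max \frac{2}{\|w\|}$ s.t. $y_i (w \cdot x_i + b) \ge 1, \ \forall x_i$

or

$\min \frac{1}{2} \|w\|^2$ s.t. $y_i (w \cdot x_i + b) \ge 1, \ \forall x_i$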

Linear, Hard-Margin SVM Formulation

• Find w, b that solve

$\min \frac{1}{2} \|w\|^2$ s.t. $y_i (w \cdot x_i + b) \ge 1, \ \forall x_i$

• The problem is convex, so there is a unique global minimum value (when feasible).

• There is also a unique minimizer, i.e., the weight vector and b value that provide the minimum.

• Not solvable if the data are not linearly separable.

• Quadratic programming

– Very efficient computationally with modern constrained optimization engines (handles thousands of constraints and training instances).


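Non-Linearly Separable Data

• Introduce slack variables $\xi_i$.

• Allow some instances to fall within the margin, but penalize them.

[Figure: the hyperplanes $w \cdot x + b = 1$, $w \cdot x + b = 0$ and $w \cdot x + b = -1$ in the (Var1, Var2) plane, with slack $\xi_i$ marked for instances inside the margin.]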

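Formulating the Optimization Problem

Constraint becomes:

$y_i (w \cdot x_i + b) \ge 1 - \xi_i, \ \forall x_i$

$\xi_i \ge 0$

Objective function penalizes misclassified instances and those within the margin:

$\min \frac{1}{2} \|w\|^2 + C \sum_i \xi_i$

C trades off margin width and misclassifications.

[Figure: the hyperplanes $w \cdot x + b = 1$, $w \cdot x + b = 0$ and $w \cdot x + b = -1$ in the (Var1, Var2) plane, with slack $\xi_i$ marked.]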

Linear, Soft-Margin SVMs

$\min \frac{1}{2} \|w\|^2 + C \sum_i \xi_i$

s.t. $y_i (w \cdot x_i + b) \ge 1 - \xi_i, \ \forall x_i$

$\xi_i \ge 0$

• The algorithm tries to keep $\xi_i$ at zero while maximizing the margin.

• Notice: the algorithm does not minimize the number of misclassifications (an NP-complete problem) but the sum of distances from the margin hyperplanes.

• Other formulations use $\xi_i^2$ instead.

• As $C \to \infty$, we get closer to the hard-margin solution.
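The slides solve this problem with a QP solver. As an alternative shown only for illustration: at the optimum $\xi_i = \max(0, 1 - y_i(w \cdot x_i + b))$, so the same objective can be minimized directly by (sub)gradient descent on this hinge loss. The learning rate, epoch count and function names are arbitrary assumptions; this is not the QP method described above.

```python
import numpy as np

def train_soft_margin_svm(X, y, C=1.0, lr=0.01, epochs=500):
    """Minimize 0.5*||w||^2 + C * sum_i max(0, 1 - y_i (w.x_i + b)) by full-batch subgradient descent.

    X: (n, d) array of instances; y: (n,) array of +1/-1 labels.
    """
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        active = y * (X @ w + b) < 1     # xi_i > 0: inside the margin or misclassified
        grad_w = w - C * (y[active][:, None] * X[active]).sum(axis=0)
        grad_b = -C * y[active].sum()
        w = w - lr * grad_w
        b = b - lr * grad_b
    return w, b

def predict(X, w, b):
    return np.sign(X @ w + b)

X = np.array([[2.0, 2.0], [3.0, 3.0], [-2.0, -2.0], [-3.0, -3.0]])
y = np.array([1, 1, -1, -1])
w, b = train_soft_margin_svm(X, y)
print((predict(X, w, b) == y).all())   # True
```

Raising C penalizes slack more heavily and moves the solution toward the hard-margin one, matching the last bullet above.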

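Robustness of Soft vs Hard Margin SVMs

[Figure: the same data in the (Var1, Var2) plane separated by a Soft Margin SVM (with slack variables $\xi_i$) and by a Hard Margin SVM; both show the hyperplane $w \cdot x + b = 0$.]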

Soft vs Hard Margin SVM

• Soft-margin always has a solution.

• Soft-margin is more robust to outliers.

– Smoother surfaces (in the non-linear case)

• Hard-margin does not require guessing the cost parameter (it requires no parameters at all).


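Disadvantages of Linear Decision Surfaces

[Figure: a linear decision surface for data plotted against Var1 and Var2.]

Advantages of Non-Linear Surfaces

[Figure: a non-linear decision surface for the same data plotted against Var1 and Var2.]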

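Linear Classifiers in High-Dimensional Spaces

[Figure: data mapped from the (Var1, Var2) space into a space with axes Constructed Feature 1 and Constructed Feature 2, where a linear classifier separates them.]

Find a function $\Phi(x)$ to map to a different space.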

Mapping Data to a High-Dimensional Space

• Find a function $\Phi(x)$ to map to a different space; the SVM formulation then becomes:

$\min \frac{1}{2} \|w\|^2 + C \sum_i \xi_i$

s.t. $y_i (w \cdot \Phi(x_i) + b) \ge 1 - \xi_i, \ \forall x_i$

$\xi_i \ge 0$

• Data appear as $\Phi(x)$; the weights w are now weights in the new space.

• Explicit mapping is expensive if $\Phi(x)$ is very high-dimensional.

• Solving the problem without explicitly mapping the data is desirable.

The Dual of the SVM Formulation

• Original SVM formulation

– n inequality constraints

– n positivity constraints

– n variables

$\min_{w, b} \frac{1}{2} \|w\|^2 + C \sum_i \xi_i$

s.t. $y_i (w \cdot \Phi(x_i) + b) \ge 1 - \xi_i, \ \forall x_i$

$\xi_i \ge 0$

• The (Wolfe) dual of this problem

– one equality constraint

– n positivity constraints

– n variables (Lagrange multipliers)

– Objective function more complicated

$\min_{\alpha} \frac{1}{2} \sum_{i,j} \alpha_i \alpha_j y_i y_j (\Phi(x_i) \cdot \Phi(x_j)) - \sum_i \alpha_i$

s.t. $C \ge \alpha_i \ge 0, \ \forall x_i$

$\sum_i \alpha_i y_i = 0$

• NOTICE: the data only appear as $\Phi(x_i) \cdot \Phi(x_j)$.

The Kernel Trick

• $\Phi(x_i) \cdot \Phi(x_j)$ means: map the data into the new space, then take the inner product of the new vectors.

• We can find a function such that $K(x_i, x_j) = \Phi(x_i) \cdot \Phi(x_j)$, i.e., evaluating K on the original data gives the inner product of the images of the data.

• Then we do not need to explicitly map the data into the high-dimensional space to solve the optimization problem (for training).

• How do we classify without explicitly mapping the new instances? It turns out that

$\mathrm{sgn}(w \cdot \Phi(x) + b) = \mathrm{sgn}\left( \sum_i \alpha_i y_i K(x_i, x) + b \right)$

where b solves $\alpha_j \left( y_j \left( \sum_i \alpha_i y_i K(x_i, x_j) + b \right) - 1 \right) = 0$ for any j with $\alpha_j \neq 0$ (in the soft-margin case, any j with $0 < \alpha_j < C$).
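A sketch of classifying through the kernel only, using the decision rule and the bias equation above. The support vectors and $\alpha$ values are a hand-checked toy solution (two points at $(\pm 1, 0)$ with $\alpha_i = 0.5$, equivalent to $w = (1, 0)$, $b = 0$), not the output of a real solver; the function names are hypothetical.

```python
import numpy as np

def linear_kernel(x, z):
    return float(np.dot(x, z))

def decision(x, sv, sv_y, alpha, b, kernel):
    """sgn(sum_i alpha_i y_i K(x_i, x) + b): classify x without mapping it explicitly."""
    s = sum(a * yi * kernel(xi, x) for a, yi, xi in zip(alpha, sv_y, sv))
    return np.sign(s + b)

def bias_from_support_vector(j, sv, sv_y, alpha, kernel):
    """Solve y_j (sum_i alpha_i y_i K(x_i, x_j) + b) = 1 for b, using y_j^2 = 1."""
    s = sum(a * yi * kernel(xi, sv[j]) for a, yi, xi in zip(alpha, sv_y, sv))
    return sv_y[j] - s

sv = [np.array([1.0, 0.0]), np.array([-1.0, 0.0])]
sv_y = [1, -1]
alpha = [0.5, 0.5]
b = bias_from_support_vector(0, sv, sv_y, alpha, linear_kernel)
print(b)                                                                  # 0.0
print(decision(np.array([2.0, 3.0]), sv, sv_y, alpha, b, linear_kernel))  # 1.0
```

Swapping `linear_kernel` for any other kernel function changes nothing else in the code, which is the point of the kernel trick.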

Examples of Kernels

• Assume we measure two quantities, e.g., the expression levels of genes TrkC and Sonic Hedgehog (SH), and we use the mapping:

$\Phi: \langle x_{TrkC}, x_{SH} \rangle \mapsto \{ x_{TrkC}^2, x_{SH}^2, \sqrt{2} x_{TrkC} x_{SH}, \sqrt{2} x_{TrkC}, \sqrt{2} x_{SH}, 1 \}$

• Consider the function:

$K(x, z) = (x \cdot z + 1)^2$

• We can verify that:

$\Phi(x) \cdot \Phi(z) = x_{TrkC}^2 z_{TrkC}^2 + x_{SH}^2 z_{SH}^2 + 2 x_{TrkC} x_{SH} z_{TrkC} z_{SH} + 2 x_{TrkC} z_{TrkC} + 2 x_{SH} z_{SH} + 1$

$= (x_{TrkC} z_{TrkC} + x_{SH} z_{SH} + 1)^2 = (x \cdot z + 1)^2 = K(x, z)$
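A numerical check of the identity above: compute $\Phi(x) \cdot \Phi(z)$ with the explicit 6-dimensional map and compare it with $(x \cdot z + 1)^2$ computed in the original 2-D space. The sample points are arbitrary.

```python
import math

def phi(x):
    """Explicit degree-2 feature map for a 2-D input (x_TrkC, x_SH)."""
    a, s = x
    r2 = math.sqrt(2)
    return (a * a, s * s, r2 * a * s, r2 * a, r2 * s, 1.0)

def poly2_kernel(x, z):
    """K(x, z) = (x . z + 1)^2 computed directly in the original 2-D space."""
    return (x[0] * z[0] + x[1] * z[1] + 1) ** 2

x, z = (1.0, 2.0), (3.0, 0.5)
explicit = sum(p * q for p, q in zip(phi(x), phi(z)))
print(explicit, poly2_kernel(x, z))              # both are (3 + 1 + 1)^2 = 25, up to rounding
print(math.isclose(explicit, poly2_kernel(x, z)))  # True
```

The kernel needs one 2-term inner product and a square; the explicit route builds two 6-dimensional vectors first, and the gap grows quickly with the input dimension.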

Polynomial and Gaussian Kernels

$K(x, z) = (x \cdot z + 1)^p$

• is called the polynomial kernel of degree p.

• For p = 2, if we measure 7,000 genes, using the kernel once means calculating a summation product with 7,000 terms and then taking the square of this number.

• Mapping explicitly to the high-dimensional space means calculating approximately 50,000,000 new features for both training instances, then taking the inner product of those (another 50,000,000 terms to sum).

• In general, the kernel trick provides huge computational savings over explicit mapping!

• Another commonly used kernel is the Gaussian (its feature space is infinite-dimensional, but the SVM solution lies in the span of the mapped training cases, so effectively the number of dimensions equals the number of training cases):

$K(x, z) = \exp\left( -\|x - z\|^2 / (2 \sigma^2) \right)$

The Mercer Condition

• Is there a mapping $\Phi(x)$ for any symmetric function $K(x, z)$? No.

• The SVM dual formulation requires calculating $K(x_i, x_j)$ for each pair of training instances. The matrix $G_{ij} = K(x_i, x_j)$ is called the Gram matrix.

• There is a feature space $\Phi(x)$ when the kernel is such that G is always positive semi-definite (Mercer condition).
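A sketch of the Mercer check on a finite sample: build the Gram matrix and test whether all its eigenvalues are non-negative, up to a small tolerance (an assumption, to absorb rounding error). The plain Euclidean distance is included as a symmetric function that fails: its Gram matrix has a zero diagonal, so its eigenvalues sum to zero and at least one is negative. Passing on one sample does not prove that a function is a valid kernel.

```python
import numpy as np

def gram_matrix(X, kernel):
    """G_ij = K(x_i, x_j) for every pair of rows of X."""
    n = len(X)
    return np.array([[kernel(X[i], X[j]) for j in range(n)] for i in range(n)])

def is_psd(G, tol=1e-9):
    """A symmetric G is positive semi-definite iff all its eigenvalues are >= 0."""
    return bool(np.all(np.linalg.eigvalsh(G) >= -tol))

def polynomial(x, z, p=2):
    return (np.dot(x, z) + 1.0) ** p

def gaussian(x, z, sigma=1.0):
    return np.exp(-np.sum((x - z) ** 2) / (2 * sigma ** 2))

def euclidean_distance(x, z):
    # Symmetric, but NOT a valid kernel.
    return np.linalg.norm(x - z)

X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [1.5, 1.5]])
for name, k in [("polynomial", polynomial), ("gaussian", gaussian), ("distance", euclidean_distance)]:
    print(name, is_psd(gram_matrix(X, k)))
# polynomial True
# gaussian True
# distance False
```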


Other Types of Kernel Methods

• SVMs that perform regression

• SVMs that perform clustering

• ν-Support Vector Machines: maximize the margin while bounding the number of margin errors

• Leave-One-Out Machines: minimize the bound of the leave-one-out error

• SVM formulations that take into account differences in the cost of misclassification for different classes

• Kernels suitable for sequences of strings, or other specialized kernels

Variable Selection with SVMs

• Recursive Feature Elimination

– Train a linear SVM.

– Remove the variables with the lowest weights (those variables affect classification the least), e.g., remove the lowest 50% of variables.

– Retrain the SVM with the remaining variables and repeat until classification performance is reduced.

• Very successful

• Other formulations exist where minimizing the number of variables is folded into the optimization problem.

• Similar algorithms exist for non-linear SVMs.

• Some of the best and most efficient variable selection methods
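A sketch of Recursive Feature Elimination. To stay self-contained it trains the linear SVM with hinge-loss subgradient descent instead of a QP solver, and it stops at a fixed number of features instead of monitoring classification performance; both are simplifying assumptions. In the toy data, features 1 to 3 take identical values in both classes and so carry no information.

```python
import numpy as np

def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=300):
    """Soft-margin linear SVM via hinge-loss subgradient descent (a stand-in for a QP solver)."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        active = y * (X @ w + b) < 1
        w = w - lr * (w - C * (y[active][:, None] * X[active]).sum(axis=0))
        b = b - lr * (-C * y[active].sum())
    return w, b

def rfe(X, y, n_keep=1, drop_fraction=0.5):
    """Repeatedly retrain and drop the features with the smallest |w_j|."""
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_keep:
        w, _ = train_linear_svm(X[:, remaining], y)
        n_drop = max(1, int(len(remaining) * drop_fraction))
        n_drop = min(n_drop, len(remaining) - n_keep)
        order = np.argsort(np.abs(w))                 # smallest absolute weight first
        dropped = {remaining[j] for j in order[:n_drop]}
        remaining = [f for f in remaining if f not in dropped]
    return remaining

X = np.array([[2.0, 0.3, -0.2, 0.1], [3.0, -0.1, 0.4, 0.2],
              [-2.0, 0.3, -0.2, 0.1], [-3.0, -0.1, 0.4, 0.2]])
y = np.array([1, 1, -1, -1])
print(rfe(X, y))   # [0]
```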

Comparison with Neural Networks

Neural Networks

• Hidden layers map to lower-dimensional spaces

• Search space has multiple local minima

• Training is expensive

• Classification is extremely efficient

• Requires choosing the number of hidden units and layers

• Very good accuracy in typical domains

SVMs

• Kernel maps to a very high-dimensional space

• Search space has a unique minimum

• Training is extremely efficient

• Classification is extremely efficient

• Kernel and cost are the two parameters to select

• Very good accuracy in typical domains

• Extremely robust

Why do SVMs Generalize?

• Even though they map to a very high-dimensional space:

– They have a very strong bias in that space.

– The solution has to be a linear combination of the training instances.

• A large body of theory on Structural Risk Minimization provides bounds on the error of an SVM.

– Typically, the error bounds are too loose to be of practical use.

MultiClass SVMs

• One-versus-all

– Train n binary classifiers, one for each class against all other classes.

– The predicted class is the class of the most confident classifier.

• One-versus-one

– Train n(n−1)/2 classifiers, each discriminating between a pair of classes.

– Several strategies exist for selecting the final classification based on the outputs of the binary SVMs.

• Truly multi-class SVMs

– Generalize the SVM formulation to multiple categories.
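A sketch of the two decomposition schemes. The binary classifiers themselves are assumed to exist already: one-versus-all consumes their real-valued scores, and one-versus-one consumes the winner of each pairwise contest, combined here by max-wins voting (only one of the several possible strategies). Class names are illustrative.

```python
from collections import Counter
from itertools import combinations

def one_vs_all_predict(scores):
    """scores[c]: real-valued output of the binary classifier 'c vs. all others'."""
    return max(scores, key=scores.get)          # the most confident classifier wins

def one_vs_one_pairs(classes):
    """The n(n-1)/2 class pairs, each of which needs its own binary classifier."""
    return list(combinations(classes, 2))

def one_vs_one_predict(pair_winners):
    """Max-wins voting: the class that wins the most pairwise contests."""
    return Counter(pair_winners).most_common(1)[0][0]

classes = ["N", "V", "P", "ADJ"]
print(len(one_vs_one_pairs(classes)))                                      # 6
print(one_vs_all_predict({"N": 0.8, "V": -0.2, "P": 1.3, "ADJ": -1.0}))    # P
print(one_vs_one_predict(["N", "N", "P", "N", "V", "P"]))                  # N
```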

Summary for SVMs

• SVMs express learning as a mathematical program, taking advantage of the rich theory in optimization.

• SVMs use the kernel trick to map indirectly to extremely high-dimensional spaces.

• SVMs are extremely successful, robust, efficient, and versatile, and there are good theoretical indications as to why they generalize well.

SVM Tools: SVM-light

• SVM-light: a command-line C program that implements the SVM learning algorithm

• Classification, regression, ranking

• Download at http://svmlight.joachims.org/

• Documentation on the same page

• Two programs

– svm_learn for training

– svm_classify for classification

SVM-light Examples

• Input format (one instance per line: the label, then feature:value pairs)

1 1:0.5 3:1 5:0.4

-1 2:0.9 3:0.1 4:2

• To train a classifier from train.data

– svm_learn train.data train.model

• To classify new documents in test.data

– svm_classify test.data train.model test.result

• Output format

– Positive score → positive class

– Negative score → negative class

– Absolute value of the score indicates confidence

• Command-line options

– -c: a trade-off parameter (use cross validation to tune)
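A small helper for producing and reading the input format above, assuming integer class labels and no `qid:` ranking fields. SVM-light expects feature indices in increasing order, which is why the writer sorts them. These functions are illustrative and not part of SVM-light.

```python
def to_svmlight_line(label, features):
    """Format one instance as 'label index:value ...' with indices in increasing order.

    features: dict mapping a positive integer feature index to a non-zero value.
    """
    pairs = " ".join(f"{idx}:{val:g}" for idx, val in sorted(features.items()))
    return f"{label} {pairs}"

def parse_svmlight_line(line):
    """Inverse of to_svmlight_line; drops an optional trailing '# comment'."""
    fields = line.split("#", 1)[0].split()
    label = int(fields[0])
    features = {int(i): float(v) for i, v in (f.split(":") for f in fields[1:])}
    return label, features

print(to_svmlight_line(1, {5: 0.4, 1: 0.5, 3: 1}))   # 1 1:0.5 3:1 5:0.4
print(parse_svmlight_line("-1 2:0.9 3:0.1 4:2"))     # (-1, {2: 0.9, 3: 0.1, 4: 2.0})
```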

More on SVM-light

• Kernel

– Use the “-t” option

– Polynomial kernel

– User-defined kernel

• Semi-supervised learning (transductive SVM)

– Use “0” as the label for unlabeled examples

– Very slow

Outline

Machine Learning Approaches for Natural Language Processing

k-Nearest Neighbor

Support Vector Machine

Maximum Entropy (log-linear) Model

Global Linear Model

(This part is revised from that by Michael Collins.)

Overview

• Log-linear models

– Feature function: sequence labeling

– Conditional probability

• The maximum-entropy property

• Smoothing, feature selection etc. in log-linear models

Mapping Strings to Tagged Sequences

• a b e e a f h j ⇒ a/C b/D e/C e/C a/D f/C h/D j/C

Part-of-Speech Tagging

• INPUT:

– Profits soared at Boeing Co., easily topping forecasts on Wall Street, as their CEO Alan Mulally announced first quarter results.

• OUTPUT:

– Profits/N soared/V at/P Boeing/N Co./N ,/, easily/ADV topping/V forecasts/N on/P Wall/N Street/N ,/, as/P their/POSS CEO/N Alan/N Mulally/N announced/V first/ADJ quarter/N results/N ./.

• N = Noun, V = Verb, P = Preposition, ADV = Adverb, ADJ = Adjective, …

Information Extraction

• Named Entity Recognition

• INPUT:

– Profits soared at Boeing Co., easily topping forecasts on Wall Street, as their CEO Alan Mulally announced first quarter results.

• OUTPUT:

– Profits soared at [Company Boeing Co.], easily topping forecasts on [Location Wall Street], as their CEO [Person Alan Mulally] announced first quarter results.

Named Entity Extraction as Tagging

• INPUT:

– Profits soared at Boeing Co., easily topping forecasts on Wall Street, as their CEO Alan Mulally announced first quarter results.

• OUTPUT:

– Profits/NA soared/NA at/NA Boeing/SC Co./CC ,/NA easily/NA topping/NA forecasts/NA on/NA Wall/SL Street/CL ,/NA as/NA their/NA CEO/NA Alan/SP Mulally/CP announced/NA first/NA quarter/NA results/NA ./NA

• NA = No entity, SC = Start Company, CC = Continue Company, SL = Start Location, CL = Continue Location, …

Extracting Glossary Entries from the Web

• Input: [Figure: web page; image not recoverable.]

• Output: St. Elmo’s Fire: A luminous, and often audible, electric discharge that is sporadic in nature. It occurs from objects, especially pointed ones, when the electrical field strength near their surfaces attains a value near 100 volts per centimeter...

The General Problem

• We have some input domain $\mathcal{X}$.

• We have a finite label set $\mathcal{Y}$.

• The aim is to provide a conditional probability $P(y \mid x)$ for any $x \in \mathcal{X}$ and $y \in \mathcal{Y}$.

An Example

• Hispaniola/NNP quickly/RB became/VB an/DT important/JJ base/?? from which Spain expanded its empire into the rest of the Western Hemisphere .

• There are many possible tags in the position ??: $\mathcal{Y}$ = {NN, NNS, Vt, Vi, IN, DT, …}

• The input domain $\mathcal{X}$ is the set of all possible histories (or contexts).

• We need to learn a function from (history, tag) pairs to a probability $P(tag \mid history)$.

Representation: Histories

• A history is a 4-tuple $\langle t_{-2}, t_{-1}, w_{[1:n]}, i \rangle$

• $t_{-2}, t_{-1}$ are the previous two tags.

• $w_{[1:n]}$ are the n words in the input sentence.

• i is the index of the word being tagged.

• $\mathcal{X}$ is the set of all possible histories.

Hispaniola/NNP quickly/RB became/VB an/DT important/JJ base/?? from which Spain expanded its empire into the rest of the Western Hemisphere .

– $t_{-2}, t_{-1}$ = DT, JJ

– $w_{[1:n]}$ = ⟨Hispaniola, quickly, became, …, Hemisphere, .⟩

– i = 6

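Feature Vector Representations

• We have some input domain $\mathcal{X}$, and a finite label set $\mathcal{Y}$. The aim is to provide a conditional probability $P(y \mid x)$ for any $x \in \mathcal{X}$ and $y \in \mathcal{Y}$.

• A feature is a function $f: \mathcal{X} \times \mathcal{Y} \to \mathbb{R}$ (often binary features or indicator functions $f: \mathcal{X} \times \mathcal{Y} \to \{0, 1\}$).

• Say we have m features $\phi_k$ for $k = 1 \ldots m$. Then $\phi(x, y) \in \mathbb{R}^m$ is a feature vector for any $x \in \mathcal{X}$ and $y \in \mathcal{Y}$.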

An Example (continued)

• $\mathcal{X}$ is the set of all possible histories of the form $\langle t_{-2}, t_{-1}, w_{[1:n]}, i \rangle$

• $\mathcal{Y}$ = {NN, NNS, Vt, Vi, IN, DT, …}

• We have m features $\phi_k: \mathcal{X} \times \mathcal{Y} \to \mathbb{R}$ for $k = 1 \ldots m$

• For example:

$\phi_1(h, t) = 1$ if current word $w_i$ is base and t = Vt; 0 otherwise

$\phi_2(h, t) = 1$ if current word $w_i$ ends in ing and t = VBG; 0 otherwise

$\phi_1(\langle DT, JJ, \langle Hispaniola, \ldots \rangle, 6 \rangle, Vt) = 1$

$\phi_2(\langle DT, JJ, \langle Hispaniola, \ldots \rangle, 6 \rangle, Vt) = 0$
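The two example features written as Python functions over a history. The `History` tuple mirrors $\langle t_{-2}, t_{-1}, w_{[1:n]}, i \rangle$ with a 1-based index i, as on the slide; the names are hypothetical.

```python
from typing import NamedTuple, Tuple

class History(NamedTuple):
    t_minus2: str            # tag two positions back
    t_minus1: str            # previous tag
    words: Tuple[str, ...]   # w[1:n]
    i: int                   # 1-based index of the word being tagged

def phi1(h, t):
    """1 if the current word is 'base' and the candidate tag is Vt."""
    return int(h.words[h.i - 1] == "base" and t == "Vt")

def phi2(h, t):
    """1 if the current word ends in 'ing' and the candidate tag is VBG."""
    return int(h.words[h.i - 1].endswith("ing") and t == "VBG")

sentence = tuple("Hispaniola quickly became an important base from which Spain "
                 "expanded its empire into the rest of the Western Hemisphere .".split())
h = History("DT", "JJ", sentence, 6)
print(phi1(h, "Vt"), phi2(h, "Vt"))   # 1 0
```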

The Full Set of Features in [Ratnaparkhi 96]

• Word/tag features for all word/tag pairs, e.g.,

$\phi_{100}(h, t) = 1$ if current word $w_i$ is base and t = Vt; 0 otherwise

• Spelling features for all prefixes/suffixes of length ≤ 4, e.g.,

$\phi_{101}(h, t) = 1$ if current word $w_i$ ends in ing and t = VBG; 0 otherwise

$\phi_{102}(h, t) = 1$ if current word $w_i$ starts with pre and t = NN; 0 otherwise

The Full Set of Features in [Ratnaparkhi 96]

• Contextual features, e.g.,

$\phi_{103}(h, t) = 1$ if $\langle t_{-2}, t_{-1}, t \rangle = \langle DT, JJ, Vt \rangle$; 0 otherwise

$\phi_{104}(h, t) = 1$ if $\langle t_{-1}, t \rangle = \langle JJ, Vt \rangle$; 0 otherwise

$\phi_{105}(h, t) = 1$ if t = Vt; 0 otherwise

$\phi_{106}(h, t) = 1$ if previous word $w_{i-1}$ = the and t = Vt; 0 otherwise

$\phi_{107}(h, t) = 1$ if next word $w_{i+1}$ = the and t = Vt; 0 otherwise
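In practice such features are generated from templates rather than written one by one. The sketch below emits a string name for each feature that fires on a (history, tag) pair, loosely following the word/tag, prefix/suffix and contextual features above. The template strings are hypothetical, and the sketch does not reproduce Ratnaparkhi's exact feature set (for example, any restriction of spelling features to particular words).

```python
def active_features(t_minus2, t_minus1, words, i, t):
    """Names of the (hypothetical) binary features that fire for history
    <t_minus2, t_minus1, words, i> and candidate tag t; i is 1-based as in the slides."""
    w = words[i - 1]
    feats = [f"word={w},tag={t}"]                          # word/tag, like phi_100
    for n in range(1, min(4, len(w)) + 1):                 # prefixes/suffixes of length <= 4
        feats.append(f"prefix={w[:n]},tag={t}")            # like phi_102
        feats.append(f"suffix={w[-n:]},tag={t}")           # like phi_101
    feats.append(f"tags={t_minus2},{t_minus1},{t}")        # like phi_103
    feats.append(f"tags={t_minus1},{t}")                   # like phi_104
    feats.append(f"tag={t}")                               # like phi_105
    if i > 1:
        feats.append(f"prev_word={words[i - 2]},tag={t}")  # like phi_106
    if i < len(words):
        feats.append(f"next_word={words[i]},tag={t}")      # like phi_107
    return feats

words = ("an", "important", "base", "from")
for f in active_features("DT", "JJ", words, 3, "Vt"):
    print(f)
```

Each distinct string would be mapped to one index k of the feature vector $\phi(x, y)$.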

The Final Result

• We can come up with practically any questions (features) regarding history/tag pairs.

• For a given history $x \in \mathcal{X}$, each label in $\mathcal{Y}$ is mapped to a different feature vector:

$\phi(\langle DT, JJ, \langle Hispaniola, \ldots \rangle, 6 \rangle, Vt) = 1001011001001100110$

$\phi(\langle DT, JJ, \langle Hispaniola, \ldots \rangle, 6 \rangle, JJ) = 0110010101011110010$

$\phi(\langle DT, JJ, \langle Hispaniola, \ldots \rangle, 6 \rangle, NN) = 0001111101001100100$

$\phi(\langle DT, JJ, \langle Hispaniola, \ldots \rangle, 6 \rangle, IN) = 0001011011000000010$


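Log-Linear Models

• We have some input domain $\mathcal{X}$, and a finite label set $\mathcal{Y}$. The aim is to provide a conditional probability $P(y \mid x)$ for any $x \in \mathcal{X}$ and $y \in \mathcal{Y}$.

• A feature is a function $f: \mathcal{X} \times \mathcal{Y} \to \mathbb{R}$ (often binary features or indicator functions $f: \mathcal{X} \times \mathcal{Y} \to \{0, 1\}$).

• Say we have m features $\phi_k$ for $k = 1 \ldots m$. Then $\phi(x, y) \in \mathbb{R}^m$ is a feature vector for any $x \in \mathcal{X}$ and $y \in \mathcal{Y}$.

• We also have a parameter vector $W \in \mathbb{R}^m$.

• We define

$P(y \mid x, W) = \frac{e^{W \cdot \phi(x, y)}}{\sum_{y' \in \mathcal{Y}} e^{W \cdot \phi(x, y')}}$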
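The definition of $P(y \mid x, W)$ in NumPy, assuming the feature vectors $\phi(x, y)$ for all y are stacked as rows of a matrix. Subtracting the maximum score before exponentiating is a standard numerical-stability step; it cancels between the numerator and the normalization term.

```python
import numpy as np

def log_linear_probs(W, phi_x):
    """P(y | x, W) for every y, given phi_x[y] = phi(x, y) as rows of a (|Y|, m) array."""
    scores = phi_x @ W                      # W . phi(x, y) for each y
    scores = scores - scores.max()          # stability shift; cancels in the ratio
    exp_scores = np.exp(scores)
    return exp_scores / exp_scores.sum()    # divide by the normalization term

phi_x = np.array([[1.0, 0.0, 1.0],   # phi(x, y1)
                  [0.0, 1.0, 0.0],   # phi(x, y2)
                  [0.0, 0.0, 1.0]])  # phi(x, y3)
print(log_linear_probs(np.zeros(3), phi_x))           # uniform: 1/3 each
print(log_linear_probs(np.array([2.0, 0.0, -1.0]), phi_x))  # proportional to e^1, e^0, e^-1
```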

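More About Log-Linear Models

• Why the name?

$\log P(y \mid x, W) = \underbrace{W \cdot \phi(x, y)}_{\text{Linear term}} - \underbrace{\log \sum_{y' \in \mathcal{Y}} e^{W \cdot \phi(x, y')}}_{\text{Normalization term}}$

• Maximum-likelihood estimates given a training sample $(x_i, y_i)$ for $i = 1 \ldots n$, each $(x_i, y_i) \in \mathcal{X} \times \mathcal{Y}$:

$W_{ML} = \arg\max_{W \in \mathbb{R}^m} L(W)$

where

$L(W) = \sum_{i=1}^n \log P(y_i \mid x_i, W) = \sum_{i=1}^n W \cdot \phi(x_i, y_i) - \sum_{i=1}^n \log \sum_{y' \in \mathcal{Y}} e^{W \cdot \phi(x_i, y')}$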

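Calculating the Maximum-Likelihood Estimates

• Need to maximize:

$L(W) = \sum_{i=1}^n W \cdot \phi(x_i, y_i) - \sum_{i=1}^n \log \sum_{y' \in \mathcal{Y}} e^{W \cdot \phi(x_i, y')}$

• Calculating gradients:

$\frac{dL}{dW}\Big|_W = \sum_{i=1}^n \phi(x_i, y_i) - \sum_{i=1}^n \frac{\sum_{y' \in \mathcal{Y}} \phi(x_i, y') e^{W \cdot \phi(x_i, y')}}{\sum_{z' \in \mathcal{Y}} e^{W \cdot \phi(x_i, z')}}$

$= \sum_{i=1}^n \phi(x_i, y_i) - \sum_{i=1}^n \sum_{y' \in \mathcal{Y}} \phi(x_i, y') \frac{e^{W \cdot \phi(x_i, y')}}{\sum_{z' \in \mathcal{Y}} e^{W \cdot \phi(x_i, z')}}$

$= \underbrace{\sum_{i=1}^n \phi(x_i, y_i)}_{\text{Empirical counts}} - \underbrace{\sum_{i=1}^n \sum_{y' \in \mathcal{Y}} \phi(x_i, y') P(y' \mid x_i, W)}_{\text{Expected counts}}$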
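A sketch of the gradient as empirical counts minus expected counts, checked against central finite differences of $L(W)$ on random binary features. The array layout `Phi[i, y] = φ(x_i, y)` (shape n × |𝒴| × m) is an assumption of this sketch.

```python
import numpy as np

def log_probs(W, Phi):
    """log P(y | x_i, W) for all i, y. Phi has shape (n, |Y|, m): Phi[i, y] = phi(x_i, y)."""
    scores = Phi @ W                                     # (n, |Y|)
    scores = scores - scores.max(axis=1, keepdims=True)
    return scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))

def log_likelihood(W, Phi, y):
    return log_probs(W, Phi)[np.arange(len(y)), y].sum()

def gradient(W, Phi, y):
    """dL/dW = empirical counts - expected counts."""
    P = np.exp(log_probs(W, Phi))                        # P[i, y] = P(y | x_i, W)
    empirical = Phi[np.arange(len(y)), y].sum(axis=0)    # sum_i phi(x_i, y_i)
    expected = np.einsum("iy,iym->m", P, Phi)            # sum_i sum_y' phi(x_i, y') P(y' | x_i, W)
    return empirical - expected

rng = np.random.default_rng(0)
n, K, m = 5, 3, 4
Phi = rng.integers(0, 2, size=(n, K, m)).astype(float)
y = rng.integers(0, K, size=n)
W = rng.normal(size=m)
eps = 1e-6
numeric = np.array([(log_likelihood(W + eps * e, Phi, y) - log_likelihood(W - eps * e, Phi, y)) / (2 * eps)
                    for e in np.eye(m)])
print(np.allclose(gradient(W, Phi, y), numeric, atol=1e-5))   # True
```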

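Gradient Ascent Methods

• Need to maximize $L(W)$, where

$\frac{dL}{dW} = \sum_{i=1}^n \phi(x_i, y_i) - \sum_{i=1}^n \sum_{y' \in \mathcal{Y}} \phi(x_i, y') P(y' \mid x_i, W)$

• Initialization: $W = 0$

Iterate until convergence:

• Calculate $\Delta = \frac{dL}{dW}\Big|_W$

• Calculate $\beta^* = \arg\max_\beta L(W + \beta \Delta)$ (line search)

• Set $W \leftarrow W + \beta^* \Delta$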
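A minimal version of the loop above. The exact line search $\arg\max_\beta L(W + \beta \Delta)$ is replaced by a crude search over the fixed grid $\beta \in \{1, 1/2, 1/4, \ldots\}$, an assumption made to keep the sketch short; the toy feature array is hypothetical.

```python
import numpy as np

def log_probs(W, Phi):
    scores = Phi @ W
    scores = scores - scores.max(axis=1, keepdims=True)
    return scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))

def log_likelihood(W, Phi, y):
    return log_probs(W, Phi)[np.arange(len(y)), y].sum()

def gradient(W, Phi, y):
    P = np.exp(log_probs(W, Phi))
    return Phi[np.arange(len(y)), y].sum(axis=0) - np.einsum("iy,iym->m", P, Phi)

def gradient_ascent(Phi, y, iterations=50, tol=1e-8):
    W = np.zeros(Phi.shape[2])                           # Initialization: W = 0
    betas = [2.0 ** -j for j in range(30)]               # candidate step sizes
    for _ in range(iterations):
        delta = gradient(W, Phi, y)                      # Delta = dL/dW at W
        if np.linalg.norm(delta) < tol:
            break
        best = max(betas, key=lambda b: log_likelihood(W + b * delta, Phi, y))
        if log_likelihood(W + best * delta, Phi, y) <= log_likelihood(W, Phi, y):
            break                                        # no grid step improves L(W)
        W = W + best * delta                             # W <- W + beta* Delta
    return W

Phi = np.array([[[1, 0, 0], [0, 1, 0]],
                [[1, 0, 1], [0, 1, 1]],
                [[1, 0, 0], [0, 1, 1]]], dtype=float)
y = np.array([0, 1, 0])
W = gradient_ascent(Phi, y)
print(log_likelihood(np.zeros(3), Phi, y), log_likelihood(W, Phi, y))  # L(W) increases
```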

Conjugate Gradient Methods

• (Vanilla) gradient ascent can be very slow.

• Conjugate gradient methods require calculation of the gradient at each iteration, but do a line search in a direction which is a function of the current gradient and the previous step taken.

• Conjugate gradient packages are widely available. In general, they require a function

calc_gradient(W) → $\left( L(W), \frac{dL}{dW}\Big|_W \right)$

And that’s about it!

Parameter Estimation for Maximum Entropy: A Quick Overview

• Generalized Iterative Scaling (GIS)

– Improved Iterative Scaling (IIS)

• Better choices

– Binary classification: conjugate gradient methods

– Multi-class classification: limited-memory BFGS algorithm

Iterative Scaling

• Initialization:

$W = 0$

Calculate $H = \sum_i \phi(x_i, y_i)$ (empirical counts)

Calculate $C = \max_{i = 1 \ldots n, \, y \in \mathcal{Y}} \sum_{k=1}^m \phi_k(x_i, y)$

• Iterate until convergence:

Calculate $E(W) = \sum_i \sum_{y' \in \mathcal{Y}} \phi(x_i, y') P(y' \mid x_i, W)$ (expected counts)

For $k = 1 \ldots m$, set $W_k \leftarrow W_k + \frac{1}{C} \log \frac{H_k}{E_k(W)}$

• Converges to the maximum-likelihood solution provided that $\phi_k(x_i, y) \ge 0$ for all i, y, k
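A sketch of the iterative scaling updates on a toy problem with non-negative binary features, where every feature fires on at least one training pair (so each $H_k > 0$ and the logarithm is defined). It records $L(W)$ after every update, which should never decrease. The data and names are illustrative.

```python
import numpy as np

def probs(W, Phi):
    """P[i, y] = P(y | x_i, W); Phi has shape (n, |Y|, m)."""
    scores = Phi @ W
    scores = scores - scores.max(axis=1, keepdims=True)
    e = np.exp(scores)
    return e / e.sum(axis=1, keepdims=True)

def log_likelihood(W, Phi, y):
    return np.log(probs(W, Phi)[np.arange(len(y)), y]).sum()

def iterative_scaling(Phi, y, iterations=200):
    n = len(y)
    H = Phi[np.arange(n), y].sum(axis=0)   # empirical counts (assumed > 0 for every k)
    C = Phi.sum(axis=2).max()              # C = max_{i, y} sum_k phi_k(x_i, y)
    W = np.zeros(Phi.shape[2])
    trace = [log_likelihood(W, Phi, y)]
    for _ in range(iterations):
        E = np.einsum("iy,iym->m", probs(W, Phi), Phi)   # expected counts E(W)
        W = W + np.log(H / E) / C
        trace.append(log_likelihood(W, Phi, y))
    return W, trace

Phi = np.array([[[1, 0, 0], [0, 1, 0]],
                [[1, 0, 1], [0, 1, 1]],
                [[1, 0, 0], [0, 1, 1]]], dtype=float)
y = np.array([0, 1, 0])
W, trace = iterative_scaling(Phi, y)
print(trace[0] < trace[-1])                                   # True
print(all(b >= a - 1e-12 for a, b in zip(trace, trace[1:])))  # True: L(W) never decreases
```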

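Derivation of Iterative Scaling

• Consider a vector of updates $\delta \in \mathbb{R}^m$, so that $W_k \leftarrow W_k + \delta_k$.

• The gain in log-likelihood is then $L(W + \delta) - L(W)$:

$L(W + \delta) - L(W) = \sum_{i=1}^n (W + \delta) \cdot \phi(x_i, y_i) - \sum_{i=1}^n \log \sum_{y \in \mathcal{Y}} e^{(W + \delta) \cdot \phi(x_i, y)} - \left( \sum_{i=1}^n W \cdot \phi(x_i, y_i) - \sum_{i=1}^n \log \sum_{y \in \mathcal{Y}} e^{W \cdot \phi(x_i, y)} \right)$

$= \sum_{i=1}^n \delta \cdot \phi(x_i, y_i) - \sum_{i=1}^n \log \frac{\sum_{y \in \mathcal{Y}} e^{(W + \delta) \cdot \phi(x_i, y)}}{\sum_{z \in \mathcal{Y}} e^{W \cdot \phi(x_i, z)}}$

$= \sum_{i=1}^n \delta \cdot \phi(x_i, y_i) - \sum_{i=1}^n \log \sum_{y \in \mathcal{Y}} p(y \mid x_i, W) e^{\delta \cdot \phi(x_i, y)}$

$\ge \sum_{i=1}^n \delta \cdot \phi(x_i, y_i) + \sum_{i=1}^n \left( 1 - \sum_{y \in \mathcal{Y}} p(y \mid x_i, W) e^{\delta \cdot \phi(x_i, y)} \right)$ (from $-\log(x) \ge 1 - x$)

$= \sum_{i=1}^n \delta \cdot \phi(x_i, y_i) + \sum_{i=1}^n \left( 1 - \sum_{y \in \mathcal{Y}} p(y \mid x_i, W) \exp\left\{ \sum_k \delta_k \phi_k(x_i, y) + 0 \cdot (C - C_i(y)) \right\} \right)$

(where $C_i(y) = \sum_k \phi_k(x_i, y)$, and $C = \max_{i, y} C_i(y)$)

$\ge \sum_{i=1}^n \delta \cdot \phi(x_i, y_i) + \sum_{i=1}^n \left( 1 - \sum_{y \in \mathcal{Y}} p(y \mid x_i, W) \left( \sum_k \frac{\phi_k(x_i, y)}{C} e^{C \delta_k} + \frac{C - C_i(y)}{C} \right) \right)$

(from $e^{\sum_x q(x) f(x)} \le \sum_x q(x) e^{f(x)}$ for any $q(x) \ge 0$ with $\sum_x q(x) = 1$; here the weights are $\phi_k(x_i, y)/C$ and $(C - C_i(y))/C$)

$= A(W, \delta)$

• We now have an auxiliary function $A(W, \delta)$ such that

$L(W + \delta) - L(W) \ge A(W, \delta)$

• Now maximize $A(W, \delta)$ with respect to each $\delta_k$:

$\frac{dA}{d\delta_k} = \sum_{i=1}^n \phi_k(x_i, y_i) - \sum_{i=1}^n \sum_{y \in \mathcal{Y}} p(y \mid x_i, W) \phi_k(x_i, y) e^{C \delta_k} = H_k - e^{C \delta_k} E_k(W)$

• Setting derivatives equal to 0 gives iterative scaling:

$\delta_k = \frac{1}{C} \log \frac{H_k}{E_k(W)}$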

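Improved Iterative Scaling (Berger et al.)

$L(W + \delta) - L(W) \ge \sum_{i=1}^n \delta \cdot \phi(x_i, y_i) + \sum_{i=1}^n \left( 1 - \sum_{y \in \mathcal{Y}} p(y \mid x_i, W) e^{\delta \cdot \phi(x_i, y)} \right)$

$\ge \sum_{i=1}^n \delta \cdot \phi(x_i, y_i) + \sum_{i=1}^n \left( 1 - \sum_{y \in \mathcal{Y}} p(y \mid x_i, W) \sum_k \frac{\phi_k(x_i, y)}{f(x_i, y)} e^{f(x_i, y) \delta_k} \right) = A(W, \delta)$

(where $f(x_i, y) = \sum_k \phi_k(x_i, y)$, and from $e^{\sum_x q(x) f(x)} \le \sum_x q(x) e^{f(x)}$ for any $q(x) \ge 0$ with $\sum_x q(x) = 1$)

Maximizing $A(W, \delta)$ w.r.t. $\delta$ involves finding the $\delta_k$'s which solve:

$\sum_{i=1}^n \phi_k(x_i, y_i) - \sum_{i=1}^n \sum_{y \in \mathcal{Y}} p(y \mid x_i, W) \phi_k(x_i, y) e^{\delta_k f(x_i, y)} = 0$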


Maximum-Entropy Properties of Log-Linear Models

• We define the set of distributions which satisfy the linear constraints implied by the data:

$\mathcal{P} = \left\{ p : \underbrace{\sum_{i=1}^n \phi(x_i, y_i)}_{\text{Empirical counts}} = \underbrace{\sum_{i=1}^n \sum_{y \in \mathcal{Y}} \phi(x_i, y) p(y \mid x_i)}_{\text{Expected counts}} \right\}$

Here, p is an $n \times |\mathcal{Y}|$ vector defining $P(y \mid x_i)$ for all i, y.

• Note that at least one distribution satisfies these constraints, i.e.,

$p(y \mid x_i) = 1$ if $y = y_i$; 0 otherwise

Maximum-Entropy Properties of Log-Linear Models

• The entropy of any distribution is:

$H(p) = -\frac{1}{n} \sum_i \sum_{y \in \mathcal{Y}} p(y \mid x_i) \log p(y \mid x_i)$

• Entropy is a measure of the “smoothness” of a distribution.

• In this case, entropy is maximized by the uniform distribution,

$p(y \mid x_i) = \frac{1}{|\mathcal{Y}|}$ for all $y, x_i$
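The entropy above computed in NumPy with the usual convention $0 \log 0 = 0$. The uniform distribution attains the maximum $\log |\mathcal{Y}|$, while the point-mass distribution from the previous slide ($p(y \mid x_i) = 1$ iff $y = y_i$) has entropy 0.

```python
import numpy as np

def conditional_entropy(P):
    """H(p) = -(1/n) sum_i sum_y p(y|x_i) log p(y|x_i); P has shape (n, |Y|), rows sum to 1."""
    P = np.asarray(P, dtype=float)
    safe = np.where(P > 0, P, 1.0)                  # avoid log(0); those terms are set to 0 below
    terms = np.where(P > 0, P * np.log(safe), 0.0)  # convention: 0 * log 0 = 0
    return -terms.sum() / P.shape[0]

uniform = np.full((2, 3), 1 / 3)
peaked = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print(np.isclose(conditional_entropy(uniform), np.log(3)))  # True: the maximum, log |Y|
print(np.isclose(conditional_entropy(peaked), 0.0))         # True: no smoothness at all
```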

The Maximum-Entropy Solution

• The maximum-entropy model is

$p^* = \arg\max_{p \in \mathcal{P}} H(p)$

• Intuition: find a distribution which

– satisfies the constraints

– is as smooth as possible

Maximum-Entropy Properties of Log-Linear Models

• We define the set of distributions which can be specified in log-linear form:

$\mathcal{Q} = \left\{ p : p(y \mid x_i) = \frac{e^{W \cdot \phi(x_i, y)}}{\sum_{y' \in \mathcal{Y}} e^{W \cdot \phi(x_i, y')}}, \ W \in \mathbb{R}^m \right\}$

Here, each p is an $n \times |\mathcal{Y}|$ vector defining $P(y \mid x_i)$ for all i, y.

• Define the negative log-likelihood of the data

$L(p) = -\sum_i \log p(y_i \mid x_i)$

• Maximum-likelihood solution:

$p^* = \arg\min_{q \in \bar{\mathcal{Q}}} L(q)$

where $\bar{\mathcal{Q}}$ is the closure of $\mathcal{Q}$

Duality Theorem

• There is a unique distribution $q^*$ satisfying

– $q^* \in \mathcal{P} \cap \bar{\mathcal{Q}}$

– $q^* = \arg\max_{p \in \mathcal{P}} H(p)$ (max-ent solution)

– $q^* = \arg\min_{q \in \bar{\mathcal{Q}}} L(q)$ (max-likelihood solution)

• This implies:

1. The maximum-entropy solution can be written in log-linear form.

2. Finding the maximum-likelihood solution also gives the maximum-entropy solution.

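Developing Intuition Using Lagrange Multipliers

• Max-ent problem: find $\arg\max_{p \in \mathcal{P}} H(p)$

• Equivalent (unconstrained) problem:

$\max_{p \in \Delta} \inf_{W \in \mathbb{R}^m} \mathcal{L}(p, W)$

where $\Delta$ is the space of all probability distributions, and

$\mathcal{L}(p, W) = H(p) - \sum_{k=1}^m W_k \left( \sum_i \phi_k(x_i, y_i) - \sum_i \sum_{y \in \mathcal{Y}} \phi_k(x_i, y) p(y \mid x_i) \right)$

• Why the equivalence?

$\inf_{W \in \mathbb{R}^m} \mathcal{L}(p, W) = H(p)$ if all constraints are satisfied, i.e., $p \in \mathcal{P}$; $-\infty$ otherwise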

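Developing Intuition Using Lagrange Multipliers

• We can now switch the min and max:

$\max_{p \in \mathcal{P}} H(p) = \max_{p \in \Delta} \inf_{W \in \mathbb{R}^m} \mathcal{L}(p, W) = \inf_{W \in \mathbb{R}^m} \max_{p \in \Delta} \mathcal{L}(p, W) = \inf_{W \in \mathbb{R}^m} \mathcal{L}(W)$

where

$\mathcal{L}(W) = \max_{p \in \Delta} \mathcal{L}(p, W)$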

• By differentiating $\mathcal{L}(p, W)$ w.r.t. p, setting the derivative to zero (making sure to include Lagrange multipliers that ensure $\sum_y p(y \mid x_i) = 1$ for all i), and solving

$p^* = \arg\max_p \mathcal{L}(p, W)$

gives

$p^*(y \mid x_i, W) = \frac{e^{\sum_k W_k \phi_k(x_i, y)}}{\sum_{y' \in \mathcal{Y}} e^{\sum_k W_k \phi_k(x_i, y')}}$

• Also,

$\mathcal{L}(W) = \max_p \mathcal{L}(p, W) = \mathcal{L}(p^*(y \mid x_i, W), W) = -\sum_i \log p^*(y_i \mid x_i, W)$

i.e., the negative log-likelihood under parameters W.

To Summarize

• We’ve shown that

$\max_{p \in \mathcal{P}} H(p) = \inf_{W \in \mathbb{R}^m} \mathcal{L}(W)$

where $\mathcal{L}(W)$ is the negative log-likelihood.

• This argument is pretty informal, as we have to be careful about switching the max and inf, and we need to relate $\inf_{W \in \mathbb{R}^m} \mathcal{L}(W)$ to finding $q^* = \arg\min_{q \in \bar{\mathcal{Q}}} L(q)$.

• See [Stephen Della Pietra, Vincent Della Pietra, and Lafferty, 1997] for a proof of the duality theorem.

Is the Maximum-Entropy Property Useful?

• Intuition: find a distribution which

1. satisfies the constraints

2. is as smooth as possible

• One problem: the constraints are defined by empirical counts from the data.

• Another problem: no formal relationship between the maximum-entropy property and generalization(?) (at least none is given in the NLP literature)


A Simple Approach: Count Cut-Offs

• [Ratnaparkhi 1998] (PhD thesis): include all features that occur 5 times or more in the training data, i.e.,

$\sum_i \phi_k(x_i, y_i) \ge 5$ for all features k
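A sketch of the count cut-off, assuming each training pair is represented by the names of the binary features that fire on it, so that a feature's count is $\sum_i \phi_k(x_i, y_i)$. The feature names are hypothetical.

```python
from collections import Counter

def count_cutoff(active_features_per_example, threshold=5):
    """Keep features whose empirical count sum_i phi_k(x_i, y_i) is at least `threshold`.

    active_features_per_example: for each training pair (x_i, y_i), the names of the
    binary features that fire on it.
    """
    counts = Counter(f for feats in active_features_per_example for f in feats)
    return {f for f, c in counts.items() if c >= threshold}

data = [["word=base,tag=Vt", "t=Vt"]] * 5 + [["word=rare,tag=NN", "t=NN"]] * 2
print(sorted(count_cutoff(data)))   # ['t=Vt', 'word=base,tag=Vt']
```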

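Gaussian Priors

• Modified loss function

$L(W) = \sum_{i=1}^n W \cdot \phi(x_i, y_i) - \sum_{i=1}^n \log \sum_{y' \in \mathcal{Y}} e^{W \cdot \phi(x_i, y')} - \sum_{k=1}^m \frac{W_k^2}{2 \sigma^2}$

• Calculating gradients:

$\frac{dL}{dW} = \underbrace{\sum_{i=1}^n \phi(x_i, y_i)}_{\text{Empirical counts}} - \underbrace{\sum_{i=1}^n \sum_{y' \in \mathcal{Y}} \phi(x_i, y') P(y' \mid x_i, W)}_{\text{Expected counts}} - \frac{1}{\sigma^2} W$

• Can run conjugate gradient methods as before.

• Adds a penalty for large weights.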
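The prior only adds $-\sum_k W_k^2 / (2\sigma^2)$ to the objective and $-W/\sigma^2$ to the gradient. The sketch below implements both and verifies the gradient by finite differences; the array layout `Phi[i, y] = φ(x_i, y)` and the value $\sigma^2 = 2$ are assumptions.

```python
import numpy as np

def regularized_objective(W, Phi, y, sigma2):
    """sum_i W.phi(x_i, y_i) - sum_i log sum_y' exp(W.phi(x_i, y')) - sum_k W_k^2 / (2 sigma^2)."""
    scores = Phi @ W                                     # (n, |Y|)
    top = scores.max(axis=1, keepdims=True)
    log_z = (top + np.log(np.exp(scores - top).sum(axis=1, keepdims=True))).ravel()
    return (scores[np.arange(len(y)), y] - log_z).sum() - (W @ W) / (2 * sigma2)

def regularized_gradient(W, Phi, y, sigma2):
    """Empirical counts - expected counts - W / sigma^2."""
    scores = Phi @ W
    P = np.exp(scores - scores.max(axis=1, keepdims=True))
    P /= P.sum(axis=1, keepdims=True)                    # P[i, y] = P(y | x_i, W)
    empirical = Phi[np.arange(len(y)), y].sum(axis=0)
    expected = np.einsum("iy,iym->m", P, Phi)
    return empirical - expected - W / sigma2

rng = np.random.default_rng(1)
Phi = rng.integers(0, 2, size=(6, 3, 4)).astype(float)
y = rng.integers(0, 3, size=6)
W = rng.normal(size=4)
eps, sigma2 = 1e-6, 2.0
numeric = np.array([(regularized_objective(W + eps * e, Phi, y, sigma2)
                     - regularized_objective(W - eps * e, Phi, y, sigma2)) / (2 * eps)
                    for e in np.eye(4)])
print(np.allclose(regularized_gradient(W, Phi, y, sigma2), numeric, atol=1e-5))  # True
```

A smaller $\sigma^2$ pulls the weights more strongly toward zero.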

Experiments with Gaussian Priors

• [Chen and Rosenfeld, 1998] apply maximum-entropy models to language modeling: estimate $P(w_i \mid w_{i-2}, w_{i-1})$

• Unigram, bigram, trigram features, e.g.,

$\phi_1(w_{i-2}, w_{i-1}, w_i) = 1$ if trigram is (the, dog, laughs); 0 otherwise

$\phi_2(w_{i-2}, w_{i-1}, w_i) = 1$ if bigram is (dog, laughs); 0 otherwise

$\phi_3(w_{i-2}, w_{i-1}, w_i) = 1$ if unigram is (laughs); 0 otherwise

$P(w_i \mid w_{i-2}, w_{i-1}) = \frac{e^{\sum_k \phi_k(w_{i-2}, w_{i-1}, w_i) W_k}}{\sum_w e^{\sum_k \phi_k(w_{i-2}, w_{i-1}, w) W_k}}$

Experiments with Gaussian Priors

• In regular (unsmoothed) maxent, if all n-gram features are included, then it’s equivalent to maximum-likelihood estimates!

$P(w_i \mid w_{i-2}, w_{i-1}) = \frac{\mathrm{Count}(w_{i-2}, w_{i-1}, w_i)}{\mathrm{Count}(w_{i-2}, w_{i-1})}$

• [Chen and Rosenfeld, 1998]: with Gaussian priors, they get very good results. Performs as well as or better than standardly used “discounting methods” such as Kneser-Ney smoothing (see the lecture on language models).

• Note: their method uses a development set to optimize parameters.

• Downside: computing $\sum_w e^{\sum_k \phi_k(w_{i-2}, w_{i-1}, w) W_k}$ is SLOW.
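For comparison, the maximum-likelihood trigram estimate above computed directly from counts. In this sketch the denominator counts $(w_{i-2}, w_{i-1})$ only when some word follows it, so each conditional distribution sums to 1. An unseen history raises `ZeroDivisionError`, which is exactly the sparsity problem that smoothing addresses. The toy corpus is illustrative.

```python
from collections import Counter

def trigram_mle(tokens):
    """P(w_i | w_{i-2}, w_{i-1}) = Count(w_{i-2}, w_{i-1}, w_i) / Count(w_{i-2}, w_{i-1}).

    The history count only includes bigrams that are followed by another token.
    """
    trigrams = Counter(zip(tokens, tokens[1:], tokens[2:]))
    histories = Counter(zip(tokens, tokens[1:-1]))
    return lambda u, v, w: trigrams[(u, v, w)] / histories[(u, v)]

p = trigram_mle("the dog laughs the dog barks".split())
print(p("the", "dog", "laughs"))   # 0.5
print(p("the", "dog", "barks"))    # 0.5
```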

Feature Selection Methods

• Goal: find a small number of features that make good progress in optimizing the log-likelihood

• A greedy method:

– Step 1: Throughout the algorithm, maintain a set of active features. Initialize this set to be empty.

– Step 2: From outside the set of active features, choose the feature with the largest estimated impact on increasing the log-likelihood, and add it to the active feature set.

– Step 3: Maximize $L(W)$ with respect to the weights of the active features (inactive features keep weight 0). Return to Step 2.
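The three steps can be sketched in NumPy as follows. This sketch is an illustration under assumptions and is not Ratnaparkhi's implementation. First, it approximates the "estimated impact" of a candidate feature by the magnitude of its partial derivative $|\partial L / \partial W_k|$ at the current weights, a cheap stand-in for a proper gain estimate. Second, Step 3 uses plain gradient ascent without a prior instead of conjugate gradient. Features are encoded densely as `Phi` with shape (n, |Y|, m), and all names are hypothetical.

```python
import numpy as np


def log_likelihood(W, Phi, y):
    """L(W) = sum_i W.Phi(x_i, y_i) - sum_i log sum_y' exp(W.Phi(x_i, y'))."""
    scores = Phi @ W                                   # shape (n, |Y|)
    gold = scores[np.arange(len(y)), y]
    return float(np.sum(gold - np.logaddexp.reduce(scores, axis=1)))


def gradient(W, Phi, y):
    """Empirical counts minus expected counts."""
    scores = Phi @ W
    scores = scores - scores.max(axis=1, keepdims=True)
    P = np.exp(scores)
    P /= P.sum(axis=1, keepdims=True)                  # P(y' | x_i, W)
    empirical = Phi[np.arange(len(y)), y].sum(axis=0)
    expected = np.einsum("ij,ijk->k", P, Phi)
    return empirical - expected


def fit_active(W, Phi, y, active, steps=200, lr=0.1):
    """Step 3: maximize L(W) over the active weights; inactive weights stay 0."""
    mask = np.zeros_like(W)
    mask[sorted(active)] = 1.0
    W = W.copy()
    for _ in range(steps):
        W += lr * mask * gradient(W, Phi, y)
    return W


def greedy_feature_selection(Phi, y, num_features):
    W = np.zeros(Phi.shape[2])
    active = set()                                     # Step 1: start with no active features
    for _ in range(num_features):
        # Step 2: estimated impact of each inactive feature = |dL/dW_k| at the current W.
        impact = np.abs(gradient(W, Phi, y))
        if active:
            impact[sorted(active)] = -np.inf           # never re-select an active feature
        active.add(int(np.argmax(impact)))
        W = fit_active(W, Phi, y, active)              # Step 3, then back to Step 2
    return active, W
```

`greedy_feature_selection(Phi, y, num_features=10)` returns the chosen feature indices and the fitted weights. Each pass through Step 3 re-optimizes all active weights, so the total cost grows with the number of selection rounds.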


Figures from [Ratnaparkhi 1998] (PhD thesis)

• The task: PP attachment ambiguity

• ME Default: count cut-off of 5

• ME Tuned: count cut-offs vary for 4-tuple, 3-tuple, 2-tuple, and unigram features

• ME IFS: greedy feature selection method, as described above


Maximum Entropy (ME) and Decision Tree (DT) Experiments on PP Attachment

| Experiment | Accuracy | Training Time | # of Features |
|---|---|---|---|
| ME Default | 82.0% | 10 min | 4028 |
| ME Tuned | 83.7% | 10 min | 83875 |
| ME IFS | 80.5% | 30 hours | 387 |
| DT Default | 72.2% | 1 min | |
| DT Tuned | 80.4% | 10 min | |
| DT Binary | - | 1 week | |
| Baseline | 70.4% | | |


Figures from [Ratnaparkhi 1998] (PhD thesis)

• A second task: text classification, identifying articles about acquisitions

| Experiment | Accuracy | Training Time | # of Features |
|---|---|---|---|
| ME Default | 95.5% | 15 min | 2350 |
| ME IFS | 95.8% | 15 hours | 356 |
| DT Default | 91.6% | 18 hours | |
| DT Tuned | 92.1% | 10 hours | |


Summary

• Introduced log-linear models as a general approach for modeling conditional probabilities $P(y \mid x)$

• Optimization methods:

– Iterative scaling

– Gradient ascent

– Conjugate gradient ascent

• Maximum-entropy properties of log-linear models

• Smoothing methods using Gaussian priors, and feature selection methods


References

• [Altun, Tsochantaridis, and Hofmann, 2003] Altun, Y., I. Tsochantaridis, and T. Hofmann. 2003. Hidden Markov Support Vector Machines. In Proceedings of ICML 2003.

• [Bartlett 1998] P. L. Bartlett. 1998. The sample complexity of pattern classification with neural networks: the size of the weights is more important than the size of the network. IEEE Transactions on Information Theory, 44(2): 525-536, 1998.

• [Bod 98] Bod, R. (1998). Beyond Grammar: An Experience-Based Theory of Language. CSLI Publications/Cambridge University Press.

• [Booth and Thompson 73] Booth, T., and Thompson, R. 1973. Applying probability measures to abstract languages. IEEE Transactions on Computers, C-22(5), pages 442–450.

• [Borthwick et. al 98] Borthwick, A., Sterling, J., Agichtein, E., and Grishman, R. (1998). Exploiting Diverse Knowledge Sources via Maximum Entropy in Named Entity Recognition. Proc. of the Sixth Workshop on Very Large Corpora.

• [Collins and Duffy 2001] Collins, M. and Duffy, N. (2001). Convolution Kernels for Natural Language. In Proceedings of NIPS 14.

• [Collins and Duffy 2002] Collins, M. and Duffy, N. (2002). New Ranking Algorithms for Parsing and Tagging: Kernels over Discrete Structures, and the Voted Perceptron. In Proceedings of ACL 2002.

• [Collins 2002a] Collins, M. (2002a). Discriminative Training Methods for Hidden Markov Models: Theory and Experiments with the Perceptron Algorithm. In Proceedings of EMNLP 2002.

• [Collins 2002b] Collins, M. (2002b). Parameter Estimation for Statistical Parsing Models: Theory and Practice of Distribution-Free Methods. To appear as a book chapter.


• [Crammer and Singer 2001a] Crammer, K., and Singer, Y. 2001a. On the Algorithmic Implementation of Multiclass Kernel-based Vector Machines. Journal of Machine Learning Research, 2(Dec):265-292.

• [Crammer and Singer 2001b] Koby Crammer and Yoram Singer. 2001b. Ultraconservative Online Algorithms for Multiclass Problems. In Proceedings of COLT 2001.

• [Freund and Schapire 99] Freund, Y. and Schapire, R. (1999). Large Margin Classification using the Perceptron Algorithm. Machine Learning, 37(3):277–296.

• [Helmbold and Warmuth 95] Helmbold, D., and Warmuth, M. On Weak Learning. Journal of Computer and System Sciences, 50(3):551-573, June 1995.

• [Hopcroft and Ullman 1979] Hopcroft, J. E., and Ullman, J. D. 1979. Introduction to Automata Theory, Languages, and Computation. Reading, Mass.: Addison–Wesley.

• [Johnson et. al 1999] Johnson, M., Geman, S., Canon, S., Chi, Z., & Riezler, S. (1999). Estimators for stochastic “unification-based” grammars. In Proceedings of the 37th Annual Meeting of the Association for Computational Linguistics. San Francisco: Morgan Kaufmann.


• [Lafferty et al. 2001] John Lafferty, Andrew McCallum, and Fernando Pereira. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of ICML-01, pages 282-289, 2001.

• [Littlestone and Warmuth, 1986] Littlestone, N., and Warmuth, M. 1986. Relating data compression and learnability. Technical report, University of California, Santa Cruz.

• [MSM93] Marcus, M., Santorini, B., & Marcinkiewicz, M. (1993). Building a large annotated corpus of English: The Penn Treebank. Computational Linguistics, 19, 313-330.

• [McCallum et al. 2000] McCallum, A., Freitag, D., and Pereira, F. (2000). Maximum entropy Markov models for information extraction and segmentation. In Proceedings of ICML 2000.

• [Miller et. al 2000] Miller, S., Fox, H., Ramshaw, L., and Weischedel, R. 2000. A Novel Use of Statistical Parsing to Extract Information from Text. In Proceedings of ANLP 2000.

• [Ramshaw and Marcus 95] Ramshaw, L., and Marcus, M. P. (1995). Text Chunking Using Transformation-Based Learning. In Proceedings of the Third ACL Workshop on Very Large Corpora, Association for Computational Linguistics, 1995.


• [Ratnaparkhi 96] Ratnaparkhi, A. (1996). A maximum entropy part-of-speech tagger. In Proceedings of the Empirical Methods in Natural Language Processing Conference.

• [Schapire et al., 1998] Schapire R., Freund Y., Bartlett P. and Lee W. S. 1998. Boosting the margin: A new explanation for the effectiveness of voting methods. The Annals of Statistics, 26(5):1651-1686.

• [Zhang, 2002] Zhang, T. 2002. Covering Number Bounds of Certain Regularized Linear Function Classes. Journal of Machine Learning Research, 2(Mar):527-550, 2002.


Assignment (17)

1. Use a K-NN classifier to classify the iris data. Use 10-fold cross-validation to estimate the error rate. To see the effect of the value of K on the performance of the K-NN classifier, try K = 1, 3, 5, 7, 9. Plot the average error rate as a function of K and report the variance of the error for each K.

A MATLAB version of the KNN classifier

There are 3 MATLAB files (knn.m, countele.m, vecdist.m) in this zip file. The 3 files were downloaded from http://neural.cs.nthu.edu.tw/jang/matlab/demo. The file knn.m implements the KNN algorithm using the Euclidean distance metric. The files countele.m and vecdist.m are helper files required by the knn() function. Place the three files in the same folder. Type "help knn" at the MATLAB prompt to find out the function usage.

Iris data:

The iris data set, one of the best-known data sets, has been used in the pattern recognition literature to evaluate the performance of various classification and clustering algorithms. This data set consists of 150 4-dimensional patterns belonging to three types of iris flowers (setosa, versicolor, and virginica). There are 50 patterns per class. The 4 features correspond to sepal length, sepal width, petal length and petal width (the unit of measurement is cm).

The data can be accessed at http://www.cse.msu.edu/cse802/homework/resources/hw4/iris.txt. The class label is given at the end of every pattern.
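For readers who are not using MATLAB, a rough Python/NumPy equivalent of the K-NN experiment is sketched below. It is a substitute for knn.m, not a port of it. The sketch assumes the iris patterns are already loaded into a float array `X` of shape (150, 4), with integer labels `y` in {0, 1, 2}. Parsing iris.txt is omitted because the file's exact layout is not given here. Distances are Euclidean, as in knn.m, and ties in the vote go to the smallest label, which is an arbitrary choice. The function names are hypothetical.

```python
import numpy as np


def knn_predict(train_X, train_y, test_X, k):
    """Label each test point by majority vote among its k nearest training
    points (Euclidean distance); vote ties go to the smallest label."""
    d = np.linalg.norm(test_X[:, None, :] - train_X[None, :, :], axis=2)  # (n_test, n_train)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = train_y[nearest]                                                # (n_test, k)
    return np.array([np.bincount(row).argmax() for row in votes])


def cv_error(X, y, k, folds=10, seed=0):
    """Error rate of k-NN on each of `folds` cross-validation folds."""
    idx = np.random.default_rng(seed).permutation(len(y))
    errors = []
    for test in np.array_split(idx, folds):
        train = np.setdiff1d(idx, test)  # every index not in the held-out fold
        pred = knn_predict(X[train], y[train], X[test], k)
        errors.append(np.mean(pred != y[test]))
    return np.array(errors)
```

Calling `cv_error(X, y, k)` for each k in 1, 3, 5, 7, 9 returns ten per-fold error rates. Their `.mean()` and `.var()` are the quantities the assignment asks you to plot and report.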


2. Using the MATLAB implementation of the SVM classifier available here:

– Train a one-vs-rest SVM on the iris data, and estimate the classification error using 10-fold cross-validation.

– Train one-vs-one SVMs on the iris data, combine them using a majority vote rule, and estimate the classification error.

Data can be downloaded from http://www.cse.msu.edu/~cse802/homework/hw5/index.html
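The two combination rules in this exercise can be written without reference to any particular SVM package. Below is a minimal Python sketch. It assumes each trained binary SVM is wrapped as a callable. The wrappers are hypothetical: for one-vs-rest, the callable returns a real-valued score, and for one-vs-one, it returns the winning class.

```python
from collections import Counter
from itertools import combinations


def one_vs_rest_predict(x, classes, scorers):
    """scorers[c](x) is the real-valued output of the binary classifier trained
    to separate class c from all other classes; pick the highest-scoring class."""
    return max(classes, key=lambda c: scorers[c](x))


def one_vs_one_predict(x, classes, pairwise):
    """pairwise[(a, b)](x) returns a or b, for every pair with a listed before b
    in `classes`. Each pairwise classifier casts one vote; the class with the most
    votes wins, and ties go to the class listed first in `classes`."""
    votes = Counter(pairwise[(a, b)](x) for a, b in combinations(classes, 2))
    return max(classes, key=lambda c: votes[c])
```

With three iris classes, one-vs-rest needs 3 classifiers and one-vs-one needs 3 pairwise classifiers. Either prediction function can be used inside the same 10-fold loop to estimate the error.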
