SVMs in a Nutshell: Support Vector Machine Basics



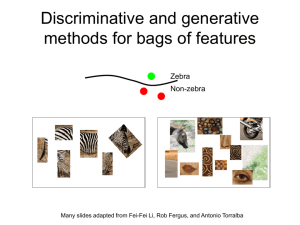

What is an SVM?

• Support Vector Machine

– More accurately called a support vector classifier

– Separates training data into two classes so that the margin (gap) between the classes is as wide as possible

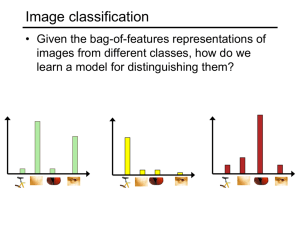

Simpler version

• Suppose the data is linearly separable

• Then we could draw a line between the two classes


• But what is the best line? In an SVM, we use the maximum margin hyperplane: the one farthest from the nearest training points of either class

Maximum Margin Hyperplane

What if it’s non-linear?

Higher dimensions

• An SVM uses a kernel function to (implicitly) map the data into a higher-dimensional space where it can be separated by a hyperplane
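A minimal Python sketch of this idea, assuming a hypothetical 1-D toy dataset and the explicit feature map φ(x) = (x, x²); neither comes from the slides. No single threshold on x separates the classes, but a straight line in (x, x²) space does, and the kernel k(x, z) = xz + x²z² returns the same value as mapping both points and taking their dot product.

```python
# Hypothetical 1-D toy data: class -1 near the origin, class +1 farther out on both sides,
# so no single threshold on x can separate the classes.
data = [(-3.0, +1), (-2.5, +1), (-0.5, -1), (0.0, -1), (0.7, -1), (2.8, +1), (3.2, +1)]

def phi(x):
    """Explicit feature map from 1-D to 2-D: x -> (x, x^2)."""
    return (x, x * x)

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def kernel(x, z):
    """k(x, z) = x*z + x^2*z^2, which equals dot(phi(x), phi(z)) without building phi."""
    return x * z + (x * x) * (z * z)

# In (x, x^2) space the line x^2 = 4, i.e. w = (0, 1), b = 4, separates the classes.
w, b = (0.0, 1.0), 4.0
print(all(c * (dot(w, phi(x)) - b) > 0 for x, c in data))  # True

# The kernel matches the explicit mapping followed by a dot product.
print(all(abs(kernel(x, z) - dot(phi(x), phi(z))) < 1e-12
          for x, _ in data for z, _ in data))  # True
```

In practice the point of a kernel is that the mapped space is never built; an RBF kernel, for example, corresponds to a space too large to construct explicitly.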

What if it’s not separable?

• Use linear separation, but allow training errors

• This is called using a “soft margin”

• A higher cost for errors produces a model that fits the training data more closely, but it may not generalize to new data

• The choice of parameters (kernel and cost) determines the accuracy of the SVM model

• To avoid over- or under-fitting, use cross-validation to choose the parameters
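A minimal sketch of k-fold cross-validation over a (kernel, cost) grid in plain Python. The training step is left to a hypothetical caller-supplied function `fit_and_score(train, valid, kernel, C)` that fits an SVM on the training split and returns its accuracy on the validation split; it stands in for whatever SVM library is used and is not a real API. The data is assumed to be shuffled beforehand, since contiguous folds over class-sorted data would be unbalanced.

```python
from itertools import product

def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of near-equal size (assumes shuffled data)."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def cross_val_score(data, k, params, fit_and_score):
    """Average validation score of one parameter setting over k folds."""
    scores = []
    for fold in k_fold_indices(len(data), k):
        held_out = set(fold)
        train = [d for i, d in enumerate(data) if i not in held_out]
        valid = [data[i] for i in fold]
        scores.append(fit_and_score(train, valid, **params))
    return sum(scores) / len(scores)

def grid_search(data, k, kernels, costs, fit_and_score):
    """Return (best_params, best_score) over every (kernel, C) pair in the grid."""
    best_params, best_score = None, float("-inf")
    for kernel, C in product(kernels, costs):
        params = {"kernel": kernel, "C": C}
        score = cross_val_score(data, k, params, fit_and_score)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

Every candidate is scored only on data it was not trained on, so a large C that merely memorizes the training set does not win by default.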

Some math

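• Data: $\{(\mathbf{x}_1, c_1), (\mathbf{x}_2, c_2), \ldots, (\mathbf{x}_n, c_n)\}$

• $\mathbf{x}_i$ is a vector of attributes/features, scaled to comparable ranges

• $c_i$ is the class of the vector ($-1$ or $+1$)

• Dividing hyperplane: $\mathbf{w} \cdot \mathbf{x} - b = 0$

• Linearly separable means there exists a hyperplane such that $\mathbf{w} \cdot \mathbf{x}_i - b > 0$ for every positive example and $\mathbf{w} \cdot \mathbf{x}_i - b < 0$ for every negative example

• $\mathbf{w}$ points perpendicular to the hyperplane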
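A minimal Python sketch of these definitions: the sign of w·x − b gives the predicted class, and a hyperplane separates the data when c_i(w·x_i − b) > 0 for every example. The function names and the 2-D dataset are hypothetical, chosen for illustration.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def decision(w, b, x):
    """Signed value w.x - b: positive on the +1 side, negative on the -1 side."""
    return dot(w, x) - b

def classify(w, b, x):
    """Predicted class; a point exactly on the hyperplane is assigned +1 (an arbitrary tie-break)."""
    return 1 if decision(w, b, x) >= 0 else -1

def separates(w, b, data):
    """True if every (x_i, c_i) lies strictly on its own class's side of w.x - b = 0."""
    return all(c * decision(w, b, x) > 0 for x, c in data)

# Hypothetical 2-D training data.
data = [((2.0, 1.0), +1), ((3.0, 2.0), +1), ((-1.0, -1.0), -1), ((0.0, -2.0), -1)]
print(separates((1.0, 1.0), 1.0, data))  # True
print(separates((1.0, 0.0), 2.5, data))  # False: (2.0, 1.0) falls on the -1 side
```

Multiplying by c_i folds the two cases (positive and negative examples) into one test, which is the same trick the constraint on the later slides uses.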

More math

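• Dividing hyperplane: $\mathbf{w} \cdot \mathbf{x} - b = 0$

• Support vectors lie on the two margin hyperplanes $\mathbf{w} \cdot \mathbf{x} - b = 1$ and $\mathbf{w} \cdot \mathbf{x} - b = -1$

• The distance between these hyperplanes is $2/\|\mathbf{w}\|$, so to maximize the margin, minimize $\|\mathbf{w}\|$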
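The margin width 2/|w| follows from the standard point-to-hyperplane distance formula. A short derivation in LaTeX, using the same w, x, b as the slides:

```latex
% Distance from a point x_0 to the hyperplane w . x - b = 0:
d(\mathbf{x}_0) = \frac{|\mathbf{w} \cdot \mathbf{x}_0 - b|}{\|\mathbf{w}\|}

% A point on either margin hyperplane has |w . x - b| = 1, so it sits 1/||w|| from the divider:
d_{+} = d_{-} = \frac{1}{\|\mathbf{w}\|}
\qquad\Longrightarrow\qquad
\text{margin} = d_{+} + d_{-} = \frac{2}{\|\mathbf{w}\|}
```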

More math

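• For all $i$, either $\mathbf{w} \cdot \mathbf{x}_i - b \ge 1$ (if $c_i = +1$) or $\mathbf{w} \cdot \mathbf{x}_i - b \le -1$ (if $c_i = -1$)

• This can be rewritten as a single constraint: $c_i(\mathbf{w} \cdot \mathbf{x}_i - b) \ge 1$

• Minimize $\tfrac{1}{2}\|\mathbf{w}\|^2$ subject to $c_i(\mathbf{w} \cdot \mathbf{x}_i - b) \ge 1$ for all $i$ (squaring $\|\mathbf{w}\|$ does not change the minimizer but makes the objective quadratic)

• This is a quadratic programming problem and can be solved in polynomial time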
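A minimal Python sketch that checks the constraints and objective for a few hand-picked candidate (w, b) pairs on a hypothetical two-point dataset. It does not solve the QP (a real solver searches over all w and b); it only illustrates that, among feasible hyperplanes, the one with the smallest ½|w|² has the widest margin.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def feasible(w, b, data):
    """Hard-margin constraints: c_i * (w.x_i - b) >= 1 for every training pair."""
    return all(c * (dot(w, x) - b) >= 1 for x, c in data)

def objective(w):
    """QP objective (1/2)||w||^2."""
    return 0.5 * dot(w, w)

def margin(w):
    """Distance 2/||w|| between the hyperplanes w.x - b = 1 and w.x - b = -1."""
    return 2.0 / math.sqrt(dot(w, w))

# Hypothetical two-point dataset and hand-picked candidate (w, b) pairs.
data = [((1.0, 1.0), +1), ((-1.0, -1.0), -1)]
candidates = [((1.0, 1.0), 0.0), ((0.5, 0.5), 0.0), ((0.4, 0.4), 0.0)]

# Among the feasible candidates, the smallest objective gives the widest margin.
best = min((cand for cand in candidates if feasible(cand[0], cand[1], data)),
           key=lambda cand: objective(cand[0]))
print(best)  # ((0.5, 0.5), 0.0)
```

Here w = (0.4, 0.4) violates the constraints (each c_i(w·x_i − b) is 0.8 < 1), w = (1, 1) is feasible with margin about 1.41, and w = (0.5, 0.5) is feasible with margin about 2.83, the full distance between the two points, so both points are support vectors.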

A few more details

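• So far, we have assumed the data is linearly separable

– To move to higher dimensions, replace the dot product with a kernel function; the kernel may correspond to a nonlinear transform of the data

– The Radial Basis Function (RBF) is a commonly used kernel: $k(\mathbf{x}, \mathbf{x}') = \exp(-\gamma\|\mathbf{x} - \mathbf{x}'\|^2)$ [need to choose $\gamma > 0$]

• So far, no training errors were allowed; with a soft margin:

– Minimize $\tfrac{1}{2}\|\mathbf{w}\|^2 + C\sum_i \xi_i$

– Subject to $c_i(\mathbf{w} \cdot \mathbf{x}_i - b) \ge 1 - \xi_i$ and $\xi_i \ge 0$

– $C$ is the error penalty; the slack variables $\xi_i$ measure how far each point violates the margin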
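A minimal Python sketch of the two formulas above for the linear case: the RBF kernel, and the soft-margin objective evaluated for a given (w, b). It assumes each slack ξ_i takes its smallest allowed value, max(0, 1 − c_i(w·x_i − b)) (the hinge loss). It evaluates the objective; it does not minimize it. Function names and data are hypothetical.

```python
import math

def rbf_kernel(x, z, gamma):
    """RBF kernel k(x, z) = exp(-gamma * ||x - z||^2); gamma > 0 must be chosen (e.g. by cross-validation)."""
    sq_dist = sum((p - q) ** 2 for p, q in zip(x, z))
    return math.exp(-gamma * sq_dist)

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def slacks(w, b, data):
    """Smallest xi_i >= 0 satisfying c_i * (w.x_i - b) >= 1 - xi_i (the hinge loss)."""
    return [max(0.0, 1.0 - c * (dot(w, x) - b)) for x, c in data]

def soft_margin_objective(w, b, data, C):
    """Primal soft-margin objective (1/2)||w||^2 + C * sum_i xi_i."""
    return 0.5 * dot(w, w) + C * sum(slacks(w, b, data))

# Hypothetical data: two well-separated points plus one -1 point on the +1 side.
data = [((1.0, 1.0), +1), ((-1.0, -1.0), -1), ((0.5, 0.5), -1)]
w, b = (0.5, 0.5), 0.0
print(slacks(w, b, data))                      # [0.0, 0.0, 1.5]
print(soft_margin_objective(w, b, data, C=1))  # 1.75
```

Raising C makes the violating point's slack dominate the objective, which pushes the optimizer toward fitting the training data more tightly; lowering C tolerates the error in exchange for a smaller |w| and a wider margin.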