ii. data as a corporate resource

advertisement

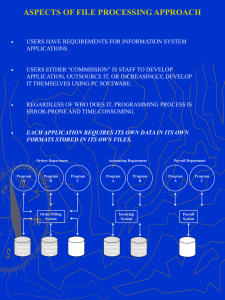

BACS 485 03/21/16 7:19 PM 485_Intro.doc DATABASE PROCESSING CONCEPTS I. PRIMARY POINTS OF LECTURE SERIES View data as a valuable corporate resource Be familiar with the concept of IRM (information resource mgt) Basic components of database system Comparison of File Processing and Database Processing Advantages/Disadvantages of File and Database Processing Costs and Risks of DBMS approach II. DATA AS A CORPORATE RESOURCE In the future, those companies that succeed will be those that treat data as a valuable corporate resource. In the past data was thought of as the private domain of functional areas. This was a natural out growth of current technology. More formally stated, this is the concept of IRM... A. Information Resource Management 25 years ago this aspect of database was not taught. You just jumped right into the technical aspects. Now, not so. Now, database must be viewed in the larger context as part of the corporate information resource. One of the primary purposes of information is to support managerial decision making. Success of company depends on the quality (and speed) of management decisions. This is a fairly new way to view database. Greatly affects the way systems are designed and thought of. The main point is that you need to design databases within the overall context of the company (no longer can you ignore corporate structure and build strictly to functional specifications). III. FILE VERSUS DATABASE PROCESSING ENVIRONMENTS Current Database systems are the end result of 40 years of evolution. Knowing the history of computer systems helps to understand why things are as they are and where they may be going. Also it helps avoid re-making the same mistakes again. Copyright Jay Lightfoot, Ph.D. 1 A. Brief History In the beginning there were no computers. Businesses relied on paper systems and written procedures. Some very clever methods were devised (and are still used today). The problem with these systems were that they: (partial list) could not grow infinitely were not responsive or flexible enough depended upon dedicated (life-long) workers do not provide companies with competitive edge One of the results of these manual systems was that each functional area "owned" their data and became very possessive of it. This was OK for then. When computers were introduced it gave a way to automate manual tasks. (In fact, the title "computer" used to apply to a person that did manual computations.) Allows company to: speed up tasks (without infinite personnel) get better task integrity (accuracy) grow companies bigger This was a big deal. Much fan-fare. People expected lots. The big companies were the first to buy computers. The early computers were very expensive and not very user-friendly. They cost so much that they were applied to the areas where the chance of success was best (i.e., payback of the huge investment). The best area to get quick pay-back (and get lots of good PR) was to automate independent application systems to automate jobs of clerical workers and solve a specific problem. For example, payroll, accounts payable, purchasing,... This gave early computer systems a bad reputation for putting people out of work (they still have that reputation to some people). The early system designers tried to program the computer exactly the same as the manual system. This was easier, but tended to prolong the fiction of functional data ownership. The system and its data were owned by the department responsible for the function. The data was considered a private resource. Computer technology advances and systems grow more powerful, but the functional users still consider data to be a private resource. (A fiefdom). BAD!!! Also, each time a new application was developed, new files were defined. It took years for people to realize (and really start to do something about) treating data as a corporate resource that needs to be managed. This was one of the factors that started the database movement. Imagine situation where money is not managed in company. Each department has a pile they control. No sharing, no cross-department information sharing about the money. If big purchase came up, no way to consolidate money to make it. It is ridiculous to consider, but that is exactly the situation of the traditional approach. Copyright Jay Lightfoot, Ph.D. 2 Let's take a closer look at the traditional file approach..... B. Traditional File Processing Approach In the traditional approach, computer systems are designed for and used by individual departments for specific tasks. Each department maintains and "owns" their own data. Thus, little sharing takes place between departments (only that sharing that is mandated and required). Each new system usually builds its own data files rather than try to access existing ones because to tough to coordinate modifications and requirements. A central DP shop provides the programming service for the functional departments (but it is still the departments data). The DP shop must somehow manage all programming needs for the whole company without the benefit of a global plan or centralized authority. Very tough to do! Large backlogs characterized this setup. Impatient departments sometimes built their own mini-DP shop to handle their own programming needs, but that seldom worked because existing data sharing goes away. 1. Advantages There are 2 transitive advantages to the traditional approach (but they go away quickly). Be aware that I am not defending these methods. I just want you to realize that when these systems initially developed, there were valid reasons (advantages). 1st advantage... a) Simple (natural) The traditional file processing method was the easiest way for systems to develop given the current state-of-the-art. Those people in the 50's weren't stupid. They realized that the situation was not ideal, it's just that the computer technology was limited (e.g., tapes and cards were available, not disks). (Later, it stayed popular because of momentum and the expense to change.) Also, the systems that were automated were not the real money-makers for the company. Marketing and production make money. Payroll and General Ledger just keep track of it. Lots easier ways to improve company performance elsewhere than by improving computerized accounting systems (e.g., work on mission critical manual systems). So, for the time period, the traditional systems were the natural way to start. Not so today! 2nd advantage of traditional systems... Copyright Jay Lightfoot, Ph.D. 3 b) Cheap (initially) Building integrated systems is much more complex than building stand-alone (traditional) systems. Thus, it costs more to build integrated systems than to build stand-alone ones. Computers were normally bought to save money, so it did not make sense to spend your "profit" on unnecessary integration. Integration was viewed as unnecessary because they had gotten along fine without it in the manual systems (or so they thought). Once a computerized manual system was working, it was cheaper to leave it alone than to severely modify it. (They did not know yet that 80% of all programming is maintenance.) 2. Disadvantages of traditional systems The disadvantages of the traditional file approach far outweigh the "so called advantages". This is why database was developed. 1st disadvantage.... a) Distributed Ownership In traditional systems each functional department "owned" its own data. This is the "my data" syndrome. They feel it is their personal property (like office furniture) to do with as they please. Often they are hesitant to let anyone else in the company see it (because then their power would be diminished) Merging data became a political process. Does not help global competitiveness. 3 negative aspects to distributed ownership..... (1) Uncontrolled Redundancy Each new system required new files to be built (since no one would share existing data even if technology existed to make it possible). Lots of data was stored multiple times. Once for each system that needed access to it. This was bad because: disk space is wasted data must be input several times data must be updated several times (multiple occurrences) data was inconsistent (next section) Copyright Jay Lightfoot, Ph.D. 4 Everyone realized that redundancy was a problem, but not one knew how to fix it short of giving up control of "their data". 2nd aspect..... (2) Inconsistent Data This is the worst of the problems -- it requires special attention. When you store data several times (several places) you WILL have inconsistencies not matter how careful you are. The worst case is where you miss an update. Thus, you have two (or more) versions of data... which is correct? A more subtle inconsistency is when the update is slightly incorrect (misspelling of name or different abbreviation) or the timing of the update is incorrect (raise after P/R period...). Since each copy of data is "owned" by a different group, it is really no ones responsibility to make sure that it is globally correct. Responsibility falls between the cracks. This allows multiple versions of the truth to be stored in the computer. This undermines the users confidence in a database if it appears to give incorrect answers. It can also can mess up customer relations if you send goods to the wrong address or bill for an incorrect invoice. 3rd negative aspect of distributed ownership.... (3) Islands of Information A problem that redundancy and the "my data" syndrome causes is that groups develop their own data environments that are independently maintained. These users become self-sufficient and cut off from others. They are not willing to share or receive information. Not only redundant data, but redundant tools and effort too. 2nd disadvantage of traditional systems... b) Limited Data Sharing Since everyone has their own copy of the data, no one shares. This wastes disk space and perpetuates the cycle of independent file systems. For example, you are designing a new system that could use data already defined and maintained by other departments. Since they are unwilling to share (and it would require some rewrite of their (working) system) you decide to take the easy way out and just re-enter the data. This is politically easier and simpler for the moment, but is very short sighted. It delays fixing the problem. 3rd disadvantage of traditional systems... Copyright Jay Lightfoot, Ph.D. 5 c) Inflexibility Independent islands of information do not easily cooperate. When changes are needed it becomes a political nightmare. People have to call in favors and make deals to get things done. If quick action and responsiveness are required -- forget it. This is where traditional file systems really start to hurt companies. It causes loss of business to more efficient competition. In addition, changes to file structures cause a ripple effect in all the programs that access that data. For example 9-digit zip codes were a big headache. Independent file-based systems tend to be designed for a specific purpose. They do not easily allow un-planned processing. The 1st negative aspect of inflexibility is... (1) No Data Independence This is very important. When data definition or processing changes in a traditional file system it requires many modifications. The reason for this is similar to the data redundancy discussion above. In this case, the data definition is redundantly stored. In addition, the way the data are processed is also stored redundantly. So, you have to make changes in many places to accommodate a "simple" data change. When you can make data modifications without changing programs you have "data independence". 2nd aspect of inflexibility... (2) Ad Hoc Queries Constrained Another result of inflexibility is that it is difficult to get information in any format other than the standard format. For instance, say a manager is asked (of the top of her head) "how many red widgets were sold on the east cost to people under 12 years old?". Chances are that a special report does not exist with this information. (Called "ad hoc" query -- one time only query). To get the information a special report must be generated. Programmer time is usually backlogged for months so it is easier to pull the information by hand from old reports. This defeats the purpose of the computer. The data is out there and would be fairly easy to collect if proper tools existed. Recently, tools have been built to help fix this problem (called 4th generation languages). These make it easier Copyright Jay Lightfoot, Ph.D. 6 for non-programmers to do ad hoc queries, but still requires in-depth knowledge about where and how the data are stored. Most managers don't know (or care to know) this. 4th disadvantage of traditional system... d) Poor Standard Enforcement Another result of distributed ownership. Standards are things like: size of data fields (5 or 9 digit zip codes?) abbreviations used (AV. or AVE. for avenue?) timing of changes (when do you close the books?) who can access data and when? how do you name data fields? how do you calculate accounting discounts? what operating system do we use (UNIX or DOS?) what file format is best (ASCII or EBCDIC?) etc… Standards are one of the main things that make a group of independent file systems into a corporate database. Traditional file systems have very poor (if any) standard enforcement primarily because no one is in charge of the data. No rules exist, so you get a free-for-all. 3 aspects to poor standards enforcement... (1) Processing Processing standards tell groups how and when to transform data into information. It can include the obvious "how do you calculate accounting discounts" but can also include more global questions like: what hardware is used what software is used what network protocol is used etc..... Traditional file systems develop in the absence of any centralized control, hence there are few processing standards enforced unless people informally get together or unless there is a strong push from management towards commonality. Copyright Jay Lightfoot, Ph.D. 7 2nd aspect of poor standards enforcement... (2) Storage The way data are stored also requires standards. The obvious storage standards include: how long are data fields what type are the data fields what are your abbreviations Some more subtle standards include: what type of disk drive do you use what encoding scheme is used is data compression or encryption used Traditional file based systems do not generally have any storage standards because no central control exerted to make them conform. 3rd aspect of standards enforcement... (3) Naming Finally, naming schemes are a type of standard not often found in traditional file based systems. Because each group builds their files in isolation, they way data are names is inconsistent. This means that even if users wanted to share data they would have to figure out what it is called first. Last disadvantage of traditional systems... e) Excessive Costs Traditional file based systems cost more in the long run. They may seem cheaper when you are developing them, but the combined effect of all the previously stated problems eventually adds up. I group the cost problems into 2 general categories... (1) Low Programmer Productivity All the complexity of determining file names and working out standards, and dealing with data independence problems results in LOW PROGRAMMER PRODUCTIVITY. The complexity Copyright Jay Lightfoot, Ph.D. 8 of trying to share data slows down programmers. Also, if they have to "reinvent the wheel" every time they do something it results in wasted effort. Finally, programmer productivity is lower because much time is spent in maintenance (next topic). (2) Excessive Program Maintenance Lack of data independence and the inflexibility of traditional systems means that LOTS of effort is spent just to keep the systems working. Statistics show that 70% to 90% of all programmer effort is spent maintaining existing programs -- not building new systems. This does not advance the state of the company, just keeps them even. Everyone suffers from the high cost of maintenance. Programmers hate it (lousy job), managers dislike it because it is not productive, users dislike it because of the backlogs. BAD!! This is a prime factor in low programmer productivity. Can be directly tied to lack of data sharing and centralized control. C. Database Approach 1. Life Cycle Connection Nolan has divided the life cycle of organizations information systems into 6 phases. STAGE 1: INITIATION - DP first introduced STAGE 2: CONTAGION - Spreads rapidly automating basic functions STAGE 3: CONTROL - Costs go up so more control needed STAGE 4: INTEGRATION - Pull together independent systems (try) --> STAGE 5: ARCHITECTURE - Develop enterprise-wide data model (plan) STAGE 6: DISTRIBUTION - Distribute data and processing The traditional approach occupies stages 1 through 4. As the organizational information system goals advanced, more money was spent. Traditional DP processes were concerned with automating existing systems. About stage 4 (integration) companies started experimenting with database approach. This approach combines (integrates) the islands of information into a useful centralized data repository. Note that it initially saved money (disk space, documentation, specification...) but gradually grew more expensive as you require more from your information system. The point is that database approach is a natural outgrowth to file processing system. An evolution. As managers expect more from their information systems, the database approach makes more sense. As database approach comes on-line, things become possible that could not be done without database. This makes going back to the old ways impossible (if you want to stay competitive). Copyright Jay Lightfoot, Ph.D. 9 Let's look at the characteristics of the database approach in detail... 2. Database Approach Characteristics As hinted above, the database approach does away with the "my data" syndrome. It collects all data into an organized repository. The approach brings a new way of thinking and some new tools that permit the control of redundancy, improved flexibility, and centralized data control. 3 features characterize the database approach: 1. Shareability of data 2. Centralized data management and control 3. Adaptability Here's a summary of the approach... The database approach begins by building a model of the organization (called an enterprise model). The model summarizes the structure of the data used (and needed) in the company. It shows the data entities and the relationships between them. (In real life, you seldom have a global enterprise model, rather you have a collection of smaller system models. Their collected whole is equivalent to the enterprise model idea.) The structure is carefully built so that all users needs are taken care of with a minimum of redundancy. (Thus, sharing and redundancy are both solved.) Next, you describe this structure to a piece of software (called a DBMS) that can maintain both it and data in its format. Finally you load the data and use the DBMS to access and maintain the it and its structure. It is the role of the DBMS to retrieve and store the data. Also the DBMS does its best to make sure the data are accurate (not necessarily correct). It is all very complex, more so that this would indicate. There are certain advantages to the database approach... 3. Advantages Basically, the advantages of the database approach fix the disadvantages of the traditional approach (on purpose). There should be no surprises here. The 1st advantage ... Copyright Jay Lightfoot, Ph.D. 10 a) Data Integration (conceptual model) For the first time, companies may see exactly what data they have and need. This sounds simple, but it is surprising how many companies don't know what data they have and require. Knowing this can lead to some efficiencies (e.g., why are we storing this information that no one wants?). Allows streamlining of requirements and systems if effort is applied. The 2nd advantage of database approach... b) Controlled Redundancy Very obvious. If you only store it once then you do away with redundancy and its related problems. Example: If you only store an employee address in one place then a single update is effective for all users. You cannot get rid of ALL redundancy. There are times when it is a good idea to keep redundant data (We'll talk about that later in the semester), but you can minimize it. This has a side benefit of saving disk space and requiring fewer maintenance programs. 3rd advantage of database approach... c) Data Sharing Since it is only stored in one place, you must share it. Actually, sharing is a very important advantage on its own. It means that you truly are managing your data and treating it like a company asset. However, when data are shared, it brings on some new problems not present in traditional systems. Like concurrency control. We'll talk about that later. 4th advantage... d) Data Integrity Your book calls this "data consistency". There is more to it than that. It refers to the problem of inconsistent data in file based systems. If data redundancy is reduced then data is only stored in one place, so the multiple inconsistent version problem is fixed. Integrity is the assurance that the data are: accurate complete Copyright Jay Lightfoot, Ph.D. 11 timely reliable It also deals with the notion that the data accurately represents the real world system it attempts to model. In other words, integrity is concerned with obvious things like: names spelled correctly proper account number all social security numbers are unique And also concerned with less obvious things like: customer discount applied to proper customer employee does not make more money than their boss Data integrity is a very important part of a DBMS (and a part that most do not do very well). 5th advantage database approach... e) Standard Enforcement Standards enforcement is an important (and often ignored) part of the database approach. Since you have centralized control of data it is easier to enforce standards. For example: naming standards (e.g., first 3 characters is system name) field definition standards (MM/DD/YY for dates) usage standards (no payroll data access on weekends) processing standards (common encryption algorithm) etc... The DBA is the person(s) responsible for standard enforcement. If they do a through job then the database if much more usable. 6th advantage... f) Centralized Control Centralized control is sort of a side benefit of the database approach (not the direct reason for using the approach, but a side effect.) Most of the benefits of centralized control are themselves advantages of the database approach. Centralized control provides some benefits that you might not immediately think of. For instance, 1st benefit of centralized control... Copyright Jay Lightfoot, Ph.D. 12 (1) Security/Privacy You can better control data security and privacy if you have centralized control. Security is the assurance that data is kept from unauthorized users. Privacy is the assurance that data is not misused by those users. (Similar concepts, but they are not identical) Having all the data in one spot within the conceptual model makes this easier. 2nd benefit of centralized control... (2) Integrity Integrity = accurate, complete, timely, reliable As mentioned before, integrity is much easier if you have a centralized body in charge of the data and the standards. Traditional file systems developed integrity through consistent "good programming practices" and voluntary cooperation among departments. This, in part, explains why some companies were more successful at system integration than others using similar equipment and doing similar processing (i.e., the informal network was better). 3rd benefit of centralized control... (3) Backup/Recovery Backup and recovery are often not done properly in file based systems because they are not thought of as necessary. (Amateur systems managers don't realize the importance of backups.) Ironically, backups are about the most important thing that you can do. When departments handled their own data, traditional based backup and recovery was done in a spotty and inconsistent manner. Some people were good at it and others were not. The reason that more problems did not occur was because companies usually only had 1 computer center (for all their independent file-based systems). The computer center handled backup and recovery without the department's knowledge. Backup and recovery are much easier when database methods used because the DBMS can handle the details (consistently). 4th benefit of centralized control... (4) Standards Obviously, standards are easier when they are centrally dictated. You cannot have standards without some central body (formal or informal) coming up with them. Copyright Jay Lightfoot, Ph.D. 13 7th advantage of the database approach... g) Flexibility/Responsiveness The database approach is much more flexible because you can access data without concern with where or how it is stored. This idea is very close to the idea of data independence. Some books also mention that database systems provide multiple retrieval paths to each data item. This is true (but misses the prime point about data independence). By default, a flexible system is also responsive. That is, you can make changes more quickly because there is less to change. (Less detail to worry about). Specifically, the flexibility of the database approach helps with... (1) Ad Hoc Queries Query languages have been developed that non-programmers can use to make one-time-only queries (ad hoc). In this way they can satisfy their unique information needs without using traditional programming services (i.e., avoid the backlog). GOOD!! However, it still does not fix the problem where users must have in-depth knowledge of data names and where it is stored. 4th generation languages (which is what most DBMS query tools are) help, but not quite as good as the sales literature promises. 2nd benefit from better flexibility... (2) Multiple User Views A side benefit of the database approach is called "views". A view is a single users perspective of the database. Briefly, users do not need to see the whole database. They typically only need to see a small portion (the same portion that the traditional file would have given). You define this database subset with the DBMS mechanism called "view definition". (We'll do this later.) Views enhance flexibility because they have great data independence. You can modify the underlying data without disturbing the view and change the view definition without modifying the programs that use it (with one exception -- you can’t remove data that the program directly uses). 8th advantage of the database approach... Copyright Jay Lightfoot, Ph.D. 14 h) Data Independence Traditional programming builds the data definition and the data processing into the same unit (called a program). This means that whenever the data definition changes, you have modify each program that uses it. BAD!!! The database approach separates the data definition and the data access methods from the programs that process the data. This provides data independence (that is the ability to change the data structure without modifying programs that use the data). 9th advantage of the database approach... i) Cost Savings The database approach saves money in the long run. However, as we will see later, a good DBMS is expensive, so this benefit is weak and a little harder to prove. Database cost savings come from 2 general areas... (1) Easier Application Development Much of the work in traditional application development is defining the data and writing code to access it. Also, a good deal of traditional application programs were devoted to integrity checks. The database approach minimizes this because the data are already defined and the access is up to the DBMS. Also, the integrity constraints can be built into the DBMS. This speeds up application development. However, it slows down analysis and building time because you have to do all that work up front. (No free lunches). Code re-use is another advantage of the database approach. In some systems, you can build generic routines and have the DBMS store them for later re-use. No need to reinvent the wheel and test these routines once you know they work. This idea was common in traditional file-based systems also, but is easier in database approach. 2nd area where you get cost savings from DBMS approach... (2) Reduced Maintenance Since the data, the data definition, and the application programs in a DBMS are all independent, you can change any without affecting the others (data independence). This makes program maintenance (and testing) easier and quicker (less money). Old-style systems forced the programmer to be concerned with "side effects" (unplanned effects of a program change). It required extensive planning and testing for even simple modifications (big $). The database approach does not totally do away with the problem of maintenance side effects, but at least it Copyright Jay Lightfoot, Ph.D. 15 minimizes them. The end effect is better programmer productivity. All is not rosy for database. There are some problems.... 4. Disadvantages This semester we will not dwell on the problems of the database approach. The advantages of database greatly outweigh the problems. However, I want you to have a realistic view of DBMS from this course (we are not "selling" DBMSs here.) a) Specialized Personnel and Hardware (big $) Although the database approach is cheaper in the long run due to improved programmer productivity (and other reasons), it has some up-front costs that are not trivial. These costs are often overlooked by anxious users. You don't want to be surprised. For example... (1) DBA, DA, Special Programmers If you plan on using existing people, you will have to send them to training classes on your DBMS product. These classes cost between $600 to $900 a day (plus expenses). Classes can last weeks. You can also do on-site training, but that is also very expensive. If you want to hire people already trained, they cost more than normal programmers (because they have had the training classes or experience). Either way, you cannot avoid the training cost and subsequent loss of productivity while they learn. In addition, you will have to hire (or train) a database administrator (DBA). This is a very specialized person (or group of people) and they are not cheap. ($50,000 to $150,000 per year normally.) 2nd area of increased costs... (2) Bigger Disk, CPU, Memory As far as hardware is concerned, you will probably have to upgrade your CPU, buy more fast RAM, and buy more disk drives. This costs big $. This is in addition to the cost of the DBMS itself. (They typically cost between $15,000 and $100,000 (or more). 3rd reason for increased cost... Copyright Jay Lightfoot, Ph.D. 16 (3) Potentially Slower Processing Once they spend all this money, many are surprised that the overall system throughput is slower than with their old file-based system (even with the beefed up CPU). The reason for this is that the machine is having to do a lot more things (e.g., concurrency control, security, integrity, recovery, ...). You want these things, it is just that people are surprised that they slow down the machine. The real gain in DBMS comes in programming cost savings better data consistency data sharing synergy. 2nd disadvantage of the database approach... b) Data Concurrency Problems Along with the advantages of data sharing come several problems. These problems are unique to systems that allow more than one functional area to use the same data at the same time. 1st data sharing problem... (1) Deadlock / Livelock When data are shared, the DBMS must be careful to only allow one user at a time to change it. If multiple users could change at the same time then it would be chaos. The DBMS does this by giving sole access to update data to one user at a time. Anybody else that needs it must wait until the first is through. Deadlock is a condition where 2 (or more) users are each waiting for the other to release the same piece of data. Neither can continue until the other gives up the needed data. Deadlock (a.k.a. deadly embrace) will continue until you turn the computer off or until one of the users "gives in". For example, imagine a 4-way stop sign with two cars waiting for each other. Traditionally the DBMS looks for deadlock and forces one user to release their data and start over. Livelock is a condition where a user cannot proceed for a long time because they are never given access to needed data. This is different from deadlock in that they do not have anything that anyone else wants. They are just "low on the priority list" so they never get access to a popular piece of data. Again, the DBMS has ways to fix this problem. Both problems are directly related to data sharing (and use lots of CPU cycles). The 2nd problem directly related to data sharing...(concurrency) Copyright Jay Lightfoot, Ph.D. 17 (2) Transaction Rollback One way the DBMS fixes deadlock is through "transaction rollback". Basically, a rollback is the process of "undoing" everything that a user has done. (A "transaction" is an arbitrary unit of work on the database.) When the DBMS selects a "victim", they UNDO everything that user has done (including releasing locks on data items). Once this is done then other users can continue. Rollbacks use lots of CPU cycles also and are normally result of data contention (via data sharing). 3rd problem of the database approach... c) Data Security Problems One of the few areas where the traditional approach was better. When you combine all the traditional file-based systems you also combine all the security concerns of that data. Strangely enough, the traditional file based approach had fairly good security because people guarded their data very carefully. Some of the security problems are not new (e.g., backup/recovery, authorization). They are just bigger. Other problems spring up specifically because a DBMS is present. For example, the potential for catastrophic failure. All in all, DBMS security is not new, just more intense. 1st aspect of DBMS security is failure potential... (1) Catastrophic Failure Possible When you put all your eggs in one basket, you risk losing them. Translation: Lack of redundancy can easily lead to losing everything if you are not careful. Not only are the data not redundantly stored, but the processing is also performed on single set of hardware. Dangerous! When you depend on one system (DBMS) to perform all data retrieval, storage, backup/recovery, integrity, ... then you are very vulnerable to a failure of that system. On a less catastrophic note, a single bad application can sometimes bring down the whole DBMS. This sort of thing rarely happened on old file-based systems. 2nd aspect of security problems present in DBMS... Copyright Jay Lightfoot, Ph.D. 18 (2) Elaborate Backup/Recovery This generally comes under the heading of security. You can provide backup tapes, backup disks, backup CPUs, ... all the way up to totally redundant sites. The more security you buy, the more it costs. This is not really that different from file-based systems since most of the big ones ran at a single hardware site. The problem is that all users are down when you do backups, not just a few functions. (Note, some fancy DBMSs, like ORACLE, do incremental backups so that users are not overly inconvenienced.) 4th disadvantage of the database approach... d) Organizational Conflict Shared database requires a consensus on data definition and ownership. This is where the DBA really earns their money. It takes a good politician to navigate this minefield. This is a political concern. Users from separate functional areas must agree on who owns the data, who can maintain it, when, how it is processed, distributed, .normally textbooks minimize the political aspects of data processing in the organization. You should remember that politics always follows large budgets and power. This is true in the DP area. The 5th database disadvantage... e) Complex Conceptual Design It is more complex to understand (and program) when you must be concerned with the entire enterprise model. DBMS programmers must be concerned with transaction interaction, timing, and a host of problems not present in old file-based systems. To solve this, few companies actually have a full enterprise model. Most have subsets of the model that link logically related sub-systems. This is a practical way to approach extreme complexity. It is not necessarily good, it is just the way things are until we have better tools to help the programmer deal with extreme detail. (e.g., CASE tools, SEEs, ...) 5th database disadvantage... f) Extra Cost of DBMS on Program Development Having a DBMS in place can slow down certain aspects of the system life-cycle. If you ever evaluate a DBMS, you should realize this. Copyright Jay Lightfoot, Ph.D. 19 1st of all you have testing difficulties... (1) Testing Difficulties You usually cannot test applications directly against the live database because it may damage it (again, all eggs in one basket...). This means that you must either make an extract of the full database or develop a sub-set of the database strictly for testing purposes. This takes time and disk space. It also may not adequately test the problems that often occur under "load" (multiple users). 2nd extra cost of program development... (2) Complex Programming Environment This is related to the "complex conceptual design" and "special programmers" topics above. Basically, DBMS products are more complex to use than file-based systems. It takes time to learn them. Also, a full enterprise model of a company is usually too complex for a single programmer to comprehend without aids (CASE tools, ...). Taken together, you lose some of your programmer productivity (short run) due to the complexity of the DBMS. All in all however, the advantages FAR OUTWEIGH the disadvantages. So the database approach is the way of the future. NEXT let’s examine the component parts of a DBMS.... IV. COMPONENTS OF A DATABASE SYSTEM A DBMS is not one big program. It is made up of several large complex sub-systems and a several groups of people (classes of users). To begin, the basic functions of a DBMS are: data storage, retrieval, and update a user-accessible catalog transaction support concurrency control services recovery services authorization services support for data communications integrity services services to promote data independence utility services (reorganize statistics, monitoring...) These diverse functions are handled by the collection of sub-systems discussed above. Copyright Jay Lightfoot, Ph.D. 20 1st component of DBMS A. Data (raw) The main purpose of a DBMS is to store and retrieve data. Obviously this is the most important component. There are 2 general categories of data: 1. actual (real) 2. virtual (derived) The actual data is stored on disk and can be read and written just as in traditional systems (although you don't worry about storage details) Virtual data is not actually stored anywhere. Rather, the derivation method is stored. When you require the information it is created from existing data. Example: Employee age would not really be stored in the database. Rather their birthday and the current day would be used to calculate it. In that way it would always be current. Another example is if you want a sum of a purchase order. The sum would not actually be on disk, rather it would be calculated "on the fly". 2nd component of DBMS... B. Structure (Repository, Schema) Raw data is not really very useful by itself. You cannot have any relationships among data items in raw data (just a bunch of data items). What is missing is the structure that ties the pieces together. We will be working with this all semester. It is a very powerful aspect of the DBMS approach. It is also one of the main complexities that must be dealt with. There are several basic concepts associated with structure: structure within "record" structure between "records" cardinality (1:1, 1:N, M:N) - nature of relationship degree (how many entities participate) generalization/specialization primary key uniqueness referential integrity user defined integrity rules A generic term for information about the structure of data is "metadata". In database terminology, the part of the DBMS that defines the structure is called the "schema". The Copyright Jay Lightfoot, Ph.D. 21 schema is stored in the DBMS component called the "catalog" or the repository. Again, we will get to most of this later, this is just an introduction. 3rd DBMS component ... C. DBMS The Data Base Management System (DBMS) is the generic name for the collection of subsystems used to create, maintain, and provide controlled access to data. They range in complexity from small PC-DBMS systems (Access, dBase IV, Rbase...) costing a few hundred dollars to large mainframe products (ORACLE, IBM DB2) costing several hundred thousand dollars. 1. DBMS Engine The central component of the DBMS. A module that provides access to the physical structure and the data. Also coordinates all of the other functions done by the DBMS. The central control module that receives requests from users , determines where the data is and how to get it, and issues physical I/O requests to the computer operating system. Also provides some misc. services such as memory and buffer management, index maintenance, and disk management. 2nd sub-component... 2. Interface Subsystem Provides facilities for users and applications to access the various components of the DBMS. Most DBMS products provide a variety of languages and interfaces to satisfy the different types of users and the different sub-systems that must be accessed. The following are common interfaces that are provided (some are missing in the smaller/cheaper products). Note that some DBMS combine the functions of several interfaces into a single sub-system (e.g., SQL is DDL, DML, and DCL combined). 1st interface component...(of 6) a) Definition Language (DDL) (structure) Used to define and maintain the database structures (e.g., records, tables, files, views, indexing, ...) Specifically DDL defines: all data items (type, specification...) all record types (tables in relational model) the relationships among record types (not in relational model) Copyright Jay Lightfoot, Ph.D. 22 user views (or subschemas) The DDL is used to define the conceptual database and turn it into a physical database 2nd interface component... b) Query Language (DML) Used to manipulate and query the data itself. Typically used by a host program or as ad hoc commands in interactive mode. For example, you could select a subset of data based upon some query criteria using the DML. In some database systems the DML also provides the commands to "navigate" the data structure. 3rd interface component... c) Control Language (DCL) Used to grant and revoke access rights to individuals (and groups). A "right" is the privilege to perform a data manipulation operation. For example, the DBA can grant a clerk the right to access and delete INVENTORY records (but not to update them). Another related concept is a database “role”. A role is a predefined set of access rights and privileges that can be assigned to a user. When the definition of the role changes, all users assigned to that role get the updated access rights. Again, not all DBMSs call the DDL, DML, and DCL separate interfaces. However, all 3 functions must be present in the DBMS. 4th interface component... d) Graphic Interface (QBE) Optional. Some modern DBMSs provide a graphical representation of the data structure (a table usually) that allows you to select which data items to query on and the conditions for selection. Normally this feature is found in Query By Example sub-systems of relational DBMSs. The graphic interface makes it easier for non-technical users to make complex queries. Also handy because it is a common interface that can cross diverse DBMS systems. 5th interface component... Copyright Jay Lightfoot, Ph.D. 23 e) Forms Interface Optional. A screen-oriented form is presented to the user. They respond by filling in the blanks. The result is that the DBMS uses the form that you design to input and output data. 6th interface component... f) High-Level Programming Language (interface) Programmers need to be able to access data via a high-level language. This could be old-style (3rd generation) languages like COBOL, FORTRAN, Pascal. Or it could be newer 4th generation languages like Toolbox, Mapper, Easytrieve, ... . Most big mainframe products (Ingress, Oracle, Informix...) include a 4GL as part of the DBMS. Studies have shown that application done using 4GLs result in a system that is up 10 to 20 times faster than using traditional 3rd generation languages. (Note, code does not run faster, it is just debugged sooner.) The interface usually is achieved by adding a few extra commands (verbs) to the standard language and having a pre-processor translate these verbs into DBMS calls (using the CALL format of the specific operating system). This method works well because the user does not need to know the complexities of operating system calls and the resulting code is somewhat portable. The interfaces to "old" languages is needed because there is a lot of code and programmer experience out there that cannot be ignored. 3rd sub-component DBMS... 3. Repository Dictionary Subsystem The word "repository" is used to relate back to the Information Resource Management concept mentioned earlier. The data in the database should be treated as a corporate resource. This resource must be managed. The repository is more than a "data dictionary" or "catalog". It is the central place that you store: system documentation data structure project life cycle information conceptual model information etc… CASE tools use it extensively. A DBMS system component must be present to manage and control access to the repository. To a certain extent, the DCL does part and CASE tools do part. Copyright Jay Lightfoot, Ph.D. 24 It provides facilities for recording, storing, and processing descriptions of an organization's data and data processing resources. Pretty new idea (still being defined by industry). 4th sub-component... 4. Data Integrity Subsystem Provides facilities for managing the integrity in the database and the integrity of metadata in the repository. There are 4 important functions: intrarecord integrity - enforce constraints on data item values and types within each record in the database referential integrity - enforce the validity of references between records in the database user-define integrity - Business rules (arbitrary) that must be upheld (e.g., employee can't make more than boss). concurrency control - Assuring the validity of data when multiple users access simultaneously (get into more later). This is a traditional weak spot on most commercial DBMSs. 5th sub-component... 5. Security Management Subsystem A subsystem that provides facilities to protect and control access to the database. The 2 most important aspects of security are: securing data from unauthorized access protect it against disasters The first is done through passwords, views, and protection levels. Encryption is also widely used. The second aspect uses backups, logs, before and after images, disaster recovery plans, etc. 6th sub-component... 6. Backup/Recovery Subsystem Copyright Jay Lightfoot, Ph.D. 25 A subsystem that logs transactions and database changes and periodically makes backup copies of the database. This is done so you don't lose data in the event of a problem. There are different levels of problems that backup/recovery prepares for. They range from redoing a transaction that was rolled back due to a concurrency conflict (minor) to totally restoring the database after the computer center is destroyed (major). 7th sub-component... 7. Application Development Subsystem Optional. Provides facilities so that end users and/or programmers can develop complete database applications. Some use elaborate CASE tools as well as screen and report generators to create full applications with minimal work. Others help write code from sketchy specifications. In any event, this is an aid to non-technical users and to beef up programmer productivity. 8th sub-component... 8. Performance Management Subsystem The DBA needs some way to determine if the DBMS is performing well. These tools (often called "monitoring utilities") give the DBA information needed to tune DBMS performance. Example: A monitor utility can find data items that are accessed frequently enough to need an index. They can also be used to determine if a data item needs to be on a faster disk drive or possibly replicated. 4th DMBS component... D. CASE Tools Computer-Aided Software Engineering (CASE) tools are used for automating the software development and maintenance tasks. Basically, you use a computer program to automate the software life-cycle work. This is fairly new and not widely used in industry, but you will have some contact during your career. Depending upon the CASE tool, it can automate: feasibility analysis requirements definition logical design prototyping Copyright Jay Lightfoot, Ph.D. 26 programming and testing implementation maintenance In future, most large software projects will use CASE tools (probably hooked into the data repository subsystem). Some of the advantages of using CASE tools are: improved productivity in development improved quality through automated checking automatic preparation and update of documentation encouragement of prototyping and incremental development automatic preparation of program code from requirements definition reduced maintenance efforts 5th DBMS component... E. Application Programs These are the specific procedures (programs) that manipulate the data in the database. They are written for specific applications needed by the business. You don't buy these as part of the database. Some are fairly predictable (payroll, accounts payable, general ledger) while others could be quite arbitrary and specialized. the main point is that they are specifically written for the business using business defined requirements. The best thing that the DBMS can do to help is to provide tools to help write and maintain the programs. 6th DBMS component (ROLE)... F. Data Administrators The next 3 "components" could better be called "database roles". They are people that perform certain tasks. This includes the DBA and the DA. The two positions are responsible for the technical and political management of the DBMS and its use. The DBA is the person responsible for managing the organization's data. DBA has the ultimate authority and responsibility for ensuring that the data is secure, accurate, and timely. Specifically, the DBA does: build and maintain the data dictionary resolve disputes about data usage, control... design and maintain the conceptual and physical database monitor database for performance tuning perform backups and recoveries etc... Copyright Jay Lightfoot, Ph.D. 27 Sometimes the DBA position is held by a group of people and sometimes the technical side is handled by a Data Administrator (DA) while the political side is handled by the DBA. 2nd "role"... G. Systems Developers These are programmers that write the application code to meet specification. They are typically well versed in the use of the DBMS tools and in the function that they are programming. Seldom are system developers the same people that use the system. This partly due to the specialized nature of the work and partly due to a need to provide security by separating the users and the designers of the system. 3rd "role"... H. End Users Finally, the end users are those that have a need to access the data. Generally, end users are non-technical and do not use most of the DBMS components (at least they don't know they are using them). To the end user, the DBMS should look no worse than the file-based system that it replaced (or you haven't done you job correctly). Hopefully it looks better. Copyright Jay Lightfoot, Ph.D. 28