Assessment for Program Improvement, Accountability, and Research

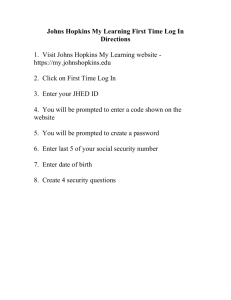


A Comprehensive Unit Assessment Plan: Program Improvement, Accountability, and Research
Johns Hopkins University School of Education
Faculty Meeting, October 26, 2012
Toni Ungaretti
Borrowed generously from Jim Wyckoff, University of Virginia, "Using Longitudinal Data Systems for Program Improvement, Accountability, and Research" (October 10, 2010).

Why Assessment?
Assessment is a culture of continuous improvement that parallels the School’s focus on scholarship and research. It ensures candidate performance, program effectiveness, and unit efficiency.

Overview
• Program Improvement: By following candidates and graduates both during their programs and over time after graduation, programs can learn a great deal about their own effectiveness.
• Accountability: Value-added analysis of teacher/student data in longitudinal databases is one measure of program accountability.
• Research: A systematic program of experimentally designed research can provide important insights into how to improve candidate preparation.
(Jim Wyckoff, 2010)

Program Improvement: Some Questions
• Who are our program completers (age, ethnicity, areas of certification)?
• What characterizes the preparation they receive?
• How well do they perform on measures of qualification, such as licensure exams?
• Where do our program completers teach or work, and what is their attrition? Are they meeting program goals and mission?
• How effective are they in their teaching or work? Ultimate impact!

Accountability: What Constitutes Effective Teacher Preparation?
• Programs work with school districts to meet the teaching needs of the schools where their teachers are typically placed.
• Programs are judged by the empirically documented effectiveness of their graduates in improving the outcomes of the students they teach.
• Retention plays a role in program effectiveness because teachers improve substantially in quality over the first few years of their careers.

Research: How Can Programs Add Value?
• Selection: Who enters, how does that matter, and how can we influence it?
• Preparation: What preparation content makes a difference?
• Timing: Does it matter when teachers receive specific aspects of preparation?
• Retention: Why is retention important to program value added, and what can affect it?

Johns Hopkins University School of Education Comprehensive Unit Assessment Plan

[Figure: Assessment Cycle – Close the Loop. Stages: SOE Vision & Mission; Program Goals (include Professional Standards); Student Learning Outcomes (what students learn); how they learn it; Student Learning Outcome Assessment (how we know that they learned); Assessment Tracking (how we track the learning); Analysis of Assessment Data (what we learn from a review of their learning); Unit and Program Improvement (what we change).]

Major Assessment Points/Benchmarks
• Admissions
• Mid-program/pre-internship
• Program completion (clinical experiences)
• Post-graduation, 2 years out
• Post-graduation, 5 years out

Assessments at these benchmarks include:
• Entry GPA
• GRE/SAT scores
• Admission demographics
• Personal essay
• Teaching experience
• Interview ratings
• Disposition survey
• Survey on diversity/inclusion dispositions (two benchmarks)
• Course assignments
• Course grades (two benchmarks)
• Content verification
• Academic plan
• Reflection on personal growth and goals
• Advisor/instructor input
• E-Portfolio evaluation (two benchmarks)
• Test results (such as PRAXIS II, CPCE Exam)
• Final comprehensive exam or graduate project
• University Supervisor and Cooperating Teacher evaluations
• Course and Field Experience Assessment results
• Student experience survey
• Exit interview or End of Program Evaluation
• Employer survey (two benchmarks)
• Alumni survey (two benchmarks)
• School partner feedback (two benchmarks)
• MSDE data linked to our graduates (two benchmarks)
• Data collected from graduates through surveys and/or focus groups

Alignment of Conceptual Framework to Assessment Plan’s Benchmarks
Conceptual Framework Themes/Student Outcomes
Key assessment points by theme:
• Knowledgeable in their respective content area/discipline: Admission, Mid-program, Program Completion, Post-Graduation
• Reflective practitioners: Mid-program, Program Completion, Post-Graduation
• Committed to diversity: Admission, Mid-program, Program Completion, Post-Graduation
• Data-based decision-makers: Mid-program, Program Completion, Post-Graduation
• Integrators of applied technology: Mid-program, Program Completion, Post-Graduation
Data points: Comprehensive Exams, Praxis Exams, Graduate Projects

[Figure: SOE Conceptual Framework Logic Model. Framing elements: The Johns Hopkins University Mission Statement, Conceptual Framework, The School of Education Vision, SOE Mission. Headings: Inputs, Initiatives, Domains, Resource Capability, Key Assessment Points (Admit; Midpoint or Internship; Program Completion; Post Grad 2 yr and 5 yr; for assessments see Table 3), Outputs, Student Outcomes, Impact. Entries include Content Experts, Effective Teaching, Excellent Professional Preparation, High Quality Research, Innovative Tools, Innovative Outreach, Knowledge, Disposition, Practice, Reflective Practitioners, Committed to Diversity, Data-Based Decision Makers, Integrators of Applied Technology, Education Improvement, and Community Well-Being.]

Program Assessment Plan
• Mission, goals, and objectives/outcomes aligned with SOE mission and outcomes
• National, state, and professional standards
• Assessments: descriptions, rubrics, benchmarks
• Annual process
  – Review of findings and recommendations for change
  – Review of assessments and adjustments
• Documentation of stakeholder input from all faculty, students, university supervisors, cooperating teachers, partner schools, MSDE, professional organizations, community members, and employers