AdKDD-08 - Consistent Phrase Relevance Measures v2


Scott Wen-tau Yih & Chris Meek

Microsoft Research

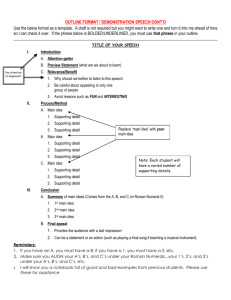

Keyword-driven Online Advertising

Sponsored Search

Ads with bid keywords that match the query

Contextual Advertising (keyword-based)

Ads with bid keywords that are relevant to the content

Delivering relevant ads requires solving phrase relevance measurement problems.

Query: flight to kyoto

Are these ads relevant to the query?

How relevant are the keywords behind the ads?

Given a document d and a phrase ph, we want to measure whether ph is relevant to d (e.g., p(ph|d))

Applications – judging ad relevance

Sponsored search (query vs. ad landing page)

Ad relevance verification

Whether a keyword/query is relevant to the page

Contextual advertising (page vs. bid keyword)

External keyword verification

Whether the new keyword is relevant to the content page

For in-document phrases, we can use a keyword extractor (KEX) directly

[Yih et al. WWW-06]

Machine learning model trained with logistic regression

Uses more than 10 categories of features

e.g., position, format, hyperlink, etc.

Example pages:

TrueCredit: Get immediate access to your complete credit report from 3 credit bureaus. Just $14.95 per month, including $25K ID Theft insurance. Contact TransUnion for more detail…

Digital Camera Review: The flagship of Canon’s S-series, PowerShot S80 digital camera, incorporates 8 megapixels for shooting still images and a movie mode that records an impressive 1024 x 768 pixels.

KEX: truecredit 0.879; transunion 0.705; credit bureaus 0.637; id theft 0.138; …

What if the phrase is NOT in the document?

Given a document d and a phrase ph that is not in d

Estimate the probability that ph is relevant to d

TrueCredit: Get immediate access to your complete credit report from 3 credit bureaus. Just $14.95 per month, including $25K ID Theft insurance. Contact TransUnion for more detail…

In-doc phrases (KEX): truecredit 0.879; transunion 0.705; credit bureaus 0.637; id theft 0.138; …

Out-of-doc phrases:

credit bureau report ?

credit report services ?

equifax credit bureau ?

equifax credit report ?

exquifax ?

equfax ?

trans union canada ?

…

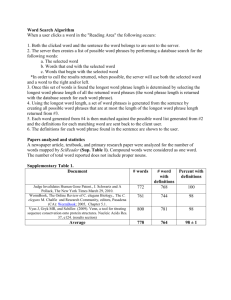


Challenges

How do we measure it?

Out-of-doc phrases lack the contextual information (e.g., position, format) that in-doc phrases have

Estimates should be consistent with the probabilities of in-doc phrases

Probabilities may need to be calibrated

Calibrated cosine similarity methods

Treat in-doc and out-of-doc phrases equally

Map cosine similarity scores to probabilities

Regression methods based on semantic kernels

Given robust in-doc phrase relevance measures, predict out-of-doc phrase relevance from the similarity between the target phrase and the in-doc phrases

Regression methods achieve better empirical results

Introduction

Relevance measures using cosine similarity

Out-of-doc phrase relevance measure using Gaussian process regression

Experiments

Conclusions

Step 1: Estimate sim(d,ph) → R

Represent d as a sparse word vector

Words in document d, associated with weights

Vec(d) = {‘truecredit’,0.9; ‘transunion’,0.7; ‘access’,0.1; … }

Represent ph as a sparse word vector via query expansion

Issue ph as a query to a search engine; let the result page be document d’

Vec(ph) ← Vec(d’)

sim(d,ph) = cosine(Vec(d),Vec(ph))
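Step 1 can be sketched in Python as follows, assuming each sparse word vector is a plain dict mapping term to weight. The query-expansion step (issuing ph to a search engine and vectorizing the result page d’) is not shown; `vec_ph` is a made-up stand-in for Vec(d’), and its terms and weights are hypothetical. `vec_d` reuses the weights from the Vec(d) example.

```python
import math

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse word vectors stored as {term: weight}."""
    # Iterate over the smaller vector for the dot product; missing terms have weight 0.
    small, large = (u, v) if len(u) <= len(v) else (v, u)
    dot = sum(w * large.get(t, 0.0) for t, w in small.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # an empty vector is treated as unrelated to everything
    return dot / (norm_u * norm_v)

# Vec(d) uses the weights from the slide; Vec(ph) is a hypothetical
# query-expansion vector for a phrase such as 'credit bureau report'.
vec_d = {"truecredit": 0.9, "transunion": 0.7, "access": 0.1}
vec_ph = {"credit": 0.8, "transunion": 0.5, "equifax": 0.4}
print(round(cosine(vec_d, vec_ph), 3))
```

Here only 'transunion' is shared, so the similarity is driven entirely by that one term.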

Choices of term-weighting schemes

Binary bag of words (SimBin), TFIDF (SimTFIDF)

Keyword Extraction (SimKEX)
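The slide names the weighting schemes but not their exact formulas. Below is a minimal sketch of the first two, assuming SimBin gives every present term weight 1 and SimTFIDF uses raw term count times a smoothed IDF; the smoothing `log((1 + N) / (1 + df))` and the corpus statistics are assumptions for illustration. SimKEX would instead use the KEX model's scores as term weights, which is not sketched here.

```python
import math
from collections import Counter

def binary_vector(tokens: list[str]) -> dict[str, float]:
    """SimBin-style weights: every distinct term gets weight 1.0."""
    return {t: 1.0 for t in set(tokens)}

def tfidf_vector(tokens: list[str], doc_freq: dict[str, int], num_docs: int) -> dict[str, float]:
    """SimTFIDF-style weights: term frequency times a smoothed inverse document frequency."""
    counts = Counter(tokens)
    vec = {}
    for term, tf in counts.items():
        # Smoothed IDF (an assumption): log((1 + N) / (1 + df)) is never negative.
        idf = math.log((1 + num_docs) / (1 + doc_freq.get(term, 0)))
        vec[term] = tf * idf
    return vec

tokens = ["credit", "report", "credit"]
doc_freq = {"credit": 10, "report": 100}  # hypothetical corpus statistics
print(binary_vector(tokens))
print(tfidf_vector(tokens, doc_freq, num_docs=1000))
```

Either vector can be passed to a cosine function to get SimBin or SimTFIDF; rarer, repeated terms dominate under TFIDF, while SimBin treats all terms alike.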

Step 2: Map sim(d,ph) to prob(ph|d)

Via a sigmoid function where the weights (here α) are pre-learned [Platt ’00]

$$f = \log\frac{\mathrm{sim}(d,ph)}{1-\mathrm{sim}(d,ph)}$$

$$\mathrm{prob}(ph \mid d) = \frac{1}{1+\exp(-\alpha f)}$$
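A minimal sketch of Step 2, assuming the mapping prob(ph|d) = 1 / (1 + exp(-α·f)) with f = log(sim / (1 - sim)) and a single pre-learned weight α (the name `alpha` is ours). Clipping sim to (eps, 1 - eps) is an added assumption so that f stays finite when the cosine is exactly 0 or 1.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def logit_feature(sim: float, eps: float = 1e-6) -> float:
    """f = log(sim / (1 - sim)); sim is clipped to (eps, 1 - eps) to keep f finite."""
    s = min(max(sim, eps), 1.0 - eps)
    return math.log(s / (1.0 - s))

def prob_from_sim(sim: float, alpha: float) -> float:
    """prob(ph|d) = 1 / (1 + exp(-alpha * f)) with a pre-learned weight alpha."""
    return sigmoid(alpha * logit_feature(sim))

print(prob_from_sim(0.3, 1.0), prob_from_sim(0.3, 2.0))
```

With α = 1 the mapping returns sim unchanged; α > 1 pushes probabilities toward 0 or 1, and α < 1 pulls them toward 0.5. This form has no bias term, so sim = 0.5 always maps to 0.5.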

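The sigmoid function can be used to combine multiple relevance scores, where f_i is the log-odds f computed from the i-th similarity score:

$$\mathrm{prob}(ph \mid d) = \frac{1}{1+\exp\left(-\sum_{i=1}^{m}\alpha_i f_i\right)}$$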

SimCombine: Combine SimBin, SimTFIDF & SimKEX
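The slides say the weights α_i are pre-learned but not how. Since prob(ph|d) = 1 / (1 + exp(-Σ_i α_i f_i)) is a logistic model, one plausible sketch is plain logistic regression fitted by batch gradient descent on labeled document-phrase pairs. No bias term is fitted, to match that form; the learning rate, epoch count, and training rows below are all hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_weights(features: list[list[float]], labels: list[int],
                lr: float = 0.1, epochs: int = 2000) -> list[float]:
    """Learn alpha_1..alpha_m by batch gradient descent on the logistic (cross-entropy) loss."""
    m, n = len(features[0]), len(features)
    w = [0.0] * m
    for _ in range(epochs):
        grad = [0.0] * m
        for f, y in zip(features, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            for i in range(m):
                grad[i] += (p - y) * f[i]  # d(loss)/d(alpha_i) for one pair
        w = [wi - lr * gi / n for wi, gi in zip(w, grad)]
    return w

def sim_combine(f: list[float], w: list[float]) -> float:
    """prob(ph|d) for one pair, given its log-odds features f_i and learned weights."""
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)))

# Hypothetical training pairs: each row holds the log-odds features derived from
# [SimBin, SimTFIDF, SimKEX]; label 1 = relevant, 0 = irrelevant.
train_X = [[1.0, 1.5, 2.0], [0.5, 1.0, 1.2], [-1.0, -0.5, -1.5], [-0.8, -1.2, -0.3]]
train_y = [1, 1, 0, 0]
w = fit_weights(train_X, train_y)
print(w, sim_combine([0.2, 0.4, 0.9], w))
```

In practice the weights would be fitted on held-out labeled pairs (e.g., within cross-validation folds) rather than on toy rows.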

Out-of-doc phrase relevance measure using Gaussian process regression

TrueCredit: Get immediate access to your complete credit report from 3 credit bureaus. Just $14.95 per month, including $25K ID Theft insurance. Contact TransUnion…

Relevant in-doc phrases: TrueCredit, TransUnion

Out-of-doc phrases: credit bureau report vs. Olympics

Which out-of-doc phrase is more relevant?

Step 1: Estimate probabilities of in-doc phrases

KEX(d) = {(‘truecredit’,0.88), (‘transunion’,0.71), (‘credit bureaus’,0.64), (‘id theft’,0.14)}

Step 2: Represent each phrase as a TFIDF vector via query expansion

x1=Vec(‘truecredit’), y1=0.88; x2=Vec(‘transunion’), y2=0.71

x3=Vec(‘credit bureaus’), y3=0.64; x4=Vec(‘id theft’), y4=0.14

Step 3: Represent the target phrase ph as a vector

x = Vec(ph), y = ?

Step 4: Use a regression model to predict y

Input: (x1, y1), …, (xn, yn) and x

Output: y

We don’t specify the functional form of the regression model

Instead, we only need to specify the “kernel function”

k(x1, x2): linear kernel, polynomial kernel, RBF kernel, etc.

Conceptually, the kernel function measures how similar x1 and x2 are

Changing the kernel function changes the regression function

Linear kernel → Bayesian linear regression
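The three kernels named above can be sketched on the same sparse {term: weight} vectors. The slides give no kernel parameters, so the defaults for `degree`, `c`, and `gamma` below are hypothetical choices, not values from the talk.

```python
import math

def dot(u: dict[str, float], v: dict[str, float]) -> float:
    """Inner product of two sparse vectors; missing terms have weight 0."""
    small, large = (u, v) if len(u) <= len(v) else (v, u)
    return sum(w * large.get(t, 0.0) for t, w in small.items())

def linear_kernel(u, v) -> float:
    """k(x1, x2) = x1 . x2"""
    return dot(u, v)

def polynomial_kernel(u, v, degree: int = 2, c: float = 1.0) -> float:
    """k(x1, x2) = (x1 . x2 + c) ** degree"""
    return (dot(u, v) + c) ** degree

def rbf_kernel(u, v, gamma: float = 1.0) -> float:
    """k(x1, x2) = exp(-gamma * ||x1 - x2||^2), using ||u - v||^2 = u.u + v.v - 2 u.v."""
    sq_dist = dot(u, u) + dot(v, v) - 2.0 * dot(u, v)
    return math.exp(-gamma * max(sq_dist, 0.0))  # max() guards tiny negative rounding

a = {"credit": 0.6, "bureau": 0.7}
b = {"credit": 0.5, "report": 0.4}
print(linear_kernel(a, b), polynomial_kernel(a, b), rbf_kernel(a, b))
```

Plugging `linear_kernel` into GPR gives Bayesian linear regression on the TFIDF vectors; the other two let the model capture non-linear similarity between phrases.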

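GPR takes the training pairs (x1, y1), …, (xn, yn), the target x, and a kernel function (e.g., k(xi, xj) = xi·xj), and outputs the prediction y:

$$\hat{y} = \mathbf{k}^{\top}\left(K + \sigma_n^{2} I\right)^{-1}\mathbf{y}$$

where K is the n×n matrix with K_ij = k(xi, xj), k_i = k(xi, x), y = (y1, …, yn)^T, and σ_n^2 is the noise variance

O(n^3) from the matrix inversion, where n ≤ 20 typically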
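Steps 1 to 4 can be put together as a small NumPy sketch of the GPR predictive mean ŷ = kᵀ(K + σ_n² I)⁻¹ y with the linear kernel. The targets 0.88, 0.71, 0.64, 0.14 are the KEX(d) scores from the TrueCredit example; the TFIDF vectors for every phrase and `noise_var = 0.1` (standing in for σ_n²) are made up for illustration. The sketch solves the linear system rather than forming the inverse explicitly, which has the same O(n^3) cost but is numerically safer.

```python
import numpy as np

def linear_kernel(u: dict[str, float], v: dict[str, float]) -> float:
    """k(xi, xj) = xi . xj for sparse {term: weight} vectors."""
    small, large = (u, v) if len(u) <= len(v) else (v, u)
    return sum(w * large.get(t, 0.0) for t, w in small.items())

def gpr_predict(X, y, x, kernel, noise_var=0.1):
    """GP regression predictive mean: y_hat = k^T (K + noise_var * I)^-1 y."""
    n = len(X)
    K = np.array([[kernel(X[i], X[j]) for j in range(n)] for i in range(n)])
    k = np.array([kernel(xi, x) for xi in X])
    # Solving the n x n system costs O(n^3); n is the number of in-doc phrases.
    weights = np.linalg.solve(K + noise_var * np.eye(n), np.asarray(y, dtype=float))
    return float(k @ weights)

# Hypothetical TFIDF vectors for the four in-doc phrases; targets are the KEX scores.
X = [
    {"truecredit": 0.9, "credit": 0.4, "report": 0.2},   # 'truecredit'
    {"transunion": 0.9, "credit": 0.5, "bureau": 0.3},   # 'transunion'
    {"credit": 0.6, "bureau": 0.7, "report": 0.3},       # 'credit bureaus'
    {"identity": 0.8, "theft": 0.8, "insurance": 0.3},   # 'id theft'
]
ys = [0.88, 0.71, 0.64, 0.14]
target = {"credit": 0.6, "bureau": 0.5, "report": 0.6}   # 'credit bureau report'
olympics = {"olympics": 0.9, "beijing": 0.4}             # 'Olympics'

print(gpr_predict(X, ys, target, linear_kernel))
print(gpr_predict(X, ys, olympics, linear_kernel))
```

With a linear kernel and a zero-mean prior, a phrase that shares no terms with any in-doc phrase (here 'Olympics') gets a prediction of exactly 0, while 'credit bureau report' inherits relevance from its similar in-doc neighbors. The predictive mean is not constrained to [0, 1], so clipping it before treating it as a probability is a reasonable extra step.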

Experiments

From sponsored search ad-click logs (a 3-month period in 2007)

Randomly selected 867 English ad landing pages

Each page is associated with the original query and ~10 related keywords (from internal query suggestion algorithms)

Labeled 9,319 document-keyword pairs

4,381 (47%) relevant; 4,938 (53%) irrelevant

Most keywords (81.9%) are out-of-document

10-fold cross-validation when learning is used

Accuracy

Quality of binary classification

False positives and false negatives are weighted equally

AUC (Area Under the ROC curve)

Quality of ranking

Equivalent to pair-wise accuracy

Cross Entropy

Quality of probability estimations

-log2[p(ph|d)] if ph is labeled relevant to d

-log2[1-p(ph|d)] if ph is labeled irrelevant to d
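The three metrics can be sketched as follows. The 0.5 decision threshold for accuracy and averaging the per-pair cross-entropy over all labeled pairs are assumptions; the slide defines only the per-pair loss. AUC is computed directly as pairwise accuracy over (relevant, irrelevant) pairs, with ties counted as half. The predictions and labels are hypothetical.

```python
import math

def accuracy(probs: list[float], labels: list[int], threshold: float = 0.5) -> float:
    """Fraction of pairs whose thresholded prediction matches the label."""
    correct = sum((p >= threshold) == bool(y) for p, y in zip(probs, labels))
    return correct / len(labels)

def auc(probs, labels) -> float:
    """AUC as pairwise accuracy over (relevant, irrelevant) pairs; ties count 1/2."""
    pos = [p for p, y in zip(probs, labels) if y]
    neg = [p for p, y in zip(probs, labels) if not y]
    wins = sum(1.0 if pp > pn else 0.5 if pp == pn else 0.0 for pp in pos for pn in neg)
    return wins / (len(pos) * len(neg))

def cross_entropy(probs, labels, eps: float = 1e-12) -> float:
    """Average of -log2 p(ph|d) (relevant) or -log2 (1 - p(ph|d)) (irrelevant)."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # avoid log2(0)
        total += -math.log2(p) if y else -math.log2(1.0 - p)
    return total / len(labels)

probs = [0.9, 0.6, 0.4, 0.2]   # hypothetical predictions
labels = [1, 0, 1, 0]          # hypothetical judgments
print(accuracy(probs, labels), auc(probs, labels), cross_entropy(probs, labels))
```

The quadratic pairwise loop in `auc` is fine for a few thousand pairs; a rank-based formula scales better for larger sets.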

[Figure: bar charts comparing the methods on Accuracy, AUC, and Cross Entropy, each marked with a "Better" arrow; method labels were lost in extraction.]

Accuracy values shown: 0.704, 0.681, 0.654, 0.663, 0.651

AUC values shown: 0.773, 0.752, 0.726, 0.726, 0.702

Cross Entropy values shown (lower is better): 0.835, 0.864, 0.882, 0.887, 0.939

Conclusions

Measuring phrase relevance is a crucial task for online advertising

Our solution: similarity- and regression-based methods

Consistent probabilities for out-of-doc phrases

Similarity-based methods

Simple and straightforward

The combined approach (SimCombine) yields decent performance

Regression-based methods

Achieved the best results in our experiments

Quality depends on the in-doc relevance estimates and the kernel function

Future Work – More machine learning techniques

SimCombine

An ML method using basic similarity measures as features

Explore more features (e.g., query frequency, page quality)

Other machine learning models

Gaussian process regression

Learning a better kernel function

Kernel meta-training [Platt et al. NIPS-14]

Maximum likelihood training