One way communication complexity of generalised

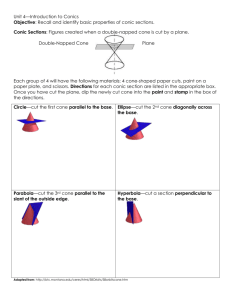


Generalised probabilistic theories and the extension complexity of polytopes

Serge Massar

From Foundations to Combinatorial Optimisation

[Roadmap diagram linking the following topics]

• Physical Theories / Comm. Complexity: Classical, Quantum, Generalised Probabilistic Theories (GPT)

• Factorisation of Communication / Slack Matrix: Linear, SDP, Conic

• Extended Formulations: Linear, SDP, Conic

• Polytopes & Combinat. Optimisation

M. Yannakakis, Expressing Combinatorial Problems by Linear Programs, STOC 1988

J. Gouveia, P. A. Parrilo, R. R. Thomas, Lifts of Convex Sets and Cone Factorizations, Math. Oper. Res. 2013

S. Fiorini, S. Massar, S. Pokutta, H. R. Tiwary, R. de Wolf, Linear vs. Semidefinite Extended Formulations: Exponential Separation and Strong Lower Bounds, STOC 2012

S. Fiorini, S. Massar, M. K. Patra, H. R. Tiwary, Generalised probabilistic theories and the extension complexity of polytopes, arXiv:1310.4125


Generalised Probabilistic Theories

• Minimal framework to build theories

– States = convex set

– Measurements: Predict probability of outcomes

• Adding axioms restricts to Classical or Quantum Theory

– Aim: find « Natural » axioms for quantum theory. (Fuchs, Brassard, Hardy, Barrett, Masanes, Müller, D’Ariano et al., etc.)

• GPTs with « unphysical » behaviour -> rule them out.

– PR boxes make Communication Complexity trivial (van Dam 05)

– Correlations that violate Tsirelson bound violate Information Causality (Pawlowski et al. 09)

A bit of geometry

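Cone $C \subseteq \mathbb{R}^n$: if $x, x' \in C$ and $p, p' \ge 0$ then $px + p'x' \in C$

Dual Cone $C^* \subseteq \mathbb{R}^n$: $C^* = \{ y : \langle x, y \rangle \ge 0 \text{ for all } x \in C \}$

Examples:

$C = C^* = \{ x \in \mathbb{R}^n : x_i \ge 0 \}$ = positive orthant

$C = C^* = \{ x \in \mathbb{R}^{n \times n} : x \succeq 0 \}$ = cone of SDP matrices

But in general $C \ne C^*$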

Generalised Probabilistic Theories

• Mixture of states = state

– State space is convex

• Theory predicts probability of outcome of measurement.

Generalised Probabilistic Theory GPT(C,u)

• Space of unnormalised states = Cone $C$: $w \in C$

• Effects belong to dual Cone: $e \in C^*$

• Normalisation

– Unit $u \in C^*$

– Normalised state: $\langle w, u \rangle = 1$

– Measurement $M = \{ e_i \in C^* : \sum_i e_i = u \}$

– Probability of outcome $i$: $P(i \mid w) = \langle e_i, w \rangle$

[Figure: the cone $C$ with origin 0 and the slice of normalised states, and the dual cone $C^*$ containing the unit $u$]

Classical Theory

• $C = C^* = \{ x \in \mathbb{R}^n : x_i \ge 0 \}$ = positive orthant

• $u = (1, 1, 1, \dots, 1)$

• Normalised state $w = (p_1, p_2, \dots, p_n)$: probability distribution over possible states

• Canonical measurement = $\{e_i\}$ with $e_i = (0, \dots, 0, 1, 0, \dots, 0)$

$P(i \mid w) = \langle e_i, w \rangle = p_i$

Quantum Theory

• $C = C^* = \{ x \in \mathbb{R}^{n \times n} : x \succeq 0 \}$ = cone of SDP matrices

• $u = I$ = identity matrix

• Normalised states = density matrices

• Measurements = POVM
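The rule $P(i \mid w) = \langle e_i, w \rangle$ can be tried on toy instances of both theories. The sketch below is only an illustration: the helper names `inner` and `outcome_probabilities` and all numbers are assumptions, not taken from the talk. The quantum case is restricted to real symmetric 2×2 matrices flattened row by row, for which $\mathrm{Tr}(e\,w)$ equals the entrywise inner product.

```python
from fractions import Fraction as F

def inner(x, y):
    """Euclidean inner product <x, y> of two equal-length vectors."""
    return sum(a * b for a, b in zip(x, y))

def outcome_probabilities(effects, state, unit):
    """Return [<e_i, w>] after checking sum_i e_i = u and <w, u> = 1."""
    total = [sum(column) for column in zip(*effects)]
    if total != list(unit):
        raise ValueError("effects do not sum to the unit u")
    if inner(state, unit) != 1:
        raise ValueError("state is not normalised")
    return [inner(e, state) for e in effects]

# Classical theory with n = 3: u = (1, 1, 1), canonical measurement {e_i}.
u_classical = [1, 1, 1]
w_classical = [F(1, 2), F(1, 3), F(1, 6)]
canonical = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
p_classical = outcome_probabilities(canonical, w_classical, u_classical)

# Quantum theory, real 2x2 case: matrices flattened as (x00, x01, x10, x11).
u_quantum = [1, 0, 0, 1]                 # identity matrix
w_quantum = [F(1, 2)] * 4                # density matrix of the state |+><+|
povm = [[1, 0, 0, 0], [0, 0, 0, 1]]      # projectors onto |0> and |1>
p_quantum = outcome_probabilities(povm, w_quantum, u_quantum)

print(p_classical, p_quantum)
```

In the classical case the probabilities are just the entries of $w$; in the quantum toy case both outcomes come out equally likely.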

Lorentz Cone

Second Order Cone Programming

• $C_{SOCP} = \{ x = (x_0, x_1, \dots, x_n) : x_1^2 + x_2^2 + \dots + x_n^2 \le x_0^2,\ x_0 \ge 0 \}$

• Lorentz cone has a natural SDP formulation -> subcone of the cone of SDP matrices

• Can be arbitrarily well approximated using linear inequalities

• Linear programs ⊂ SOCP ⊂ SDP

• Status?

Completely Positive and Co-positive Cones

Co-positive cone

$C_n = \{ X \in \mathbb{R}^{n \times n} : y^T X y \ge 0 \text{ for all } y \in \mathbb{R}_+^n \}$

Completely positive cone

$C_n^* = \{ X \in \mathbb{R}^{n \times n} : X = \sum_k y_k y_k^T,\ y_k \in \mathbb{R}_+^n \}$

= cone of quantum states with real positive coefficients

Remarks:

- linear programming over $C_n^*$ or $C_n$ is NP hard

- deciding if $x \in C_n^*$ (or $C_n$) is NP hard

Status? Open Question.

• Other interesting families of Cones ?

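One way communication complexity.

[Figure: Alice (input a) sends the state w(a) to Bob (input b); Bob performs the measurement M(b) and outputs r]

Classical Capacity.

[Figure: Alice (input a) sends the state w(a) to Bob; Bob performs the measurement M and outputs r]

• Holevo Theorem:

– How much classical information can be stored in a GPT state? Max I(A:R)?

– At most log(n) bits can be stored in $w \in C \subseteq \mathbb{R}^n$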

Proof 1: Refining Measurements

Generalised Probabilistic Theory GPT(C,u)

• States $w \in C \subseteq \mathbb{R}^n$

• Measurement $M = \{ e_i \in C^* \subseteq \mathbb{R}^n : \sum_i e_i = u \}$

• Refining measurements

– If $e_i = p f_i + (1-p) g_i$ with $f_i, g_i \in C^* \subseteq \mathbb{R}^n$

– then we can refine the measurement to contain effect $p f_i$ and effect $(1-p) g_i$ rather than $e_i$

• Theorem: Measurements can be refined so that all effects are extreme points of $C^*$ (Krein-Milman theorem)

Proof 2: Extremal Measurements

Generalised Probabilistic Theory GPT(C,u)

• States $w \in C \subseteq \mathbb{R}^n$

• Measurement $M = \{ e_i \in C^* \subseteq \mathbb{R}^n : \sum_i e_i = u \}$

• Convex combinations of measurements:

– $M_1 = \{e_i\}$ & $M_2 = \{f_i\}$

– $p M_1 + (1-p) M_2 = \{ p e_i + (1-p) f_i \}$

• If $M = \{ e_i \in C^* \subseteq \mathbb{R}^n : \sum_i e_i = u \}$ has $m > n$ outcomes

– Carathéodory: then there exists a subset of size $n$ such that $\sum_{k=1}^{n} p_k e_k = u$, $p_k \ge 0$

– Hence $M = p M_1 + (1-p) M_2$ & $M_1$ has $n$ outcomes & $M_2$ has $m-1$ outcomes.

• By recurrence: all measurements can be written as convex combination of measurements with at most $n$ effects.

Proof 3: Classical Capacity of GPT

[Figure: Alice (input a) sends the state w(a) to Bob; Bob performs the measurement M and outputs r]

• Holevo Theorem for GPT($C \subseteq \mathbb{R}^n$, u)

– Refining a measurement and decomposing measurement into convex combination can only increase the capacity of the channel

– Capacity of channel ≤ log(# of measurement outcomes)

Capacity of channel ≤ log(n) bits

• OPEN QUESTION:

– Get better bounds on the classical capacity for specific theories?


Randomised one way communication complexity with positive outcomes

[Figure: Alice (input a) sends the state $w(a) \in C$ and 1 bit {0,1} to Bob (input b); Bob performs the measurement $M(b) = \{ e_i(b) \in C^* : \sum_i e_i(b) = u \}$ and outputs $r(i,b)$]

$C_{ab} = E[r \mid ab] = \sum_i r(i,b) \langle w(a), e_i(b) \rangle = \langle w(a), f(b) \rangle$

$w(a) \in C$

$f(b) = \sum_i r(i,b)\, e_i(b) \in C^*$

Theorem:

Randomised one way communication with positive outcomes using GPT(C,u) and one bit of classical communication produces on average $C_{ab}$ on inputs a,b

If and only if

Cone factorisation of $C_{ab} = \langle w(a), f(b) \rangle$, $w(a) \in C$, $f(b) \in C^*$

Different Cone factorisations

Classical Theory: $C_{ab} = \sum_i w_i(a) f_i(b)$ with $w_i(a), f_i(b) \ge 0$ : positive rank of $C_{ab}$

Quantum Theory: $C_{ab} = \mathrm{Tr}\, w(a) f(b)$ with $w(a), f(b)$ SDP matrices : SDP rank of $C_{ab}$

Other Theories: Cone factorisation of $C_{ab}$
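The link between the protocol and the factorisation $C_{ab} = \langle w(a), f(b) \rangle$ can be checked numerically in the classical theory. The following is a minimal sketch on a made-up 2×2 instance; the states, effects and payoffs `r(i, b)` are hypothetical toy values, and the function names are illustrative only.

```python
from fractions import Fraction as F

def inner(x, y):
    """Euclidean inner product <x, y>."""
    return sum(p * q for p, q in zip(x, y))

# Hypothetical toy instance, classical theory: C = C* = positive orthant in R^2.
states = {0: [F(1), F(0)], 1: [F(1, 2), F(1, 2)]}       # w(a), normalised
effects = {0: [[1, 0], [0, 1]], 1: [[1, 0], [0, 1]]}    # e_i(b), summing to u = (1, 1)
payoff = {0: [2, 0], 1: [1, 3]}                         # r(i, b) >= 0

def expected_output(a, b):
    """E[r | a, b] = sum_i r(i, b) <w(a), e_i(b)> (average output of the protocol)."""
    return sum(r * inner(states[a], e) for r, e in zip(payoff[b], effects[b]))

def f(b):
    """f(b) = sum_i r(i, b) e_i(b), an element of the dual cone C*."""
    return [sum(r * e[k] for r, e in zip(payoff[b], effects[b])) for k in range(2)]

C_protocol = [[expected_output(a, b) for b in (0, 1)] for a in (0, 1)]
C_factorised = [[inner(states[a], f(b)) for b in (0, 1)] for a in (0, 1)]

print(C_protocol)
print(C_factorised)
```

Both matrices agree entry by entry, and since every $w(a)$ and $f(b)$ here has nonnegative entries, this is a nonnegative factorisation of the toy matrix with inner dimension 2.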


Background: solving NP by LP?

• Famous P-problem: linear programming (Khachiyan’79)

• Famous NP-hard problem: traveling salesman problem

• A polynomial-size LP for TSP would show P = NP

• Swart’86–87 claimed to have found such LPs

• Yannakakis’88 showed that any symmetric LP for TSP needs exponential size

• Swart’s LPs were symmetric, so they couldn’t work

• 20-year open problem: what about non-symmetric LP?

• There are examples where non-symmetry helps a lot (Kaibel’10)

• Any LP for TSP needs exponential size (Fiorini et al 12)

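Polytope

• $P = \mathrm{conv}\{\text{vertices}\} = \{ x : A_e x \le b_e \text{ for all } e \}$

[Figure: a polytope with a vertex v and a facet e marked]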

Combinatorial Polytopes

• Travelling Salesman Problem (TSP) polytope

– $\mathbb{R}^{n(n-1)/2}$ : one coordinate per edge of graph

– Cycle C : $v_C = (1,0,0,1,1,\dots,0)$

– $P_{TSP} = \mathrm{conv}\{v_C\}$

– Shortest cycle: $\min L \cdot x$ with $x \in P_{TSP}$

• Correlation polytope

– $\mathrm{COR}(n) = \mathrm{conv}\{ b b^T : b \in \{0,1\}^n \}$

• Bell polytope with 2 parties, N settings, 2 outcomes

• Linear optimisation over these polytopes is NP Hard

• Deciding if a point belongs to the polytope is NP Hard


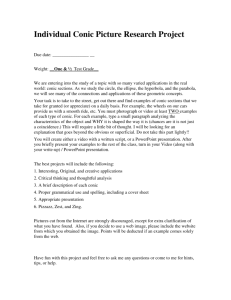

Extended Formulations

• View polytope as projection of a simpler object in a higher dimensional space.

[Figure: the extended formulation Q is mapped by the projection π onto the polytope P]

Linear Extensions: the higher dimensional object is a polytope

Size of linear extended formulation = # of facets of Q

Conic extensions:

Extended object = intersection of cone and hyperplane.

Precise formulation

$Q = \{ (x, y) \in \mathbb{R}^{d+n} : Ex + Fy = g,\ y \in C \}$

$P_x(Q) = \{ x \in \mathbb{R}^d : \exists y \in \mathbb{R}^n \text{ such that } (x, y) \in Q \}$

[Figure: Q is the intersection of the cone C with an affine slice; the projection π maps Q onto the polytope P]

Conic extensions

• Linear extensions

– positive orthant $C = \{ x \in \mathbb{R}^n : x_i \ge 0 \}$

• SDP extensions

– cone of SDP matrices $C = \{ x \in \mathbb{R}^{n \times n} : x \succeq 0 \}$

• Conic extensions

– C = cone in $\mathbb{R}^n$

• Why this construction?

– Small extensions exist for many problems

– Algorithmics: optimising over a small extended formulation is efficient for linear and SDP extensions

– Possible to obtain lower bounds on the size of extensions


Slack Matrix of a Polytope

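• $P = \mathrm{conv}\{\text{vertices}\} = \{ x : A_e x \le b_e \text{ for all } e \}$

• Slack Matrix

– $S_{ve}$ = distance between v and e = $b_e - A_e x_v \ge 0$

[Figure: a polytope with a vertex v and a facet e marked]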
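A small worked example may help fix the definition of the slack matrix. The sketch below assumes the convention $P = \{x : A_e x \le b_e\}$, so that the slack of vertex $v$ on facet $e$ is $b_e - A_e x_v \ge 0$. The unit square and the name `slack_matrix` are illustrative choices, not taken from the talk.

```python
def slack_matrix(vertices, A, b):
    """S[v][e] = b_e - A_e . x_v for P = conv(vertices) = {x : A x <= b}."""
    return [
        [b_e - sum(a_k * x_k for a_k, x_k in zip(A_e, x_v)) for A_e, b_e in zip(A, b)]
        for x_v in vertices
    ]

# Unit square: facets -x <= 0, -y <= 0, x <= 1, y <= 1.
vertices = [(0, 0), (1, 0), (1, 1), (0, 1)]
A = [(-1, 0), (0, -1), (1, 0), (0, 1)]
b = [0, 0, 1, 1]

S = slack_matrix(vertices, A, b)
for row in S:
    print(row)
```

Each row belongs to a vertex and each column to a facet; an entry is zero exactly when the vertex lies on that facet, and all entries are nonnegative because every vertex satisfies every inequality.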

Factorisation Theorem (Yannakakis 88)

Theorem: Polytope P has Cone C extension

• Iff Slack matrix has Conic factorisation

– $S_{ve} = \langle T_e, U_v \rangle$ with $T_e \in C$ & $U_v \in C^*$

• Iff Alice and Bob can solve communication complexity problem based on $S_{ve}$ by sending GPT(C,u) states.

[Figure: Alice (input e) sends a GPT(C) state to Bob (input v); Bob outputs s with $\langle s \rangle = S_{ve}$]


S. Fiorini, S. Massar, S. Pokutta, H. R. Tiwary, R. de Wolf, Linear vs. Semidefinite Extended Formulations: Exponential Separation and Strong Lower Bounds, STOC 2012

• There do not exist polynomial size linear extensions of the TSP polytope

A Classical versus Quantum gap

[Figure: Alice (input a) and Bob (input b), with $a, b \in \{0,1\}^n$, linked by classical/quantum communication; Bob outputs m with $\langle m \rangle = M_{ab}$]

$M_{ab} = (1 - a^T b)^2$

$\mathrm{Supp}(M_{ab}) = 0$ if $M_{ab} = 0$ (if a & b have exactly one common 1 entry)

$\mathrm{Supp}(M_{ab}) = 1$ if $M_{ab} > 0$ (if a & b don't have exactly one common 1 entry)

Simpler problem: # of classical bits to output $\mathrm{Supp}(M_{ab})$ = $\Omega(n)$ (Razborov 92)

# of classical bits required to produce on average $M_{ab}$ = $\Omega(n)$

# of qubits to output $\mathrm{Supp}(M_{ab})$ = $O(\log n)$ (de Wolf 03)

Theorem: Linear Extension Complexity of Correlation Polytope = $2^{\Omega(n)}$

[Figure: Alice (input a) and Bob (input b), with $a, b \in \{0,1\}^n$, linked by classical communication; Bob outputs m with $\langle m \rangle = M_{ab}$]

$M_{ab} = (1 - a^T b)^2$

# of classical bits required to produce on average $M_{ab}$ = $\Omega(n)$

Correlation polytope

$\mathrm{COR}(n) = \mathrm{conv}\{ b b^T : b \in \{0,1\}^n \}$

Observation: $M_{ab}$ = part of the slack matrix of COR(n)

Hence outputting the slack matrix of COR(n) is at least as hard as outputting $M_{ab}$

Theorem: Linear Extension Complexity of COR(n) = $2^{\Omega(n)}$
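The observation that $M_{ab}$ sits inside the slack matrix of COR(n) can be checked by brute force for small n. The sketch below assumes the valid inequality $\langle 2\,\mathrm{diag}(a) - a a^T, x \rangle \le 1$ (the one used, as far as I recall, in the cited STOC 2012 paper) and verifies that its slack at the vertex $x = b b^T$ equals $(1 - a^T b)^2$. The names `dot` and `slack` are illustrative.

```python
from itertools import product

def dot(a, b):
    """Inner product a^T b of two 0/1 vectors."""
    return sum(x * y for x, y in zip(a, b))

def slack(a, b):
    """Slack 1 - <2 diag(a) - a a^T, b b^T> of the vertex b b^T of COR(n)."""
    n = len(a)
    total = 0
    for i in range(n):
        for j in range(n):
            coeff = (2 * a[i] if i == j else 0) - a[i] * a[j]
            total += coeff * b[i] * b[j]
    return 1 - total

n = 3
cube = list(product((0, 1), repeat=n))
M = {(a, b): (1 - dot(a, b)) ** 2 for a in cube for b in cube}

# The slack of the assumed inequality reproduces M_ab on every pair (a, b).
slack_matches = all(slack(a, b) == M[a, b] for a in cube for b in cube)
# M_ab vanishes exactly when a and b share exactly one common 1 entry.
zero_pairs = [(a, b) for (a, b), value in M.items() if value == 0]
support_rule = all(dot(a, b) == 1 for a, b in zip(*zip(*zero_pairs)))

print(slack_matches, support_rule, len(zero_pairs))
```

For n = 3 there are 27 pairs with $M_{ab} = 0$: 3 choices for the common position, times 3 admissible patterns on each of the other two positions.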

Linear extension complexity of polytopes

Linear Extension Complexity of COR(n) = $2^{\Omega(n)}$

→ Linear Extension Complexity of TSP(n) = $2^{\Omega(n^{1/4})}$ (since improved to $2^{\Omega(n)}$)

OPEN QUESTION?

• Prove that SDP (Quantum) extension complexity of TSP, Correlation, etc. polytopes is exponential

– Strongly conjectured to be true

– The converse would almost imply P=NP

– Requires method to lower bound quantum communication complexity in the average output model (cannot give the parties shared randomness)


S. Fiorini, S. Massar, M. K. Patra, H. R. Tiwary, Generalised probabilistic theories and the extension complexity of polytopes, arXiv:1310.4125

• GPT based on cone of completely positive matrices allows exponential saving with respect to classical (conjectured quantum) communication

• All combinatorial polytopes (vertices computable with poly size circuit) have poly size completely positive extension.

Recall:

Completely Positive and Co-positive Cones

Co-positive cone

$C_n = \{ X \in \mathbb{R}^{n \times n} : y^T X y \ge 0 \text{ for all } y \in \mathbb{R}_+^n \}$

Completely positive cone

$C_n^* = \{ X \in \mathbb{R}^{n \times n} : X = \sum_k y_k y_k^T,\ y_k \in \mathbb{R}_+^n \}$

= cone of quantum states with real positive coefficients

Remarks:

- linear programming over $C_n^*$ or $C_n$ is NP hard

- deciding if $x \in C_n^*$ (or $C_n$) is NP hard

Completely Positive extension of Correlation Polytope

• $\mathrm{COR}(n) = \mathrm{conv}\{ b b^T : b \in \{0,1\}^n \}$

• Theorem: The Correlation polytope COR(n) has a 2n+1 size extension for the Completely Positive Cone.

– Sketch of proof:

• Consider arbitrary linear optimisation over COR(n)

• Use Equivalence (Burer 2009) to linear optimisation over $C^*_{2n+1}$

• Implies COR(n) = projection of intersection of $C^*_{2n+1}$ with hyperplane

Polynomially definable 0/1-polytopes

0/1-polytope in $\mathbb{R}^d$ = $\mathrm{conv}\{ y \in \{0,1\}^d \}$

0/1-polytope is polynomially definable:

– Iff $\exists$ poly size circuit that, given input $y \in \{0,1\}^d$, will output 1 if $y \in P$, 0 if $y \notin P$

Most polytopes of interest belong to families of polynomially definable 0/1-polytopes:

– COR(n)

– TSP(n)

– Etc…
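For TSP(n), the membership test on 0/1 points is easy to write down, which is what makes the family polynomially definable. The sketch below stands in for the poly size circuit with an ordinary polynomial-time function; the names `is_tour_vertex` and `tour_to_vector` and the lexicographic edge ordering are assumptions made for illustration.

```python
from itertools import combinations

def tour_to_vector(n, tour):
    """0/1 edge vector (one coordinate per edge {i, j}, i < j) of a cyclic tour."""
    used = {frozenset((tour[k], tour[(k + 1) % n])) for k in range(n)}
    return [1 if frozenset(e) in used else 0 for e in combinations(range(n), 2)]

def is_tour_vertex(n, y):
    """Return 1 if the 0/1 vector y encodes a Hamiltonian cycle on n >= 3 nodes, else 0."""
    edges = list(combinations(range(n), 2))
    if len(y) != len(edges) or any(bit not in (0, 1) for bit in y):
        raise ValueError("y must be a 0/1 vector with n(n-1)/2 entries")
    neighbours = {v: [] for v in range(n)}
    for (i, j), bit in zip(edges, y):
        if bit:
            neighbours[i].append(j)
            neighbours[j].append(i)
    # Every node of a tour has degree exactly 2.
    if any(len(nb) != 2 for nb in neighbours.values()):
        return 0
    # A degree-2 graph is a union of cycles: walk the one through node 0.
    seen = {0}
    prev, cur = 0, neighbours[0][0]
    while cur != 0:
        seen.add(cur)
        first, second = neighbours[cur]
        prev, cur = cur, (second if first == prev else first)
    return 1 if len(seen) == n else 0

y_good = tour_to_vector(4, [0, 1, 2, 3])
triangles = {(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)}
y_bad = [1 if e in triangles else 0 for e in combinations(range(6), 2)]
print(is_tour_vertex(4, y_good), is_tour_vertex(6, y_bad))
```

The second input has all degrees equal to 2 but splits into two triangles, so the connectivity walk is what rejects it.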


• Theorem (Maksimenko 2012): All polynomially definable 0/1-polytopes in $\mathbb{R}^d$ are projections of faces of the correlation polytope COR(poly(d)).

• Corollary: All polynomially definable 0/1-polytopes in $\mathbb{R}^d$ have poly(d) size extension for the Completely Positive Cone.

– Generalises a large number of special cases proved before.

– « Cook-Levin » like theorem for combinatorial polytopes

Summary

• Generalised Probabilistic Theories

– Holevo Theorem for GPT

• Connection between Classical/Quantum/GPT communication complexity and Extension of Polytopes

– Exponential Lower bound on linear extension complexity of COR, TSP polytopes

– All 0/1 combinatorial polytopes have small extension for the Completely Positive Cone

– Hence: GPT(Completely Positive Cone) allows exponential saving with respect to classical (conjectured quantum) communication.

• Use this to rule out the theory? (Of course many other reasons to rule out the theory using other axioms)

• OPEN QUESTIONS: Gaps between Classical/Quantum/GPT for

– Other models of communication complexity?

– Models of Computation?

From Foundations to Combinatorial Optimisation

M. Yannakakis, Expressing Combinatorial Problems by Linear Programs, STOC 1988

J. Gouveia, P. A. Parrilo, R. R. Thomas, Lifts of Convex Sets and Cone Factorizations, Math. Oper. Res. 2013

S. Fiorini, S. Massar, S. Pokutta, H. R. Tiwary, R. de Wolf, Linear vs. Semidefinite Extended Formulations: Exponential Separation and Strong Lower Bounds, STOC 2012

• There do not exist polynomial size linear extensions of the TSP polytope

S. Fiorini, S. Massar, M. K. Patra, H. R. Tiwary, Generalised probabilistic theories and the extension complexity of polytopes, arXiv:1310.4125

• All combinatorial polytopes (vertices computable with poly size circuit) have poly size completely positive extension.

• GPT based on cone of completely positive matrices allows exponential saving with respect to classical (conjectured quantum) communication