Ethical Theory and Terminology

advertisement

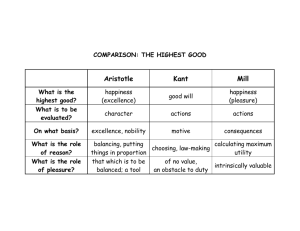

For Ethical Issues in Science and Technology
J. Blackmon

Select Themes in Ethics of Technology
Ethical Theory and Terminology
Conclusion

Themes in Ethics of Technology
Innovation Presumption: Liberty, Optimism, and Inevitability
Situated Technologies
Lifecycle
Power
Forms of Life
Extrinsic Concerns
Intrinsic Concerns
Responsible Development
Ethics and Public Policy
Framework for Ethical Analysis of Emerging Technologies

Why does the ethical evaluation of technology tend to focus on what might be problematic about it? Are we all Luddites?

Innovation Presumption (IP): We should invent, adopt, and use new technologies.

The popularity of the Innovation Presumption + Unknown Consequences → Warranted Scrutiny

And it’s a win either way: scrutiny allows us to avoid paying the negative consequences where the IP turns out to have been false. And should it turn out to be true in other cases, scrutiny may reveal why it’s true. So, we advocate scrutiny of the IP without adopting an unfair bias against it.

Three Principles Supporting the IP
1. Liberty: We should be free to innovate so long as it isn’t harmful to others. (J. S. Mill’s Harm Principle)
2. Technological Optimism: Technology improves and will continue to improve human lives.
3. Technological Determinism: Technological advancement is inevitable.

Liberty: We should be free to innovate so long as it isn’t harmful to others.
Harm Principle: The only purpose for which power can be rightfully exercised over any member of a civilized community, against his will, is to prevent harm to others.

Much remains to be said! What is harm? Who are the others? What kind of power, and how much?

Cindy cuts Jake
She’s a trained surgeon performing an emergency tracheotomy with no anesthesia. Cindy has caused physical damage and pain to Jake. She may even have done this against his will. Has Cindy caused harm to Jake?
So perhaps whether Cindy harms Jake depends on whether she saves him from further or worse harm. But then whether harm is being committed depends on what is yet to come.
This is a notorious problem: How far into the future should we look when assessing consequences? Even if we somehow decided, should this view of harm as something determined by future outcomes be defended, or should we look for a more plausible or satisfying alternative?

The Dukes of Hazzard
Bo and Luke Duke drive with extreme recklessness all over town, never hitting a single person, due largely to luck. Have they harmed anyone?

The Chalk Checker
Ida parks in a 1-hour parking space for more than an hour when other 1-hour spaces are left unoccupied. She gets a ticket.
In court, Ida cites the great philosopher J. S. Mill, saying, “The only purpose for which power can be rightfully exercised over any member of a civilized community, against her will, is to prevent harm to others. But fining me does not prevent harm to anyone, not even if we count mere inconvenience as a harm. After all, no one was harmed, not even inconvenienced.”
Is Ida right? Which “other” has been harmed? And if she is right, does the city have a case against her? If the city does have a case against her, and yet no one has been harmed, then is J. S. Mill wrong?

Non-existing Future and Possible Persons
Recall the commentator on Krasny’s Forum show: There are no passenger pigeons wanting to be brought back into existence.
The problem generalizes.

Test Cases
Cindy the Surgeon
The Dukes of Hazzard
The Chalk Checker
Non-Existing Future and Possible Pigeons (Persons)
These cases test the Harm Principle.

Harm Principle: The only purpose for which power can be rightfully exercised over any member of a civilized community, against his will, is to prevent harm to others.

Questions
What is harm? Who are the others? What kind of power?

What kind of power?
In many countries, the state has intervened in the sale and distribution of cigarettes, ostensibly for the good of the people.
State Paternalism: In some cases, the state should limit the behavior of the people for their own good.
Hard Paternalism: Restricting activity for the good of the person regardless of whether they are informed and consenting.
Soft Paternalism: Restricting activity for the good of the person until that person meets certain standards for voluntary action.

Thus, the Harm Principle and the Liberty Principle raise questions about the exercise of power. When power or intervention is called for, how should it be used? The options include laws, which use coercion; financial incentives; and self-regulation.

Liberty: We should be free to innovate so long as it isn’t harmful to others.
Harm Principle: The only purpose for which power can be rightfully exercised over any member of a civilized community, against his/her will, is to prevent harm to others.
While these principles may be attractive and compelling sentiments, they do not in themselves resolve important questions about harm, others, and power.

Types of Value
Instrumental Value: the value of something as a means to an end.
Intrinsic/Final Value: the value of something for what it is or as an end. Final value may be subjective or objective.

Instrumental Value: the value of something as a means to an end.
The hammer has instrumental value because it does something valuable: it pounds nails. Pounding nails gets its value from easily and securely fastening pieces of wood together, which gets its value from building safe, comfortable structures, which gets its value from health, comfort, and general happiness.
Instrumental value entails some noninstrumental value: a chain of means must end in something valued as an end, or nothing along the chain would be valuable at all.

Intrinsic/Final Value: the value of something for what it is or as an end.
Subjective: The value depends on how or whether we value it.
Objective: The value is independent of how or whether we value it.

Subjective Final Value: Valued by us as an end in itself.
Examples of things often thought to have subjective final value: works of art, landscapes, mementos, religious artifacts, historical sites.

Objective Final Value: Valuable independent of whether it’s valued by us as an end in itself.
Examples of things often thought to have objective final value: human beings, life.

Typically, technology is not thought to have objective final value. But of course, it affects things which do have it.

Types of Theories
Example: Animal Testing
Suppose that developing a novel medical technology requires extensive animal testing which would cause considerable and persistent pain and suffering. Suppose also that these animals are morally considerable: their suffering matters in a moral sense. Should the testing go forward?

Types of Theories
One Response: It depends on the balance of the good and bad outcomes that would result (or are expected to result) from the testing. If the outcome is likely to be overall good, then Yes; if the outcome is likely to be overall bad, then No.
Another Response: No. It is wrong to intentionally cause harm to animals.
Another Response: Yes. It is permissible to use (nonhuman) animals in the service of humans.

These responses correspond to types of theory:
It depends on the balance of the good and bad outcomes. (Consequentialist)
No. (Deontological)
Yes. (Deontological)

Consequentialist Normative Theory: One ought to take the action which will result in the best outcome.
Deontological Normative Theory: One ought to do something if it conforms to an operative rule.
Virtue Theory: One ought to do whatever is an instance of virtue: compassion, courage, honesty, etc.

Consequentialist Normative Theory
Utilitarianism: One ought to take the action which will maximize utility.
What is utility? Pleasure (Jeremy Bentham). Happiness (J. S. Mill).

Maximizing pleasure or happiness? Isn’t this just what they call hedonism?
Not really, for at least two reasons.

First, there are versions of utilitarianism which go beyond base pleasure. What is utility?
Pleasure (Jeremy Bentham)
Happiness, and greater and lesser kinds of happiness (J. S. Mill)
Preference satisfaction?
Well-being or welfare?
Lack of pain and suffering?
“It is better to be a human being dissatisfied than a pig satisfied; better to be Socrates dissatisfied than a fool satisfied.” J. S. Mill
There are different kinds of happiness: higher quality and lower quality.

Second, it depends on what you mean by hedonism. Is utilitarianism a kind of hedonism? Yes, partly, by the original philosophical meaning. No, not at all, by a popular conception.
Philosophical/Historical Definition: Hedonism is the view that the only intrinsic (non-instrumental) value is pleasure.
A Common Definition: Hedonism is the pursuit of pleasure; sensual self-indulgence; self-gratification.

Utilitarianism is emphatically not about maximizing one’s own happiness. That would be ethical egoism.
Goal of Utilitarianism: The greatest good for the greatest number. And this can entail great personal sacrifice, which is entirely antithetical to ethical egoism.
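The contrast between utilitarianism and ethical egoism can be sketched as two decision rules applied to the same set of outcomes. This is a minimal illustration, assuming utility can be written as a single number per person (an assumption the questions about quantifying utility call into doubt); the actions, people, and numbers are all hypothetical.

```python
# Toy contrast between a utilitarian and an ethical-egoist decision rule.
# All actions, people, and utility numbers are hypothetical illustrations,
# and utility is assumed to be a single number per person.
from typing import Dict

# Each action maps every affected person to the utility they would receive.
Outcomes = Dict[str, Dict[str, float]]


def utilitarian_choice(outcomes: Outcomes) -> str:
    """Choose the action with the greatest utility summed over everyone affected."""
    return max(outcomes, key=lambda action: sum(outcomes[action].values()))


def egoist_choice(outcomes: Outcomes, agent: str) -> str:
    """Choose the action with the greatest utility for the agent alone."""
    return max(outcomes, key=lambda action: outcomes[action].get(agent, 0.0))


# Hypothetical case: the agent can keep a resource or give it up for three others.
outcomes: Outcomes = {
    "keep": {"agent": 10.0, "a": 1.0, "b": 1.0, "c": 1.0},      # total 13
    "sacrifice": {"agent": 2.0, "a": 6.0, "b": 6.0, "c": 6.0},  # total 20
}

print(utilitarian_choice(outcomes))      # sacrifice
print(egoist_choice(outcomes, "agent"))  # keep
```

The utilitarian rule sums over everyone affected, so it can select the action that costs the agent the most; the egoist rule looks only at the agent’s own utility.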

A good utilitarian: “The needs of the many outweigh the needs of the few, or the one.” Spock, Star Trek II: The Wrath of Khan
Which many? What needs?

Utilitarianism: One ought to take the action which will maximize utility. What counts as utility? What is it to maximize it?

From Ian Barbour’s ‘Philosophy and Human Values’
As Ian Barbour points out, if we are only concerned with the greatest good for the greatest number, certain questions and problems arise.
Who matters?
How do we quantify and measure utility? And if there are different kinds, how do we compare them?
Total utility is blind to the distribution of utility; thus, it finds nothing inherently wrong with injustice or inequality.

Bill Gates walks into a bar…
The total income of this group is $505,000, leading to an average income of $50,500. Gates walks in with his $1 billion income. The total income of the group shoots way up to over $1 billion ($1,000,505,000). Thus the mean income of the eleven people now in the bar is about $91 million ($1,000,505,000 ÷ 11 = $90,955,000), even though no one else’s income has changed.

Who Matters?
Most forms of utilitarianism are anthropocentric (human-centered). But increasingly the “circle of moral consideration” has expanded to include non-human animals. This raises questions, however, about which animals are included, and this in turn raises the question of what makes an entity worthy of moral consideration.
Also, do we include the happiness of only those beings now living?
Or do we include the happiness of those who are yet to come?
If the latter, that is, if we include the happiness of those who are yet to come, how exactly do we do this? How far into the future do we have to look? And what we do now not only determines “happiness levels”; it also determines who will end up coming into existence. “My parents should have waited until they were better off financially before having me!”

As Barbour points out, if sum total happiness is the goal, then this might be achieved just by having a great number of moderately happy people in the world. Worse, they could be no more than barely tolerably well off, so long as there are enough of them. Derek Parfit calls this the “repugnant conclusion”: this would not be a good world.

How do we quantify the greatest good?
Is there just one thing, happiness (or pleasure, or whatever), that can be measured on a single numerical scale? It would appear not. In fact, it appears that we have different kinds of goods. If so, then what if they are incommensurable, that is, not measurable on any common scale?

Total utility is blind to distribution.
Suppose the extermination of a small minority would make the majority so happy that total happiness is increased. Suppose total national income can be increased if we accept great poverty for one segment of society. According to utilitarianism, there is nothing inherently wrong here.
This blindness to distribution results in a system that is unjust.
Unless we are prepared to abandon justice, utilitarianism without justice must be rejected. We might reject utilitarianism altogether, or we might supplement it with a principle of justice.

Utility and Justice
If the total good were the only criterion, then we could justify a small social gain even if it entailed a gross injustice. But if justice were the only norm, then we would have to correct a small injustice even if it resulted in widespread suffering or social harm. Apparently, we must consider both justice and the total good.
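What a purely total criterion leaves out can be seen in a toy calculation covering two cases from the discussion of total utility: accepting great poverty for one segment of society, and Parfit’s repugnant conclusion. This is a sketch under the assumption that welfare can be summed on a single numerical scale; the function names, populations, and welfare levels are hypothetical.

```python
# Total-utility ranking is blind to how welfare is distributed.
# All populations and welfare levels are hypothetical illustrations,
# and welfare is assumed to be summable on one numerical scale.
from typing import List


def total_utility(welfare: List[float]) -> float:
    """Sum of everyone's welfare: the quantity a total utilitarian maximizes."""
    return sum(welfare)


def total_prefers(a: List[float], b: List[float]) -> str:
    """Say which world the total-utility criterion ranks higher: "A", "B", or "tie"."""
    ta, tb = total_utility(a), total_utility(b)
    if ta > tb:
        return "A"
    if tb > ta:
        return "B"
    return "tie"


# Case 1 (hypothetical): accepting poverty for one person raises the total.
equal = [10.0] * 5              # total 50.0, worst-off person at 10.0
unequal = [14.0] * 4 + [1.0]    # total 57.0, worst-off person at 1.0
print(total_prefers(equal, unequal))  # B: the unequal world wins on total
print(min(equal), min(unequal))       # 10.0 1.0: the criterion never checks this

# Case 2 (hypothetical): the repugnant conclusion.
flourishing = [90.0] * 1_000          # few people, very well off: total 90,000
barely_tolerable = [1.0] * 100_000    # many people, barely worth living: total 100,000
print(total_prefers(flourishing, barely_tolerable))  # B
```

In both cases the total criterion ranks the worlds without ever consulting who is worst off, which is why a principle of justice would have to be added to it rather than read off from it.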