LearningCausalityTextualData

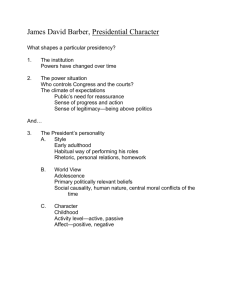

Learning Causality for News Events Prediction

Kira Radinsky, Sagie Davidovich, Shaul Markovitch

Technion - Israel Institute of Technology

What is Prediction?

• “…a rigorous, often quantitative, statement, forecasting what will happen under specific conditions.” [Wikipedia]
• “A description of what one thinks will take place in the future, based on previous knowledge.” [Online Dictionary]

Why is News Event Prediction Important?

| Event | Predicted event (Pundit) |
|---|---|
| Al-Qaida demands hostage exchange | A country will refuse the demand |
| Volcano erupts in Democratic Republic of Congo | Thousands of people flee from Congo |
| 7.0 magnitude earthquake strikes Haitian coast | Tsunami-warning is issued |
| China overtakes Germany as world's biggest exporter | Wheat price will fall |

Application areas named on the slide: strategic intelligence, strategic planning, financial investments.

Outline

• Motivation
• Problem definition
• Solution
• Representation
• Algorithm
• Evaluation

Problem Definition: Events Prediction

• Ev is a set of events
• T is a discrete representation of time
• Prediction function [formula missing], s.t.: [event] occurred at time [time], [event] occurred at time [time]

Causality Mining Process: Overview

News articles acquisition
• Crawling [NYT 1851-2009]
• Modeling & normalization

Causality pattern classification
• <Pattern, Constraint, Confidence>

Event extraction
• Tagging
• Dependency parsing (Stanford parser)

Causality relations extraction
• Context inference

Thematic roles normalization
• Base forms
• URI assignment (contextual disambiguation)

Thematic roles assignment
• Based on VerbNet Index

State inference

Causality graph building
• Built on 20 machines
• 300 million nodes
• 1 billion edges
• 13 million news articles in total

Modeling an Event

• Comparison between events (Canonical)
  1. (Lexicon & Syntax) Language and wording independent
  2. (Semantic) Non-ambiguous
• Generalization / abstraction
• Reasoning
• Many philosophies:
  • Property Exemplification of Events theory (Kim 1993)
  • Conceptual Dependency theory (Schank 1972)

Event & Causality Representation

• Event Representation
• Causality Representation

[Figure: two event graphs placed on a time axis, Event1 preceding Event2.]

| Element | Event1 | Event2 |
|---|---|---|
| Sentence | “US Army bombs a weapon warehouse in Kabul with missiles” | “5 Afghan troops were killed” |
| Action | bombs | kill |
| Actor | US Army | |
| Theme | Weapon warehouse | Troops (Quantifier: 5, Attribute: Afghan) |
| Instrument | Missiles | |
| Location | Kabul | |
| Timeframe | 1/2/1987 11:00AM +(2h) | 1/2/1987 11:15AM +(3h) |

Machine Learning Problem Definition

• Goal function: [formula missing]
• The learning algorithm receives a set of examples and produces a hypothesis that is a good approximation of the goal function.

Algorithm Outline

Learning Phase
1. Generalize events
   1. How do we generalize objects?
   2. How do we generalize actions?
   3. How do we generalize an event?
2. Causality prediction rule generation

Prediction Phase
1. Finding similar generalized event
2. Application of causality prediction rule

Generalizing Objects

• Ontology – Linked data

[Figure: linked-data neighborhood of the entity Russia. Labels: the Russian Federation, Russian Federation, Eastern Europe, RUS, 185, 643, Land border, Rouble (Rub), USSR, China.]

Generalizing Actions

• Levin classes (Levin 1993) – 270 classes
• Class Hit-18.1
• Roles and restrictions: Agent[+int_control], Patient[+concrete], Instrument[+concrete]
• Members: bang, bash, hit, kick, ...
• Frames:

| Name | Example | Syntax | Semantics |
|---|---|---|---|
| Basic Transitive | Paula hit the ball | Agent V Patient | cause(Agent, E), manner(during(E), directedmotion, Agent), !contact(during(E), Agent, Patient), manner(end(E), forceful, Agent), contact(end(E), Agent, Patient) |

Generalizing Events: Putting it all together

[Figure: a present event aligned with a past event, yielding a generalization rule.]

• Present event: “NATO strikes an army base in Baghdad”
• Past event: “US Army bombs a weapon warehouse in Kabul with missiles” (Timeframe: 1/2/1987 11:00AM +(2h), Instrument: Missiles, Location: Kabul)
• Alignment labels in the figure: similar verb (strikes, bombs); rdf:type links to Military facility (army base, weapon warehouse), City (Baghdad, Kabul), Army and Country
• Generalization rule: Actor: [state of Nato], Property: [Hit1.1], Theme: [Military facility], Location: [Arab City]

Generalizing Events: HAC algorithm

Generalizing Events: Event distance metric

[Figure: the same present and past event graphs as on the "Putting it all together" slide.]

Prediction Rule Generation

[Figure: cause and effect event graphs on a time axis, connected through ontology links.]

• Cause event: “US Army bombs a weapon warehouse in Kabul with missiles” (Timeframe: 1/2/1987 11:00AM +(2h))
• Effect event: “5 Afghan troops were killed” (Timeframe: 1/2/1987 11:15AM +(3h))
• Ontology links: Kabul → Country → Afghanistan → Nationality → Afghan
• Generated rule:
  • EffectThemeAttribute = CauseLocation → Country → Nationality
  • EffectAction = kill
  • EffectTheme = Troops

Finding Similar Generalized Event

[Figure: the input event “Baghdad bombing” compared against generalized events, with similarity scores ranging from 0.1 to 0.8.]

Prediction Rule Application

[Figure: the rule applied to an input event to produce a predicted effect event on the time axis.]

• Input event: Action: bomb, Actor1, Theme1, Instrument1, Location1, Timeframe: T1
• Predicted effect event: Action: kill, Theme: Troops, Attribute: Nationality of the Country of Location1, Timeframe: T1 + ∆
• Rule applied:
  • EffectThemeAttribute = CauseLocation → Country → Nationality
  • EffectAction = kill
  • EffectTheme = Troops

Prediction Evaluation

Human Group 1:
• Mark events E that can cause other events.

Human Group 2:
• Given: a random sample of events from E, the predictions, and the times of the events
• Search the web and estimate the prediction accuracy

Prediction Accuracy Results

| | Highly certain | Certain |
|---|---|---|
| Algorithm | 0.58 | 0.49 |
| Humans | 0.4 | 0.38 |

Causality Evaluation

Human Group 1:
• Mark events E to be used as the test set for the other two groups and the algorithm.

Human Group 2:
• Given: a random sample of events from E
• State what you think would happen following this event.

Human Group 3:
• Given: algorithm predictions + human (2nd group) predictions
• Evaluate the quality of the predictions

Causality Results

| | [0,1) | [1,2) | [2,3) | [3,4] | Avg. Rank | Avg. Accuracy |
|---|---|---|---|---|---|---|
| Algorithm | 0 | 2 | 19 | 29 | 3.08 | 77% |
| Humans | 0 | 3 | 24 | 23 | 2.86 | 72% |

• The results are statistically significant

| Event | Predicted event (Human) | Predicted event (Pundit) |
|---|---|---|
| Al-Qaida demands hostage exchange | Al-Qaida exchanges hostage | A country will refuse the demand |
| Volcano erupts in Democratic Republic of Congo | Scientists in Republic of Congo investigate lava beds | Thousands of people flee from Congo |
| 7.0 magnitude earthquake strikes Haitian coast | Tsunami in Haiti affects coast | Tsunami-warning is issued |
| 2 Palestinians reportedly shot dead by Israeli troops | Israeli citizens protest against Palestinian leaders | War will be waged |
| Professor of Tehran University killed in bombing | Tehran students remember slain professor in memorial service | Professor funeral will be held |
| Alleged drug kingpin arrested in Mexico | Mafia kills people with guns in town | Kingpin will be sent to prison |
| UK bans Islamist group | Islamist group would adopt another name in the UK | Group will grow |
| China overtakes Germany as world's biggest exporter | German officials suspend tariffs | Wheat price will fall |

Accuracy of Extraction

Extraction evaluation:

| Action | Actor | Object | Instrument | Location | Time |
|---|---|---|---|---|---|
| 93% | 74% | 76% | 79% | 79% | 100% |

Entity ontology matching:

| Actor | Object | Instrument | Location |
|---|---|---|---|
| 84% | 83% | 79% | 89% |

Related work: Causality Information Extraction

Goal: extract causality relations from a text

Techniques:
1. Handcrafted domain-specific patterns [Kaplan and Berry-Rogghe, 1991]
2. Handcrafted linguistic patterns [Garcia 1997], [Khoo, Chan, & Niu 2000], [Girju & Moldovan 2002]
3. Semi-supervised pattern learning approaches based on text features [Blanco, Castell, & Moldovan 2008], [Sil & Huang & Yates 2010]
4. Supervised pattern learning approaches based on text features [Riloff 1996], [Riloff & Jones 1999], [Agichtein & Gravano, 2000; Lin & Pantel, 2001]

Related work: Temporal Information Extraction

Goal: predict the temporal order of events or time expressions described in text

Technique: learn classifiers that predict the temporal order of a pair of events based on predefined features of the pair. [Ling & Weld, 2010; Mani, Schiffman, & Zhang, 2003; Lapata & Lascarides, 2006; Chambers, Wang, & Jurafsky, 2007; Tatu & Srikanth, 2008; Yoshikawa, Riedel, Asahara, & Matsumoto, 2009]

Future work

• Going beyond human tagged examples
• Incorporating time into the equation
• When will correlation mean causality?
• Using other sources than news
• Incorporating real time data (Twitter, Facebook)
• Incorporating numerical data (Stocks, Weather, Forex)
• Can we predict general facts?
• Can a machine predict better than an expert?

Summary

• Canonical event representation
• Machine learning algorithm for events prediction
• Leveraging world knowledge for generalization
• Using text as human tagged examples
• Causality mining from text
• Contribution to machine common-sense understanding

“The best way to predict the future is to invent it” [Alan Kay]
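
The rule shown on the Prediction Rule Generation and Prediction Rule Application slides (EffectAction=kill, EffectTheme=Troops, EffectThemeAttribute taken from the nationality of the country of the cause location) can be illustrated with a small Python sketch. This is not the authors' implementation. The `Event` class, the `apply_bomb_rule` function, and the two lookup tables are hypothetical names introduced here, the tables are a hand-written stand-in for the linked-data ontology, and matching on the literal action `"bomb"` replaces the generalized-event matching the slides describe.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical toy ontology: a stand-in for the linked-data lookups
# (Location -> Country -> Nationality) drawn on the slides.
COUNTRY_OF = {"Kabul": "Afghanistan", "Baghdad": "Iraq"}
NATIONALITY_OF = {"Afghanistan": "Afghan", "Iraq": "Iraqi"}


@dataclass(frozen=True)
class Event:
    """Event with the thematic roles named on the slides (assumed field names)."""
    action: str
    time: datetime
    actor: Optional[str] = None
    theme: Optional[str] = None
    theme_attribute: Optional[str] = None
    instrument: Optional[str] = None
    location: Optional[str] = None


def apply_bomb_rule(cause: Event, delay: timedelta) -> Optional[Event]:
    """Apply the slide's rule to a cause event.

    EffectAction = kill, EffectTheme = Troops,
    EffectThemeAttribute = Nationality(Country(CauseLocation)).
    Returns None when the rule does not match or the ontology has no entry.
    """
    if cause.action != "bomb":
        return None
    country = COUNTRY_OF.get(cause.location)
    nationality = NATIONALITY_OF.get(country)
    if nationality is None:
        return None
    return Event(
        action="kill",
        time=cause.time + delay,  # T1 + delta on the slide
        theme="Troops",
        theme_attribute=nationality,
    )


# Usage: a new bombing event in Baghdad (date order of 1/2/1987 is assumed).
new_cause = Event(
    action="bomb",
    time=datetime(1987, 1, 2, 11, 0),
    actor="NATO",
    theme="army base",
    location="Baghdad",
)
predicted = apply_bomb_rule(new_cause, timedelta(minutes=15))
print(predicted)
```

The 15-minute delay mirrors the gap between the 11:00AM and 11:15AM timeframes in the slide example. A fuller version would first map the input event to its nearest generalized event and only then select which rule to apply.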