Sparse Representations and the Basis Pursuit Algorithm


Sparse & Redundant Signal Representation and its Role in Image Processing

Michael Elad

The Computer Science Department

The Technion – Israel Institute of Technology

Haifa 32000, Israel

Mathematics and Image Analysis, MIA'06

Paris, 18-21 September 2006

Today’s Talk is About

Sparsity and Redundancy

We will try to show today that:

• Sparsity & redundancy can be used to design new/renewed and powerful signal/image processing tools.
• We will present both the theory behind sparsity and redundancy, and how they are deployed in image processing applications.
• This is an overview lecture, describing the recent activity in this field.

Agenda

1. A Visit to Sparseland
Motivating Sparsity & Overcompleteness

2. Problem 1: Transforms & Regularizations
How & why should this work?

3. Problem 2: What About D?
The quest for the origin of signals

4. Problem 3: Applications
Image filling in, denoising, separation, compression, …

Welcome to Sparseland

Generating Signals in Sparseland

[Figure: a fixed N×K dictionary D multiplies a sparse and random vector α of length K to produce the signal x = Dα of length N; this generative model is denoted $\mathcal{M}$.]

• Every column in D (the dictionary) is a prototype signal (an atom).
• The vector α is generated randomly, with few non-zeros in random locations and with random values.
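The generation process above can be sketched in a few lines. This is only an illustration under assumptions that the slide does not fix: Gaussian atoms and Gaussian non-zero values, the sizes 20×30 with 3 atoms borrowed from the synthetic experiment later in the talk, and the hypothetical helper names `make_dictionary` and `sparseland_signal`.

```python
import numpy as np

def make_dictionary(n, k, rng):
    """Random n-by-k dictionary whose columns (atoms) are normalized to unit length."""
    D = rng.standard_normal((n, k))
    return D / np.linalg.norm(D, axis=0)

def sparseland_signal(D, L, rng):
    """Draw alpha with exactly L non-zeros at random locations; return (x, alpha)."""
    k = D.shape[1]
    alpha = np.zeros(k)
    support = rng.choice(k, size=L, replace=False)   # random locations
    alpha[support] = rng.standard_normal(L)          # random values (assumed Gaussian)
    return D @ alpha, alpha

rng = np.random.default_rng(0)
D = make_dictionary(20, 30, rng)
x, alpha = sparseland_signal(D, 3, rng)
```

Every signal produced this way is a linear combination of only 3 of the 30 atoms, which is the property the following slides exploit.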

Sparseland Signals Are Special

[Figure: a sparse vector α is multiplied by D to give x = Dα, a signal of the model $\mathcal{M}$.]

• Simple: every generated signal is built as a linear combination of a few atoms from our dictionary D.
• Rich: a general model; the obtained signals are a special type of mixture-of-Gaussians (or Laplacians).

Transforms in Sparseland ?

• Assume that x is known to emerge from $\mathcal{M}$.
• We desire simplicity, independence, and expressiveness.
• How about "Given x, find the α that generated it in $\mathcal{M}$"?

[Figure: the model $\mathcal{M}$ maps α (length K) through D to x = Dα (length N); the transform T maps x back to α.]

So, In Order to Transform …

We need to solve an under-determined linear system of equations, with D and x known:

$$D\alpha = x$$

• Among all (infinitely many) possible solutions we want the sparsest!
• We will measure sparsity using the L0 norm: $\|\alpha\|_0$.

Measure of Sparsity?

$$\|\alpha\|_p^p = \sum_{j=1}^{K} |\alpha_j|^p$$

[Figure: the scalar penalty $|\alpha_j|^p$ plotted over the range -1 to +1 for several values of p, including p = 1 and p → 0.]

As p → 0 we get a count of the non-zeros in the vector, denoted $\|\alpha\|_0$.
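A small numeric check makes the limit concrete. The vector below is a made-up example and `lp_measure` is a hypothetical helper name; the sketch only evaluates the sum of $|\alpha_j|^p$ for decreasing p and compares it with the number of non-zeros.

```python
import numpy as np

def lp_measure(alpha, p):
    """Sum of |alpha_j|^p over the non-zero entries (zeros contribute nothing for p > 0)."""
    nz = np.abs(alpha[alpha != 0])
    return float(np.sum(nz ** p))

alpha = np.array([0.0, 2.0, 0.0, -0.5, 0.0, 3.0])
for p in (2.0, 1.0, 0.5, 0.01):
    # As p shrinks, the value approaches the number of non-zeros in alpha.
    print(p, lp_measure(alpha, p))
print("non-zeros:", np.count_nonzero(alpha))
```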

Signal’s Transform in Sparseland

[Figure: a sparse and random vector α is multiplied by D to give x = Dα in $\mathcal{M}$; the transform then solves the problem below.]

$$\hat{\alpha} = \arg\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad x = D\alpha$$

4 Major Questions:

• Is α̂ = α? Under which conditions?
• Are there practical ways to get α̂?
• How effective are those ways?
• How would we get D?

Inverse Problems in Sparseland ?

• Assume that x is known to emerge from $\mathcal{M}$.
• Suppose we observe y = Hx + v, a "blurred" and noisy version of x with $\|v\|_2 \le \varepsilon$. How will we recover x?
• How about "find the α that generated the x …" again?

[Figure: x = Dα (length N) is blurred by H and corrupted by the noise v to give y = Hx + v; the inverse operator Q maps y to an estimate α̂ of length K.]

Inverse Problems in Sparseland ?

[Figure: a sparse and random vector α is multiplied by D to give x = Dα in $\mathcal{M}$, which is "blurred" by H and corrupted by the noise v to give y = Hx + v; the estimate is obtained by solving the problem below.]

$$\hat{\alpha} = \arg\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \|y - HD\alpha\|_2 \le \varepsilon$$

4 Major Questions (again!):

• Is α̂ ≈ α? How far can it go?
• Are there practical ways to get α̂?
• How effective are those ways?
• How would we get D?

Back Home … Any Lessons?

Several recent trends worth looking at:

• JPEG to JPEG2000: from (L2-norm) KLT to wavelet and non-linear approximation. Sparsity.
• From Wiener to robust restoration: from L2-norm (Fourier) to L1 (e.g., TV, Beltrami, wavelet shrinkage …). Sparsity.
• From unitary to richer representations: frames, shift-invariance, steerable wavelet, contourlet, curvelet. Overcompleteness.
• Approximation theory: non-linear approximation. Sparsity & Overcompleteness.
• ICA and related models. Independence and Sparsity.

To Summarize so far …

The Sparseland model for signals is very interesting.

Who cares? We do! It is relevant to us.

So, what are the implications? We need to answer the 4 questions posed, and then show that all this works well in practice.


Let's Start with the Transform …

Our dream for now: find the sparsest solution of Dα = x, where D and x are known.

Put formally,

$$P_0: \quad \min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad x = D\alpha$$

Question 1 – Uniqueness?

[Figure: α is multiplied by D to give x = Dα in $\mathcal{M}$; solving $\min_{\alpha} \|\alpha\|_0$ s.t. x = Dα yields α̂.]

Suppose we can solve this exactly. Why should we necessarily get α̂ = α?

It might happen that eventually $\|\hat{\alpha}\|_0 < \|\alpha\|_0$.

Matrix “Spark”

Definition: Given a matrix D, σ = Spark{D} is the smallest number of columns that are linearly dependent.* [Donoho & E. ('02)]

Example:

$$D = \begin{bmatrix} 1 & 0 & 0 & 0 & 1 \\ 0 & 1 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \end{bmatrix}, \qquad \text{Rank} = 4, \quad \text{Spark} = 3$$

* In tensor decomposition, Kruskal defined something similar already in 1989.
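For a matrix as small as the 4×5 example, the Spark can be checked by exhaustive search. The sketch below is illustrative only: the function name `spark`, the tolerance, and the convention of returning k + 1 when no dependent subset exists are assumptions, and the cost grows exponentially with the number of columns.

```python
import itertools
import numpy as np

def spark(D, tol=1e-10):
    """Smallest number of linearly dependent columns of D, found by exhaustive search."""
    k = D.shape[1]
    for size in range(1, k + 1):
        for cols in itertools.combinations(range(k), size):
            if np.linalg.matrix_rank(D[:, list(cols)], tol=tol) < size:
                return size
    return k + 1  # assumed convention: no dependent subset of columns exists

# The 4x5 example matrix: four unit vectors plus the sum of the first two.
D = np.array([[1, 0, 0, 0, 1],
              [0, 1, 0, 0, 1],
              [0, 0, 1, 0, 0],
              [0, 0, 0, 1, 0]], dtype=float)
print(np.linalg.matrix_rank(D), spark(D))
```

Columns 1, 2 and 5 are dependent while every pair of columns is independent, which is why the Spark is smaller than the rank plus one here.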

Uniqueness Rule

Suppose this problem has been solved somehow:

$$P_0: \quad \min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad x = D\alpha$$

Uniqueness [Donoho & E. ('02)]: If we found a representation that satisfies

$$\|\alpha\|_0 < \frac{\sigma}{2}$$

then necessarily it is unique (the sparsest).

This result implies that if $\mathcal{M}$ generates signals using a "sparse enough" α, the solution of the above will find it exactly.

Question 2 – Practical P0 Solver?

[Figure: α is multiplied by D to give x = Dα in $\mathcal{M}$; solving $\min_{\alpha} \|\alpha\|_0$ s.t. x = Dα yields α̂.]

Are there reasonable ways to find α̂?

Matching Pursuit (MP) Mallat & Zhang (1993)

• The MP is a greedy algorithm that finds one atom at a time.
• Step 1: find the one atom that best matches the signal.
• Next steps: given the previously found atoms, find the next one to best fit …
• The Orthogonal MP (OMP) is an improved version that re-evaluates the coefficients after each round.
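The greedy steps listed above can be sketched as follows. This is a minimal OMP illustration, not the authors' implementation: it assumes unit-norm atoms, a known maximum number of atoms L, and a hypothetical function name `omp`.

```python
import numpy as np

def omp(D, x, L, tol=1e-10):
    """Orthogonal Matching Pursuit: greedily pick at most L atoms of D to represent x."""
    alpha = np.zeros(D.shape[1])
    support = []
    residual = x.astype(float).copy()
    for _ in range(L):
        if np.linalg.norm(residual) <= tol:
            break
        # Atom that best matches the current residual (columns assumed unit-norm).
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break
        support.append(j)
        # OMP step: re-evaluate all coefficients on the chosen atoms by least squares.
        coef, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        alpha[:] = 0.0
        alpha[support] = coef
        residual = x - D @ alpha
    return alpha
```

Plain MP would skip the least-squares step and only subtract the newly chosen atom's contribution from the residual; the re-evaluation is what distinguishes OMP.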

Basis Pursuit (BP) Chen, Donoho, & Saunders (1995)

Instead of solving

$$\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad x = D\alpha$$

solve instead

$$\min_{\alpha} \|\alpha\|_1 \quad \text{s.t.} \quad x = D\alpha$$

• The newly defined problem is convex (linear programming).
• Very efficient solvers can be deployed:
  - Interior point methods [Chen, Donoho, & Saunders ('95)],
  - Sequential shrinkage for union of ortho-bases [Bruce et al. ('98)],
  - Iterated shrinkage [Figueiredo & Nowak ('03), Daubechies, Defrise, & De Mol ('04), E. ('05), E., Matalon, & Zibulevsky ('06)].
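As a rough illustration of the iterated-shrinkage family mentioned above, the sketch below runs a basic soft-thresholding iteration. Note the assumptions: it minimizes the penalized form $\frac{1}{2}\|x - D\alpha\|_2^2 + \lambda\|\alpha\|_1$ rather than the equality-constrained problem on the slide, it uses a fixed step size, and the names `soft_threshold` and `ista` are hypothetical; it is not claimed to match any of the cited algorithms in detail.

```python
import numpy as np

def soft_threshold(z, t):
    """Shrink every entry of z toward zero by t."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def ista(D, x, lam, n_iter=500):
    """Iterated shrinkage for 0.5*||x - D a||_2^2 + lam*||a||_1 (penalized L1 form)."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2    # 1 / (largest singular value)^2
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        # Gradient step on the quadratic term, then shrinkage for the L1 term.
        a = soft_threshold(a + step * (D.T @ (x - D @ a)), step * lam)
    return a
```

With a small `lam` the minimizer approaches an L1-minimal solution of x = Dα when one exists; for the exact constrained problem, a linear-programming solver is the more direct route.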

Question 3 – Approx. Quality?

[Figure: α is multiplied by D to give x = Dα in $\mathcal{M}$; solving $\min_{\alpha} \|\alpha\|_0$ s.t. x = Dα yields α̂.]

How effective are MP/BP in finding α̂?

Evaluating the “Spark”

• Compute the Gram matrix $D^T D$ (assume normalized columns).
• The Mutual Coherence M is the largest off-diagonal entry of $D^T D$ in absolute value.
• The Mutual Coherence is a property of the dictionary (just like the "Spark"). In fact, the following relation can be shown:

$$\sigma \ge 1 + \frac{1}{M}$$
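Unlike the Spark, the mutual coherence is cheap to compute. The sketch below is an illustration with a hypothetical function name `mutual_coherence`; it normalizes the columns itself, and the example matrix is the 4×5 one from the Spark slide.

```python
import numpy as np

def mutual_coherence(D):
    """Largest absolute off-diagonal entry of the Gram matrix of the normalized columns."""
    Dn = D / np.linalg.norm(D, axis=0)
    G = np.abs(Dn.T @ Dn)
    np.fill_diagonal(G, 0.0)      # ignore the unit diagonal
    return float(G.max())

D = np.array([[1, 0, 0, 0, 1],
              [0, 1, 0, 0, 1],
              [0, 0, 1, 0, 0],
              [0, 0, 0, 1, 0]], dtype=float)
M = mutual_coherence(D)
spark_lower_bound = 1.0 + 1.0 / M
print(M, spark_lower_bound)
```

For this matrix the bound evaluates to roughly 2.41, which is consistent with the Spark of 3 stated on the earlier slide.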

BP and MP Equivalence

Equivalence [Donoho & E. ('02), Gribonval & Nielsen ('03), Tropp ('03), Temlyakov ('03)]: Given a signal x with a representation x = Dα, and assuming that

$$\|\alpha\|_0 < 0.5\left(1 + \frac{1}{M}\right),$$

BP and MP are guaranteed to find the sparsest solution.

• MP and BP are different in general (hard to say which is better).
• The above result corresponds to the worst case.
• Average performance results are available too, showing much better bounds [Donoho ('04), Candes et al. ('04), Tanner et al. ('05), Tropp et al. ('06), Tropp ('06)].
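To get a feel for how restrictive the worst-case bound is, it can be evaluated for a concrete dictionary. The example below is an assumption made for illustration only: a 16×32 dictionary built from the identity and a normalized Hadamard basis, with a hypothetical helper `equivalence_bound`.

```python
import numpy as np

def equivalence_bound(D):
    """0.5 * (1 + 1/M), where M is the mutual coherence of the normalized columns."""
    Dn = D / np.linalg.norm(D, axis=0)
    G = np.abs(Dn.T @ Dn)
    np.fill_diagonal(G, 0.0)
    return 0.5 * (1.0 + 1.0 / G.max())

# Hypothetical example: identity atoms joined with a 16x16 Hadamard basis.
H = np.array([[1.0]])
for _ in range(4):
    H = np.kron(H, np.array([[1.0, 1.0], [1.0, -1.0]]))
D = np.hstack([np.eye(16), H / 4.0])
bound = equivalence_bound(D)
print(bound)
```

Here the coherence is 1/4, so the worst-case guarantee covers only representations with at most 2 non-zeros, which illustrates why the average-case results cited above matter in practice.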

What About Inverse Problems?

[Figure: a sparse and random vector α is multiplied by D to give x = Dα, which is "blurred" by H and corrupted by the noise v to give y = Hx + v; the estimate α̂ is obtained by solving the problem below.]

$$\hat{\alpha} = \arg\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \|y - HD\alpha\|_2 \le \varepsilon$$

• We had similar questions regarding uniqueness, practical solvers, and their efficiency.
• It turns out that similar answers are applicable here due to several recent works [Donoho, E., & Temlyakov ('04), Tropp ('04), Fuchs ('04), Gribonval et al. ('05)].

To Summarize so far …

The Sparseland model is relevant to us. We can design transforms and priors based on it.

Find the sparsest solution? Use pursuit algorithms.

Why does this work so well? A sequence of works during the past 3-4 years gives theoretical justifications for the behavior of these tools.

What next?
(a) How shall we find D?
(b) Will this work for applications? Which?


Problem Setting

[Figure: each α with $\|\alpha\|_0 \le L$ is multiplied by D to give an example x = Dα in $\mathcal{M}$; the examples are collected as $X = \{x_j\}_{j=1}^{P}$.]

Given these P examples and a fixed-size [N×K] dictionary D, how would we find D?

Uniqueness?

Uniqueness [Aharon, E., & Bruckstein ('05)]: If $\{x_j\}_{j=1}^{P}$ is rich enough* and if

$$L < \frac{\text{Spark}\{D\}}{2}$$

then D is unique.

Comments:

• * "Rich enough": the signals from $\mathcal{M}$ could be clustered into $\binom{K}{L}$ groups that share the same support. At least L+1 examples per group are needed.
• This result is proved constructively, but the number of examples needed to pull this off is huge; we will show a far better method next.
• A parallel result that takes noise into account is yet to be constructed.

Practical Approach – Objective

[Figure: the matrix of examples X is approximated by the product of the dictionary D and the matrix of sparse representations A.]

$$\min_{D, A} \sum_{j=1}^{P} \|D\alpha_j - x_j\|_2^2 \quad \text{s.t.} \quad \forall j, \ \|\alpha_j\|_0 \le L$$

• The objective: each example is a linear combination of atoms from D.
• The constraint: each example has a sparse representation with no more than L atoms.

(N, K, L are assumed known; D has normalized columns.)

Related work: Field & Olshausen ('96), Engan et al. ('99), Lewicki & Sejnowski ('00), Cotter et al. ('03), Gribonval et al. ('04).

K–Means For Clustering

Clustering: an extreme sparse coding

[Figure: iterative loop over the examples X. Initialize D; Sparse Coding stage: nearest neighbor; Dictionary Update stage: column-by-column, by mean computation over the relevant examples.]

The K–SVD Algorithm – General

[Figure: iterative loop over the examples X. Initialize D; Sparse Coding stage: use MP or BP; Dictionary Update stage: column-by-column, by SVD computation.]

[Aharon, E., & Bruckstein ('04)]

K–SVD Sparse Coding Stage

With D fixed, the objective is

$$\min_{A} \sum_{j=1}^{P} \|D\alpha_j - x_j\|_2^2 \quad \text{s.t.} \quad \forall j, \ \|\alpha_j\|_0 \le L$$

For the jth example we solve

$$\min_{\alpha} \|D\alpha - x_j\|_2^2 \quad \text{s.t.} \quad \|\alpha\|_0 \le L$$

Pursuit Problem !!!

K–SVD Dictionary Update Stage

• $G_k$: the examples in $X = \{x_j\}_{j=1}^{P}$ that use the column $d_k$.
• The content of $d_k$ influences only the examples in $G_k$.
• Let us fix all of A apart from the kth column and seek both $d_k$ and the kth column to better fit the residual!

K–SVD Dictionary Update Stage

We should solve:

$$\min_{d_k, \alpha_k} \left\| \alpha_k d_k^T - E \right\|_F^2$$

where E is the residual of the examples in $G_k$ once the contribution of $d_k$ is removed.

$d_k$ is obtained by SVD on the examples' residual in $G_k$.
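The update of a single atom can be sketched as a rank-1 approximation of the residual. The code below is an illustration rather than the authors' implementation, and it assumes the layout X ≈ DA with one example per column of X, so the coefficients of atom k form row k of A (the slide writes the same product in transposed form). The name `ksvd_update_atom` is hypothetical.

```python
import numpy as np

def ksvd_update_atom(D, A, X, k):
    """Update atom k of D and its coefficients in A in place (rank-1 fit of the residual)."""
    users = np.flatnonzero(A[k, :])             # G_k: examples that use atom k
    if users.size == 0:
        return                                   # unused atom: leave it untouched here
    # Residual of those examples with the contribution of atom k removed.
    E = X[:, users] - D @ A[:, users] + np.outer(D[:, k], A[k, users])
    U, s, Vt = np.linalg.svd(E, full_matrices=False)
    D[:, k] = U[:, 0]                            # new unit-norm atom
    A[k, users] = s[0] * Vt[0, :]                # coefficients on the same support
```

Because only the examples in $G_k$ are touched, the supports in A are preserved, and the representation error cannot increase: the previous atom and coefficients are one of the rank-1 candidates that the SVD optimizes over.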

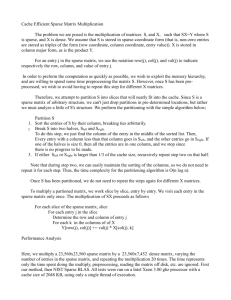

K–SVD: A Synthetic Experiment

• Create a 20×30 random dictionary with normalized columns.
• Generate 2000 signal examples with 3 atoms each and add noise.
• Train a dictionary using the K-SVD and MOD and compare.

[Figure: Results. Relative atoms found (0 to 1) versus iteration (0 to 50), for MOD performance and K-SVD performance.]

To Summarize so far …

The Sparseland model for signals is relevant to us. In order to use it effectively we need to know D.

How can D be found? Use the K-SVD algorithm.

Will it work well? We have shown how to practically train D using the K-SVD.

What next? Show that all the above can be deployed to applications.


Application 1: Image Inpainting

Assume: the signal x has been created by x = Dα0 with very sparse α0:

$$D\alpha_0 = x$$

Missing values in x imply missing rows in this linear system. By removing these rows, we get

$$\tilde{D}\alpha_0 = \tilde{x}$$

Now solve

$$\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \tilde{x} = \tilde{D}\alpha$$

If α0 was sparse enough, it will be the solution of the above problem! Thus, computing Dα0 recovers x perfectly.
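The row-removal argument can be tried on a toy problem. Everything specific below is an assumption made for illustration: the sizes (8×12), the two-atom representation, the choice of missing rows, and the exhaustive solver `sparsest_solution`, which is only feasible for tiny dictionaries and stands in for a pursuit algorithm.

```python
import itertools
import numpy as np

def sparsest_solution(D, x, max_atoms, tol=1e-9):
    """Exhaustive P0 solver for tiny problems: smallest support that reproduces x."""
    k = D.shape[1]
    for size in range(1, max_atoms + 1):
        for support in itertools.combinations(range(k), size):
            cols = list(support)
            coef, *_ = np.linalg.lstsq(D[:, cols], x, rcond=None)
            if np.linalg.norm(D[:, cols] @ coef - x) <= tol:
                alpha = np.zeros(k)
                alpha[cols] = coef
                return alpha
    return None

rng = np.random.default_rng(0)
D = rng.standard_normal((8, 12))
alpha0 = np.zeros(12)
alpha0[[2, 9]] = [1.0, -2.0]
x = D @ alpha0

known = np.array([0, 1, 3, 4, 6, 7])     # samples 2 and 5 of x are "missing"
alpha_hat = sparsest_solution(D[known], x[known], max_atoms=2)
x_filled = D @ alpha_hat                  # the full dictionary fills in the gaps
```

The sparse code is estimated from the surviving rows only, and the missing samples come back when the full dictionary is applied to it.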

Application 1: Side Note

Compressed Sensing leans on the very same principle, leading to alternative sampling theorems.

Assume: the signal x has been created by x = Dα0 with very sparse α0.

Multiply this set of equations by the matrix Q, which reduces the number of rows.

The new, smaller, system of equations is

$$QD\alpha = Qx, \quad \text{i.e.} \quad \tilde{D}\alpha = \tilde{x} \quad \text{with} \quad \tilde{D} = QD, \ \tilde{x} = Qx$$

If α0 was sparse enough, it will be the sparsest solution of the new system; thus, computing Dα0 recovers x perfectly.

Compressed sensing focuses on conditions for this to happen, guaranteeing such recovery.

Application 1: The Practice

Given a noisy image y, we can clean it using the Maximum A-Posteriori Probability (MAP) estimator by [Chen, Donoho, & Saunders ('95)]:

$$\hat{\alpha} = \arg\min_{\alpha} \lambda \|\alpha\|_p^p + \|y - D\alpha\|_2^2, \qquad \hat{x} = D\hat{\alpha}$$

What if some of the pixels in that image are missing (filled with zeros)? Define a mask operator as the diagonal matrix W, and now solve instead

$$\hat{\alpha} = \arg\min_{\alpha} \lambda \|\alpha\|_p^p + \|y - WD\alpha\|_2^2, \qquad \hat{x} = D\hat{\alpha}$$

When handling general images, there is a need to concatenate two dictionaries to get an effective treatment of both texture and cartoon contents. This leads to separation [E., Starck, & Donoho ('05)].

Inpainting Results

Predetermined dictionary: Curvelet (cartoon) + Overlapped DCT (texture)

[Figure: source image and inpainting outcome.]

Inpainting Results

More about this application will be given in Jean-Luc Starck's talk that follows.

Application 2: Image Denoising

Given a noisy image y, we have already mentioned the ability to clean it by solving

$$\hat{\alpha} = \arg\min_{\alpha} \lambda \|\alpha\|_p^p + \|y - D\alpha\|_2^2, \qquad \hat{x} = D\hat{\alpha}$$

The K-SVD cannot train a dictionary for large-support images. How do we go from local treatment of patches to a global prior?

The solution: force shift-invariant sparsity on each patch of size N-by-N (e.g., N=8) in the image, including overlaps.

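Application 2: Image Denoising

$$\hat{\alpha} = \arg\min_{\alpha} \lambda \|\alpha\|_p^p + \|y - D\alpha\|_2^2, \qquad \hat{x} = D\hat{\alpha}$$

Our MAP penalty becomes

$$\hat{x} = \arg\min_{x, \{\alpha_{ij}\}_{ij}} \frac{1}{2}\|x - y\|_2^2 + \mu \sum_{ij} \|R_{ij} x - D\alpha_{ij}\|_2^2 \quad \text{s.t.} \quad \|\alpha_{ij}\|_0 \le L$$

where $R_{ij}$ extracts a patch in the ij location, and the sum over patches together with the sparsity constraint is our prior.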

Application 2: Image Denoising

$$\hat{x} = \arg\min_{x, \{\alpha_{ij}\}_{ij}, D} \frac{1}{2}\|x - y\|_2^2 + \mu \sum_{ij} \|R_{ij} x - D\alpha_{ij}\|_2^2 \quad \text{s.t.} \quad \|\alpha_{ij}\|_0 \le L$$

What about D? The dictionary (and thus the image prior) is trained on the corrupted image itself! This leads to an elegant fusion of the K-SVD and the denoising tasks.

• x = y and D known: compute $\alpha_{ij}$ per patch,

$$\alpha_{ij} = \arg\min_{\alpha} \|R_{ij} x - D\alpha\|_2^2 \quad \text{s.t.} \quad \|\alpha\|_0 \le L,$$

using the matching pursuit.

• x and $\alpha_{ij}$ known: compute D to minimize

$$\min_{D} \sum_{ij} \|R_{ij} x - D\alpha_{ij}\|_2^2$$

using SVD, updating one column at a time (K-SVD).

• D and $\alpha_{ij}$ known: compute x by

$$x = \Big(I + \mu \sum_{ij} R_{ij}^T R_{ij}\Big)^{-1} \Big(y + \mu \sum_{ij} R_{ij}^T D\alpha_{ij}\Big)$$

(constant factors absorbed into μ), which is a simple averaging of shifted patches.
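The last step, computing x with D and the patch codes fixed, reduces to a weighted average because $\sum R_{ij}^T R_{ij}$ is diagonal. The sketch below shows this for a 1-D signal with overlapping patches, which is a simplification assumed here for brevity; the weight `mu` plays the role of the patch-term weight, and `average_patches` is a hypothetical name.

```python
import numpy as np

def average_patches(y, patch_estimates, n, mu):
    """x = (I + mu*sum R^T R)^(-1) (y + mu*sum R^T p_i) for 1-D overlapping patches.

    patch_estimates[i] is the cleaned version (D @ alpha_i) of the patch y[i:i+n].
    """
    numer = y.astype(float).copy()
    denom = np.ones_like(numer)
    for i, p in enumerate(patch_estimates):
        numer[i:i + n] += mu * p         # R_i^T puts the patch back in place
        denom[i:i + n] += mu             # diagonal of R_i^T R_i: coverage count
    return numer / denom                 # the matrix to invert is diagonal

y = np.arange(6.0)
patches = [y[i:i + 3].copy() for i in range(4)]   # "estimates" equal to the input
x_hat = average_patches(y, patches, n=3, mu=0.5)
```

Each output sample is the average of the noisy sample and every cleaned patch covering it, so no linear system has to be solved explicitly.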

Application 2: The Algorithm

1. Sparse-code every patch of 8-by-8 pixels.
2. Update the dictionary D based on the above codes.
3. Compute the output image x.

The computational cost of this algorithm is mostly due to the OMP steps done on each image patch: O(N²×L×K×Iterations) per pixel.

Denoising Results

The results of this algorithm, tested over a corpus of images and with various noise powers, compete favorably with the state of the art, the GSM + steerable wavelets denoising algorithm by [Portilla, Strela, Wainwright, & Simoncelli ('03)], giving ~1dB better results on average.

[Figure: source image; noisy image (σ = 20); result, 30.829dB; the initial dictionary (overcomplete DCT, 64×256); the dictionary obtained after 10 iterations.]

Application 3: Compression

The problem: compressing photo-ID images.

• General-purpose methods (JPEG, JPEG2000) do not take into account the specific family.
• By adapting to the image content (PCA/K-SVD), better results could be obtained.
• For these techniques to operate well, training dictionaries locally (per patch) using a training set of images is required.
• In PCA, only the (quantized) coefficients are stored, whereas the K-SVD requires storage of the indices as well.
• Geometric alignment of the image is very helpful and should be done.

Application 3: The Algorithm

On the training set (2500 images):

• Detect main features and warp the images to a common reference (20 parameters).
• Divide the image into disjoint 15-by-15 patches. For each, compute the mean and a dictionary.
• For each patch, find the operating parameters (number of atoms L, quantization Q).

On the test image:

• Warp, remove the mean from each patch, sparse code using L atoms, apply Q, and dewarp.

Compression Results

Results for 820 bytes per file:

11.99   10.49   8.81   5.56
10.83    8.92   7.89   4.82
10.93    8.71   8.61   5.58

Compression Results

Results for 550 bytes per file:

15.81   13.89   10.66   6.60
14.67   12.41    9.44   5.49
15.30   12.57   10.27   6.36

Compression Results

Results for 400 bytes per file:

?   18.62   12.30   7.61
?   16.12   11.38   6.31
?   16.81   12.54   7.20

Today We Have Discussed

1. A Visit to Sparseland
Motivating Sparsity & Overcompleteness

2. Problem 1: Transforms & Regularizations
How & why should this work?

3. Problem 2: What About D?
The quest for the origin of signals

4. Problem 3: Applications
Image filling in, denoising, separation, compression, …

Summary

Sparsity and Overcompleteness are important ideas. They can be used in designing better tools in signal/image processing.

There are difficulties in using them!

This is an on-going work:
• Deepening our theoretical understanding,
• Speedup of pursuit methods,
• Training the dictionary,
• Demonstrating applications,
• …

The dream? Future transforms and regularizations should be data-driven, non-linear, overcomplete, and promoting sparsity.

Thank You for Your Time

More information, including these slides, can be found on my web page:

http://www.cs.technion.ac.il/~elad

THE END !!