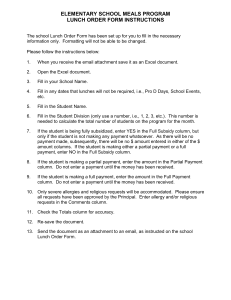


Cache-Efficient Sparse Matrix Multiplication

The problem we are posed is the multiplication of matrices S and X such that SX = Y, where S is sparse and X is dense. We assume that S is stored in sparse-coordinate form: non-zero entries are stored as triples of the form (row coordinate, column coordinate, entry value). X is stored in column-major form, as is the product Y. For an entry j in the sparse matrix, we use the notation row(j), col(j), and val(j) to indicate, respectively, the row, column, and value of entry j.

To perform the computation as quickly as possible, we want to exploit the memory hierarchy, and we are willing to spend some time preprocessing the matrix S. However, once S has been preprocessed, we want to avoid repeating this step for different X matrices. Therefore, we partition S into slices that fit neatly into the cache. Since S is a sparse matrix of arbitrary structure, we can't simply place partitions at predetermined locations. Instead, we must analyze a little of S's structure. We perform the partitioning with the simple algorithm below.

Partition S

1. Sort the entries of S by their column, breaking ties arbitrarily.
2. Break S into two halves, Sleft and Sright. To do this, find the column of the entry in the middle of the sorted list. Every entry with a column less than that column goes in Sleft, and the other entries go in Sright. If one of the halves has size 0, then all the entries are in one column, and we stop, since there is no progress to be made.
3. If either Sleft or Sright is larger than 1/3 of the cache size, recursively repeat step 2 on that half.

Note that during step 2 we can easily maintain the sorted order of the entries, so we do not need to repeat the sort at each step. Thus, the time complexity of the partitioning algorithm is O(n log n), where n is the number of non-zero entries in S. Once S has been partitioned, we do not need to repeat these steps for different X matrices.
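The partitioning steps above can be sketched in Python as follows. This is an illustrative sketch, not the author's implementation. The function name `partition`, the `Entry` tuple type, and the `max_entries` parameter are our own assumptions. `max_entries` stands in for "1/3 of the cache size" expressed as a number of entries. As written, step 2 leaves Sleft empty whenever the middle entry falls in the smallest column of the part, not only when a single column remains. In that case the sketch splits just after that column instead. This is a deviation we added so that multi-column parts can still be divided.

```python
from typing import List, Tuple

# One non-zero entry of S in sparse-coordinate form: (row, column, value).
Entry = Tuple[int, int, float]


def partition(entries: List[Entry], max_entries: int) -> List[List[Entry]]:
    """Hypothetical helper: split S into column slices of at most max_entries
    entries, except where a single column alone is larger than that."""
    # Step 1: sort once by column. Python's sort is stable, so ties keep
    # their input order (one valid way of breaking ties arbitrarily).
    ordered = sorted(entries, key=lambda e: e[1])
    slices: List[List[Entry]] = []

    def split(part: List[Entry]) -> None:
        # Step 3: a part that already fits becomes a slice.
        if len(part) <= max_entries:
            slices.append(part)
            return
        # Step 2: entries with a column below the middle entry's column go left.
        mid_col = part[len(part) // 2][1]
        cut = next(i for i, e in enumerate(part) if e[1] >= mid_col)
        if cut == 0:
            # Assumed deviation: the middle entry is in the smallest column,
            # so cut just after that column instead of before it.
            cut = next((i for i, e in enumerate(part) if e[1] > mid_col), len(part))
        if cut == len(part):
            # Every entry shares one column: no progress possible, so stop.
            slices.append(part)
            return
        # The sorted order is preserved in both halves, so no re-sort is needed.
        split(part[:cut])
        split(part[cut:])

    if ordered:
        split(ordered)
    return slices
```

To choose `max_entries`, one could assume that each triple occupies 16 bytes (two 4-byte indices and an 8-byte value). For the 2048 KB cache of the test machine, that gives 2048 * 1024 // 3 // 16 = 43,690 entries per slice. The slices come out in increasing column order, and their concatenation is exactly the sorted entry list.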
To multiply a partitioned matrix, we work slice by slice, entry by entry, visiting each entry in the sparse matrix only once. The multiplication SX proceeds as follows:

- For each slice of the sparse matrix:
  - For each entry j in the slice:
    - Determine the row and column of entry j.
    - For each column k of X: Y[row(j), k] += val(j) * X[col(j), k]

Performance Analysis

Here, we multiply a 23,560 x 23,560 sparse matrix by a 23,560 x 7,452 dense matrix. We vary the number of entries in the sparse matrix and repeat the multiplication 20 times. The reported times cover only the multiplication itself. Preprocessing, reading the matrix off disk, and similar steps are excluded. Results are shown first for our method, then for NIST Sparse BLAS. All tests were run on an Intel Xeon 3.00 GHz processor with a 2048 KB cache, using a single thread of execution.

Our Method

| Entries (thousands) | Time (seconds) |
|---|---|
| 10 | 17 |
| 20 | 28 |
| 30 | 38 |
| 40 | 50 |
| 50 | 63 |
| 60 | 68 |
| 70 | 84 |
| 90 | 107 |
| 100 | 118 |

NIST Sparse BLAS

| Entries (thousands) | Time (seconds) |
|---|---|
| 10 | 56 |
| 20 | 71 |
| 30 | 82 |
| 40 | 95 |
| 50 | 109 |
| 60 | 118 |
| 70 | 135 |
| 80 | 143 |
| 90 | 158 |
| 100 | 168 |

Here we take the Kronecker product of two tri-banded matrices with a diagonal of the given size. This illustrates performance on a non-random sparsity pattern.

Our Method

| Diagonal size | Time (seconds) |
|---|---|
| 50 | 1 |
| 55 | 2 |
| 60 | 2 |
| 65 | 4 |
| 70 | 5 |
| 75 | 7 |

NIST Sparse BLAS

| Diagonal size | Time (seconds) |
|---|---|
| 50 | 2 |
| 55 | 3 |
| 60 | 5 |
| 65 | 7 |
| 70 | 9 |
| 75 | 12 |
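The slice-by-slice multiplication described earlier can be sketched in Python as follows. This is a minimal, unoptimized illustration of the loop nest, not the code used for the timings. The function name `multiply`, the flat-list storage of X and Y, and the parameter names `s_rows`, `x_rows` and `x_cols` are our own assumptions. The column-major indexing follows the storage format stated in the text. Each entry j contributes val(j) * X[col(j), k] to Y[row(j), k] for every column k.

```python
from typing import List, Sequence, Tuple

# One non-zero entry of S in sparse-coordinate form: (row, column, value).
Entry = Tuple[int, int, float]


def multiply(slices: Sequence[Sequence[Entry]], x: Sequence[float],
             s_rows: int, x_rows: int, x_cols: int) -> List[float]:
    """Hypothetical helper: return Y = S X, with X and Y column-major flat lists.

    X has x_rows rows (equal to the column count of S) and x_cols columns.
    Element (i, k) of X is x[k * x_rows + i]; element (r, k) of Y is
    y[k * s_rows + r].
    """
    y = [0.0] * (s_rows * x_cols)
    for part in slices:                  # one cache-sized slice of S at a time
        for r, c, v in part:             # each entry of S is visited exactly once
            for k in range(x_cols):      # every column of X
                # Y[row(j), k] += val(j) * X[col(j), k]
                y[k * s_rows + r] += v * x[k * x_rows + c]
    return y
```

As a small check, take S = [[1, 0, 2], [0, 3, 0]], split as `[[(0, 0, 1.0), (1, 1, 3.0)], [(0, 2, 2.0)]]`, and X = [[1, 4], [2, 5], [3, 6]], stored column-major as `[1.0, 2.0, 3.0, 4.0, 5.0, 6.0]`. Then `multiply(slices, x, 2, 3, 2)` returns `[7.0, 6.0, 16.0, 15.0]`, that is, Y = [[7, 16], [6, 15]].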