Multithreaded Producer

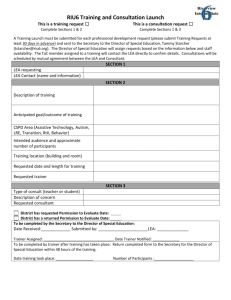

advertisement

High-performance Multithreaded Producer-consumer Designs – from Theory to Practice

Bill Scherer (University of Rochester)

Doug Lea (SUNY Oswego)

Rochester Java Users’ Group

April 11, 2006

java.util.concurrent

• General purpose toolkit for developing concurrent applications

– No more "reinventing the wheel"!

• Goals: "Something for Everyone!"

– Make some problems trivial to solve by everyone

  – Develop thread-safe classes, such as servlets, built on concurrent building blocks like ConcurrentHashMap

– Make some problems easier to solve by concurrent programmers

  – Develop concurrent applications using thread pools, barriers, latches, and blocking queues

– Make some problems possible to solve by concurrency experts

  – Develop custom locking classes, lock-free algorithms


Overview of j.u.c

• Executors

– Executor

– ExecutorService

– ScheduledExecutorService

– Callable

– Future

– ScheduledFuture

– Delayed

– CompletionService

– ThreadPoolExecutor

– ScheduledThreadPoolExecutor

– AbstractExecutorService

– Executors

– FutureTask

– ExecutorCompletionService

• Queues

– BlockingQueue

– ConcurrentLinkedQueue

– LinkedBlockingQueue

– ArrayBlockingQueue

– SynchronousQueue

– PriorityBlockingQueue

– DelayQueue

• Concurrent Collections

– ConcurrentMap

– ConcurrentHashMap

– CopyOnWriteArray{List,Set}

• Synchronizers

– CountDownLatch

– Semaphore

– Exchanger

– CyclicBarrier

• Locks: java.util.concurrent.locks

– Lock

– Condition

– ReadWriteLock

– AbstractQueuedSynchronizer

– LockSupport

– ReentrantLock

– ReentrantReadWriteLock

• Atomics: java.util.concurrent.atomic

– Atomic[Type]

– Atomic[Type]Array

– Atomic[Type]FieldUpdater

– Atomic{Markable,Stamped}Reference

Key Functional Groups

• Executors, Thread pools and Futures

– Execution frameworks for asynchronous tasking

• Concurrent Collections:

– Queues, blocking queues, concurrent hash map, …

– Data structures designed for concurrent environments

• Locks and Conditions

– More flexible synchronization control

– Read/write locks

• Synchronizers: Semaphore, Latch, Barrier

– Ready-made tools for thread coordination

• Atomic variables

– The key to writing lock-free algorithms


Part I: Theory


Synchronous Queues

• Synchronized communication channels

• Producer awaits explicit ACK from consumer

• Theory and practice of concurrency

– Implementation of language synchronization primitives (CSP handoff, Ada rendezvous)

– Message-passing software

– java.util.concurrent.ThreadPoolExecutor
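In Java this kind of channel is available as java.util.concurrent.SynchronousQueue, which has no internal capacity: put returns only after a consumer has taken the item. The small demo below (HandoffDemo and transfer are illustrative names, not from the talk) hands items from a producer thread to the calling thread one at a time.

```java
import java.util.concurrent.SynchronousQueue;

class HandoffDemo {
    /** Hands each item from a producer thread to the calling thread through a synchronous channel. */
    static int[] transfer(int... items) throws InterruptedException {
        SynchronousQueue<Integer> channel = new SynchronousQueue<Integer>(); // no internal capacity
        Thread producer = new Thread(() -> {
            try {
                for (int x : items)
                    channel.put(x);          // blocks until a consumer has taken x
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int[] received = new int[items.length];
        for (int i = 0; i < items.length; i++)
            received[i] = channel.take();    // each take completes exactly one producer put
        producer.join();
        return received;
    }
}
```

Because the queue never buffers anything, the producer can never run ahead of the consumer; that is the "explicit ACK" property in library form.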


Hanson’s Synch. Queue

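import java.util.concurrent.Semaphore;

class HansonSQ<E> {
    private E item;
    private final Semaphore sync = new Semaphore(0); // consumer's ACK back to the producer
    private final Semaphore send = new Semaphore(1); // admits one producer at a time
    private final Semaphore recv = new Semaphore(0); // signals that an item is available

    E take() throws InterruptedException {
        recv.acquire();      // wait for a producer to deposit an item
        E d = item;
        sync.release();      // acknowledge receipt to the waiting producer
        send.release();      // let the next producer in
        return d;
    }

    void put(E d) throws InterruptedException {
        send.acquire();
        item = d;
        recv.release();      // wake a consumer
        sync.acquire();      // block until the consumer has taken the item
    }
}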


Hanson’s Queue: Limitations

• High overhead

– 3 semaphore operations for put and take

– Interleaved handshaking – likely to block

• No obvious path to timeout support

– Needed e.g. for j.u.c.ThreadPoolExecutor adaptive thread pool

  – Producer adds a worker or runs task itself

  – Consumer terminates if work unavailable
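SynchronousQueue's non-blocking offer and timed poll are enough to express the adaptive policy described above. This is a simplified, hypothetical sketch (AdaptiveHandoff, submit, and workerLoop are illustrative names), not ThreadPoolExecutor's actual code; here the producer runs the task itself rather than adding a worker.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.TimeUnit;

class AdaptiveHandoff {
    private final SynchronousQueue<Runnable> queue = new SynchronousQueue<Runnable>();

    /** Producer side: hand off if a worker is already waiting, otherwise run the task itself. */
    boolean submit(Runnable task) {
        if (queue.offer(task))      // succeeds only if a consumer is blocked in poll/take
            return true;
        task.run();                 // no idle worker: the producer runs the task
        return false;
    }

    /** Consumer side: process tasks until none arrives within the keep-alive time. */
    void workerLoop(long keepAliveMillis) throws InterruptedException {
        Runnable task;
        while ((task = queue.poll(keepAliveMillis, TimeUnit.MILLISECONDS)) != null)
            task.run();
        // poll timed out: work is unavailable, so this worker terminates
    }
}
```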


Java 5 Version

• Fastest known previous implementation

• Optional FIFO fairness

– Unfair mode: stack-based, for better locality

– Big performance penalty for fair mode

• Global lock covers two queues (stacks for unfair mode)

– One each for awaiting consumers and producers

– At least one is always empty


Remainder of Part I

• Introduction

• Nonblocking Synchronization

– Why use?

– Nonblocking partial methods

• Synchronous Queue Design

• Conclusions


Nonblocking Synchronization

• Resilient to failure or delay of any thread

• Optimistic update pattern:

1) Set-up operation (invisible to other threads)

2) Effect all at once (atomic)

3) Clean-up if needed (can be done by any thread)

• Atomic compare-and-swap (CAS)

bool CAS(word *ptr, word e, word n) {  // the whole body executes atomically
    if (*ptr != e) return false;
    *ptr = n; return true;
}
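In Java, CAS is exposed through the atomic classes, for example AtomicInteger.compareAndSet(expect, update). A minimal sketch of the optimistic update pattern (OptimisticUpdate is an illustrative name) assumes the new value is computed purely from the old one, so a failed CAS can simply retry.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntUnaryOperator;

class OptimisticUpdate {
    /** Applies f atomically to cell using a CAS retry loop; returns the new value. */
    static int update(AtomicInteger cell, IntUnaryOperator f) {
        while (true) {
            int current = cell.get();                  // 1) set-up: read and compute privately
            int next = f.applyAsInt(current);
            if (cell.compareAndSet(current, next))     // 2) effect all at once (atomic)
                return next;
            // CAS failed: another thread changed cell in between, so retry
        }
    }
}
```

Step 3 has no counterpart here because a single-word update leaves nothing half-done; the linked structures later in the talk do need it. Since Java 8, AtomicInteger.updateAndGet packages the same loop.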


Why Use Nonblocking Synch?

• Locks

– Performance (convoying, intolerance of page faults and preemption)

– Semantic (deadlock, priority inversion)

– Conceptual (scalability vs. complexity)

• Transactional memory

– Needs to support the general case

– High overheads (currently)


[Figure: programmer effort versus system performance for Coarse Locks, Fine Locks, Software TM (STM), HW TM, Ad Hoc NBS, and Canned NBS]

Linearizability [HW90]

• Gold standard for correctness

• Linearization point: the instant at which each operation appears to take place

[Figure: time flows left to right; T1: Enqueue(a), T2: Enqueue(b), T3: Dequeue(a), T4: Dequeue(b)]

[Figure: the same operations with a different outcome; T1: Enqueue(a), T2: Enqueue(b), T3: Dequeue(b!), T4: Dequeue(a!)]

Partial Operations

• Totalized approach: return failure

• Repeat until data retrieved (“try-in-a-loop”)

– Heavy contention on data structures

– Output depends on which thread retries first

[Figure: T1: Dequeue(b!), T3: Enqueue(a), T4: Enqueue(b), T2: Dequeue(a!)]
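The try-in-a-loop style can be sketched with ConcurrentLinkedQueue, whose poll returns null on an empty queue instead of blocking. This illustrates the pattern being criticized rather than a recommended design; SpinningConsumer is an illustrative name.

```java
import java.util.concurrent.ConcurrentLinkedQueue;

class SpinningConsumer {
    /** A total dequeue (poll) wrapped in a retry loop: spins until an element appears. */
    static <E> E dequeueSpinning(ConcurrentLinkedQueue<E> q) {
        E e;
        while ((e = q.poll()) == null)  // poll() is total: it "fails" by returning null
            Thread.yield();            // every retry touches the shared queue again
        return e;
    }
}
```

With several such consumers, which one receives a given element depends on which happens to retry first, not on which arrived first; that is the ordering problem this slide describes.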


Dual Linearizability

Break partial methods into two first-class halves: a pre-blocking reservation and a post-blocking follow-up

[Figure: T1: Dequeue(a), T3: Enqueue(a), T4: Enqueue(b), T2: Dequeue(b)]
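The reservation/follow-up split can be illustrated without any nonblocking machinery. The lock-based sketch below is hypothetical (LockedDualQueue, reserve, and the use of CompletableFuture as a reservation are illustrative choices, not the talk's algorithm): reserve is the pre-blocking half, which either takes data or enqueues a reservation, and waiting on the returned future is the post-blocking follow-up. Reservations are fulfilled in FIFO order; elements must be non-null.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;

class LockedDualQueue<E> {
    private final Deque<E> data = new ArrayDeque<>();
    private final Deque<CompletableFuture<E>> reservations = new ArrayDeque<>();

    /** Enqueue: satisfy the oldest reservation if there is one, else store the datum. */
    public synchronized void enqueue(E e) {
        CompletableFuture<E> r = reservations.pollFirst();
        if (r != null) r.complete(e);
        else data.addLast(e);
    }

    /** Pre-blocking half of dequeue: take data now, or enqueue a reservation. */
    public synchronized CompletableFuture<E> reserve() {
        E e = data.pollFirst();
        if (e != null) return CompletableFuture.completedFuture(e);
        CompletableFuture<E> r = new CompletableFuture<>();
        reservations.addLast(r);
        return r;
    }

    /** Full dequeue: reserve, then block (outside the lock) until fulfilled. */
    public E dequeue() throws InterruptedException, ExecutionException {
        return reserve().get();
    }
}
```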


Next Up: Synchronous Queues

• Introduction

• Nonblocking Synchronization

• Synchronous Queue Design

– Implementation

– Performance

• Conclusions


Algorithmic Genealogy

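Fair mode: M&S Queue (source algorithm) → Dual Queue (adds consumer blocking) → Fair SQ (adds producer blocking, timeout, cleanup)

Unfair mode: Treiber's Stack (source algorithm) → Dual Stack (adds consumer blocking) → Unfair SQ (adds producer blocking, timeout, cleanup)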


M&S Queue: Enqueue

[Figure: M&S queue enqueue in two steps (E1, E2) on a queue consisting of a dummy node followed by data nodes]

M&S Queue: Dequeue

[Figure: M&S queue dequeue in two steps (D1, D2): the old dummy node is dropped and the first data node becomes the new dummy]
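For reference, the M&S queue can be sketched in Java with AtomicReference. This is a simplified rendering of the published algorithm (MSQueue and its method names are illustrative) that relies on garbage collection instead of the original's memory management. Enqueue links a node after the last node and then swings the tail; dequeue swings the head, so the first data node becomes the new dummy.

```java
import java.util.concurrent.atomic.AtomicReference;

class MSQueue<E> {
    private static final class Node<E> {
        final E item;
        final AtomicReference<Node<E>> next = new AtomicReference<Node<E>>();
        Node(E item) { this.item = item; }
    }

    private final AtomicReference<Node<E>> head, tail;

    MSQueue() {
        Node<E> dummy = new Node<E>(null);
        head = new AtomicReference<Node<E>>(dummy);
        tail = new AtomicReference<Node<E>>(dummy);
    }

    void enqueue(E item) {
        Node<E> node = new Node<E>(item);
        while (true) {
            Node<E> last = tail.get();
            Node<E> next = last.next.get();
            if (last != tail.get()) continue;               // inconsistent snapshot: retry
            if (next == null) {
                if (last.next.compareAndSet(null, node)) {  // link the new node after last
                    tail.compareAndSet(last, node);         // swing tail; failure is harmless
                    return;
                }
            } else {
                tail.compareAndSet(last, next);             // tail is lagging: help advance it
            }
        }
    }

    /** Returns the oldest item, or null if the queue is empty (a total, non-blocking dequeue). */
    E dequeue() {
        while (true) {
            Node<E> first = head.get();                     // current dummy
            Node<E> last = tail.get();
            Node<E> next = first.next.get();
            if (first != head.get()) continue;
            if (first == last) {
                if (next == null) return null;              // empty
                tail.compareAndSet(last, next);             // tail is lagging: help advance it
            } else if (head.compareAndSet(first, next)) {
                return next.item;                           // next is now the dummy
            }
        }
    }
}
```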

The Dual Queue

• Separate data and request nodes (flag bit)

– The queue always holds either all data or all requests

• Same behavior as the M&S queue for data

• Reservations are antisymmetric to data

– Dequeue enqueues a reservation node

– Enqueue satisfies the oldest reservation

• Tricky consistency checks needed

• Dummy node can be a datum or a reservation

– Extra state to watch out for (more corner cases)


Dual Queue: Enq. (Requests)

[Figure, three frames: a queue of reservation nodes behind a dummy node; in the second frame an item fills the first reservation; in the third, the old dummy is dropped and the fulfilled reservation becomes the new dummy]

E1: Read the dummy's next pointer

E2: CAS the reservation's data pointer from nil to the satisfying data

E3: Update the head pointer
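Steps E1 to E3 can be sketched in Java. The class below is a hypothetical simplification (ReservationQueue, reserve, fulfill, and await are illustrative names): it holds only reservation nodes, omits the data/request flag bit, timeouts, and cancellation, and lets consumers spin instead of parking. Items must be non-null, since null marks an unfulfilled reservation.

```java
import java.util.concurrent.atomic.AtomicReference;

class ReservationQueue<E> {
    static final class Node<E> {
        final AtomicReference<E> item = new AtomicReference<E>();         // nil until fulfilled
        final AtomicReference<Node<E>> next = new AtomicReference<Node<E>>();
    }

    private final AtomicReference<Node<E>> head, tail;

    ReservationQueue() {
        Node<E> dummy = new Node<E>();
        head = new AtomicReference<Node<E>>(dummy);
        tail = new AtomicReference<Node<E>>(dummy);
    }

    /** Consumer side: append a reservation node (M&S-style) and return it. */
    Node<E> reserve() {
        Node<E> node = new Node<E>();
        while (true) {
            Node<E> last = tail.get();
            Node<E> next = last.next.get();
            if (next != null) { tail.compareAndSet(last, next); continue; } // help lagging tail
            if (last.next.compareAndSet(null, node)) {
                tail.compareAndSet(last, node);
                return node;
            }
        }
    }

    /** Producer side: try to satisfy the oldest reservation with e; false if none is waiting. */
    boolean fulfill(E e) {
        while (true) {
            Node<E> dummy = head.get();
            Node<E> first = dummy.next.get();                  // E1: read dummy's next pointer
            if (first == null) return false;
            boolean won = first.item.compareAndSet(null, e);   // E2: CAS data pointer nil -> e
            head.compareAndSet(dummy, first);                  // E3: any thread may advance head
            if (won) return true;
            // another producer fulfilled `first` first; retry with the next reservation
        }
    }

    /** Consumer waits for its reservation (spinning here, for brevity). */
    static <E> E await(Node<E> r) {
        E e;
        while ((e = r.item.get()) == null) Thread.yield();
        return e;
    }
}
```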

Synchronous Queue

• Implementation extends dual queue

• Consumers already block for producers

– add blocking for the “other direction”

• Add item ptr to data nodes

– Consumers CAS from nil to “satisfying request”

– Once non-nil, any thread can update head ptr

– Timeout support

  – Producer CAS from nil back to self

• Node reclaimed when it reaches head of queue: seen as fulfilled node


The Test Environments

• SunFire 6800

– 16 UltraSparc III processors @ 1.2 GHz

• SunFire V40z

– 4 AMD Opteron processors @ 2.4 GHz

• Java SE 5.0 HotSpot JVM

• Microbenchmark performance tests


Synchronous Queue Performance

[Figure: Producer-Consumer Handoff on the 16-processor SunFire 6800: ns/transfer (0 to 60000) vs. number of producer-consumer pairs (1 to 64) for SynchronousQueue, SynchronousQueue(fair), SynchronousQueue1.6, SynchronousQueue1.6(fair), and HansonSQ; annotated "14X difference"]

ThreadPoolExecutor Impact

[Figure: ThreadPoolExecutor [SPARC] on the 16-processor SunFire 6800: ns/task (0 to 60000) vs. threads (1 to 64) for SynchronousQueue, SynchronousQueue(fair), SynchronousQueue1.6, and SynchronousQueue1.6(fair); annotated "10X difference"]

Next Up: Conclusions

• Introduction

• Nonblocking Synchronization

• Synchronous Queue Design

• Conclusions

Conclusions

• Low-overhead synchronous queues

• Optional FIFO fairness

– Fair mode extends dual queue

– Unfair mode extends dual stack

– No performance penalty

• Up to 14x performance gain in SQ

– Translates to 10x gain for TPE


Future Work: Types of Scalability

A. Constant overhead for operations, irrespective of the number of threads

– "Low-level": doesn't hurt scalability of apps

– Spin locks (e.g. MCS), SQ

B. Overall throughput proportional to the number of concurrent threads

– "High-level": data structure itself

– Can be obtained via elimination [ST95]

– Stacks [HSY04]; queues [MNSS05]; exchangers

Part II: Practice

• Thread Creation Patterns

– Loops, oneway messages, workers & pools

• Executor framework

• Advanced Topics

– AbstractQueuedSynchronizer


Autonomous Loops

• Simple non-reactive active objects contain a run loop of the form:

public void run() {
    while (!Thread.interrupted())
        doSomething();
}

• Normally established with a constructor containing:

new Thread(this).start();

– Or by a specific start method

– Perhaps also setting priority and daemon status

• Normally also support other methods called from other threads

– Requires standard safety measures

• Common applications

– Animations, simulations, message buffer consumers, polling daemons that periodically sense the state of the world
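A minimal autonomous-loop object might look like the following; Ticker is a hypothetical example whose loop body just counts iterations. It uses a separate start method rather than starting the thread from the constructor, and stops by interrupting its own thread, matching the loop's Thread.interrupted() test.

```java
import java.util.concurrent.atomic.AtomicInteger;

class Ticker implements Runnable {
    private final AtomicInteger ticks = new AtomicInteger();
    private final Thread thread = new Thread(this, "ticker");  // created here, started later

    public void start() { thread.start(); }

    /** Stops the loop by interrupting its thread, then waits for it to finish. */
    public void stop() throws InterruptedException {
        thread.interrupt();
        thread.join();
    }

    /** Safe to call from any thread: the counter is atomic. */
    public int ticks() { return ticks.get(); }

    public void run() {
        while (!Thread.interrupted()) {
            ticks.incrementAndGet();                 // stands in for doSomething()
            try {
                Thread.sleep(1);
            } catch (InterruptedException e) {
                return;                              // sleep cleared the flag; exit the loop
            }
        }
    }
}
```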


Thread Patterns for Oneway Messages


Thread-Per-Message Web Server

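import java.io.IOException;
import java.net.ServerSocket;
import java.net.Socket;

class UnstableWebServer {
    public static void main(String[] args) throws IOException {
        ServerSocket socket = new ServerSocket(80);
        while (true) {
            final Socket connection = socket.accept();
            Runnable r = new Runnable() {
                public void run() {
                    handleRequest(connection);
                }
            };
            new Thread(r).start();   // one new thread per request
        }
    }

    static void handleRequest(Socket s) { /* application-specific, not shown */ }
}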

• Potential resource exhaustion unless connection rate is limited

– Threads aren’t free!

– Don’t do this!


Thread-Per-Object via Worker Threads

• Establish a producer-consumer chain

– Producer

  – Reactive method just places message in a channel

  – Channel might be a buffer, queue, stream, etc.

  – Message might be a Runnable command, event, etc.

– Consumer

  – Host contains an autonomous loop thread of the form:

while (!Thread.interrupted()) {
    m = channel.take();
    process(m);
}

• Common variants

– Pools

  – Use more than one worker thread

– Listeners

  – Separate producer and consumer in different objects

Web Server Using Worker Thread

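import java.io.IOException;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class WebServer {
    BlockingQueue<Socket> queue = new LinkedBlockingQueue<Socket>();

    class Worker extends Thread {
        public void run() {
            try {
                while (!Thread.interrupted()) {
                    Socket s = queue.take();
                    handleRequest(s);
                }
            } catch (InterruptedException e) {
                // interrupted while waiting in take(): let the worker exit
            }
        }
    }

    public void start() throws IOException, InterruptedException {
        new Worker().start();
        ServerSocket socket = new ServerSocket(80);
        while (true) {
            Socket connection = socket.accept();
            queue.put(connection);
        }
    }

    public static void main(String[] args) throws Exception {
        new WebServer().start();
    }

    void handleRequest(Socket s) { /* application-specific, not shown */ }
}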

Channel Options

• Unbounded queues

– Can exhaust resources if clients are faster than handlers

• Bounded buffers

– Can cause clients to block when full

• Synchronous channels

– Force client to wait for handler to complete previous task

• Leaky bounded buffers

– For example, drop oldest if full

• Priority queues

– Run more important tasks first

• Streams or sockets

– Enable persistence, remote execution

• Non-blocking channels

– Must take evasive action if put or take fail or time out
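A leaky bounded buffer that drops the oldest element when full can be sketched on top of ArrayBlockingQueue. LeakyBuffer is a hypothetical name, and dropping the oldest element is only one of several possible eviction choices.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class LeakyBuffer<E> {
    private final BlockingQueue<E> q;

    LeakyBuffer(int capacity) { q = new ArrayBlockingQueue<E>(capacity); }

    /** Never blocks: while the buffer is full, evict the oldest element. Returns how many were dropped. */
    int put(E e) {
        int dropped = 0;
        while (!q.offer(e)) {             // offer fails immediately when full
            if (q.poll() != null)         // drop the oldest (head) element
                dropped++;
        }
        return dropped;
    }

    /** Consumers still block when the buffer is empty. */
    E take() throws InterruptedException { return q.take(); }

    int size() { return q.size(); }
}
```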


Thread Pools

• Use a collection of worker threads, not just one

– Can limit maximum number and priorities of threads

– Dynamic worker thread management

  – Sophisticated policy controls

– Often faster than thread-per-message for I/O bound actions

Web Server Using Executor Thread Pool

• Executor implementations internalize the channel

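import java.io.IOException;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.concurrent.Executor;
import java.util.concurrent.Executors;

class PooledWebServer {
    Executor pool = Executors.newFixedThreadPool(7);

    public void start() throws IOException {
        ServerSocket socket = new ServerSocket(80);
        while (!Thread.interrupted()) {
            final Socket connection = socket.accept();
            Runnable r = new Runnable() {
                public void run() {
                    handleRequest(connection);
                }
            };
            pool.execute(r);   // the pool's internal queue is the channel
        }
    }

    public static void main(String[] args) throws IOException {
        new PooledWebServer().start();
    }

    void handleRequest(Socket s) { /* application-specific, not shown */ }
}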

Policies and Parameters for Thread Pools

• The kind of channel used as task queue

– Unbounded queue, bounded queue, synchronous hand-off, priority queue, ordering by task dependencies, stream, socket

• Bounding resources

– Maximum number of threads

– Minimum number of threads

– "Warm" versus on-demand threads

– Keepalive interval until idle threads die

  – Later replaced by new threads if necessary

• Saturation policy

– Block, drop, producer-runs, etc.

• These policies and parameters can interact in subtle ways!

Pools in Connection-Based Designs

• For systems with many open connections (sockets), but relatively few active at any given time

• Multiplex the delegations to worker threads via polling

– Requires underlying support for select/poll and nonblocking I/O

– Supported in JDK 1.4 java.nio

The Executor Framework

• Framework for asynchronous task execution

• Standardize asynchronous invocation

– Framework to execute Runnable and Callable tasks

– Runnable: void run()

– Callable<V>: V call() throws Exception

• Separate submission from execution policy

– Use anExecutor.execute(aRunnable)

– Not new Thread(aRunnable).start()

• Cancellation and shutdown support

• Usually created via Executors factory class

– Configures flexible ThreadPoolExecutor

– Customize shutdown methods, before/after hooks, saturation policies, queuing

Executor

• Decouple submission policy from task execution

public interface Executor {
    void execute(Runnable command);
}

• Code which submits a task doesn't have to know in what thread the task will run

– Could run in the calling thread, in a thread pool, in a single background thread (or even in another JVM!)

– Executor implementation determines execution policy

– Execution policy controls resource utilization, overload behavior, thread usage, logging, security, etc.

– Calling code need not know the execution policy
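Two minimal Executor implementations show how the policy lives in the executor rather than in the calling code; they follow the examples given in the Executor API documentation. The same execute call runs the task in the caller's thread with one and in a fresh thread with the other.

```java
import java.util.concurrent.Executor;

/** Runs each task immediately in the calling thread. */
class DirectExecutor implements Executor {
    public void execute(Runnable r) {
        r.run();
    }
}

/** Starts a new thread for each task (the thread-per-message policy). */
class ThreadPerTaskExecutor implements Executor {
    public void execute(Runnable r) {
        new Thread(r).start();
    }
}
```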


ExecutorService

• Adds lifecycle management

• ExecutorService supports both graceful and immediate shutdown

public interface ExecutorService extends Executor {
    void shutdown();
    List<Runnable> shutdownNow();
    boolean isShutdown();
    boolean isTerminated();
    boolean awaitTermination(long timeout, TimeUnit unit)
        throws InterruptedException;
    // …
}

• Useful utility methods too

– <T> T invokeAny(Collection<Callable<T>> tasks)

  – Executes the given tasks, returning the result of one that completed successfully (if any)

– Others involving Future objects, covered later
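A short sketch combining invokeAny with graceful shutdown; LifecycleDemo and firstSuccessful are illustrative names. Current JDKs declare invokeAny as taking Collection<? extends Callable<T>>, which also accepts the list used here. If every task throws, invokeAny reports the failure as an ExecutionException.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class LifecycleDemo {
    /** Runs the tasks with invokeAny, then shuts the pool down. */
    static <T> T firstSuccessful(List<Callable<T>> tasks)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            return pool.invokeAny(tasks);     // result of some task that did not throw
        } finally {
            pool.shutdown();                  // graceful: accept no new tasks
            if (!pool.awaitTermination(5, TimeUnit.SECONDS))
                pool.shutdownNow();           // immediate: interrupt remaining workers
        }
    }
}
```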


Creating Executors

• Sample Executor implementations from Executors

• newSingleThreadExecutor

– A pool of one, working from an unbounded queue

• newFixedThreadPool(int N)

– A fixed pool of N, working from an unbounded queue

• newCachedThreadPool

– A variable size pool that grows as needed and shrinks when idle

• newScheduledThreadPool

– Pool for executing tasks after a given delay, or periodically

ThreadPoolExecutor

• Numerous tuning parameters

– Core and maximum pool size

  – New thread created on task submission until core size reached

  – New thread then created when queue full until maximum size reached

  – Note: unbounded queue means the pool won't grow above core size

  – Maximum can be unbounded

– Keep-alive time

  – Threads above the core size terminate if idle for more than the keep-alive time

– Pre-starting of core threads, or else on demand
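The interaction between queue choice and pool growth can be observed directly. In this hypothetical demo (PoolGrowthDemo and poolSizeAfter are illustrative names), every task blocks on a latch so no worker ever becomes free. With a core size of 1 and a maximum of 4, an unbounded LinkedBlockingQueue keeps the pool at its core size, while a SynchronousQueue forces a new thread for each task up to the maximum.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolGrowthDemo {
    /** Submits n tasks that all block on a gate, then reports how many threads the pool created. */
    static int poolSizeAfter(BlockingQueue<Runnable> queue, int n) throws InterruptedException {
        ThreadPoolExecutor pool =
            new ThreadPoolExecutor(1, 4, 60, TimeUnit.SECONDS, queue);  // core 1, max 4
        CountDownLatch gate = new CountDownLatch(1);
        try {
            for (int i = 0; i < n; i++) {
                pool.execute(() -> {
                    try {
                        gate.await();                 // keep every worker busy
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            return pool.getPoolSize();
        } finally {
            gate.countDown();                         // release the blocked tasks
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
    }
}
```

In the SynchronousQueue case a fifth task would exceed the maximum and be rejected under the default AbortPolicy.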


Customizing ThreadPoolExecutor

• ThreadFactory used to create new threads

– Default: Executors.defaultThreadFactory

• Queuing strategies: must be a BlockingQueue<Runnable>

– Direct hand-off using SynchronousQueue: no internal capacity; hands off to a waiting thread, else creates a new one if allowed, else the task is rejected

– Bounded queue: enforces resource constraints; when full, permits the pool to grow to maximum, then tasks are rejected

– Unbounded queue: potential for resource exhaustion but otherwise never rejects tasks

– Note: the queue is used internally; do not place tasks in it directly

• Subclass customization through beforeExecute and afterExecute hooks

Rejected Task Handling

interface RejectedExecutionHandler {
    void rejectedExecution(Runnable r, ThreadPoolExecutor e);
}

• Tasks are rejected by a pool

– When it saturates with a bounded queue and maximum pool size

– After it has been shut down

• Four predefined policies, nested classes in ThreadPoolExecutor:

– AbortPolicy: execute throws RejectedExecutionException

– CallerRunsPolicy: execute invokes Runnable.run directly

  – Discards this task if the pool has been shut down

– DiscardOldestPolicy: discards the oldest waiting task and tries execute again

  – Discards this task if the pool has been shut down

– DiscardPolicy: silently discards the rejected task
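The policies are easy to compare on a deliberately saturated pool: one thread, a SynchronousQueue with no capacity, and a first task that holds the only thread. The RejectionDemo helper is hypothetical; it reports where the extra task ran, or whether it was rejected or silently dropped.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class RejectionDemo {
    /** Saturates a one-thread pool, submits one extra task, and reports what happened to it. */
    static String submitExtra(RejectedExecutionHandler handler) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
            1, 1, 0, TimeUnit.SECONDS, new SynchronousQueue<Runnable>(), handler);
        CountDownLatch gate = new CountDownLatch(1);
        pool.execute(() -> {                       // occupies the only worker thread
            try { gate.await(); } catch (InterruptedException e) { Thread.currentThread().interrupt(); }
        });
        String[] ranIn = { "not run" };
        try {
            pool.execute(() -> ranIn[0] = Thread.currentThread().getName());
            return ranIn[0];     // caller's thread name under CallerRunsPolicy; "not run" under DiscardPolicy
        } catch (RejectedExecutionException e) {
            return "rejected";   // AbortPolicy
        } finally {
            gate.countDown();
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
    }
}
```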


Case Study: Puzzle Solver

interface Puzzle<P, M> { // P: Position, M: Move
    P initialPosition();
    boolean isGoal(P position);
    Iterable<M> moves(P position);
    P applyMove(P position, M move);
}

• A general framework for searching the space of positions (states) linked by moves (transitions)

– Applies to, for example, sliding block puzzles

  – With discrete choices of move for each block configuration

• Tools:

– ConcurrentHashMap

– ThreadPoolExecutor

– AtomicReference

– CountDownLatch

Puzzle Solver: Node Class

• Represents a chain of moves from an initial position

– Contains a back pointer so we can reconstruct these moves

import java.util.LinkedList;
import java.util.List;

class Node<P, M> {
    final P pos;
    final M move;
    final Node<P, M> pre;

    Node(P p, M m, Node<P, M> n) {
        pos = p; move = m; pre = n;
    }

    List<M> asMoveList() {
        List<M> s = new LinkedList<M>();
        // walk back to the initial node (move == null), prepending each move
        for (Node<P, M> n = this; n.move != null; n = n.pre)
            s.add(0, n.move);
        return s;
    }
}

Puzzle Solver: Recursive (sequential) Soln.

public List<M> solve() {
    P pos = puzzle.initialPosition();
    return search(new Node<P, M>(pos, null, null));
}

// Depth-first search; returns null if no goal is reachable from n
List<M> search(Node<P, M> n) {
    if (!seen.contains(n.pos)) {
        seen.add(n.pos);
        if (puzzle.isGoal(n.pos))
            return n.asMoveList();
        for (M move : puzzle.moves(n.pos)) {
            List<M> result = search(new Node<P, M>(
                puzzle.applyMove(n.pos, move), move, n));
            if (result != null)
                return result;
        }
    }
    return null;
}

private final Set<P> seen = new HashSet<P>();

Puzzle Solver: Concurrent Solution

// Inner class of concurrent Solver class
class TaskNode extends Node<P, M> implements Runnable {
    TaskNode(P p, M m, Node<P, M> n) { super(p, m, n); }

    public void run() {
        if (isSolved() ||
            seen.putIfAbsent(pos, true) != null)
            return;   // already solved, or another task reached this position first
        if (puzzle.isGoal(pos))
            setSolution(this);
        else
            for (M move : puzzle.moves(pos)) {
                P newPos = puzzle.applyMove(pos, move);
                exec.execute(
                    new TaskNode(newPos, move, this));
            }
    }
}

Puzzle Solver: Concurrent Solution (2)

class Solver<P, M> {
    private final Puzzle<P, M> puzzle;
    private final ExecutorService exec = ...
    private final ConcurrentMap<P, Boolean> seen =
        new ConcurrentHashMap<P, Boolean>();

    Solver(Puzzle<P, M> p) { this.puzzle = p; }

    public List<M> solve() throws InterruptedException {
        exec.execute(new TaskNode(
            puzzle.initialPosition(), null, null));
        return getSolution(); // block until solved
    }

    boolean isSolved() { ... }
    void setSolution(Node<P, M> n) { ... }
    List<M> getSolution() throws InterruptedException { ... }
}

Puzzle Solver: Thread Pool Configuration

• Entire framework only applies if either:

– Significant, independent work is done in concrete isGoal, moves, and applyMove methods, or

– Many processors to keep busy

• Pool must not saturate; either

– Use unbounded queue, trading memory for time

  – But note that sequential version may use a lot of memory too

– Use unbounded pool size

  – More risky! Threads are much bigger than queue nodes

• Use AbortPolicy to make pool shutdown simple

– Use of ExecutorService also allows for cancellation

• Suggest:

// NCPUS: number of processors, e.g. Runtime.getRuntime().availableProcessors()
exec = new ThreadPoolExecutor(
    NCPUS, NCPUS, Long.MAX_VALUE, TimeUnit.NANOSECONDS,
    new LinkedBlockingQueue<Runnable>(),
    new ThreadPoolExecutor.AbortPolicy());

Puzzle Solver: Tracking the Solution

• Requirements:

– Set solution at most once

– getSolution must block until solution available

– Release resources once solution found

• Options

– Use synchronized with wait/notifyAll

– Use Lock and Condition await/signalAll

– Use a custom FutureTask

– Use atomic variable and a synchronizer: CountDownLatch
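The last option might be sketched as follows. SolutionLatch is a hypothetical helper, not the talk's elided Solver code: an AtomicReference enforces "set at most once" through compareAndSet, and a CountDownLatch blocks getSolution until a value exists. A solver could additionally call exec.shutdownNow() when setSolution succeeds, to release the pool's resources. Solutions must be non-null, since null marks "unsolved".

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicReference;

class SolutionLatch<T> {
    private final AtomicReference<T> solution = new AtomicReference<T>();
    private final CountDownLatch done = new CountDownLatch(1);

    boolean isSolved() { return solution.get() != null; }

    /** Records s (non-null) only if no solution has been set yet; returns true if this call won. */
    boolean setSolution(T s) {
        if (solution.compareAndSet(null, s)) {
            done.countDown();        // release every thread blocked in getSolution
            return true;
        }
        return false;
    }

    /** Blocks until some solution has been set, then returns it. */
    T getSolution() throws InterruptedException {
        done.await();
        return solution.get();
    }
}
```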


Interactive Messages

• Synopsis

– Client activates Server with a oneway message

– Server later invokes a callback method on client

– Callback can be either oneway or procedural

– Callback can instead be sent to a helper object of client

– Degenerate case: inform only of task completion

• Applications

– Completion indications from file and network I/O

– Threads performing computations that yield results


Completion Callbacks

• The async messages are service activations

• The callbacks are continuation calls that transmit results

– May contain a message ID or completion token to tell the client which task completed

• Typically two kinds of callbacks

– Success: analog of return

– Failure: analog of throw

• Client readiness to accept callbacks may be state-dependent

– For example, if the client can only process callbacks in a certain order

Completion Callback Example

• Callback interface

import java.io.IOException;

interface FileReaderClient {
    void readCompleted(String filename);
    void readFailed(String filename, IOException ex);
}

• Sample Client

class FileReaderApp implements FileReaderClient {
    private byte[] data; // buffer for FileReader to fill; allocate before calling doRead

    public void readCompleted(String filename) {
        // ... use data ...
    }

    public void readFailed(String filename, IOException e) {
        // ... deal with failure ...
    }

    void doRead() {
        new Thread(new FileReader("file", data, this)).start();
    }
}

Completion Callbacks continued

• Sample Server

class FileReader implements Runnable { // application class, not java.io.FileReader
    final String name;
    final byte[] data;
    final FileReaderClient client; // allow null

    public FileReader(String name, byte[] data,
                      FileReaderClient c) {
        this.name = name; this.data = data; this.client = c;
    }

    public void run() {
        try {
            // ... read ...
            if (client != null)
                client.readCompleted(name);
        }
        catch (IOException ex) {
            if (client != null)
                client.readFailed(name, ex);
        }
    }
}

Locks and Synchronizers

• java.util.concurrent provides generally useful implementations

– ReentrantLock, ReentrantReadWriteLock

– Semaphore, CountDownLatch, CyclicBarrier, Exchanger

– Should meet the needs of most users in most situations

– Some customization possible in some cases by subclassing

• Otherwise AbstractQueuedSynchronizer can be used to build custom locks and synchronizers

– Within limitations: int state and FIFO queuing

• Otherwise build from scratch

– Atomics

– Queues

– LockSupport for thread parking/unparking

Synchronization Infrastructure

• Many locks and synchronizers have similar properties

– "acquire" operation that potentially blocks

– "release" operation that potentially unblocks others

– Examples: ReentrantLock, ReentrantReadWriteLock, Semaphore, CountDownLatch

• Implementations have to deal with the same issues:

– Atomic state queries and updates

– Queue management

– Blocking, interruption handling, timeouts

• Nature of state and queuing policies can vary wildly

• For specific state and queuing policies, a common infrastructure can be factored out

AbstractQueuedSynchronizer

• Common synchronization infrastructure for locks/synchronizers

– State can be represented as an int

– Queuing order is basically FIFO

• Supports notion of both exclusive and shared acquisition semantics

– Exclusive acquire only allowed when not held either exclusively or shared

– Shared acquire only allowed when not held exclusively

• BUT AQS knows nothing about this

– YOU define when the synchronizer can and can't be acquired

– YOU define all the usage rules

  – Reentrant acquisition, only owner can release, …

  – Barging is/is-not permitted

Using AbstractQueuedSynchronizer

• Public methods are implemented in terms of protected methods that your subclass provides

– acquire calls tryAcquire

– acquireShared calls tryAcquireShared

– release calls tryRelease, etc …

• You implement whichever of the following suit your needs

– tryAcquire(int), tryAcquireShared(int)

– tryRelease(int), tryReleaseShared(int)

– isHeldExclusively()

  – Used if you want to provide Condition objects

– Default implementations throw UnsupportedOperationException

• You use the available state-related methods to do the implementation

– int getState(), void setState(int), boolean compareAndSetState(int, int)

Example: Mutex

• Mutex: a non-reentrant mutual-exclusion lock

– Only need to implement exclusive mode methods

• State semantics:

– State == 0 means lock is free

– State == 1 means lock is owned

– Owner field identifies current owning thread

– Only owner can release, or use associated Condition

• Class outline

class Mutex implements Lock {
    Thread owner = null;
    class Sync extends AbstractQueuedSynchronizer {
        // AQS method implementations ...
    }
    Sync sync = new Sync();
    // implement Lock methods in terms of sync ...
}

Example: Mutex (2)

• boolean tryAcquire(int acquires) – Purpose:

– Acquire in exclusive mode if possible and return true;

– Else return false

– acquires argument semantics determined by the application

• Mutex.Sync implementation

public boolean tryAcquire(int unused) {
    if (compareAndSetState(0, 1)) {
        owner = Thread.currentThread();
        return true;
    }
    return false;
}

– compareAndSetState(int expected, int newState)

  – Atomically set the state to the value newState if it currently has the value expected. Return true on success, else false

Example: Mutex (3)

• boolean tryRelease(int releases) – Purpose:

– Set state to reflect the release of exclusive mode

– May fail by throwing IllegalMonitorStateException if current thread is not the holder, and the holder is tracked

– Return true if this release allows waiting threads to proceed; else return false

– releases argument semantics determined by the application

• Mutex.Sync implementation

public boolean tryRelease(int unused) {
    if (owner != Thread.currentThread())
        throw new IllegalMonitorStateException();
    owner = null;
    setState(0);
    return true; // wake up any waiters
}

Example: Mutex (4)

• Condition support

– AQS provides inner ConditionObject class

– AQS subclass must:

  – Support exclusive acquisition by the current thread

  – Report exclusive ownership via isHeldExclusively

  – Ensure release(int) fully releases and a subsequent acquire(int) fully restores the exclusive mode state

• Mutex.Sync implementation

protected boolean isHeldExclusively() {
    return owner == Thread.currentThread();
}

protected Condition newCondition() {
    return new ConditionObject();
}

Example: Mutex (5)

• Mutex implementation of Lock methods, delegating to sync:

public void lock() { sync.acquire(1); }
public boolean tryLock() { return sync.tryAcquire(1); }
public void unlock() { sync.release(1); }
public Condition newCondition() {
    return sync.newCondition();
}
public boolean isLocked() {   // true if held by the calling thread
    return sync.isHeldExclusively();
}
public boolean hasQueuedThreads() {
    return sync.hasQueuedThreads();
}
public void lockInterruptibly() throws InterruptedException {
    sync.acquireInterruptibly(1);
}
public boolean tryLock(long timeout, TimeUnit unit)
        throws InterruptedException {
    return sync.tryAcquireNanos(1, unit.toNanos(timeout));
}
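Assembled into a single class, the Mutex pieces from these slides compile and run roughly as below. This is a sketch with a few deliberate adjustments: the owner field moves into Sync, Sync becomes a static nested class, and isLocked reports whether any thread holds the lock (state == 1) rather than whether the caller does.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;

/** Non-reentrant mutual-exclusion lock built on AbstractQueuedSynchronizer. */
class Mutex implements Lock {
    private static final class Sync extends AbstractQueuedSynchronizer {
        private Thread owner;  // only compared against the current thread, so stale reads are harmless

        @Override protected boolean tryAcquire(int unused) {
            if (compareAndSetState(0, 1)) {        // 0 = free, 1 = owned
                owner = Thread.currentThread();
                return true;
            }
            return false;
        }

        @Override protected boolean tryRelease(int unused) {
            if (owner != Thread.currentThread())
                throw new IllegalMonitorStateException();
            owner = null;
            setState(0);                           // volatile write publishes the release
            return true;                           // let AQS wake a waiting thread
        }

        @Override protected boolean isHeldExclusively() {
            return owner == Thread.currentThread();
        }

        boolean isLocked() { return getState() == 1; }
        Condition newCondition() { return new ConditionObject(); }
    }

    private final Sync sync = new Sync();

    public void lock()                { sync.acquire(1); }
    public boolean tryLock()          { return sync.tryAcquire(1); }
    public void unlock()              { sync.release(1); }
    public Condition newCondition()   { return sync.newCondition(); }
    public boolean isLocked()         { return sync.isLocked(); }
    public boolean hasQueuedThreads() { return sync.hasQueuedThreads(); }
    public void lockInterruptibly() throws InterruptedException {
        sync.acquireInterruptibly(1);
    }
    public boolean tryLock(long timeout, TimeUnit unit) throws InterruptedException {
        return sync.tryAcquireNanos(1, unit.toNanos(timeout));
    }
}
```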

AQS Queue Management

• Basic queuing order is FIFO

• Single queue for both shared and exclusive acquisitions

– Practical trade-off:

  – Two queues more expressive; but

  – Atomically working with two queues much harder

• Queue query methods can aid with anti-barging semantics

– boolean hasQueuedThreads()

  – Returns true if any thread is queued

– Thread getFirstQueuedThread()

  – Returns the longest waiting thread if any

• Additional monitoring/management methods (slow!):

– Collection<Thread> getQueuedThreads()

– Collection<Thread> getExclusiveQueuedThreads()

– Collection<Thread> getSharedQueuedThreads()

Dual Stack: Implementation

[Figure: dual stack states in steps (1), (2a), (2b), (3b), showing the top-of-stack (TOS) pointer and data, request, and fulfiller nodes above the rest of the stack]