POLI 209: Analyzing Public Opinion


Logistic Regression: Dichotomous Dependent Variables

March 21 & 23, 2011

Objectives

By the end of this meeting, participants should be able to:

a) Explain why OLS regression is inappropriate for dichotomous dependent variables.

b) List the assumptions of a logistic regression model.

c) Estimate a logistic regression model in R.

d) Interpret the results of a logistic regression model.

The Problems with OLS and Binary Dependent Variables

• With a dichotomous (zero/one or similar) dependent variable, the assumptions of least squares regression (OLS) are violated.

• OLS assumes a linear relationship between the dependent variable and the independent variable, which cannot be true when the dependent variable has only two categories (more of a conceptual than a technical issue).


• Least squares regression (OLS) assumes normally distributed error terms, which cannot be true with a dichotomous dependent variable (not a very difficult problem).

• OLS assumes that the variances of the error terms are the same, which cannot be true for dichotomous dependent variables. This makes hypothesis testing very difficult (a major problem).

• More intuitively, regression with a dichotomous dependent variable will frequently predict values outside the actual range of the dependent variable.
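A minimal sketch of the last two points, using simulated data (the sample size and the coefficients -0.5 and 1.5 are arbitrary assumptions, not survey values). It fits OLS to a 0/1 outcome and reports the share of fitted values outside 0 to 1, then computes the variance of a 0/1 variable, p(1 - p), at several probabilities to show that it depends on p and therefore cannot be constant across observations.

```r
# Simulated data (assumed values): one predictor, binary outcome
set.seed(209)
n <- 400
x <- rnorm(n, mean = 0, sd = 2)
y <- rbinom(n, size = 1, prob = plogis(-0.5 + 1.5 * x))

# OLS on a 0/1 outcome (a "linear probability model")
ols <- lm(y ~ x)
fitted_p <- fitted(ols)

# Share of predictions outside the possible range of y (0 to 1)
out_of_range <- mean(fitted_p < 0 | fitted_p > 1)
range(fitted_p)
out_of_range

# Variance of a 0/1 outcome at several probabilities: p * (1 - p)
p <- c(0.05, 0.25, 0.50, 0.75, 0.95)
bernoulli_var <- p * (1 - p)
bernoulli_var   # 0.0475 0.1875 0.2500 0.1875 0.0475
```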

A Brief Return to OLS

a) OLS is computed with a single, simple formula:

• A straight linear model

• Fit a line to various points

• The fit will not be perfect, but least squares finds the line with the smallest possible sum of squared differences between the observed and predicted values

b) That method cannot work for dichotomous dependent variables.
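The "single formula" for OLS is the normal-equations solution $b = (X'X)^{-1}X'y$. A small sketch on simulated data (values assumed for illustration) shows that computing it directly reproduces what lm() reports:

```r
# Simulated data (assumed values)
set.seed(1)
n <- 100
x <- runif(n, min = 0, max = 10)
y <- 2 + 0.5 * x + rnorm(n)

# Design matrix: a column of 1s for the intercept, then x
X <- cbind(1, x)

# Closed-form least squares: solve (X'X) b = X'y
b_hat <- solve(t(X) %*% X, t(X) %*% y)
b_hat

# Same estimates from R's built-in linear model function
coef(lm(y ~ x))
```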

Logistic Regression and Maximum Likelihood

a) Regression with a dichotomous dependent variable is computed through a procedure known as maximum likelihood.

b) Maximum likelihood is an iterative process based on probability theory that requires a computer.


c) Instead of fitting a single line, maximum likelihood is a guided trial-and-error process: a set of coefficients is chosen and a likelihood function is computed.

d) Starting from that initial function, new sets of coefficients are tried and new likelihood functions computed, each getting closer and closer to the coefficients that make the observed values of the dependent variable most likely.

e) When no change in the coefficients can improve the likelihood any further, the process ends (the model has converged).
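The guided trial and error described above can be sketched with Newton-Raphson iterations on simulated data (the data and the starting values of zero are assumptions for illustration). For the logit model this is the same update as the iteratively reweighted least squares used by R's glm(), so the final coefficients should match glm() up to numerical tolerance. The loop stops when a new step no longer changes the coefficients meaningfully.

```r
# Simulated data (assumed values)
set.seed(42)
n <- 500
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(-0.5 + 1.2 * x))
X <- cbind(1, x)

# Log-likelihood of a logit model for 0/1 data
loglik <- function(b) {
  eta <- as.vector(X %*% b)
  sum(y * eta - log1p(exp(eta)))
}

b <- c(0, 0)                              # initial guess for (a, b1)
ll_path <- loglik(b)
for (iter in 1:25) {
  p <- plogis(as.vector(X %*% b))         # current predicted probabilities
  score <- t(X) %*% (y - p)               # gradient of the log-likelihood
  info <- t(X) %*% (X * (p * (1 - p)))    # information matrix X'WX
  step <- as.vector(solve(info, score))   # Newton-Raphson update
  b <- b + step
  ll_path <- c(ll_path, loglik(b))
  if (max(abs(step)) < 1e-10) break       # no meaningful change: stop
}

ll_path                                    # log-likelihood after each try
b                                          # final coefficients

fit <- glm(y ~ x, family = binomial(link = "logit"))
coef(fit)                                  # should agree with b
```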


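• Logistic regression is the most commonly used of all the maximum likelihood estimation methods. The other primary method for models with dichotomous dependent variables is probit. Probit and logit results are generally comparable: the coefficients are on different scales (logit coefficients are typically about 1.6 to 1.8 times the size of probit coefficients), but the substantive conclusions are usually the same.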
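One way to see how comparable the two are is to fit both to the same simulated data (all values assumed). The slopes differ in scale, but the fitted probabilities are nearly identical:

```r
# Simulated data (assumed values)
set.seed(7)
n <- 1000
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(0.3 + 1 * x))

logit_fit  <- glm(y ~ x, family = binomial(link = "logit"))
probit_fit <- glm(y ~ x, family = binomial(link = "probit"))

coef(logit_fit)
coef(probit_fit)

# Ratio of the slopes: logit coefficients are larger by a roughly constant factor
slope_ratio <- coef(logit_fit)["x"] / coef(probit_fit)["x"]
slope_ratio

# The predicted probabilities from the two models are almost the same
cor(fitted(logit_fit), fitted(probit_fit))
```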

Assumptions of Logit

• Logit does not assume a linear relationship between the independent variables and the probability of the dependent variable (the relationship is linear in the log of the odds instead).

• The dependent variable needs to be binary and coded in a meaningful way. It is standard to code the category of interest as the higher value (for example: voter, Democrat, etc.).

• All the relevant variables need to be included in the model.

• Only relevant variables should be included in the model.

• Error terms need to be independent.

• The predictor variables should be measured with little error.


• Independent variables should not be highly correlated with each other (multicollinearity). This problem will likely show up as high standard errors.

• There should be no major outliers on the independent variables.

• Samples need to be relatively large (such as 10 cases per predictor). If the samples are too small, standard errors can be very large, and in some cases very large coefficients will also occur.
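The very large coefficients mentioned in the last point often come from separation, where a predictor perfectly sorts the 0s from the 1s, which is much more likely in small samples. A sketch with a hypothetical six-case data set: glm() warns that fitted probabilities of 0 or 1 occurred (the handler below prints each warning as a message and continues), and both the slope and its standard error blow up.

```r
# Hypothetical tiny data set: every x below 3.5 has y = 0, every x above has y = 1
x <- 1:6
y <- c(0, 0, 0, 1, 1, 1)

# Fit the logit model, reporting glm() warnings as messages instead of stopping
fit <- withCallingHandlers(
  glm(y ~ x, family = binomial(link = "logit")),
  warning = function(w) {
    message("glm warning: ", conditionMessage(w))
    invokeRestart("muffleWarning")
  }
)

# Huge slope estimate with an even larger standard error
summary(fit)$coefficients
```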

The Logit Formula

a) At first glance, the formula for logit is not all that different from the one for OLS:

$$y_i^* = a + b_1 x_{1i} + b_2 x_{2i} + e_i$$

b) Where y is the dependent variable, the x's are the independent variables, and e is the error term.

• It is important to note that the model predicts a probability (that y = 1) rather than a strict value.

• Also key: y* is a transformation of y, so you are modeling probability indirectly. (Technical: y* is the log of the odds.)

c) The b values can be thought of as in OLS, but their interpretation is somewhat different.
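Solving the log-odds expression for the probability shows why the curve is S-shaped and always stays between 0 and 1. A short derivation, writing p for the probability that y = 1 and keeping only the systematic part of the model (no error term):

$$
y^* = \ln\left(\frac{p}{1-p}\right) = a + b_1 x_1 + b_2 x_2
\quad\Longrightarrow\quad
\frac{p}{1-p} = e^{a + b_1 x_1 + b_2 x_2}
\quad\Longrightarrow\quad
p = \frac{e^{a + b_1 x_1 + b_2 x_2}}{1 + e^{a + b_1 x_1 + b_2 x_2}} = \frac{1}{1 + e^{-(a + b_1 x_1 + b_2 x_2)}}
$$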


d) a can be thought of as a shift parameter: it shifts the curve to the left or the right (as described here for a curve with positive $b_1$).

• a < 0 shifts the curve to the right

• a > 0 shifts the curve to the left

e) The size of $b_1$ can be thought of as the stretch parameter: it stretches or shrinks the curve.

f) The sign of $b_1$ can be thought of as the direction parameter: it determines the direction of the curve.
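A small sketch of the three parameters, using hypothetical values of a and b1 chosen only for illustration. R's plogis() computes 1 / (1 + exp(-z)), the logistic curve. The curve's midpoint (where the probability is 0.5) is at x = -a / b1, which is why, with a positive b1, a negative a moves the curve to the right:

```r
# Probability from a one-predictor logit model: 1 / (1 + exp(-(a + b1 * x)))
logit_curve <- function(x, a, b1) plogis(a + b1 * x)

# Midpoint of the curve (probability 0.5) is at x = -a / b1
midpoint <- function(a, b1) -a / b1

# Shift: a moves the curve left or right
midpoint(a = 0,  b1 = 1)   # 0: baseline curve centered at zero
midpoint(a = -2, b1 = 1)   # 2: a < 0 moves the curve right
midpoint(a = 2,  b1 = 1)   # -2: a > 0 moves the curve left

# Stretch: a larger b1 makes the curve steeper around its midpoint
logit_curve(1, a = 0, b1 = 0.5)   # about 0.62
logit_curve(1, a = 0, b1 = 2)     # about 0.88

# Direction: the sign of b1 decides whether the probability rises or falls with x
logit_curve(c(-2, 0, 2), a = 0, b1 = 1)    # increasing
logit_curve(c(-2, 0, 2), a = 0, b1 = -1)   # decreasing
```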

[Figure: A diagram of a logit function, y vs. y*]

Interpreting Logit Regression Results

a) Logit coefficients cannot be interpreted in the same way as OLS coefficients. Since the relationship described is not linear, it cannot be said that a one-unit change in the independent variable leads to a fixed amount of change in the dependent variable.

b) Logit coefficients can tell you the direction of the relationship between the dependent and independent variables and whether it is statistically significant, and they give you a general sense of its magnitude.


c) Statistical significance in logit is based on whether the effect of the independent variable on the dependent variable is statistically different from zero (similar to OLS).

d) Since logit coefficients lack the direct interpretation of OLS coefficients, many people prefer to use odds ratios instead.

• Odds ratios show the effect of the independent variable on the odds of the dependent variable occurring.

• Values greater than 1 mean that the predictor makes the dependent variable more likely to occur.

• Values less than 1 mean that the predictor makes the dependent variable less likely to occur.

• Example: Kentucky odds of winning pre & post Kansas


e) Coefficients in logit are the effect of the predictor on the log of the odds of the dependent variable.

f) Odds ratios remove the log component by exponentiating the coefficient ($e^{b}$) and give the effect of the predictor on the odds of the dependent variable occurring.
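The odds-ratio interpretation can be checked numerically with hypothetical coefficients (a = -2 and b1 = 0.7, chosen only for illustration). Each one-unit increase in x multiplies the odds by the same factor, exp(b1), while the change in probability depends on where on the curve you start, which is why no single "unit change" statement works on the probability scale:

```r
# Hypothetical coefficients, chosen only for illustration
a  <- -2
b1 <- 0.7

x <- 0:5
p <- plogis(a + b1 * x)   # predicted probabilities
odds <- p / (1 - p)       # odds, equal to exp(a + b1 * x)

# Ratio of the odds for each one-unit increase in x: always exp(b1)
odds_ratios <- odds[-1] / odds[-length(odds)]
odds_ratios
exp(b1)                   # about 2.01

# Percent change in the odds per one-unit increase in x
(exp(b1) - 1) * 100       # about 101

# The change in probability per unit, by contrast, varies along the curve
diff(p)
```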

Goodness of Fit: R²-Like Measures

a) Unlike linear regression, there is no intuitive equivalent of the R² statistic for logit models.

b) The desire to create a comparable measure has led to a variety of so-called pseudo-R² measures.

c) In general, the finding is that these pseudo-R² measures perform poorly, so R does not even report them.

d) If you do calculate pseudo-R² values, you can report them as a general sense of the fit, but they do not have the same direct interpretation as R² in OLS.
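McFadden's pseudo-R² is one common version: 1 minus the ratio of the model's log-likelihood to that of an intercept-only model. A sketch on simulated data (values assumed); summary() for a glm prints the null and residual deviances, and for 0/1 data those are enough to compute it:

```r
# Simulated data (assumed values)
set.seed(11)
n <- 300
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(0.2 + 0.8 * x))

fit  <- glm(y ~ x, family = binomial(link = "logit"))
null <- glm(y ~ 1, family = binomial(link = "logit"))   # intercept-only model

# McFadden's pseudo R-squared: 1 - logL(model) / logL(null)
mcfadden <- 1 - as.numeric(logLik(fit)) / as.numeric(logLik(null))
mcfadden

# For 0/1 data the same value follows from the deviances glm() stores
1 - fit$deviance / fit$null.deviance
```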

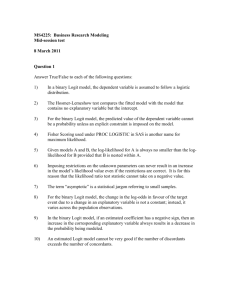

Example: Interpreting Logit Results

a) Data from the 2003 Carolina poll (N = 423)

b) The dependent variable measures whether the person thinks the country is heading in the right direction (0) or is on the wrong track (1).

c) The predictors are:

• Evaluation of Bush: (1) Excellent to (4) Poor

• Party: (1) Democrat, (2) Independent, (3) Republican

• Ideology: (1) Very Liberal to (5) Very Conservative

| Predictor | Coef. | Std. Error | z | p>\|z\| | Odds Ratio |
|---|---|---|---|---|---|
| Bush | 1.49 | 0.17 | 8.82 | 0.00 | 4.44 |
| Party | -0.18 | 0.16 | -1.09 | 0.27 | 0.84 |
| Ideology | 0.12 | 0.12 | 1.00 | 0.32 | 1.13 |
| Constant | -3.80 | 0.76 | -5.03 | 0.00 | -- |
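The table can be checked by hand. A sketch using the reported coefficients and standard errors: z is the coefficient divided by its standard error, the two-sided p-value comes from the normal distribution, and the odds ratio is exp(coefficient). Because the inputs are rounded to two decimals, the recomputed z values differ slightly from the table (for example, 1.49 / 0.17 is about 8.76 rather than 8.82). The two respondent profiles at the end are hypothetical:

```r
# Coefficients and standard errors as reported in the table
coefs <- c(Bush = 1.49, Party = -0.18, Ideology = 0.12, Constant = -3.80)
ses   <- c(Bush = 0.17, Party = 0.16,  Ideology = 0.12, Constant = 0.76)

z <- coefs / ses                  # Wald z statistic
p_values <- 2 * pnorm(-abs(z))    # two-sided p-value
odds_ratios <- exp(coefs[c("Bush", "Party", "Ideology")])

round(z, 2)
round(p_values, 2)
round(odds_ratios, 2)             # 4.44, 0.84, 1.13, as in the table

# Predicted probability of "wrong track" for two hypothetical respondents:
# critic: rates Bush Poor (4), Democrat (1), Very Liberal (1)
# supporter: rates Bush Excellent (1), Republican (3), Very Conservative (5)
eta_critic <- coefs[["Constant"]] + coefs[["Bush"]] * 4 +
  coefs[["Party"]] * 1 + coefs[["Ideology"]] * 1
eta_supporter <- coefs[["Constant"]] + coefs[["Bush"]] * 1 +
  coefs[["Party"]] * 3 + coefs[["Ideology"]] * 5
plogis(c(critic = eta_critic, supporter = eta_supporter))   # about 0.89 and 0.095
```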


a) What do the results tell us about the relationship between evaluations of Bush and whether or not a person thinks the country is on the wrong track? How sure are we of this result?

b) What do the results tell us about the relationship between partisanship and whether or not a person thinks the country is on the wrong track? How sure are we of this result?

c) What do the results tell us about the relationship between ideology and whether or not a person thinks the country is on the wrong track? How sure are we of this result?

Example in R

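```r
library(foreign)  # provides read.spss()

# Read the class survey data (SPSS format) as a data frame, keeping numeric codes
ps.are <- read.spss('http://j.mp/classdata',
                    use.value.labels = FALSE, to.data.frame = TRUE)

# Dependent variable: 1 if po_4 equals 1 (voted), 0 otherwise
ps.are$voted <- as.numeric(ps.are$po_4 == 1)

# Partisan strength: absolute distance of party identification (po_party) from 4
ps.are$strength <- abs(ps.are$po_party - 4)

# Logistic regression of turnout on income and partisan strength
logit.model <- glm(voted ~ dm_income + strength,
                   data = ps.are, family = binomial(link = "logit"))

# Odds ratios: exponentiated logit coefficients
odds.ratios <- exp(coef(logit.model))

summary(logit.model)      # coefficients, standard errors, z values, p-values
odds.ratios
(odds.ratios - 1) * 100   # percent change in the odds per one-unit increase
```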
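The "How sure are we?" questions can be answered with confidence intervals for the odds ratios, and predicted probabilities make the results easier to describe. Since the class data file may not be available, this sketch uses a simulated stand-in data frame, `sim`, with the same variable names (all values are assumptions, not the class data). confint.default() gives Wald intervals, and predict(..., type = "response") returns probabilities rather than log-odds:

```r
# Simulated stand-in for ps.are (all values are assumptions, not the class data)
set.seed(2004)
n <- 500
sim <- data.frame(dm_income = sample(1:9, n, replace = TRUE),
                  strength  = sample(0:3, n, replace = TRUE))
sim$voted <- rbinom(n, size = 1,
                    prob = plogis(-1 + 0.15 * sim$dm_income + 0.4 * sim$strength))

model <- glm(voted ~ dm_income + strength, data = sim,
             family = binomial(link = "logit"))

# Odds ratios with Wald 95% confidence intervals (exponentiated endpoints)
or_ci <- exp(cbind(OR = coef(model), confint.default(model)))
or_ci

# Predicted probability of voting at each level of partisan strength,
# holding income at its median
newdata <- data.frame(dm_income = median(sim$dm_income), strength = 0:3)
pred_p <- predict(model, newdata = newdata, type = "response")
pred_p
```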


a) What do the results tell us about the relationship between partisan strength and whether or not a person voted in 2004? How sure are we of this result?

b) What do the results tell us about the relationship between income and whether or not a person voted in 2004? How sure are we of this result?

What all this means for your papers…

• If your dependent variable is continuous or has four or more categories, use OLS regression. It is the simplest method, and its findings are the most intuitive. Even when some of the assumptions are violated, OLS tends to be a very robust method.

• If your dependent variable is dichotomous, use logit. It is the simplest and most common of the maximum likelihood estimation methods.


• If your dependent variable is short and ordered (i.e., fewer than 4 but more than 2 categories), try to reduce the number of categories to 2 or increase it to 4 or more:

• Drop DK/NA responses

• Drop middle categories (unless they are the categories of interest)

• Combine multiple categories

• Split the data into two parts and run separate analyses

• Create a scale to increase the range of the dependent variable

• Other circumstances: ordered logit (beyond the scope of this course).
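A sketch of three of these options in R, using a hypothetical three-category item (1 = approve, 2 = neither, 3 = disapprove) in which 8 and 9 are hypothetical don't know/no answer codes, plus three hypothetical 0/1 items for the scale:

```r
# Hypothetical item: 1 = approve, 2 = neither, 3 = disapprove, 8/9 = DK/NA codes
approve <- c(1, 2, 3, 1, 8, 3, 2, 9, 1, 3)

# Drop DK/NA responses by setting them to missing
approve[approve %in% c(8, 9)] <- NA

# Option 1: drop the middle category, coding the category of interest as 1
approve_binary <- ifelse(approve == 2, NA, as.numeric(approve == 1))
approve_binary     # 1 NA 0 1 NA 0 NA NA 1 0

# Option 2: combine categories ("neither" grouped with "disapprove")
approve_combined <- as.numeric(approve == 1)
approve_combined   # 1 0 0 1 NA 0 0 NA 1 0

# Option 3: build a scale by summing several hypothetical 0/1 items
item1 <- c(1, 0, 1, 1, 0)
item2 <- c(1, 1, 0, 1, 0)
item3 <- c(0, 1, 0, 1, 0)
scale_score <- item1 + item2 + item3   # possible values 0 to 3 (four categories)
scale_score        # 2 2 1 3 0
```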

For March 25

a) Turn in your preliminary data analysis (one copy per group).

b) Read WKB chapter 15.

c) Based on your reading of chapter 15, what insight did you find most relevant for your final paper? (Turn in individually.)