Introduction to logistic regression

advertisement

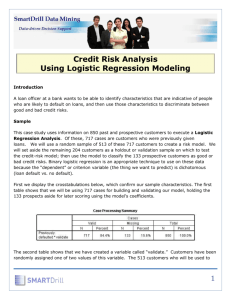

Logistic Regression Logistic regression is generally thought of as a method for modeling in situations for which there is a binary response variable. The predictor variables can be numerical or categorical (including binary). Multinomial (aka polychotomous) logistic regression can be used when there are more than two possible outcomes for the response. But here the focus will be in the typical binary response version. Letting Y be the binary response variable, it is assumed that P (Y 1) is possibly dependent on x , a vector of predictor values. The goal is to model p( x ) P(Y 1 | x ) . Since Y is binary, modeling p (x ) is really modeling E (Y | x ) , which is what is done in OLS regression, with a numerical response. If we model p (x ) as a linear function of predictor variables, e.g., 0 1 x1 ... p x p , then the fitted model can result in estimated probabilities which are outside of [0,1]. What tends to work better is to assume that exp( 0 1 x1 ... p x p ) p( x ) , 1 exp( 0 1 x1 ... p x p ) where x1 ,..., x p may be the original set of explanatory variables, but the predictors may include transformed and constructed variables. It is wrong to assume that the untransformed explanatory variables are all that are ever needed! For example, if there are just two explanatory variables, v1 and v2 , then a 1st-order model which just uses v1 and v2 as predictors may not be adequate, but a 2nd-order model using x1 v1 , x2 v2 , x3 v12 , x 4 v 22 , & x5 v1v2 may provide a nice fit. It can be noted that p( x ) log 0 1 x1 ... p x p . 1 p( x ) log p( x ) 1 p( x ) is called the logit. While the model for p (x ) is somewhat complicated, the model for the logit is linear in the predictors. Also note that no matter what value ˆ0 ˆ1 x1 ... ˆ p x p is, the corresponding estimate of p (x ) will be between 0 and 1. The unknown parameters (the coefficients, 0 , 1 ,..., p ) are typically estimated by maximizing the likelihood, n p( x) yi 1 p( xi ) 1 yi , i 1 which is just an expression for P(Y1 y1 ,..., Yn yn | x1 ,..., xn ) . (The mles are determined numerically, by maximizing the log likelihood.) When OLS regression is used to fit a model for a numerical variable, t tests, F tests, and especially residuals are typically used to arrive at the final model. The situation is different with logistic regression: one can use (approx.) z tests and chi-square tests (which are approx. generalized likelihood ratio tests based on asymptotic theory), but residuals aren’t used like they are for OLS regression. To test H 0 : j 0 vs. H1 : j 0 , one can use the test statistic Z ˆ j Vˆar ( ˆ j ) 1 , using the fact that H0 Z ~ approx . N (0,1) (provided that the sample size isn’t too small). To test H 0 : q 1 ... p 0 against the general alternative, one can use an (approx.) chi-square test based on the difference in the estimated log likelihoods corresponding to the two models (one based on the assumption that the null hypothesis is true, and one which includes terms involving xq 1 ,..., x p ). I won’t give further details here, but I will show you how to perform such a test using R. These chi-square tests can be used to compare a full 3rd-order model to an additive rd 3 -order model, to compare a 2nd-order model to a 1st-order model, etc. Polynomial models tend to be used a lot in logistic regression. Perhaps this is due to the fact that while it can be believed that exp( g ( x )) p( x ) 1 exp( g ( x)) for some function g (x ) , it is often difficult to determine what should be used for g (x ) . However, it can be hoped that g (x ) can be suitably approximated by a sufficiently complex polynomial when the coefficients are fit using adequate data. When considering polynomial models, one doesn’t square or cube binary predictors (since squaring or cubing 0 and 1 has no effect). But interaction variables involving binary predictors can be useful (but with two binary predictors one doesn’t need to go beyond a simple product interaction). Although polynomial modeling, combined with stepwise and/or best subsets routines, may lead to a decent model, sometimes it’s good to investigate transformations of explanatory variables. One can do this graphically. First, divide the x j values up into four to ten groups, using three to nine cut points. For now, suppose that six groups are used. Then fit a model with x j replaced by w1 , w2 ..., w6 , where the wk are indicator variables. For each case, set wk equal to 1 if 1 Under the radical sign, the notation is for the estimator of the variance of regression coefficient). ˆ j (the estimator of the x j belongs to the k th group, and set wk equal to 0 otherwise (so that for each case exactly one of the indicator variables is equal to 1). Finally, plot the estimated coefficients for the indicator variables against the corresponding group midpoint or group mean. The pattern of the plotted points indicates whether x j should go into the model linearly, or whether it should be transformed. For example, suppose that for a sample of 24 cases, the x j values are distributed as shown below. x x x x x x xx x x x x x x x x x x x x xx x x xj The cutpoints indicated by the green arrows can be used to form six groups. The red marks show the group means. If the graph of the plotted points is as shown below, then x j should go into the model linearly. This is because the term ̂ j x j would have about the same effect on the value of the fitted logit as ˆ1w1 ˆ2 w2 ... ˆ6 w6 – each gives a contribution to the logit proportional to x j . The terms involving the indicators are flexible enough to allow x j to affect the logit in a nonlinear way, but the fit indicates (in this case) that the effect of the x j on the logit is (approx.) linear. Coefficient o o o o o o group mean 0 Coefficient o o o o o o group mean 0 The second plot above suggests that it may be good to use 1 x j as a predictor instead of x j . The shapes which suggest a positive power transformation (e.g., squaring) and a two- level thresholding transformation (which can be accomplished by replacing an explanatory variable by an indicator variable) are shown below. Positive power two-level thresholding It should be noted that this technique does adjust for the effects of other predictors (as do c+r plots, which are used for assessing the need for transformations in OLS regression).