Understanding and Interpreting Standardized Assessment Measures

advertisement

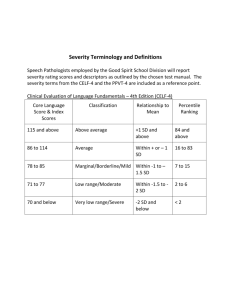

Setting the Stage
• Physical working conditions greatly affect test performance.
• High levels of focus and attention are required.
• Minimize opportunities for distraction.

The Examiner: Friend or Foe?
• The assessment process can be intimidating for many students.
• Take 3-5 minutes to establish rapport.
• Establish a positive relationship.
• Demonstrate that you care more about helping the student than about the test.

Introducing the Assessment
• Explain the purpose of the assessment using positive language:
  “I’d like us to do a few activities similar to what you do in class every day. This will let me know how you are doing and how I can help.”
• The goal is for students to see the assessment as a helpful tool rather than a dreaded test.

Standardization: A Basis for Comparison
• The goal of assessment is to understand an individual’s skills compared to a representative sample.
• Following standardization rules ensures that results can be interpreted accurately.
• Breaking standardization cannot help the student and may be harmful.

Standard Scores
• Raw scores are transformed into standard scores based on the performance of the normative sample.
• Most standardized tests used in schools have a mean of 100 and a standard deviation of 15.
• Standard scores express how far a student’s score lies above or below the average of the whole distribution of scores. For example, a score of 115 lies one standard deviation above the mean, and a score of 85 lies one standard deviation below it.

Bell Curve
[Figure: bell curve]

Standard Error of Measurement (SEM) and Confidence Bands
• Tests are imperfect, so test scores are imperfect: no standardized test is perfectly reliable.
• The score obtained on a given day is likely to differ from the score the student would obtain on another day.
• Confidence bands around the obtained score reflect this known degree of imprecision.

SEM and Confidence Bands
• Most tests allow the examiner to choose the level of confidence for the band placed around the obtained score: 68%, 90%, or 95%.
• As the level of confidence increases, the confidence band gets wider.
• Comparing confidence bands across subtests helps guide the interpretation of cluster scores (more on this later).

Percentile Ranks
• Show a student’s relative position compared to the standardization sample.
• Performance at the 45th percentile means the student did as well as or better than 45 percent of peers in the normative sample.
• Not the same as percent correct: percentile rank (PR) refers to a percentage of people, while percent correct (PC) refers to a percentage of items answered correctly.

Age and Grade Equivalents
Cautions:
• They likely do not reflect the student’s actual skill level.
• They are not equal units, so they cannot be added, subtracted, or averaged.
• The difference between GEs of 2.1 and 2.3 is not the same as the difference between GEs of 7.1 and 7.3.
• That said, AEs and GEs on the WJ-III/NU are not all bad.

If Test Results Don’t Support the Teacher’s Observations in the Classroom
• Compare task demands (e.g., Writing Samples vs. more typical classroom writing tasks).
• Compare the student’s behavior in the classroom to behavior during the 1:1 testing session.
• Look at the RPI vs. the standard score.

Woodcock-Johnson Clusters
“What are we measuring?”
1. Basic Reading Skills: measures skill in applying phonic and structural analysis skills to the pronunciation of unfamiliar printed words. Subtests include Letter-Word Identification and Word Attack. In the classroom: reading passages in science class, books and poems in English, cell formation in Biology, story problems in Math.
2. Reading Comprehension: a student’s ability to read a short passage and pick an appropriate word so the passage makes sense. Can the student understand, make sense of, and relay what they have read in sequence? After reading a passage, can the student answer who, what, and where questions?

“What are we measuring?”
1. Written Expression: how quickly and fluently a student can formulate and write a simple sentence, and how creatively the student can formulate sentences when given specific demands.
   For example, in the classroom, this may include journal entries, stories, poems, essays, etc.

“What are we measuring?”
1. Math Calculation Skills: a student’s basic calculation skills, which form the foundation of math: adding, subtracting, multiplying, dividing, and combinations of these basic computations. Depending on the student’s level, items may also include some components of trigonometry, geometry, and calculus.
2. Math Reasoning Skills: the student must listen to the problem, recognize the procedure needed to solve it, and do the calculations. This area measures a student’s ability to pick out important information from a paragraph containing extraneous information and to perform relatively basic calculations.

How do we explain results to parents and staff?
1. Use a bell curve to show the average range and where the student falls in relation to others of the same age or grade. A laminated copy with a whiteboard marker works well.

Explanation of Results:
1. Woodcock-Johnson Tests of Achievement printouts:

TABLE OF SCORES
Woodcock-Johnson III Normative Update Tests of Achievement (Form A)
WJ III NU Compuscore and Profiles Program, Version 3.2
Norms based on age 15-2

CLUSTER/Test                Raw   W    AE     EASY to DIFF   RPI    SS (68% Band)   GE
BRIEF READING               -     532  15-11  13-3    25     92/90  103 (99-107)    10.4
BASIC READING SKILLS        -     543  26     16-0    >30    97/90  112 (108-117)   13.2
READING COMP                -     518  12-11  10-8    16-7   81/90  92 (88-96)      7.5
WRITTEN EXPRESSION          -     511  12-8   10-4    18-1   80/90  91 (86-96)      7.2
________________________________________
Letter-Word Identification  70    556  23     16-2    >30    98/90  112 (108-116)   13.0
Writing Fluency             17    501  10-3   8-11    12-0   46/90  78 (73-84)      4.9
Passage Comprehension       33    509  11-6   9-6     14-11  74/90  89 (84-95)      6.1
Writing Samples             18-D  520  >30    13-9    >30    95/90  109 (103-116)   13.0
Word Attack                 30    530  >30    15-3    >30    96/90  109 (102-116)   14.8
Reading Vocabulary          -     526  14-2   11-10   17-7   86/90  97 (93-100)     8.7

WJ Printouts (continued):
1. Age/Grade Profile: a graphic showing where the student falls, based on chronological age, in relation to the average level.
2. Standard Score/Percentile Rank Profiles.
3. Parent Report: shows boxes labeled ‘negligible’, ‘very limited’, ‘limited’, ‘limited to average’, ‘average’, ‘average to advanced’, ‘advanced’, and ‘very advanced’, with an X marking where the student falls on each task. It also provides national percentiles for each task.

Other Presentation Styles/Ideas:
1. A document camera, so everyone is looking at the same documents.
2. A projector, so everyone can follow the IEP/ESER at the same time and see notes, changes, or additions as they are made.
3. Copies of the evaluation results for everyone at the meeting.
4. Sit near the parent/guardian so you can talk with them about the results, show them the information on your charts, graphs, and bell curves, and clarify anything confusing.

Remember…
Not all parents, guardians, or staff understand statistics, standard scores, scaled scores, bell curves, averages, etc. Talk in simple terms and DESCRIBE subtests/clusters as they relate to the child in the classroom!

Resources:
• WJ-III Assessment Service Bulletins can be found here: http://www.riverpub.com/clinical/wjasb.html
  • #5 compares the WJ-III with other achievement tests.
  • #8 discusses educational interventions based on WJ-III/NU performance.
  • #11 explains the W scale and the Relative Proficiency Index (RPI).
• Essentials of WJ III Tests of Achievement Assessment, Mather, Wendling and Woodcock, 2001.
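The score arithmetic behind the Standard Scores, Percentile Ranks, and SEM slides can be sketched in a few lines of Python. This is a minimal illustration, not the Compuscore program’s method: it assumes scores are normally distributed with a mean of 100 and a standard deviation of 15, and it treats the SEM as a single known number. The SEM of 4 used below is hypothetical, chosen only because it happens to reproduce the 103 (99-107) band shown for Brief Reading. The sketch builds symmetric bands, while real printout bands such as 78 (73-84) may be slightly asymmetric. The function names are illustrative, not part of any WJ software.

```python
from statistics import NormalDist

MEAN, SD = 100.0, 15.0     # scale used by most school achievement tests
STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


def z_to_standard_score(z: float) -> float:
    """Convert a z-score (distance from the norm-group mean in SD units)."""
    return MEAN + SD * z


def percentile_rank(ss: float) -> int:
    """Approximate percentile rank for a standard score, assuming normality."""
    z = (ss - MEAN) / SD
    return round(100 * STD_NORMAL.cdf(z))


def confidence_band(ss: float, sem: float, level: float = 0.68) -> tuple[int, int]:
    """Symmetric band of +/- z * SEM around the obtained score.

    `level` is the confidence level (0.68, 0.90, or 0.95); the matching z
    value comes from the normal distribution (about 1.0, 1.645, 1.96).
    """
    z = STD_NORMAL.inv_cdf(0.5 + level / 2)
    return round(ss - z * sem), round(ss + z * sem)


if __name__ == "__main__":
    # Standard scores taken from the sample printout.
    for ss in (112, 92, 78):
        print(f"SS {ss} -> percentile rank about {percentile_rank(ss)}")
    # Hypothetical SEM of 4 points around an obtained score of 103.
    for level in (0.68, 0.90, 0.95):
        print(f"{level:.0%} band: {confidence_band(103, sem=4.0, level=level)}")
```

Running it shows the band widening from (99, 107) at 68% to (95, 111) at 95%, as the SEM slide describes, and shows that a standard score of 112 sits near the 79th percentile, which is a statement about people, not about 79 percent of items correct.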
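The RPI column on the printout, and the advice to look at RPI vs. the standard score, rest on the W scale that Assessment Service Bulletin #11 explains. The sketch below assumes the Rasch relationship as we understand it: the W scale has 9.1024 W units per logit (natural-log odds), and tasks that age or grade peers perform with 90% success sit 20 W units below the peers’ median W. The reference (median peer) W is not shown in the printout excerpt, so it is an input here, and `relative_proficiency_index` is a hypothetical helper, not part of any WJ software. Check the bulletin before relying on these constants.

```python
import math

W_PER_LOGIT = 9.1024  # assumed W units per logit (natural-log odds)


def relative_proficiency_index(w_student: float, w_reference: float) -> float:
    """Predicted percent success on tasks that reference peers perform at 90%.

    Such tasks lie 20 W below the reference W (9.1024 * ln(9) is about 20),
    so the student's margin over them is (w_student - w_reference + 20).
    """
    logit = (w_student - w_reference + 20.0) / W_PER_LOGIT
    return 100.0 / (1.0 + math.exp(-logit))


if __name__ == "__main__":
    # W differences (student minus reference) and the resulting RPI (x/90).
    for w_diff in (10, 0, -10, -20):
        rpi = relative_proficiency_index(500 + w_diff, 500)
        print(f"W diff {w_diff:+d}: RPI {rpi:.0f}/90")
```

Read this way, the Writing Fluency RPI of 46/90 on the printout means the student is predicted to succeed about 46% of the time on tasks that typical age peers perform with 90% success. That describes functional proficiency, whereas the standard score of 78 describes only where the student ranks.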