How to Set Performance Test Requirements and Expectations

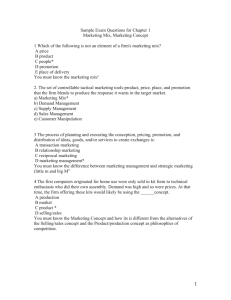

Readiness Index – Is your application ready for Production?
Jeff Tatelman, SQuAD, October 2008

Agenda

- Building a Metrics Program
  - Typical Test Organizations
  - Readiness for Change
  - Metrics and Productivity
- Production Readiness
  - Introduction & Objective
  - Four Key Metrics
  - Reporting

Typical Test Organizations

- No metrics collected
- No collection of requirements
- Limited formal reporting on project status
- Some central test repositories, but no dashboarding of results
- Minimal effort towards process improvement

Dimensions Of Readiness

- Motivation
- Investment
- Skills
- Education
- Culture
- Support Staff Aids/Maturity
- Process Maturity

Getting Started

- Set Measurement Objectives
- Select Measures
- Develop Measurement Program
- How to Use the Results

Set Measurement Objectives: Determine Approach

- Determine Scope
  - One application? One project?
  - Which measures will be included as a priority
  - Focus on a few to start with to get 'quick wins'
- Determine Method
  - Identify best method to track and analyze agreed metrics
- Determine Timescale
  - Full project lifecycle?
  - Time-boxed approach, e.g. 1, 3 or 6 months
- Determine Deliverables
  - Report, audience, next steps

Metrics and Productivity

- Strategic
  - Is it to increase customer satisfaction?
  - Is it to achieve CMM level 2?
  - Is it to achieve industry standards?
- Tactical
  - Is it to improve vendor delivery?
  - Is it to increase timeliness of system delivery?
- Operational
  - Is it to reduce defects found in testing?
  - Is it to improve requirements definition?
Measuring Unit Examples

- Strategic
  - Quality
  - Customer satisfaction
  - Timeliness (delivery)
  - Personnel
  - Industry benchmarking
- Tactical
  - Estimating and planning
  - System quality
  - System delivery cost/time
  - Budget
  - Productivity
  - Cost of quality
- Operational
  - Schedule tracking
  - Effort tracking
  - Defects
  - Problem resolution
  - System availability

Develop Measurement Program

- Establish Specific Measurement Objectives
- Define a Critical Metrics Set
- Select Measures to Support the Metrics Set
- Put Collection Mechanisms into Place
- Determine Timing of Data Collection
- Establish Mechanism for Evaluating Results
- Communicate Results
- Establish Process for Future Planning Based on Metrics

How To Use The Results

- Conduct root cause analysis of the data
- Identify the areas that would have the biggest impact and look at how to improve them
- Implement procedures to continually improve the processes
- Revisit the original list and determine what to address next

Key Points to Remember

- Don't try to measure too much
- Understand the goals of your team before you determine what to measure
- Determine which metrics support these goals
- Don't let your metrics define the behavior of the team
- Change or choose a set of relative metrics that cannot be manipulated
- Monitor and identify trends, define areas for improvement

Allan Page, Measurement that Matter, Better Software, October 2005

Production Readiness
Based on Mike Ennis' presentation at Star West 2006

How do you know when a product is ready to ship?
- Quality Metrics
- Customer Commitment
- Release Dates Are Preset
- Indefinite Testing
- Adequate Test Coverage
- Time & Resources
- Release Criteria

Release Criteria

- No open critical or high defects
- Minimal number of medium & low defects, each approved by Senior Management
- Product is able to run for 72 consecutive hours
- No open installation or configuration issues
- All pre-defined performance criteria have been met

Determining What to Measure

- Test Case Execution Percentage: the percentage of tests that have been attempted during the test cycle
- Test Case Success Percentage: the percentage of tests that have passed during the test cycle
- Number of Unresolved Defects: the number of open defects that are currently logged against the product
- Defect Arrival Rate: the number of defects found in a given day, week or build

Readiness Metrics Objective

- Provide management with an overall picture to assess whether the project is ready to be placed in production.
- Evaluate an application's go / no-go production readiness status by:
  - Tracking test case execution and defect metrics over time
  - Calculating the Production Readiness Index based on the above metrics

Test Case Spreadsheet Example

Defect Spreadsheet Example

Setting Range For Each Metric

- Test Case Execution Percentage: 10 = 100%, 9 = 90-99%, 8 = 80-89%, 7 = 70-79%, … etc.
- Defect Arrival Rate This Week: 10 = 0, 9 = 1-2, 8 = 3-4, … etc.

Creating and Analyzing Readiness Example

| Metric | Rating | Ranges |
| --- | --- | --- |
| Defects Arrival Rate | 9 | 10 = 0, 9 = 1-2, 8 = 3-4, 7 = 4-5, 6 = 5-6, 5 = 6-7, 4 = … |
| Total Open Defects | 9 | 10 = 0-5, 9 = 5-10, 8 = 11-15, 7 = 16-20, 6 = 20-25 … |
| Test Success % | 7 | 10 = 100%, 9 = 99-97%, 8 = 96-94%, 7 = 93-90% ... |
| Test Completion % | 6 | 10 = 100-95%, 9 = 94-90%, 8 = 89-85%, 7 = 84-80% ... |

Total rating: 31 (Green = 35-40, Yellow = 29-34, Red < 28)

Spider Chart Example

[Spider chart on a 0-10 scale with four axes: Test Completion, Defect Arrival Rate, Unresolved Defects, Test Success]

Management Graph Example

- Test Execution Ratings (green): scale of 10, goal = 10
- Defect Ratings (red): reverse scale of 10, goal = 0

[Bar chart on a 0.0-10.0 scale with bars for Test Completion, Test Success, Unresolved Defects and Defect Arrival Rate; data labels shown: 8.9, 7.7, 3.1, 1.1]

Readiness Index Example

[Line chart: Production Readiness Index (scale of 100) plotted by Week / Day, 1 through 16]

Final Thoughts

- Managing the risks
  - Understand the relationships between the metrics
  - Learn to anticipate and minimize the risks before they happen
  - Always know the information behind the data
- Are you ready to release?
  - Redefine your Release Criteria using the individual/overall rating scale
  - Use colors for presentation & effectiveness
  - Let the data speak for itself

Key Attributes Of a Good Measure

- Usefulness: Does it tell us what's happening, what's wrong, or what we need to know?
- Trustworthiness: Does not provide false indications
- Simplicity: If it is too difficult to read, it will get abandoned
- Timeliness: Does it indicate the health of a system... advance warning?
- Accessibility: Must be visible, easy to get to

Questions
jeffcolorado@juno.com
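
The rating example in the slides (four metrics rated 9, 9, 7 and 6, total 31) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the presenter's spreadsheet: the function names are hypothetical, a total of exactly 28 is treated as Red because the slide leaves it unassigned, and the rescaling to a 0-100 index is a guess, since the slides chart the index on a scale of 100 without giving the formula.

```python
from typing import Dict

# Thresholds quoted from the slide: Green = 35-40, Yellow = 29-34, Red < 28.
# The slide does not say where a total of exactly 28 falls; Red is an assumption.
GREEN_MIN = 35
YELLOW_MIN = 29


def percentage(part: int, whole: int) -> float:
    """Return part/whole as a percentage (0.0 when whole is zero).

    Usable for Test Case Execution % (attempted / planned) and
    Test Case Success % (passed / attempted).
    """
    return 100.0 * part / whole if whole else 0.0


def total_rating(ratings: Dict[str, int]) -> int:
    """Sum the per-metric ratings, each assumed to lie on a 0-10 scale."""
    for name, value in ratings.items():
        if not 0 <= value <= 10:
            raise ValueError(f"{name}: rating {value} is outside 0-10")
    return sum(ratings.values())


def status(total: int) -> str:
    """Map a total rating to the slide's traffic-light colors."""
    if total >= GREEN_MIN:
        return "Green"
    if total >= YELLOW_MIN:
        return "Yellow"
    return "Red"


def index_on_100(total: int, metric_count: int) -> float:
    """Hypothetical rescaling of the total to a 0-100 index (not from the slides)."""
    return 100.0 * total / (10 * metric_count)


# Ratings taken from the "Creating and Analyzing Readiness Example" slide.
example = {
    "Defect Arrival Rate": 9,
    "Total Open Defects": 9,
    "Test Success %": 7,
    "Test Completion %": 6,
}
total = total_rating(example)
print(total, status(total), index_on_100(total, len(example)))  # 31 Yellow 77.5
```

Keeping the thresholds as named constants makes it easy to redefine the release criteria per project, which is what the Final Thoughts slide recommends.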