75 Years After Likert: Thurstone Was Right!


Assessing Personality


(And some implications for I/O)

Colleagues

Sasha Chernyshenko

Steve Stark

Thurstone

In a series of papers in the late 1920s, Thurstone asserted “Attitudes Can Be Measured” and provided several methods for their measurement

He assumed that a conscientious person would endorse a statement that reflected his/her attitude… but, “as a result of imperfections, obscurities, or irrelevancies in the statement, and inaccuracy or carelessness of the subjects”, not everyone will endorse a statement, even when it matches their attitude

Thurstone (1929), Psychological Review

For N1 people with attitude S1, all should endorse a statement with scale value S1 if they were conscientious and the item was perfect; but only n1 actually endorse the item

These people will endorse another statement with scale value S2 with a probability p that is a function of |S1 - S2|
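A minimal sketch of Thurstone's proximity idea, assuming (hypothetically) that the endorsement probability falls off as a Gaussian in |S1 - S2|, and that even a perfectly matched statement is endorsed only with probability `ceiling`, standing in for n1/N1. Thurstone did not commit to this functional form; `endorse_prob`, `spread`, and `ceiling` are illustrative names.

```python
import math

def endorse_prob(s_person: float, s_item: float,
                 spread: float = 1.0, ceiling: float = 1.0) -> float:
    """Probability that a person with attitude s_person endorses a statement at s_item.

    Depends only on |S1 - S2|. The Gaussian fall-off, `spread`, and `ceiling`
    (playing the role of n1/N1 < 1 for imperfect items or careless respondents)
    are illustrative assumptions, not Thurstone's own specification.
    """
    distance = abs(s_person - s_item)
    return ceiling * math.exp(-(distance ** 2) / (2 * spread ** 2))

# People at S1 = 5.0; hypothetically, only 80% endorse even a perfectly matched statement.
for s2 in (5.0, 6.0, 7.0, 9.0):
    print(f"S2 = {s2}: p = {endorse_prob(5.0, s2, ceiling=0.8):.3f}")
```

The probability is symmetric in the direction of the mismatch: a statement one unit above the person is as unlikely to be endorsed as one a unit below.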

[Figure from Thurstone’s paper (Thurstone, 1929)]

Thurstone (1928), “Attitudes Can Be Measured”

Gave an example of an attitude variable, militarism-pacifism, with six statements representing a range of attitudes:

[Figure from Thurstone (1928): six statements, labeled a through f, placed along the militarism-pacifism continuum]

A pacifist “would be willing to indorse all or most of the opinions in the range d to e and … he would reject as too extremely pacifistic most of the opinions to the left of d, and would also reject the whole range of militaristic opinions.”

“His attitude would then be indicated by the average or mean of the range that he indorses”

Implications

On Thurstone’s pacifism-militarism scale, three people might endorse two items each:

Person 1 endorses f and d, and is very pacifistic

Person 2 endorses e and b, and is neutral

Person 3 endorses c and a, and is very militaristic

Thus, it is crucial to know which items are endorsed!
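To make the point concrete, here is a small sketch of Thurstone's scoring rule (the mean scale value of the endorsed statements) next to a simple endorsement count. The scale values for a through f are hypothetical, chosen only to reproduce the ordering implied by the three-person example; they are not Thurstone's published values.

```python
from statistics import mean

# Hypothetical scale values on an assumed 0 (extreme pacifism) to 10 (extreme militarism)
# metric. NOT Thurstone's published values; chosen only to mirror the three-person example.
SCALE_VALUES = {"f": 1.0, "d": 2.0, "e": 4.0, "b": 6.0, "c": 8.0, "a": 9.0}

def thurstone_score(endorsed: list[str]) -> float:
    """Thurstone's rule: the mean scale value of the statements a person endorses."""
    return mean(SCALE_VALUES[item] for item in endorsed)

def endorsement_count(endorsed: list[str]) -> int:
    """A naive count that ignores *which* statements were endorsed."""
    return len(endorsed)

people = {"Person 1": ["f", "d"], "Person 2": ["e", "b"], "Person 3": ["c", "a"]}
for name, items in people.items():
    print(name, endorsement_count(items), thurstone_score(items))
```

All three people endorse two statements, so the count cannot separate them, while the mean scale value places them at 1.5, 5.0, and 8.5 on the assumed metric.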

Likert 1932

Proposed a much simpler approach: a five-point response scale with options “Strongly Approve”, “Approve”, “Neutral”, “Disapprove”, and “Strongly Disapprove”.

The numerical values 1 to 5 were assigned to the different response options

And an individual’s score was the sum or mean of the numerical scores

Likert 1932

Likert evaluated his scales by:

Split-half reliability

Item-total correlations

To make this work, he hit upon the idea of reverse scoring: e.g., statements like d and f from Thurstone needed to be scored in the opposite direction of statements like a and c.
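A sketch of Likert's summated scoring with reverse keying, assuming the 1 to 5 coding described above and a hypothetical keying in which a and c are scored toward militarism while d and f are reversed. Function names are illustrative.

```python
# Hypothetical keying: a and c scored toward militarism, d and f reverse-scored.
N_POINTS = 5  # five-point scale coded 1..5

def reverse(score: int, n_points: int = N_POINTS) -> int:
    """Reverse-score a response: 1 <-> 5, 2 <-> 4, 3 stays 3."""
    if not 1 <= score <= n_points:
        raise ValueError(f"score must be between 1 and {n_points}")
    return n_points + 1 - score

def likert_score(responses: dict[str, int], reverse_keyed: set[str]) -> int:
    """Summated rating: reverse the flagged items, then add everything up."""
    return sum(reverse(r) if item in reverse_keyed else r
               for item, r in responses.items())

answers = {"a": 5, "c": 4, "d": 1, "f": 2}
print(likert_score(answers, reverse_keyed={"d", "f"}))  # 5 + 4 + 5 + 4 = 18
```

Dividing the sum by the number of items gives the mean-score variant mentioned above; the ordering of respondents is the same either way.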

Likert 1932

When computing item-total correlations, “if a zero or very low correlation coefficient is obtained, it indicates that the statement fails to measure that which the rest of the statements measure.” (p. 48)

“Thus item analysis reveals the satisfactoriness of any statement so far as its inclusion in a given attitude scale is concerned”
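Likert's two checks can be sketched in a few lines. This version computes a corrected item-total correlation (each item against the sum of the other items, a common refinement; the slides do not say whether Likert excluded the item itself) and an odd-even split-half correlation stepped up with the Spearman-Brown formula. The response matrix is hypothetical and already reverse-scored.

```python
import math

def pearson(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def corrected_item_total(data: list[list[int]], j: int) -> float:
    """Correlate item j with the sum of the *other* items, so it is not inflated by itself."""
    item = [row[j] for row in data]
    rest = [sum(row) - row[j] for row in data]
    return pearson(item, rest)

def split_half_reliability(data: list[list[int]]) -> float:
    """Odd-even split, stepped up to full length with the Spearman-Brown formula."""
    first = [sum(row[0::2]) for row in data]   # items 1, 3, ...
    second = [sum(row[1::2]) for row in data]  # items 2, 4, ...
    r = pearson(first, second)
    return 2 * r / (1 + r)

# Hypothetical responses: rows = people, columns = items (already reverse-scored).
DATA = [
    [5, 5, 4, 2],
    [4, 4, 5, 4],
    [2, 1, 2, 4],
    [1, 2, 1, 2],
]
for j in range(4):
    print(f"item {j + 1}: corrected r = {corrected_item_total(DATA, j):.2f}")
print(f"split-half = {split_half_reliability(DATA):.2f}")
```

In this toy matrix, item 4 has a corrected item-total correlation of exactly zero, so by Likert's criterion it "fails to measure that which the rest of the statements measure."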

Likert 1932

Likert discarded intermediate statements like “Compulsory military training in all countries should be reduced but not eliminated”

Such a statement is “double-barreled and of little value because it does not differentiate persons in terms of their attitudes” (p. 34)

Likert Scaling

Although Likert didn’t articulate a psychometric model for his procedure, his analysis implies what Coombs (1964) called a dominance response process.

Specifically, someone high on the trait or attitude measured by a scale is likely to “Strongly Agree” with a positively worded item and “Strongly Disagree” with a negatively worded item

Example of a Dominance Process

Person endorses item if her standing on the latent trait, theta, is more extreme than that of the item.

[Figure: probability of a positive response (0.0 to 1.0) plotted against theta (-3 to 3), with the locations of a person and an item marked]
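A minimal sketch of a dominance item response function. The logistic form below is the standard 2PL (c = 0) / 3PL (c > 0) curve of the kind fit to personality data later in the talk; it is written without the 1.7 scaling constant (an assumption about the metric), and the item parameters are hypothetical.

```python
import math

def irf_logistic(theta: float, a: float, b: float, c: float = 0.0) -> float:
    """Dominance IRF. c = 0 gives the 2PL; c > 0 gives the 3PL.

    a: discrimination, b: item location, c: lower asymptote.
    Written without the D = 1.7 scaling constant (an assumption; some programs include it).
    """
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))

# Hypothetical item at b = 0: endorsement keeps rising as theta moves past the item.
for theta in (-3, -2, -1, 0, 1, 2, 3):
    print(theta, round(irf_logistic(theta, a=1.5, b=0.0), 3))
```

The curve never turns back down: the further a person lies beyond the item in the keyed direction, the more likely endorsement becomes, which is exactly the dominance assumption.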

Thurstone Scaling

Thurstone assumed people endorse items reflecting attitudes close to their own feelings

Coombs (1964) called this an ideal point process

Sometimes called an unfolding model

Example of an Ideal Point Process

Person endorses item if his standing on the latent trait is near that of the item.

“I enjoy chatting quietly with a friend at a cafe.”

Disagree either because:

Too introverted (uncomfortable in public places)

Too extraverted (chatting over coffee is boring)

[Figure: the item sits between the “Too Introverted” and “Too Extraverted” ends of the trait continuum]

Important Point:

The item-total correlation of intermediate ideal point items will be close to zero!
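The near-zero item-total correlation can be checked with a small simulation. It assumes a hypothetical single-peaked (Gaussian-shaped) endorsement curve rather than the GGUM, four "negative" items at -1.5 that are reverse-scored Likert-style, four "positive" items at +1.5, and one intermediate item at 0. Everything here (locations, curve shape, sample size, seed) is illustrative.

```python
import math
import random

def pearson(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def ideal_point_prob(theta: float, location: float) -> float:
    """Hypothetical single-peaked IRF: endorsement is likeliest when theta is near the item."""
    return math.exp(-((theta - location) ** 2) / 2)

# Hypothetical scale: items 0-3 "negative" (reverse-scored), 4-7 "positive", 8 intermediate.
LOCATIONS = [-1.5] * 4 + [1.5] * 4 + [0.0]
REVERSE = [True] * 4 + [False] * 4 + [False]

def simulate(n_people: int = 5000, seed: int = 1) -> list[list[int]]:
    """Generate 0/1 responses from the ideal point curve, then apply Likert-style reverse keying."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_people):
        theta = rng.gauss(0.0, 1.0)
        row = []
        for loc, rev in zip(LOCATIONS, REVERSE):
            u = 1 if rng.random() < ideal_point_prob(theta, loc) else 0
            row.append(1 - u if rev else u)
        data.append(row)
    return data

def corrected_item_total(data: list[list[int]], j: int) -> float:
    """Correlate item j with the sum of the remaining items."""
    item = [row[j] for row in data]
    rest = [sum(row) - row[j] for row in data]
    return pearson(item, rest)

data = simulate()
print(f"positive item:     r = {corrected_item_total(data, 4):.2f}")
print(f"intermediate item: r = {corrected_item_total(data, 8):.2f}")
```

The positive item correlates substantially with the rest of the scale, while the intermediate item hovers around zero: its endorsement rate is high in the middle of the trait range and low at both ends, a pattern a summed score cannot track linearly. Screening on item-total correlation would therefore discard it.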

Which Process is Appropriate for Temperament Assessment?

In a series of studies, we’ve:

Examined the appropriateness of a dominance process by fitting models of increasing complexity to data from two personality inventories

Compared fits of dominance and ideal point models of similar complexity to 16PF data

Compared fits of dominance and ideal point models to sets of items not preselected to fit dominance models

Fitting Traditional Dominance Models to Personality Data

Data

16PF 5th Edition

• 13,059 examinees completed 16 noncognitive scales

Goldberg’s Big Five factor markers

• 1,594 examinees completed 5 noncognitive scales

Models examined

Parametric – 2PLM, 3PLM

Nonparametric – Levine’s Maximum Likelihood Formula Scoring (MFSM)

[Six figure slides titled “Three-Parameter Logistic Model” and one titled “Two-Parameter Logistic Model”]

Methods for Assessing Fit: Fit Plots

[Figure: probability of a positive response (0.0 to 1.0) against theta (-3.0 to 3.0), comparing the model item response function (IRF) with the empirical response proportions (EMP)]
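A simplified sketch of how points for a fit plot like this could be produced: bin examinees on theta, then compare the model IRF at each bin midpoint with the observed proportion endorsing. For simplicity it uses simulated true theta values and a hypothetical 2PL item; operational fit software works from estimated trait distributions, and its exact EMP computation is not described in these slides.

```python
import math
import random

def irf_2pl(theta: float, a: float, b: float) -> float:
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def fit_plot_points(thetas, responses, a, b, edges):
    """Per theta bin: (midpoint, model IRF at the midpoint, observed proportion endorsing)."""
    points = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = [u for t, u in zip(thetas, responses) if lo <= t < hi]
        if not in_bin:
            continue  # skip empty bins rather than divide by zero
        mid = (lo + hi) / 2
        points.append((mid, irf_2pl(mid, a, b), sum(in_bin) / len(in_bin)))
    return points

# Hypothetical data generated from the model itself, so IRF and EMP should agree closely.
rng = random.Random(7)
A, B = 1.2, 0.3
thetas = [rng.gauss(0.0, 1.0) for _ in range(50_000)]
responses = [1 if rng.random() < irf_2pl(t, A, B) else 0 for t in thetas]
edges = [-3.0 + 0.5 * k for k in range(13)]  # -3.0, -2.5, ..., 3.0

for mid, irf, emp in fit_plot_points(thetas, responses, A, B, edges):
    print(f"{mid:+.2f}  IRF={irf:.3f}  EMP={emp:.3f}")
```

When the model is misspecified (for example, a 2PL fit to an intermediate ideal point item), the EMP points bend away from the monotone IRF, which is what a fit plot is meant to reveal.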

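Methods for Assessing Fit: Chi-Squares

Chi-squares are typically computed for single items:

$$\chi^2_i = \sum_{k=1}^{s} \frac{\left[O_i(k) - E_i(k)\right]^2}{E_i(k)}, \qquad E_i(k) = N \int P(u_i = k \mid \theta)\, f(\theta)\, d\theta$$

where s is the number of response options, O_i(k) and E_i(k) are the observed and expected numbers of people choosing option k of item i, and f(θ) is the trait distribution.

It is very important to examine item pairs and triplets as well; large values may indicate violations of local independence or a misspecified model:

$$E_{ij}(k, k') = N \int P(u_i = k \mid \theta)\, P(u_j = k' \mid \theta)\, f(\theta)\, d\theta$$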
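The expected frequencies can be approximated numerically. This sketch assumes f(θ) is standard normal, uses a hypothetical 2PL item model for P(u = k | θ), and replaces each integral with a fixed-step sum over θ in [-4, 4]; the observed counts are made up.

```python
import math

def irf_2pl(theta: float, a: float, b: float) -> float:
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def normal_pdf(x: float) -> float:
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

# f(theta) taken as standard normal; integrals approximated on a grid (an assumption).
STEP = 0.01
GRID = [-4.0 + STEP * i for i in range(801)]

def expected_single(n: int, a: float, b: float) -> list[float]:
    """E_i(k) for k = 0 (disagree) and k = 1 (agree)."""
    p1 = sum(irf_2pl(t, a, b) * normal_pdf(t) for t in GRID) * STEP
    return [n * (1 - p1), n * p1]

def expected_pair(n: int, item_i: tuple[float, float],
                  item_j: tuple[float, float]) -> list[float]:
    """E_ij(k, k') in the order (0,0), (0,1), (1,0), (1,1); local independence given theta."""
    cells = [0.0, 0.0, 0.0, 0.0]
    for t in GRID:
        w = normal_pdf(t) * STEP
        pi, pj = irf_2pl(t, *item_i), irf_2pl(t, *item_j)
        cells[0] += n * (1 - pi) * (1 - pj) * w
        cells[1] += n * (1 - pi) * pj * w
        cells[2] += n * pi * (1 - pj) * w
        cells[3] += n * pi * pj * w
    return cells

def chi_square(observed: list[float], expected: list[float]) -> float:
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical observed counts for one item and one item pair (N = 1000).
print(chi_square([520, 480], expected_single(1000, 1.0, 0.0)))
print(chi_square([310, 190, 160, 340], expected_pair(1000, (1.0, 0.0), (1.2, 0.2))))
```

The pair version is where local-dependence problems show up: two items driven by something beyond θ produce joint counts that the product P(u_i | θ)P(u_j | θ) cannot reproduce.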

Methods for Assessing Fit: Chi-Squares

To aid interpretation of chi-squares:

Adjust to a sample size of 3,000

Compare groups of different sizes

The expected value of a noncentral chi-square is equal to its df plus N times the noncentrality parameter d:

$$E(\chi^2) = df + N d$$

where N is the sample size. So an estimate of the noncentrality parameter is

$$\hat{d} = (\chi^2 - df)/N.$$

Adjusted Chi-square

To adjust to a sample size of, say, 250, use

$$\text{Adjusted } \chi^2 = 250\,(\chi^2 - df)/N + df$$

For IRT, we usually adjust to N = 3000 and divide by the df to get an adjusted chi-square/df ratio

Less than 2 is great, less than 3 is OK
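The adjustment follows directly from the two formulas: estimate d from the observed sample, then compute the chi-square that the same noncentrality would produce at the target sample size. A sketch with hypothetical inputs; the function names are illustrative.

```python
def noncentrality_estimate(chi2: float, df: int, n: int) -> float:
    """d-hat = (chi2 - df) / N, from E(chi2) = df + N * d."""
    return (chi2 - df) / n

def adjusted_chi2(chi2: float, df: int, n: int, target_n: int = 3000) -> float:
    """Chi-square that the same noncentrality would produce in a sample of size target_n."""
    return df + target_n * noncentrality_estimate(chi2, df, n)

def adjusted_ratio(chi2: float, df: int, n: int, target_n: int = 3000) -> float:
    """Adjusted chi-square divided by its df (rule of thumb: < 2 great, < 3 OK)."""
    return adjusted_chi2(chi2, df, n, target_n) / df

# Hypothetical item: chi-square of 30 on 10 df in a sample of 1,000.
print(adjusted_chi2(30, 10, 1000, target_n=250))  # about 15: 10 + 250 * 0.02
print(adjusted_ratio(30, 10, 1000))               # about 7: (10 + 3000 * 0.02) / 10
```

Because d is a per-person quantity, two samples with the same misfit but different N give the same adjusted value: a chi-square of 110 on 10 df with N = 5,000 also adjusts to 70 at N = 3,000.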

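Adjusted Chi-square/df for an Ability Test

Frequency table of adjusted (N = 3000) chi-square/df ratios

| | <1 | 1<2 | 2<3 | 3<4 | 4<5 | 5<7 | >7 | Mean | SD |
|---|---|---|---|---|---|---|---|---|---|
| Singlets | 11 | 3 | 2 | 1 | 0 | 2 | 1 | 1.877 | 2.923 |
| Doublets | 77 | 44 | 31 | 16 | 12 | 9 | 1 | 1.829 | 1.734 |
| Triplets | 327 | 424 | 264 | 92 | 14 | 16 | 3 | 1.71 | 1.092 |

Adjusted chi-square/df < 3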

Results for 16PF Sensitivity Scale: Mean Chi-sq/df Ratios

| Model | Singles | Doubles | Triples |
|---|---|---|---|
| 2PL | 0.98 | 4.05 | 5.45 |
| 3PL | 0.87 | 3.89 | 5.23 |
| SGR | 0.99 | 7.76 | 7.12 |
| MFS-dich | 2.91 | 2.61 | 2.42 |
| MFS-poly | 1.55 | 2.68 | 2.58 |

What if Items Assessed Trait Values Along the Whole Continuum?

Items on existing personality scales have been pre-screened on item-total correlation

We speculate that items measuring intermediate trait values are systematically deleted

So, what happens if a scale includes some intermediate items?

TAPAS Well-being Scale

Tailored Adaptive Personality Assessment System

Assesses up to 22 facets of the Big Five

Well-being is a facet of emotional stability

We wrote items reflecting low, moderate, and high well-being

For example, TAPAS Well-Being Scale:

WELL04, “I don’t have as many happy moments in my life as others have.”

WELL17, “My life has had about an equal share of ups and downs.”

WELL41, “Most days I feel extremely good about myself.”

In total, 20 items: 5 negative, 9 positive, and 6 neutral

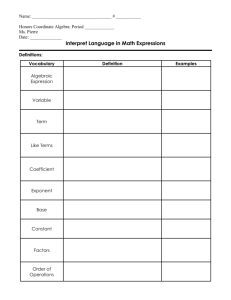

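Traditional Analysis Results

| # | Item_Name | Initial SME Location | Reverse | Mean | SD | Factor Loading | CITC (alpha = .76) |
|---|---|---|---|---|---|---|---|
| 1 | WELL02 | negative | r | 2.14 | 0.80 | -0.40 | 0.35 |
| 2 | WELL04 | negative | r | 2.08 | 0.87 | -0.45 | 0.40 |
| 3 | WELL06 | negative | r | 2.23 | 0.78 | -0.55 | 0.45 |
| 4 | WELL09 | negative | r | 2.22 | 0.76 | -0.53 | 0.42 |
| 5 | WELL13 | negative | r | 2.20 | 0.77 | -0.54 | 0.45 |
| 6 | WELL16 | neutral | | 2.48 | 0.85 | 0.08 | 0.08 |
| 7 | WELL17 | neutral | | 2.82 | 0.73 | 0.13 | 0.15 |
| 8 | WELL19 | neutral | | 2.85 | 0.65 | -0.09 | -0.05 |
| 9 | WELL20 | neutral | | 3.00 | 0.89 | 0.04 | 0.06 |
| 10 | WELL23 | neutral | | 3.03 | 0.64 | 0.07 | 0.11 |
| 11 | WELL26 | neutral | | 2.80 | 0.78 | -0.14 | 0.06 |
| 12 | WELL29 | positive | | 2.89 | 0.74 | 0.36 | 0.48 |
| 13 | WELL30 | positive | | 2.77 | 0.74 | 0.56 | 0.42 |
| 14 | WELL34 | positive | | 3.13 | 0.70 | 0.46 | 0.35 |
| 15 | WELL38 | positive | | 2.80 | 0.82 | 0.57 | 0.49 |
| 16 | WELL40 | positive | | 2.53 | 0.75 | 0.56 | 0.48 |
| 17 | WELL41 | positive | | 2.96 | 0.73 | 0.56 | 0.50 |
| 18 | WELL43 | positive | | 3.13 | 0.66 | 0.63 | 0.55 |
| 19 | WELL45 | positive | | 2.82 | 0.70 | 0.53 | 0.46 |
| 20 | WELL46 | positive | | 2.89 | 0.72 | 0.47 | 0.41 |

Two additional items among rows 9–20 are flagged r (reverse-scored).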

Fit Plot for 2PL WELL17

[Figure: fit plot for item 7 (WELL17): probability of a positive response (0.0 to 1.0) against theta (-3.0 to 3.0), comparing the 2PL IRF (IRF7) with the empirical proportions (EMP7)]

An Ideal Point Model: The Generalized Graded Unfolding Model (GGUM)

Roberts, Donoghue, & Laughlin (2000). Applied Psychological Measurement.

The model assumes that the probability of endorsement is higher the closer the item is to the person

GGUM software provides maximum likelihood estimates of item parameters

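GGUM

The probability of disagree is:

$$P(Z_i = 0 \mid \theta_j) = \frac{1 + \exp\{3\alpha_i(\theta_j - \delta_i)\}}{1 + \exp\{3\alpha_i(\theta_j - \delta_i)\} + \exp\{\alpha_i[(\theta_j - \delta_i) - \tau_{i1}]\} + \exp\{\alpha_i[2(\theta_j - \delta_i) - \tau_{i1}]\}}$$

and the probability of agree is

$$P(Z_i = 1 \mid \theta_j) = \frac{\exp\{\alpha_i[(\theta_j - \delta_i) - \tau_{i1}]\} + \exp\{\alpha_i[2(\theta_j - \delta_i) - \tau_{i1}]\}}{1 + \exp\{3\alpha_i(\theta_j - \delta_i)\} + \exp\{\alpha_i[(\theta_j - \delta_i) - \tau_{i1}]\} + \exp\{\alpha_i[2(\theta_j - \delta_i) - \tau_{i1}]\}}$$

where θ_j is person j's trait level, and α_i, δ_i, and τ_i1 are the discrimination, location, and threshold parameters of item i.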
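The dichotomous GGUM formulas translate directly into code. A sketch with hypothetical parameter values for a moderate item; `ggum_agree_prob` is an illustrative name, not the interface of the GGUM software.

```python
import math

def ggum_agree_prob(theta: float, alpha: float, delta: float, tau: float) -> float:
    """P(Z_i = 1 | theta_j) for a dichotomous GGUM item.

    alpha = discrimination (alpha_i), delta = location (delta_i), tau = threshold (tau_i1).
    """
    t = theta - delta
    agree = math.exp(alpha * (t - tau)) + math.exp(alpha * (2 * t - tau))
    disagree = 1.0 + math.exp(3 * alpha * t)
    return agree / (agree + disagree)

def ggum_disagree_prob(theta: float, alpha: float, delta: float, tau: float) -> float:
    """P(Z_i = 0 | theta_j); the two probabilities share a denominator and sum to 1."""
    return 1.0 - ggum_agree_prob(theta, alpha, delta, tau)

# Hypothetical moderate item (delta = 0): single-peaked, falling off in both directions.
for theta in (-3, -2, -1, 0, 1, 2, 3):
    print(theta, round(ggum_agree_prob(theta, alpha=1.0, delta=0.0, tau=-1.0), 3))
```

At θ = δ the agree probability reduces to 1 / (1 + exp(ατ)), so a negative τ puts the peak above .5, and the curve is symmetric about δ. For very large α·|θ - δ| the exponentials can overflow; a log-sum-exp formulation would be more robust.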

GGUM Estimated IRF for Moderate Item

[Figure: GGUM ORF for Option 2, the IRF for the Agree response to TAPAS Well-being item “My life has had about an equal share of ups and downs.”; probability of a positive response (0.0 to 1.0) against well-being (-3.0 to 3.0)]

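TAPAS Well-being Scale

2PL Results: frequency table of adjusted (N = 3000) chi-square/df ratios

| | <1 | 1<2 | 2<3 | 3<4 | 4<5 | 5<7 | >7 | Mean | SD |
|---|---|---|---|---|---|---|---|---|---|
| Singlets | 20 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Doublets | 17 | 1 | 0 | 0 | 1 | 2 | 3 | 2.955 | 6.439 |
| Triplets | 5 | 0 | 1 | 0 | 0 | 1 | 5 | 5.408 | 6.512 |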

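GGUM Results: frequency table of adjusted (N = 3000) chi-square/df ratios

| | <1 | 1<2 | 2<3 | 3<4 | 4<5 | 5<7 | >7 | Mean | SD |
|---|---|---|---|---|---|---|---|---|---|
| Singlets | 20 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Doublets | 22 | 0 | 0 | 0 | 0 | 0 | 2 | 0.997 | 3.256 |
| Triplets | 9 | 0 | 0 | 1 | 1 | 1 | 0 | 1.081 | 2.001 |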

Summary of Findings

2PLM and 3PLM fit scales developed by traditional methods OK, but:

Chi-square doublets and triplets can be large, especially when moderate items are included

Discrimination parameter estimates are uniformly small for moderate items (and item-total correlations are near zero)

GGUM fits all items, including moderate items

Adjusted chi-square/df ratios are small for doubles and triples

GGUM discrimination parameter estimates are large for the moderate items!

So, for Well-Being

Fitting a dominance item response theory model (the 2-parameter logistic) produced an adjusted chi-square/df ratio of 2.955 for pairs

The ideal point model yielded an adjusted chi-square/df ratio of 0.997 for pairs

Conclusion

Ideal point model seems more appropriate for temperament assessment

BUT there’s a “fly in the ointment” for I/O

Correct specification of the response process does not guarantee more accurate assessment, because…

Traditional items are easily FAKED

Examples of “Traditional” Items that are Easily Faked

In each case, the positively keyed response is obvious.

I get along well with others. (A+)

I try to be the best at everything I do. (C+)

I insult people. (A-)

My peers call me “absent minded.” (C-)

Because these items consist of individual statements, they are commonly referred to as “single stimulus” items.

Army Assessment of Individual Motivation (AIM)

Uses tetrads:

• I get along well with others. (A+)

• I set very high standards for myself. (C+)

• I worry a lot. (ES-)

• I like to sit on the couch and eat potato chips. (Physical condition-)

Respondent picks the statement that is Most Like Me and the statement that is Least Like Me

Army AIM has shown less score inflation

What psychometric model would describe this type of data?

So…

US Army researchers Len White and Mark Young (and others) found some fake resistance and criterion-related validity for the tetrad format

But modeling four-dimensional items was too hard for me!

How about two-dimensional items?

Multidimensional Pairwise Preference (MDPP) Format

Create items by pairing stimuli that are similar in desirability, but representing different dimensions

“Which is more like you?”

• I get along well with others. (A+)

• I always get my work done on time. (C+)

This led to my work on personality assessment over the past 10 years

And the result is:

Tailored Adaptive Personality Assessment System (TAPAS)

TAPAS is designed to overcome existing limitations of personality assessment for selection by incorporating recent advancements in:

Temperament/personality assessment

Item response theory (IRT)

Computerized adaptive testing (CAT)

Our goal is for TAPAS to be innovative in both how we assess (IRT, CAT) and what we assess (facets of personality)

TAPAS Vision

Fully customizable assessment to fit an array of users’ needs

Users can select:

any dimension from a comprehensive superset;

a scale length to suit their needs;

a response format (depending on faking concerns);

adaptive or static administration

Resulting scores can be used to predict multiple criteria or as a source of feedback

TAPAS Facet Dimensions

Based on factor analysis of each of the Big Five dimensions

E.g., Roberts, B., Chernyshenko, O.S., Stark, S., & Goldberg, L. (2005). The structure of conscientiousness. Personnel Psychology

Analyzed 7 major personality inventories

Currently 21 facets + additional “physical condition” facet for military jobs

TAPAS Facet Dimensions

Conscientiousness

Six-facet hierarchical structure:

Industriousness: task- and goal-directed

Order: planful and organized

Self-control: delays gratification

Traditionalism: follows norms and rules

Social Responsibility: dependable and reliable

Virtue: ethical, honest, and moral

Factor Analysis Results

For each facet, we have an empirical mapping of existing scales to our facets

This provides a basis for classifying existing scales

The validity of each facet can be investigated via meta-analysis

Factor loadings (Conscientiousness):

| Scale Name | Industriousness | Order | Self-control | Responsibility | Traditionalism | Virtue |
|---|---|---|---|---|---|---|
| neo competence | .88 | -.28 | .14 | -.10 | -.03 | -.09 |
| neo achievement striving | .76 | .02 | .10 | -.04 | -.07 | -.18 |
| ab5c organization | .75 | .11 | -.01 | -.02 | .12 | -.17 |
| ab5c purposefulness | .67 | .18 | -.12 | -.11 | -.28 | .24 |
| neo self-discipline | .65 | .22 | .10 | -.11 | .16 | .16 |
| ab5c efficiency | .63 | .36 | .09 | -.03 | .14 | .21 |
| ab5c rationality | .50 | .16 | .05 | -.02 | -.02 | -.01 |
| neo dutifulness | .49 | -.05 | .11 | -.19 | .26 | .09 |

TAPAS Military Meta-Analysis

42 studies or technical reports, 1988-2006

A small number of police and firefighter studies were also included

22 TAPAS facets

8 criteria (e.g., task proficiency, contextual performance, leadership, attrition, fitness)

1494 empirical correlations

TAPAS Military Meta-Analysis

Industriousness Results

| Criterion | N | kd | kc | Observed Validity | Corrected Validity |
|---|---|---|---|---|---|
| Job/Task Performance | 38964 | 14 | 36 | .05 | .06 |
| Contextual Performance | 19423 | 9 | 18 | .21 | .26 |
| Counterproductivity | 17673 | 8 | 17 | -.14 | -.18 |
| Attrition | 17912 | 5 | 8 | -.09 | -.10 |
| Leadership | 9429 | 12 | 20 | .15 | .18 |
| Training Performance | 6156 | 8 | 27 | .14 | .17 |
| Adaptability | 1291 | 3 | 4 | .17 | .21 |
| Physical Fitness | 18044 | 5 | 17 | .18 | .23 |

Validity tables can be used to guide the choice of facets!

TAPAS Civilian Meta-Analysis

Studies or technical reports in the period 1988-2006

Same 8 criterion categories and 22 TAPAS facets

4755 validity coefficients (so, in total, we have over 6,000 validities in our database)

“How” TAPAS Measures

Our research on the item response process for personality stimuli (Stark et al., 2006; Chernyshenko et al., 2007) suggests that:

Response endorsement is driven by the similarity between the person and the behavior described by the stimulus (aka, an ideal point process)

Implications:

Different models (not the 3PL or SGR) should be used for item administration and scoring: e.g., GGUM

Multiple stimuli per item are possible (i.e., pairs)

“How” TAPAS Measures

Four response formats will be available:

Single statement dichotomous (Agree/Disagree)

Single statement polytomous (SA, A, D, SD)

Unidimensional pairwise preference (i.e., two-alternative forced choice)

Multidimensional pairwise preference (Stark, 2002)

• Used when faking is likely

Single Statement Scales

Generalized Graded Unfolding Model (GGUM; Roberts et al., 1998)

Reverse scoring is not needed

Basic idea: a person endorses an item if it accurately describes him/her

Thus, the probability of endorsement is higher the closer the item is to the person
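To illustrate why reverse scoring is unnecessary under the GGUM, this sketch scores raw 0/1 responses by maximizing the likelihood over a theta grid. The three items (a negative, a neutral, and a positive statement) and their parameters are hypothetical, and brute-force grid search stands in for whatever estimator TAPAS actually uses.

```python
import math

def ggum_agree_prob(theta: float, alpha: float, delta: float, tau: float) -> float:
    """Dichotomous GGUM: P(agree | theta) for item parameters (alpha, delta, tau)."""
    t = theta - delta
    agree = math.exp(alpha * (t - tau)) + math.exp(alpha * (2 * t - tau))
    disagree = 1.0 + math.exp(3 * alpha * t)
    return agree / (agree + disagree)

def log_likelihood(theta: float, responses: list[int],
                   items: list[tuple[float, float, float]]) -> float:
    """Log-likelihood of raw 0/1 responses; no item is reverse-scored."""
    ll = 0.0
    for u, (alpha, delta, tau) in zip(responses, items):
        p = ggum_agree_prob(theta, alpha, delta, tau)
        ll += math.log(p) if u == 1 else math.log(1.0 - p)
    return ll

def ml_theta(responses, items, lo=-3.0, hi=3.0, step=0.01) -> float:
    """Maximum likelihood theta by grid search over [lo, hi]."""
    grid = [lo + step * i for i in range(int(round((hi - lo) / step)) + 1)]
    return max(grid, key=lambda th: log_likelihood(th, responses, items))

# Hypothetical (alpha, delta, tau) for a negative, a neutral, and a positive statement.
ITEMS = [(1.5, -2.0, -1.0), (1.5, 0.0, -1.0), (1.5, 2.0, -1.0)]

for pattern in ([1, 0, 0], [0, 1, 0], [0, 0, 1]):
    print(pattern, round(ml_theta(pattern, ITEMS), 2))
```

Agreeing only with the neutral statement yields θ near 0, agreeing only with the positive statement yields a high θ, and agreeing only with the negative statement yields a low θ, with no item ever reversed: the item locations carry the direction.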

GGUM IRFs for two Personality Statements

[Figure: GGUM IRFs, P(theta) (0.0 to 1.0) against theta (-3.0 to 3.0), for "I enjoy chatting quietly with a friend at a café." (Sociability) and "I am about as organized as most people." (Order)]

Multidimensional Pairwise Preference (MDPP) Format

Create items by pairing stimuli that are similar in

desirability, but representing different dimensions

“Which is more like you?”

• I get along well with others. (A+)

• I set very high standards for myself. (C+)

MDPP Roots: Assessment of Individual Motivation (AIM)

AIM utilizes a forced-choice tetrad format to reduce social desirability effects

Greater resistance to faking than ABLE (a single statement personality inventory developed by Army researchers)

Low correlations (.00 to .25) with examinee race and gender and measures of cognitive ability

Predicts attrition and various job and training performance criteria in research and operational testing

MDPP Roots: Assessment of Individual Motivation (AIM)

But, due to quasi-ipsative scoring:

AIM items are difficult to create, and

Score accuracy cannot be checked against known scores, because no formal psychometric model for stimulus endorsement is available

CAT is not possible without a psychometric model

IRT Model for Scoring Multidimensional Pairwise Preference Items

(Stark, 2002; Stark, Chernyshenko, & Drasgow, 2005)

$$P_{(s>t)_i}(\theta_{d_s}, \theta_{d_t}) = \frac{P_{st}\{1,0\}}{P_{st}\{1,0\} + P_{st}\{0,1\}} = \frac{P_s\{1\}\,P_t\{0\}}{P_s\{1\}\,P_t\{0\} + P_s\{0\}\,P_t\{1\}}$$

where P_(s>t)i is the probability that statement s is preferred to statement t in pair i, 1 = Agree, 0 = Disagree, and θ_ds and θ_dt are the respondent's standings on the dimensions measured by s and t.

Respondent evaluates each stimulus (personality statement) separately and makes independent decisions about endorsement.

Stimuli may be on different dimensions.

Single stimulus response probabilities P{0} and P{1} are computed using a unidimensional ideal point model for “traditional” items (GGUM)

Refer to the new pairwise preference model as MDPP
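A sketch of the MDPP probability with the GGUM supplying the single-stimulus probabilities, as the slide describes. The stimulus parameters are hypothetical, and the function names are illustrative rather than taken from any TAPAS software.

```python
import math

def ggum_agree_prob(theta: float, alpha: float, delta: float, tau: float) -> float:
    """Dichotomous GGUM: probability of agreeing with a single statement."""
    t = theta - delta
    agree = math.exp(alpha * (t - tau)) + math.exp(alpha * (2 * t - tau))
    disagree = 1.0 + math.exp(3 * alpha * t)
    return agree / (agree + disagree)

def mdpp_prob(theta_s: float, theta_t: float,
              stim_s: tuple[float, float, float],
              stim_t: tuple[float, float, float]) -> float:
    """P(s preferred to t) = Ps{1}Pt{0} / (Ps{1}Pt{0} + Ps{0}Pt{1}).

    theta_s / theta_t: standings on the dimensions that s and t measure.
    stim_*: (alpha, delta, tau) GGUM parameters of each statement.
    """
    ps = ggum_agree_prob(theta_s, *stim_s)
    pt = ggum_agree_prob(theta_t, *stim_t)
    s_over_t = ps * (1.0 - pt)  # agree with s, disagree with t
    t_over_s = (1.0 - ps) * pt  # disagree with s, agree with t
    return s_over_t / (s_over_t + t_over_s)

# Hypothetical stimuli: a Sociability statement and an Order statement.
SOCIABILITY = (1.5, 1.0, -1.0)
ORDER = (1.2, 0.5, -0.8)
print(round(mdpp_prob(1.0, -2.0, SOCIABILITY, ORDER), 3))
```

Swapping the order of s and t gives the complementary probability, and two statements with identical parameters evaluated at the same trait levels give exactly .5.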

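MDPP IRF for Item Measuring Sociability and Order

[Figure: MDPP IRF for a pairwise preference item pairing a Sociability statement with an Order statement]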

MDPP Model Performance

Stark & Drasgow (2002)

.77 correlation between estimated and known scores in 2-D tests, 20 pairs, 10% unidimensional

Stark & Chernyshenko

.88 for 5-D tests, 50 items, 5% unidimensional

Not all possible pairings of dimensions were required for good parameter recovery

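CAT vs. Nonadaptive

Average Correlation Across Dimensions

| % Unidim. | Items per Construct | Nonadaptive 3-d | Nonadaptive 5-d | Nonadaptive 7-d | Nonadaptive 10-d | Adaptive 3-d | Adaptive 5-d | Adaptive 7-d | Adaptive 10-d |
|---|---|---|---|---|---|---|---|---|---|
| 5 | 5 | .73 | .72 | .76 | .76 | .87 | .85 | .86 | .87 |
| 5 | 10 | .85 | .87 | .87 | .86 | .93 | .93 | .93 | .93 |
| 5 | 20 | .93 | .93 | .93 | .94 | .96 | .96 | .96 | .96 |
| 10 | 5 | .73 | .74 | .75 | .75 | .87 | .87 | .85 | .88 |
| 10 | 10 | .85 | .85 | .86 | .87 | .92 | .93 | .93 | .93 |
| 10 | 20 | .93 | .93 | .94 | .94 | .96 | .96 | .96 | .96 |
| 20 | 5 | .74 | .74 | .74 | .75 | .87 | .84 | .86 | .87 |
| 20 | 10 | .85 | .85 | .87 | .86 | .92 | .90 | .93 | .93 |
| 20 | 20 | .92 | .93 | .93 | .94 | .96 | .96 | .96 | .96 |

* CAT yielded similar correlations with only half as many items.

* 10-d CAT correlations > .9 with 100 items (only 5% unidimensional!).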

Summary of MDPP Model Studies

MDPP items are attractive for applied use:

Faking is more difficult

Can create a huge pool with relatively few statements representing each dimension (20 stimuli = 190 items)

5% unidimensional pairings are sufficient for accurate score recovery

As with SS models, MDPP CAT can reduce test length by about 50% while maintaining accuracy, which is important if many dimensions are assessed.
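The "20 stimuli = 190 items" figure is the number of distinct pairs, C(20, 2). A short sketch that also splits those pairs into unidimensional and multidimensional ones for a hypothetical allocation of statements to dimensions:

```python
from math import comb

def n_pair_items(n_statements: int) -> int:
    """Number of distinct pairwise preference items obtainable from a set of statements."""
    return comb(n_statements, 2)

def pair_breakdown(statements_per_dimension: list[int]) -> tuple[int, int]:
    """Split all possible pairs into (unidimensional, multidimensional) counts."""
    total = n_pair_items(sum(statements_per_dimension))
    within = sum(comb(k, 2) for k in statements_per_dimension)
    return within, total - within

print(n_pair_items(20))         # 190
print(pair_breakdown([4] * 5))  # hypothetical 5 dimensions x 4 statements -> (30, 160)
```

Pool size grows roughly with the square of the number of statements, which is why relatively few statements per dimension can support a large item pool, while only a small share of unidimensional pairs is needed to anchor the scale.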

Current Empirical TAPAS Studies

Comparing MDPP format to single statement (SS) format

Testing what makes forced-choice items resistant to faking

# of dimensions?

Matching on social desirability?

Matching on statement locations?

Study 1: Benchmark Study

4-D MDPP measure (41 pairs) designed using “conventional wisdom”

Match stimuli on social desirability (average difference in SocD did not exceed 1.08 on a 5-point scale)

Match stimuli to have different locations on respective dimensions (average distance 4.3 units on a z-score metric)

4-D SS measure (40 items)

Both measures administered under faking and honest conditions (N = 510 and N = 574)

2-D SS measure (20 items) – all honest (n = 1084)

Very Strong Faking Instructions!

Unlike in the previous sections where the instructions asked you to be as honest and accurate as possible, we now ask that you PRETEND you are not yet in the Army, but very much want to be. Imagine a recruiter asks you to take this questionnaire to determine if you are GOOD ARMY MATERIAL. If you score well, you will be let into the Army. If you don’t score well, you will not.

For the remaining sections, you are to answer the test questions by describing yourself in a way that will make you look like “good Army material” so you are sure to pass the test and get into the Army. Remember you are not yet in the Army, but very much want to be. In other words, create the best possible impression of yourself and convince the Army that you will make a good Soldier.

Study 1: Benchmark Study

Comparability of formats under Honest Conditions

| | dom_MDPP | enr_MDPP | ord_MDPP | trad_MDPP | dom_GGUM | enr_GGUM | ord_GGUM | trad_GGUM | ord_GOLD | trad_GOLD |
|---|---|---|---|---|---|---|---|---|---|---|
| dom_MDPP | 1.00 | 0.27 | 0.12 | 0.11 | 0.59 | 0.21 | 0.20 | 0.05 | 0.21 | 0.06 |
| enr_MDPP | 0.27 | 1.00 | 0.09 | 0.12 | 0.22 | 0.49 | 0.15 | 0.10 | 0.13 | 0.10 |
| ord_MDPP | 0.12 | 0.09 | 1.00 | 0.33 | 0.02 | 0.06 | 0.49 | 0.21 | 0.50 | 0.24 |
| trad_MDPP | 0.11 | 0.12 | 0.33 | 1.00 | 0.08 | 0.13 | 0.34 | 0.54 | 0.35 | 0.50 |

Study 1: Benchmark Study

| Scale | Honest | Faking | Difference | Effect Size |
|---|---|---|---|---|
| dom_MDPP | 0.10 | 0.32 | 0.21 | 0.32 |
| enr_MDPP | 0.17 | 0.95 | 0.78 | 0.97 |
| ord_MDPP | -0.07 | 0.32 | 0.39 | 0.70 |
| trad_MDPP | 0.48 | 1.56 | 1.08 | 1.06 |
| dom_GGUM | 0.13 | 0.44 | 0.31 | 0.41 |
| enr_GGUM | 0.25 | 0.65 | 0.41 | 0.59 |
| ord_GGUM | -0.19 | 0.36 | 0.54 | 0.71 |
| trad_GGUM | 0.65 | 1.25 | 0.60 | 0.77 |
| TRAD_GOLD | 31.43 | 31.31 | -0.12 | -0.03 |
| ORD_GOLD | 29.96 | 29.69 | -0.26 | -0.05 |

MDPP scales created using conventional wisdom are as fakable as SS scales in strong faking conditions

In faking conditions, respondents chose items with a “more positive” location (i.e., > 20% endorsement shift across conditions)
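The two quantities used in this study can be sketched as follows. The slides do not say exactly how the effect sizes were standardized, so the pooled-SD Cohen's d below is an assumption, as is reading the "> 20% endorsement shift" as a change of more than 20 percentage points. The choice data are hypothetical.

```python
import math
from statistics import mean, stdev

def cohens_d(honest: list[float], faking: list[float]) -> float:
    """(faking mean - honest mean) / pooled SD; one common effect-size definition (assumed)."""
    n1, n2 = len(honest), len(faking)
    pooled_var = ((n1 - 1) * stdev(honest) ** 2 + (n2 - 1) * stdev(faking) ** 2) / (n1 + n2 - 2)
    return (mean(faking) - mean(honest)) / math.sqrt(pooled_var)

def endorsement_shift(honest_choices: list[str], faking_choices: list[str],
                      statement: str) -> float:
    """Percentage-point change in how often `statement` is picked from its pair."""
    pct_honest = 100 * sum(c == statement for c in honest_choices) / len(honest_choices)
    pct_faking = 100 * sum(c == statement for c in faking_choices) / len(faking_choices)
    return pct_faking - pct_honest

SHIFT_THRESHOLD = 20.0  # the "> 20%" criterion, read as percentage points (assumption)

# Hypothetical choices on one MDPP item whose statements are "s" and "t".
honest = ["s", "t", "t", "t", "t"]
faking = ["s", "s", "s", "t", "t"]
shift = endorsement_shift(honest, faking, "s")
print(shift, shift > SHIFT_THRESHOLD)  # 40.0 True
```

Flagging pairs whose shift exceeds the threshold identifies which statement in each pair fakers perceive as the "better" answer, which is the information used to decide how pairs should be matched.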

Study 2: Location Matching

11-D MDPP static measure with 117 items

Match stimuli on similarity in locations (average distance 2.09 z-score units)

11-D SS measure (7 items each)

Both measures administered under faking and honest conditions (N = 286 and N = 358)

Again, very strong faking instructions

Study 2: Location Matching

Unlike the benchmark study, only 20 out of 117 items showed inflated percent endorsement shifts

Note that we matched only on locations, not Soc.D

Scored a 97-pair 11-D MDPP measure

Similar correlations across formats as in the benchmark study

But, less score inflation

Study 2: Location Matching

MDPP Scores

| Scale | Honest (N = 358) | Faking (N = 276) | Difference | Effect Size |
|---|---|---|---|---|
| ORD_MDPP97 | -0.08 | 0.10 | 0.18 | 0.38 |
| SOC_MDPP97 | 0.13 | 0.06 | -0.07 | -0.12 |
| TRAD_MDPP97 | -0.24 | -0.01 | 0.23 | 0.30 |
| ENR_MDPP97 | -0.77 | -0.57 | 0.20 | 0.28 |
| DOM_MDPP97 | -0.29 | -0.33 | -0.04 | -0.06 |
| IND_MDPP97 | -0.72 | -0.43 | 0.29 | 0.49 |
| INTE_MDPP97 | -0.17 | -0.01 | 0.15 | 0.26 |
| TRUST_MDPP97 | -0.24 | -0.18 | 0.07 | 0.07 |
| CURI_MDPP97 | 0.01 | 0.13 | 0.12 | 0.20 |
| WELL_MDPP97 | -0.38 | -0.26 | 0.12 | 0.20 |
| PHYC_MDPP97 | -0.54 | -0.28 | 0.25 | 0.42 |

Compare to: SS scales in the benchmark study had .41 SD inflation for DOM, and .79 SD inflation for TRAD

Conclusions

MDPP model (Stark, 2002) can be used effectively to score real MDPP response patterns

MDPP scores agree with SS scores under honest conditions

Fake resistance of forced-choice format should not be taken for granted

E.g., must match on item locations, not just Soc.D

Our MDPP CAT algorithm has constraints on location difference and Soc.D difference

Adaptive testing format may further decrease fakability (e.g., NCAPS results with UPP scales)

But, there is lots of R&D work to be done…
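A sketch of the kind of pairing constraint described in these conclusions: two statements form an eligible multidimensional pair only if their locations and social desirability ratings are close. The `Statement` fields, the thresholds, and the pool are all hypothetical; the actual TAPAS CAT constraints and their values are not given in the slides.

```python
from dataclasses import dataclass
from itertools import combinations

@dataclass(frozen=True)
class Statement:
    sid: str
    dimension: str
    location: float             # location on the statement's own dimension (e.g., GGUM delta)
    social_desirability: float  # mean desirability rating

# Hypothetical thresholds for illustration only.
MAX_LOCATION_DIFF = 0.5
MAX_SOCD_DIFF = 0.5

def eligible(s: Statement, t: Statement, allow_unidimensional: bool = False) -> bool:
    """Can s and t form one pairwise preference item under both matching constraints?"""
    if s.dimension == t.dimension and not allow_unidimensional:
        return False
    return (abs(s.location - t.location) <= MAX_LOCATION_DIFF
            and abs(s.social_desirability - t.social_desirability) <= MAX_SOCD_DIFF)

def candidate_pairs(pool: list[Statement]) -> list[tuple[str, str]]:
    """All statement pairs in the pool that satisfy the constraints."""
    return [(s.sid, t.sid) for s, t in combinations(pool, 2) if eligible(s, t)]

POOL = [
    Statement("A", "dominance", 1.0, 3.5),
    Statement("B", "order", 1.2, 3.7),
    Statement("C", "order", -1.0, 3.6),
    Statement("D", "dominance", 1.1, 2.0),
]
print(candidate_pairs(POOL))  # [('A', 'B')]
```

Only A and B survive: C is too far from both dominance statements in location, and D matches B's location but not its desirability. Tightening either threshold shrinks the usable pool, which is the trade-off behind the constraints on item selection.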

Current Work

TAPAS is being implemented by the US Army for enlistment screening

June 8 for applicants without high school diplomas

Will it predict their attrition and counterproductive behaviors?

Current Work

We have about 50 statements for each of the 13 dimensions that are being used by the US Army

Are some statements overused? We don’t have an exposure control algorithm

In principle, each of the approximately 650 statements could be paired with any of the other 649…but there are lots of constraints on item selection…
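Even without exposure control, overuse can at least be monitored. This sketch computes each statement's exposure rate (the share of examinees who saw it at least once) from a hypothetical administration log, and checks the number of distinct pairs available from about 650 statements. The statement IDs are invented for illustration, and this measures exposure rather than controlling it.

```python
from collections import Counter
from math import comb

def exposure_rates(tests: list[list[tuple[str, str]]]) -> dict[str, float]:
    """Share of examinees who saw each statement at least once.

    `tests` holds one list of administered (statement, statement) pairs per examinee.
    """
    counts = Counter()
    for test in tests:
        seen = {sid for pair in test for sid in pair}  # count each statement once per examinee
        counts.update(seen)
    return {sid: c / len(tests) for sid, c in counts.items()}

# About 50 statements x 13 dimensions = 650; unconstrained, each could pair with the other 649.
print(comb(650, 2))  # 210925 distinct pairs

# Hypothetical administration log for three examinees.
log = [
    [("IND01", "ORD07"), ("DOM03", "WELL12")],
    [("IND01", "DOM03")],
    [("IND01", "TRUST05")],
]
rates = exposure_rates(log)
print(sorted(rates.items(), key=lambda kv: -kv[1])[:3])
```

A statement like IND01 that every examinee sees would be a candidate for exposure control, since a heavily exposed statement is easier to coach and adds little new information across test takers.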

In Sum,

TAPAS is designed to bring the latest in:

Psychometric theory

Computer technology

Personality theory

Our goal is to produce an easily customizable assessment tool to meet the needs of diverse users and researchers