Stanford_TMT - Methods in Information Science and Data


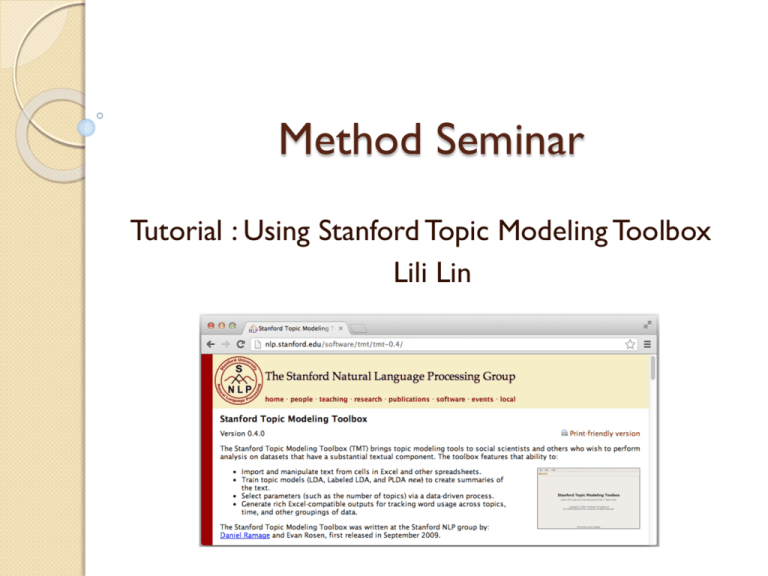

Method Seminar Tutorial: Using Stanford Topic Modeling Toolbox
Lili Lin

Contents
Introduction
Getting Started
◦ Prerequisites
◦ Installation
Toolbox Running
◦ Latent Dirichlet Allocation Model (LDA Model)
◦ Labeled LDA Model

Introduction
http://nlp.stanford.edu/software/tmt/tmt0.4/
The Stanford Topic Modeling Toolbox (TMT) was written at the Stanford NLP group by Daniel Ramage and Evan Rosen, and was first released in September 2009.
It supports training and inference for topic models (e.g. LDA, Labeled LDA) to create summaries of text.

Introduction – LDA Model
LDA is an unsupervised topic model.
The user needs to define some important parameters, such as the number of topics.
Choosing the number of topics is hard.
Even with the top terms for each topic, it can still be difficult to interpret the content of the extracted topics.

Introduction – Labeled LDA Model
Labeled LDA is a supervised topic model for credit attribution in multi-labeled corpora.
If one of the columns in your input text file contains labels or tags that apply to the document, you can use Labeled LDA to discover which parts of each document go with each label, and to learn accurate models of the words best associated with each label globally.

Prerequisites
A text editor (e.g. TextWrangler) for creating TMT processing scripts. TMT scripts are written in Scala, but no knowledge of Scala is required to get started.
An installation of Java SE 6 or later: http://java.com/en/download/index.jsp. Windows, Mac, and Linux are supported.
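The following toy sketch makes "credit attribution" concrete. It is plain Scala, not part of TMT or its API, and it shows the core constraint of Labeled LDA: each label is its own topic, and a word can only be attributed to labels that its document actually carries. The label names and probabilities are hypothetical. The sketch also assumes equal document-level weight for each of a document's labels; a real model learns these weights.

```scala
// Toy illustration of the Labeled LDA constraint; this is NOT TMT code.
// Each label is one topic, and a word in a document may only be attributed
// to labels that the document actually carries.

// Hypothetical per-label word probabilities, phi(label)(word). In a trained
// model these would come from the learned topic-term distributions.
val phi: Map[String, Map[String, Double]] = Map(
  "diabetes" -> Map("glucose" -> 0.30, "insulin" -> 0.20, "tumor" -> 0.01),
  "cancer"   -> Map("glucose" -> 0.02, "insulin" -> 0.01, "tumor" -> 0.40),
  "obesity"  -> Map("glucose" -> 0.05, "insulin" -> 0.05, "tumor" -> 0.02)
)

// p(label | word, document), restricted to the document's own labels.
// ASSUMPTION: every label of the document gets equal document-level weight.
def labelPosterior(word: String, docLabels: Set[String]): Map[String, Double] = {
  val scores = docLabels.toSeq.map { label =>
    label -> phi.getOrElse(label, Map.empty[String, Double]).getOrElse(word, 0.0)
  }
  val total = scores.map(_._2).sum
  if (total == 0.0) {
    Map.empty[String, Double]
  } else {
    scores.map { case (label, s) => label -> s / total }.toMap
  }
}

// Attribute each word to its most probable permitted label
// (None when no permitted label gives the word any probability).
def attribute(words: Seq[String], docLabels: Set[String]): Seq[(String, Option[String])] =
  words.map { word =>
    val posterior = labelPosterior(word, docLabels)
    word -> (if (posterior.isEmpty) None else Some(posterior.maxBy(_._2)._1))
  }

// A hypothetical document labeled only "diabetes" and "obesity".
val doc = Seq("glucose", "tumor", "metformin")
println(attribute(doc, Set("diabetes", "obesity")))
```

With the labels diabetes and obesity, the sketch assigns glucose to diabetes and tumor to obesity. Cancer is not permitted for this document, even though it gives tumor the highest probability overall. Metformin has no probability under any permitted label, so it stays unattributed.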
Installation
Download the TMT executable (tmt-0.4.0.jar) from http://nlp.stanford.edu/software/tmt/tmt0.4/
Double-click the jar file to open the toolbox, or run it from the command line: java -jar tmt-0.4.0.jar
You should see a simple GUI.

Simple Testing
Example data and scripts for simple testing:
◦ Download the example data file: pubmed-oa-subset.csv
◦ Download the first testing script: example-0-test.scala
Note: the data file and the script should be put in the same folder.

Simple Testing – GUI
Load the script: File → Open script
Edit the script: val pubmed = CSVFile("pubmed-oa-subset.csv")
Run the script: click the "Run" button.

Simple Testing – Command Line

LDA Model – Data Preparation
173,777 astronomy papers covering the period from 1992 to 2012 were collected from the Web of Science (WOS).
In the file astro_wos_lda.csv, every record includes the paper ID (the first column), title (the second column) and publication year (the third column).

LDA Training – Script Loading
File → Open script, navigate to example-2-lda-learn.scala, then click Open.

LDA Training – Data Loading
Edit the script: val source = CSVFile("astro_wos_lda.csv") and Column(2) ~>
Note: if your text spans two or more columns, such as the third and fourth columns, you can replace Column(2) ~> with Columns(3,4) ~> Join(" ") ~>

LDA Training – Parameter Selection
Edit the script: val params = LDAModelParams(numTopics = 30, dataset = dataset, topicSmoothing = 0.01, termSmoothing = 0.01)

LDA Training – Model Training
Run: training fails with an out-of-memory error because the dataset is large.
Increase the memory size, then run again.

LDA Training – Output Generation
Output folder: lda-b2aa1797-30-751edefe
◦ description.txt: a description of the model saved in this folder
◦ document-topic-distributions.csv: a CSV file containing the per-document topic distribution
for each document in the training dataset
◦ 00000-01000: snapshots of the model during training

LDA Training – Output Generation
Files in each snapshot folder:
◦ /params.txt: model parameters used during training
◦ /tokenizer.txt: tokenizer used to tokenize text for use with this model
◦ /summary.txt: human-readable summary of the topic model, with the top 20 terms per topic and how many word instances of each have occurred
◦ /log-probability-estimate.txt: estimate of the log probability of the dataset at this iteration
◦ /term-index.txt: mapping from terms in the corpus to ID numbers
◦ /description.txt: a description of the model saved in this iteration
◦ /topic-term-distributions.csv.gz: for each topic, the probability of each term in that topic

LDA Training – Command Line
java -Xmx4G -jar tmt-0.4.0.jar example-2-lda-learn.scala
(-Xmx4G lets the Java virtual machine use up to 4 GB of heap memory, which avoids the out-of-memory error.)

LDA Inference – Script Loading
File → Open script, navigate to example-3-lda-infer.scala, then click Open.

LDA Inference – Trained Model Loading
Edit the script: val modelPath = file("lda-b2aa1797-30-751edefe")

LDA Inference – Data Loading
Edit the script: val source = CSVFile("astro_wos_lda.csv") and Column(2) ~>
Note: here we simply reuse the training dataset as the inference data; in practice, inference should be run on a new dataset.

LDA Inference – Model Inference
Increase the memory size, then run.

LDA Inference – Output Generation
Navigate to the folder lda-b2aa1797-30-751edefe:
◦ astro_wos_lda-document-topic-distributions.csv: a CSV file containing the per-document topic distribution for each document in the inference dataset
◦ astro_wos_lda-top-terms.csv: a CSV file containing the top terms in the inference dataset for each topic
◦ astro_wos_lda-usage.csv

LDA Inference – Command Line
java -Xmx4G -jar tmt-0.4.0.jar example-3-lda-infer.scala

LLDA Model – Data Preparation
4,770 metformin papers covering the period from 1997 to 2011 were collected from PubMed.
Training data: metformin_train_data_llda.csv (2,798 papers); every record includes the paper ID (the first column), bio-term list (the second column), title (the third column)
and abstract (the fourth column). Every record has at least three bio-terms.
Inference data: metformin_infer_data_llda.csv (4,770 papers); every record includes the paper ID (the first column), title (the second column) and abstract (the third column).

LLDA Training – Script Loading
File → Open script, navigate to example-6-llda-learn.scala, then click Open.

LLDA Training – Data Loading
Edit the script: val source = CSVFile("metformin_train_data_llda.csv")
Text (title and abstract): Columns(3,4) ~> Join(" ") ~>
Labels (bio-term list): Column(2) ~>

LLDA Training – Model Training
Click Run.

LLDA Training – Output Generation
Output folder: llda-cvb0-bd54e9b6-176-1213c7f4-222a08a4
◦ description.txt: a description of the model saved in this folder
◦ document-topic-distributions.csv: a CSV file containing the per-document topic distribution for each document in the training dataset
◦ 00000-01000: snapshots of the model during training

LLDA Training – Output Generation
Files in each snapshot folder:
◦ /params.txt: model parameters used during training
◦ /tokenizer.txt: tokenizer used to tokenize text for use with this model
◦ /summary.txt: human-readable summary of the topic model, with the top 20 terms per topic and how many word instances of each have occurred
◦ /term-index.txt: mapping from terms in the corpus to ID numbers
◦ /description.txt: a description of the model saved in this iteration
◦ /label-index.txt: the topics (one per label) extracted after LLDA training
◦ /topic-term-distributions.csv.gz: for each topic, the probability of each term in that topic

LLDA Training – Command Line
java -Xmx4G -jar tmt-0.4.0.jar example-6-llda-learn.scala

LLDA Inference – Jar Script
The TMT toolbox does not provide a script for LLDA inference.
A Java program, packaged as llda-infer.jar, was written to perform LLDA inference.

LLDA Inference – Command Line
java -jar llda-infer.jar metformin_infer_data_llda.csv llda-cvb0-bd54e9b6-176-1213c7f4-222a08a4 metformin_infer_result.csv

LLDA Inference – Output Generation
A file named metformin_infer_result.csv is generated by LLDA inference.

Thanks! Any questions?
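The column selections in the data-loading steps can be hard to picture. This plain-Scala sketch mimics what the LLDA data loading pulls from one record of the training file: the text is the title and abstract (columns 3 and 4) joined with a space, and the labels come from column 2. It is not TMT's API, and its simplified CSV splitting only stands in for CSVFile. It assumes the bio-term list is whitespace-separated, since the slides do not state the delimiter. The record and the ID "PMID1" are hypothetical.

```scala
// Sketch (NOT TMT code) of what the LLDA column selections produce:
// text = Columns(3,4) ~> Join(" "), labels = Column(2).

// Split one CSV line into fields. Double-quoted fields may contain commas and
// escaped quotes (""); quoted fields that span several lines are NOT handled.
def parseCsvLine(line: String): Vector[String] = {
  val fields = Vector.newBuilder[String]
  val current = new StringBuilder
  var inQuotes = false
  var i = 0
  while (i < line.length) {
    val c = line.charAt(i)
    if (inQuotes) {
      if (c == '"' && i + 1 < line.length && line.charAt(i + 1) == '"') {
        current += '"' // "" inside quotes is an escaped quote
        i += 1
      } else if (c == '"') {
        inQuotes = false // closing quote
      } else {
        current += c
      }
    } else if (c == '"') {
      inQuotes = true // opening quote
    } else if (c == ',') {
      fields += current.toString // field separator
      current.clear()
    } else {
      current += c
    }
    i += 1
  }
  fields += current.toString
  fields.result()
}

// 1-based column access, matching the numbering used by Column(n) above.
def column(fields: Vector[String], n: Int): String = fields(n - 1)

// Document text: columns 3 and 4 (title and abstract) joined with a space.
def textOf(fields: Vector[String]): String =
  Seq(3, 4).map(n => column(fields, n)).mkString(" ")

// Labels: column 2 (bio-term list), ASSUMED to be whitespace-separated.
def labelsOf(fields: Vector[String]): Vector[String] =
  column(fields, 2).trim.split("\\s+").toVector.filter(_.nonEmpty)

// Hypothetical record in the training-file layout: ID, bio-terms, title, abstract.
val record = parseCsvLine("PMID1,\"metformin insulin glucose\",\"A title, with a comma\",\"An abstract.\"")
println(textOf(record))
println(labelsOf(record))
// The training file is described as having at least 3 bio-terms per record.
println(labelsOf(record).size >= 3)
```

To apply this to a real file, one could read its lines with scala.io.Source.fromFile and map parseCsvLine over them. That only works if no quoted title or abstract spans several lines.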