SlidesAdvancedWorkshopHILT2015


ADVANCED TOPICS IN TEXT ANALYSIS WITH THE HATHITRUST RESEARCH CENTER

Humanities Intensive Learning and Teaching (HILT)

Indianapolis

July 29, 2015

HILT 2015

Sayan Bhattacharyya

Eleanor Dickson

About us

Sayan Bhattacharyya

CLIR Postdoctoral Fellow

Graduate School of Library and Information Science

University of Illinois, Urbana-Champaign

sayan@illinois.edu

Eleanor Dickson

HTRC Digital Humanities Specialist

Scholarly Commons, University Library

University of Illinois, Urbana-Champaign

dicksone@illinois.edu

The HTRC team

• The HTRC team at the University of Illinois, Urbana-Champaign, led by J. Stephen Downie (HTRC co-director)

• Members:

Loretta Auvil

Sayan Bhattacharyya

Boris Capitanu

Kahyun Choi

Tim Cole

Eleanor Dickson

Megan Senseney

Colleen Fallaw

Harriett Green

Yun Hao

Peter Organisciak

Janina Sarol

Craig Willis

• The HTRC team at Indiana University, Bloomington, led by Beth Plale (HTRC co-director)

Resources for “advanced” HTRC functionalities

• For materials from HTRC-related papers, presentations, and workshops, see the HTRC Publications and Presentations page

Resources for “advanced” HTRC functionalities (contd.)

• For tutorials, “how-to” guides, and examples, see the HathiTrust Research Center Knowledge Base Pages

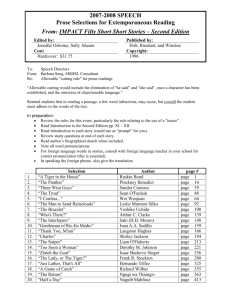

Today’s workshop’s agenda

– Discussion of HTRC’s “Advanced Functionalities”:

• HTRC Data Capsule

• HTRC Extracted Features

– What is happening “under the hood”…

• …in HTRC Portal’s topic modeling algorithm?

• …in HTRC Portal’s Dunning log-likelihood algorithm?

– Situating HTRC tools within the “bigger picture” of text analysis

• Terminology — so that you can converse beyond HTRC-world

• Limits and caveats you should keep in mind

• When to go beyond HTRC’s own tools?

– Useful, free tools HTRC users have built/contributed

HTRC Data Capsule (under the hood)

• The HTRC Data Capsule works by giving a researcher their own virtual machine (VM).

• A researcher can configure the VM just as she would configure her own desktop computer with her own tools.

• Custom tools, e.g. custom algorithms, can be downloaded into the VM.

• When a VM is loaded with text data, the VM switches into “secure mode”:

• network and other data channels are restricted…

• …to protect access to the data.

• The researcher’s algorithm runs against the text data.

• Results are emailed to the user.


Photo from Mindat.org

Extracted Features (EF) Data: Recap

• Available at: https://sharc.hathitrust.org/features

• Texts from the HathiTrust Digital Library (HTDL) corpus that are not in the public domain are not available for download

– This limits the usefulness of the corpus for research.

• However...

– considerable text analysis can be done non-consumptively, using just the extracted features, even when the actual textual content is not downloadable

• Extracted page-level features are adequate for certain kinds of algorithmic analysis:

– Contents of pages are supplied only as bags-of-words

• analysis using relational information in pages (such as sequences of words) is not possible with EF data
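A tiny sketch of why bag-of-words data cannot support analysis that depends on word order: two sentences with opposite meanings reduce to the same bag. The whitespace tokenizer here is deliberately naive and is an illustration only, not how HTRC tokenizes text.

```python
from collections import Counter

def bag_of_words(text):
    """Lowercase, split on whitespace and count tokens (deliberately naive tokenizer)."""
    return Counter(text.lower().split())

a = bag_of_words("The dog bit the man")
b = bag_of_words("The man bit the dog")

# Identical bags, even though the word order (and the meaning) differs.
print(a == b)      # True
print(a["the"])    # 2
```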

Partial view of an EF data file json (“basic”)

"features":{

…

…

"header":{

"tokenCount":7,

"lineCount":3,

…

"tokenPosCount":{

"I.":{"NN":1},

"THE":{"DT":1},

"INTRODUCTION":{"NN":1},

….

"AND":{"CC":1}}},

"body":{

"tokenCount":205,

"lineCount":35,

"emptyLineCount":9,

"sentenceCount":6,

"tokenPosCount":{

"striking":{"JJ":1},

"London":{"NNP":1},

"four":{"CD":1},

".":{".":7},

"dramatic":{"JJ":2},

"stands":{"VBZ":1},

...

"growth":{"NN":1}

}

},

"footer":{

Extracted Features (EF) data has utility even for out-of-copyright material!

• Page-level extracted features (EF) provide “a sense of the content” of each workset at the level of individual works.

– For a workset consisting only of out-of-copyright material, such a “sense of the content” can be obtained by:

• downloading the contents of the workset and manually browsing (“flipping through”) them; OR

• running provided, off-the-shelf algorithms that perform content exploration

– Disadvantages of off-the-shelf algorithms:

» a blunt instrument, incapable of answering focused questions

» limited to merely the provided algorithms

– The EF dataset allows the flexibility of developing/running one’s own queries and algorithms against the text data, without depending on provided algorithms
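Once a volume’s EF file is on your own machine, running your own queries can start with loading the JSON and summing counts. The sketch below assumes the layout of the EF “basic” files as in the partial view shown earlier: a list of pages under features → pages (the `pages` key is elided in that view, so treat it as an assumption and check it against your own files), each page having header, body and footer sections whose tokenPosCount maps a token to counts per part-of-speech tag. The function names are hypothetical.

```python
import json
from collections import Counter

SECTIONS = ("header", "body", "footer")

def load_volume(path):
    """Read one (uncompressed) EF JSON file into a dict."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)

def volume_counts(ef, sections=SECTIONS, lowercase=True):
    """Sum token counts over all pages, chosen sections and POS tags of one volume.

    Assumed layout: ef["features"]["pages"] is a list of page dicts, each with
    header/body/footer dicts holding "tokenPosCount": {token: {POS tag: count}}.
    """
    counts = Counter()
    for page in ef["features"]["pages"]:
        for name in sections:
            section = page.get(name) or {}
            for token, by_pos in section.get("tokenPosCount", {}).items():
                key = token.lower() if lowercase else token
                counts[key] += sum(by_pos.values())
    return counts

# Usage (file name from the slides; adjust the path to where you saved it):
# counts = volume_counts(load_volume("hvd.32044025670571.basic.json"))
# print(counts.most_common(20))
```

Restricting `sections` to `("body",)` is one way to keep running headers and page numbers out of the counts.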

Partial view of EF data file json (“advanced”)

A use case for “Basic” EF data

• Recall from Workshop 1:

– Dickens’s novels Little Dorrit and Bleak House are somewhat similar…

• …in that they both speak about the injustices of the 19th-century British judicial system.

– However, Little Dorrit and Bleak House are also different.

• Little Dorrit:

– prisons and incarceration play an important role

• Bleak House:

– the emphasis is on civil law rather than prisons.

• We can corroborate this using the EF data:

• Count (using the ‘grep -o’ command at the shell command line) the number of pages in which the word ‘prison’ occurs at least once, for:

– the workset LittleDorrit1; and

– the workset BleakHouse1

• Compare these two counts

Occurrences of “prison” in Bleak House and Little Dorrit

• Count (separately for Little Dorrit and Bleak House)

– the number of pages in which the word ‘prison’ occurs at least once:

• Downloaded EF dataset corresponding to the LittleDorrit1 workset: hvd.32044025670571.basic.json

– grep -o prison hvd.32044025670571.basic.json | wc -l

» Returns: 173

• Downloaded EF dataset corresponding to the BleakHouse1 workset: miun.aca8482,0001,001.basic.json

– grep -o prison miun.aca8482,0001,001.basic.json | wc -l

» Returns: 29

Compare these two counts:

173 >> 29

(‘Prison’ is far more prevalent in Little Dorrit than in Bleak House!)

(Q.E.D.)

Occurrences of “prison” in Bleak House and Little Dorrit

(caveats)

• We simply counted the occurrences of the word “prison” within each json. This does give us a rough estimate, but to make a precise, accurate computation, you should actually parse the json to count the frequency of occurrences of “prison”.

– (Try this as an exercise. You may want to try writing a simple program in a programming language (e.g. Python) to carry out the computation.)

• Another simplification: grep matched the lowercase string “prison” wherever it occurs, so it also picked up expressions that merely contain it, e.g. “imprisonment”, “prisoner”, “prisons”, “prisoners”, while missing capitalized forms such as “Prison”.

– For a large sample, this ought not to matter so much.

– However, as an exercise, you may want to redo the activity so that you control what is counted: only the exact word “prison”, or any expression that contains the string prison, regardless of capitalization.

• (Hint: Type man grep at the Unix command line to see what other options there are for using grep, and see if grep can do this job for you.)
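A sketch of the parsing exercise. `grep -o prison file | wc -l` counts every match of the lowercase string prison in the file, i.e. roughly one per distinct matching token form per page section; parsing the JSON lets you say exactly what you count (pages versus occurrences, exact word versus variants, with or without case). The page layout used here (features → pages → header/body/footer → tokenPosCount) is an assumption based on the partial view of a basic EF file, and the function names are hypothetical.

```python
import json
import re

SECTIONS = ("header", "body", "footer")

def _matching_tokens(page, regex):
    """Yield (token, total count) for tokens on one page that fully match regex."""
    for name in SECTIONS:
        section = page.get(name) or {}
        for token, by_pos in section.get("tokenPosCount", {}).items():
            if regex.fullmatch(token):
                yield token, sum(by_pos.values())

def pages_with(ef, pattern, flags=re.IGNORECASE):
    """Number of pages on which at least one token matches `pattern`."""
    regex = re.compile(pattern, flags)
    return sum(1 for page in ef["features"]["pages"]
               if any(True for _ in _matching_tokens(page, regex)))

def occurrences(ef, pattern, flags=re.IGNORECASE):
    """Total number of occurrences of tokens matching `pattern` in the volume."""
    regex = re.compile(pattern, flags)
    return sum(n for page in ef["features"]["pages"]
               for _, n in _matching_tokens(page, regex))

# Usage:
# with open("hvd.32044025670571.basic.json", encoding="utf-8") as f:
#     ef = json.load(f)
# pages_with(ef, r"prison")          # the word "prison" only, any capitalization
# pages_with(ef, r"\w*prison\w*")    # also prisons, prisoner, imprisonment, ...
# occurrences(ef, r"prison")         # frequency rather than number of pages
```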

Activity 1:

Count occurrences of “prison” in Bleak House and Little Dorrit

• In this activity, you will do hands-on what you just observed in the slide presentation

• Grab Activity 1 Handout (paper version)

• Go to: http://people.lis.illinois.edu/~sayan/HILT2015Workshop2 for the online version of the handout, “Activity1_Handout_HILT_Advanced_Workshop.docx”.

• Follow the instructions in the handout.

– For the Unix commands, you may want to copy-paste the commands directly from the online document, so that you won’t make typos while copying from paper.

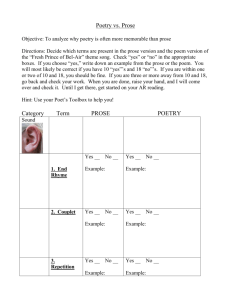

Distinguishing prose from poetry

• English verse traditionally (at least until around the 20th century) had the first letter of each line capitalized.

– Evidently, this is not the case for English prose!

• Intuition:

– In a workset, the more poetry there is, the higher the proportion of line-initial letters that are capitalized

» Try to corroborate this intuition in the case of two worksets consisting, respectively, of a volume of verse and a volume of prose

Prose and poetry in Keats and Coleridge

• Coleridge wrote a lot of poetry and prose. Almost all that Keats wrote was poetry.

• Let’s try to corroborate this!

We put these two books (each in its own workset):

– The Works of Samuel Taylor Coleridge: Prose and Verse [from the University of Wisconsin Library]

• http://babel.hathitrust.org/cgi/pt?id=wu.89001273754;view=1up;seq=1

– The Poetical Works of John Keats [from the University of Illinois Library]

• http://babel.hathitrust.org/cgi/pt?id=uiug.30112065926690;view=1up;seq=1

• We then downloaded the two corresponding “advanced” jsons via the Extracted Features Syncing algorithm.

Distinguishing prose from poetry

• The Works of Samuel Taylor Coleridge: Prose and Verse (wu.89001273754)

– % cat wu.89001273754.advanced.json | grep -o '"S"' | wc -l

• 462 (Call this CS)

– % cat wu.89001273754.advanced.json | grep -o '"s"' | wc -l

• 1003 (Call this Cs)

– Proportion of ‘S’ (capitalized) to all ‘s’ (capitalized or not)

= CS / (CS + Cs)

= 462/(462 + 1003)

= 0.315

= 32 %

Distinguishing prose from poetry

• The Poetical Works of John Keats (uiug.30112065926690)

– % cat uiug.30112065926690.advanced.json | grep -o '"S"' | wc -l

• 239 (Call it KS)

– % cat uiug.30112065926690.advanced.json | grep -o '"s"' | wc -l

• 209 (Call it Ks)

– Proportion of ‘S’ (capitalized) to all ‘s’ (capitalized or not)

= KS / (KS + Ks)

= 239/(239 + 209)

= 0.533

= 53 %

Distinguishing prose from poetry

• Proportion of ‘S’ (capitalized) to all ‘s’ (capitalized or not) in

The Poetical Works of John Keats

= 53 %

• Proportion of ‘S’ (capitalized) to all ‘s’ (capitalized or not) in

The Works of Samuel Taylor Coleridge: Prose and Verse

= 32 %

53% is much higher than 32%

This corroborates the intuition!

Distinguishing prose from poetry

(caveats)

• For simplicity, we deliberately made the two worksets consist of a single volume each.

• However, this could introduce sampling error:

– For example, what if the particular edition of Keats’s collected works that was chosen for analysis contains a lot of textual apparatus in prose? That could make it seem that the proportion of prose in Keats’s work is more than that in Coleridge’s!

• Always try to take a large enough sample (something which is true of statistical analysis in general).

– For example, in this particular case, you would ideally want, in a real-life situation, to have several volumes of Coleridge’s collected works in a “Coleridge” workset, and likewise several volumes of Keats’s collected works in a “Keats” workset.

Distinguishing prose from poetry

(more caveats)

• We made another simplification:

• We simply counted the occurrences of the strings “S” and “s” within the json.

• This gave us only a rough estimate of the counts recorded in the extracted feature called beginLineChars, because the same strings can also occur elsewhere in the json (e.g. as keys of endLineChars).

• However, to make a precise, accurate computation, you should actually parse the json to count the frequency of occurrences of “S” and “s” within beginLineChars for each json.

– (Try this as an exercise. You may want to try writing a simple program in a programming language (e.g. Python) to carry out the computation.)
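A sketch of the beginLineChars exercise. It assumes the EF “advanced” files use the same page layout as the basic ones, with each section holding a beginLineChars mapping from the first character of a line to a count; check this against your own files. It computes the same ratio as on the slides, CS / (CS + Cs), but only from beginLineChars. The function names are hypothetical.

```python
import json
import math

SECTIONS = ("header", "body", "footer")

def begin_line_counts(ef, chars, sections=SECTIONS):
    """Sum beginLineChars counts for the given characters over all pages.

    Assumed layout: ef["features"]["pages"][i][section]["beginLineChars"]
    maps the first character of a line to the number of lines starting with it.
    """
    totals = dict.fromkeys(chars, 0)
    for page in ef["features"]["pages"]:
        for name in sections:
            begin = (page.get(name) or {}).get("beginLineChars", {})
            for ch in chars:
                totals[ch] += begin.get(ch, 0)
    return totals

def capital_share(ef, letter="s", sections=SECTIONS):
    """Share of line-initial `letter` that is capitalized: C_S / (C_S + C_s)."""
    upper, lower = letter.upper(), letter.lower()
    t = begin_line_counts(ef, (upper, lower), sections)
    total = t[upper] + t[lower]
    return t[upper] / total if total else math.nan

# Usage:
# with open("wu.89001273754.advanced.json", encoding="utf-8") as f:
#     print(capital_share(json.load(f)))
```

Passing `sections=("body",)` would ignore running headers and footers, which are often capitalized regardless of genre.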

Activity 2

• In this activity, you will do hands-on what you just observed in the slide presentation

• Grab Activity 2 Handout (paper version)

• Go to http://people.lis.illinois.edu/~sayan/HILT2015Workshop2 for the online version of the handout, “Activity2_Handout_HILT_Advanced_Workshop.docx”.

• Follow the instructions in the document.

• For the Unix commands, you may want to copy-paste the commands from the online document, so that you won’t make typos while copying from paper.

Different characteristics of prose and poetry (contd.)

• As a future exercise at home, choose a work by Coleridge that is pure prose (e.g. Coleridge’s Biographia Literaria), and repeat the above calculation.

– Hypothesis:

• The proportion of ‘S’ (capitalized) to all ‘s’ (capitalized or not) will be below even 32%

• You could try verifying this hypothesis at home!

Distinguishing prose from poetry (contd.):

You could create larger Keats and Coleridge worksets!

Distinguishing prose from poetry:

Creating Keats and Coleridge worksets

• For a manageable workset size

– Select the items from only the first two pages of results (i.e. the first 20 items)

• In the author field, type:

– john AND keats

– samuel AND coleridge

• Remember to capitalize the AND.

Distinguishing prose from poetry:

Keats and Coleridge worksets (contd.)

• We could (although we won’t) repeat the previous exercise on larger Keats and Coleridge worksets.

– Since Keats wrote mostly poetry, while Coleridge wrote lots of prose as well as poetry, we would expect the same kinds of results for these larger Keats and Coleridge worksets as well.

– As an exercise, verify this hypothesis (at home)!

– In general, we can hypothesize that the higher the proportion of capitalized letters at the beginnings of lines, the larger the proportion of poetry in a workset.
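To test the general hypothesis on a larger workset, one option is to pool the beginLineChars counts of all its volumes and ask what share of line-initial letters are uppercase. This sketch assumes the same advanced-file layout as above (features → pages → section → beginLineChars); non-letters such as digits and punctuation are skipped, and pooling means long volumes weigh more than short ones (averaging per-volume shares would be an alternative). The function names and the file names in the usage comment are placeholders.

```python
import json
import math

def capitalized_line_share(volumes, sections=("header", "body", "footer")):
    """Share of line-initial letters that are uppercase, pooled over a workset.

    `volumes` is an iterable of parsed EF advanced files; characters that are
    neither upper- nor lowercase letters (digits, punctuation) are skipped.
    """
    upper = lower = 0
    for ef in volumes:
        for page in ef["features"]["pages"]:
            for name in sections:
                begin = (page.get(name) or {}).get("beginLineChars", {})
                for ch, n in begin.items():
                    if ch.isupper():
                        upper += n
                    elif ch.islower():
                        lower += n
    total = upper + lower
    return upper / total if total else math.nan

def load_all(paths):
    """Lazily parse a list of EF JSON files."""
    for path in paths:
        with open(path, encoding="utf-8") as f:
            yield json.load(f)

# Usage (placeholder file names for the volumes of a "Keats" workset):
# keats = capitalized_line_share(load_all(["vol1.advanced.json", "vol2.advanced.json"]))
```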

Extracted Features (EF) and Data Capsule (DC)

(Comparison/Contrast)

• Both are:

• Mechanisms to enable non-consumptive analysis of copyrighted text

• You may want to use EF when:

• You want to see/access some representation of the text

• EF permits you to access the extracted features, which are a representation of the text

• Working with JSONs is useful/familiar to you

• The EF dataset is in the form of JSONs

• You may want to use DC when:

• Relational/sequential information about words on a page is important to you

• The EF dataset consists of bags-of-words, losing sequential/relational information

HTRC Extracted Features and HTRC Data Capsule are complementary, not competitive, approaches

Downloading EF data for a workset can help a user identify (e.g. by doing analysis using frequency counts) a smaller body of texts…

that the user wants to subject to a closer analysis…

using techniques that bag-of-words can't support,…

such as techniques that make use of the sequential / relational information between words and sentences

Topic modeling in more detail

Useful references (some of which we will be drawing from):

Blei, David M. ‘Probabilistic Topic Models.’ Communications of the Association for Computing Machinery (ACM), Vol. 55, No. 4. April 2012.

Schmidt, Benjamin. ‘Words Alone: Dismantling Topic Models in the Humanities.’ Journal of Digital Humanities, Vol. 2, No. 1. Winter 2012.

Murdock, Jaimie and Colin Allen. ‘Visualization Techniques for Topic Model Checking.’ Annual Meeting of the Association for the Advancement of Artificial Intelligence (AAAI), 2015.

Brett, Megan R. ‘Topic Modeling: A Basic Introduction.’ Journal of Digital Humanities, Vol. 2, No. 1. Winter 2012.

Goldstone, Andrew and Ted Underwood. ‘What can topic models of PMLA teach us about the history of literary scholarship?’ The Stone and the Shell Blog, Dec 14, 2012.

Topic modeling (recap)

Recapitulation from introductory workshop:

What are topic models?

“Algorithms for discovering the main themes

that pervade a large and otherwise

unstructured collection of documents.” (Blei)


What do topic models do?

“Organize a collection according to

discovered themes.” (Blei)


Topic modeling (recapitulation)

(‘Visualize single texts’ in Underwood’s taxonomy)

• Partial snapshot of the results of topic modeling Bleak House using HTRC Portal’s TM algorithm (with number of tokens/topic = 20):

Topic Modeling (TM) algorithm:

What is happening “under the hood”?

• A “topic model” assigns every word in every document to one of a given number of topics.

Figure credit: Blei (2012)


• Three terms are at play:

• word (we know what ‘word’ is)

• topic (this term needs unpacking)

• document (this term needs unpacking)

• A topic is a distribution over words: a guess made by the TM algorithm about how words tend to co-occur in a document.

• A document is the basic unit into which the TM algorithm divides the body of text (the corpus) being analyzed

• For the Portal TM algorithm, ‘documents’ are individual pages

• If you are running your own TM algorithm with EF data, you can choose what a ‘document’ is for you (the finest grain being the page)

Topic Modeling (TM) algorithm:

What is happening “under the hood”? (contd.)

• Depending on how you choose your parameters:

• how many topics you ask the algorithm to create, and

• how many words you ask the algorithm to make each topic contain,

• you will get different results.

• A topic is a probability distribution over words: a guess made by the algorithm about how words tend to co-occur in a document.

• Similarly, every document is modeled as a mixture of topics in different proportions.

• The algorithm

• knows nothing about the content (subject matter) of any document

• knows nothing about the order of words of any given document.
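To make the “under the hood” description concrete, here is a minimal collapsed Gibbs sampler for LDA, the model family that tools such as Mallet implement. It is a teaching sketch under stated assumptions, not the HTRC Portal’s or Mallet’s code: documents are plain token lists (pages, say), the priors α and β are symmetric, and there is no hyperparameter optimization. Every token is assigned a topic, a topic is a word distribution (the counts in `nkw`), each document is a topic mixture (the counts in `ndk`), and only counts enter the sampling step, so word order never matters. The function names are hypothetical.

```python
import random
from collections import Counter

def lda_gibbs(docs, K, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA with symmetric priors alpha and beta.

    docs: list of documents, each a list of tokens.
    Returns z (topic of every token), ndk (topic counts per document) and
    nkw (word counts per topic).
    """
    rng = random.Random(seed)
    V = len({w for doc in docs for w in doc})     # vocabulary size
    ndk = [[0] * K for _ in docs]                 # document -> topic counts
    nkw = [Counter() for _ in range(K)]           # topic -> word counts
    nk = [0] * K                                  # tokens per topic
    z = []
    for d, doc in enumerate(docs):                # random initial assignment
        z.append([])
        for w in doc:
            k = rng.randrange(K)
            z[d].append(k)
            ndk[d][k] += 1
            nkw[k][w] += 1
            nk[k] += 1
    topics = range(K)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]                       # take this token out of the counts
                ndk[d][k] -= 1
                nkw[k][w] -= 1
                nk[k] -= 1
                # P(topic t | all other assignments), up to a constant
                weights = [(ndk[d][t] + alpha) * (nkw[t][w] + beta) / (nk[t] + V * beta)
                           for t in topics]
                k = rng.choices(topics, weights=weights)[0]
                z[d][i] = k                       # put it back under the sampled topic
                ndk[d][k] += 1
                nkw[k][w] += 1
                nk[k] += 1
    return z, ndk, nkw

def top_words(nkw, n=5):
    """The n most frequent words of each topic (n only affects what is displayed)."""
    return [[w for w, _ in counts.most_common(n)] for counts in nkw]
```

For example, `z, ndk, nkw = lda_gibbs(pages, K=20)` followed by `top_words(nkw, n=20)`, where `pages` is a list of token lists you have built yourself. In this sketch K, α and β change the fitted model, while the number of words shown per topic only changes how much of each topic you see.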

Evaluation of topic model quality

• A topic modeling algorithm can create many different topic models

– based on different choices of parameters for the model

• Which of these topic models should be used for a particular corpus?

– For large, unstructured corpora, often the answer is:

• Use the topic model that is the most interpretable.

Model checking

• The “model checking problem”

– How can we compare topic models based on how interpretable they are?

• Use statistical / mathematical measures to compare

– E.g.: compare models based on the coherence of the topics found

» mathematically estimate coherence using similarity measures and thesauri like WordNet

– Such approaches are not always easy for non-specialists without math / stats training

• Use inspection / visualization methods to compare

– the usual approach for humanists using TM
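As one concrete example of a mathematical coherence measure, the sketch below computes UMass coherence (Mimno et al., 2011), which scores a topic’s top words by how often they co-occur in the same documents of the corpus. Note that this swaps in a co-occurrence-based measure; it does not use WordNet or any other thesaurus. Documents are assumed to be collections of tokens, and the function name is hypothetical.

```python
import math

def umass_coherence(top_words, docs):
    """UMass coherence of one topic.

    top_words: the topic's words, most probable first.
    docs: iterable of documents (any collection of tokens).
    Sums log((D(w_m, w_l) + 1) / D(w_l)) over word pairs with l < m, where D counts
    documents containing all the given words. Higher scores mean the top words
    co-occur more often.
    """
    doc_sets = [set(doc) for doc in docs]

    def doc_freq(*words):
        return sum(1 for s in doc_sets if all(w in s for w in words))

    score = 0.0
    for m in range(1, len(top_words)):
        for l in range(m):
            d_l = doc_freq(top_words[l])
            if d_l == 0:
                raise ValueError(f"{top_words[l]!r} occurs in no document")
            score += math.log((doc_freq(top_words[m], top_words[l]) + 1) / d_l)
    return score
```

To compare two models, one could average this score over each model’s topics; it complements, rather than replaces, inspecting the topics yourself.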

Requirements for successful topic modeling?

• A sufficiently large corpus:

• Rule of thumb: the number of documents should be “at least in the hundreds” (Brett, 2012)

• Recall that we topic-modeled single, individual novels (e.g. Little Dorrit, Bleak House) in Workshop 1

• Doing so made sense only because those novels are long (hundreds of pages), and page = document for the TM algorithm in the HTRC Portal.

• Some “familiarity with the corpus” (Brett, 2012)

• Sounds counterintuitive, but it is needed. Why?

• Some “topics” produced by TM can be wildly off the mark

• You have to be able to recognize spurious “topics”

Pitfalls in topic modeling

“The downside of too-aggressive topic modeling is that it occasionally leads us to treat junk as an oracle.” (Ben Schmidt)

“A poorly supervised machine-learning algorithm is like a bad research assistant. It might produce some unexpected constellations that show flickers of deeper truths, but there are lots of ways to get creative rearrangements.” (Ben Schmidt)

Limitations of topic modeling

(and how to guard against them)

Good rules of thumb to follow:

• Don’t blindly trust the HTRC portal’s word-cloud visualization for TM.

• Always also open the results text file and examine its contents, to see if they make some intuitive sense

• Be aware that choices made about stopwords can shape results

• (More about that soon!)

• Treat topic modeling results “as a heuristic, and not as evidence” (Ted Underwood).

• Use TM results not as an end in themselves, but as a guide for asking further questions of your corpus

• e.g. for deciding which texts from your corpus you should now close-read.

Limitations of topic modeling

(and how to guard against them) [contd.]

• Use the HTRC Portal topic model algorithm for preliminary/exploratory topic modeling of your workset

• But for serious research/publishing, a standalone TM tool independent of HTRC may be needed

• e.g. Mallet [McCallum 2002], http://mallet.cs.umass.edu

• To do TM with HT data outside of HTRC:

• Approach the HathiTrust for the OCR content and do TM directly with the OCR (if your institution is a member of the HT consortium); or

• Download text data as EF content (JSONs)

When should you use the HTRC Portal’s topic modeling algorithm, and when should you use Mallet?

• Know the limitations of your tool:

• The Portal-provided TM algorithm

• makes a tradeoff between simplicity-of-use and power:

• Does allow you to tune these 2 parameters:

• the number of topics produced (K)

• the number of words per topic (N)

• Does not allow you to tune these 2 “hyper-parameters”:

• α, which controls how evenly each document’s words are spread across topics (small α: each document is dominated by a few topics)

• β, which controls how evenly each topic’s probability is spread across words (small β: each topic is dominated by a few words)

• Mallet as a standalone TM tool allows you to vary (“tune”)

• all four parameters (“knobs”): K, N, α, and β
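In standard LDA, α and β are the concentration parameters of symmetric Dirichlet priors: α on each document’s topic mixture, β on each topic’s word distribution. Small values favor concentrated distributions, large values favor even spreads. The sketch below (standard library only; it draws Dirichlet samples as normalized Gamma variates, a standard construction) shows that effect by measuring how much weight, on average, the largest component receives. The function names are hypothetical.

```python
import random

def sample_dirichlet(alpha, k, rng):
    """One draw from a symmetric Dirichlet(alpha) over k components."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [x / total for x in draws]

def mean_largest_share(alpha, k=10, trials=2000, seed=0):
    """Average weight of the largest component: near 1 = concentrated, near 1/k = even."""
    rng = random.Random(seed)
    return sum(max(sample_dirichlet(alpha, k, rng)) for _ in range(trials)) / trials

for a in (0.1, 1.0, 10.0):
    print(f"alpha = {a:>4}: largest share on average = {mean_largest_share(a):.2f}")
```

Read the k components as topics in one document’s mixture (for α) or as words in one topic (for β).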

Topic Explorer (Murdock and Allen):

Model checking using visualization

• Topic Explorer:

– Created by Jaimie Murdock and Colin Allen (Indiana University), collaborators of the HTRC

– a systematic/advanced way of checking topic models by visualization

• provides an intuitive, visual estimate of the interpretability of the model

– well-suited for scholarship in the humanities

– Available for download at: https://github.com/inpho/topic-explorer

– We will discuss it a bit later

“Repurposing” algorithms through the use of stop lists

• Choices made about stopwords can significantly shape results of algorithms

– Examples:

• A linguist may be interested in questions about language itself

• A sociologist or historian may be interested in the substantive content of language

– A linguist may want to run algorithms blocking out certain content words, such as place names and personal names

– A sociologist or historian may be interested in blocking out function words, such as prepositions, conjunctions, articles, etc.

– The same algorithm can be shaped, through the use of stop lists, to answer different kinds of questions
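A toy illustration of how one and the same counting step answers different questions depending on the stop list. The token list and both stop lists are invented and tiny, and the function name is hypothetical: with no stop list, function words dominate; blocking function words surfaces content words (names included); also blocking a name list pushes past the character names.

```python
from collections import Counter

# Tiny, made-up lists for illustration only; real stop lists are much longer.
FUNCTION_WORDS = {"the", "of", "and", "a", "to", "in"}
NAMES = {"dorrit", "arthur"}

def top_terms(tokens, stoplist=frozenset(), n=2):
    """Most frequent tokens after removing everything in `stoplist`."""
    counts = Counter(t for t in tokens if t not in stoplist)
    return [w for w, _ in counts.most_common(n)]

tokens = "the the the the of of of prison prison prison dorrit dorrit debt".split()

print(top_terms(tokens))                           # no stop list: function words win
print(top_terms(tokens, FUNCTION_WORDS))           # content words, names included
print(top_terms(tokens, FUNCTION_WORDS | NAMES))   # content words without names
```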

The power of stop lists (especially in topic modeling)

Stop lists can significantly affect how broad/focused the topics are

• The “character problem”:

Matthew Jockers (in http://www.matthewjockers.net/2013/04/12/secret-recipe-for-topic-modeling-themes/):

“I am running a smallish topic modeling job over a corpus of 50 novels …

Without any preprocessing I get topics that look like these two:

“…one is obviously a “Moby Dick” topic and the other a “Dracula” topic.”…topics formed in this way because of the power of the character names…

The presence of the character names tends to bias the model and make it collect collocates that cluster around character names… [so] we get these broad novel-specific topics.”

The power of stop lists

(especially in topic modeling)

• The “character problem”:

Matthew Jockers (in http://www.matthewjockers.net/2013/04/12/secret-recipe-for-topic-modeling-themes/):

“To deal with this character “problem,” I begin by expanding the usual topic modeling “stop list” from the 100 or so high frequency, closed class words (such as “the, of, a, and. . .”) to include about 5,600 common names, or “named entities.” I posted this “expanded stop list” to my blog… feel free to copy it… I built my expanded stop list through a combination of named entity recognition and the scraping of baby name web sites… with the expanded stop list, I get topics that are much less about individual novels and much more about themes that cross novels. [An example:]”

The power of stop lists

(especially in topic modeling)

• The “character problem”:

Matthew Jockers (in http://www.matthewjockers.net/2013/04/12/secret-recipe-for-topic-modeling-themes/):

“As a next step in preprocessing, therefore, I employ Part-of-Speech tagging or “POS-Tagging” in order to identify and ultimately “stop out” all of the words that are not nouns!...

• “…let me justify this suggestion with a small but important caveat: I think this is a good way to capture thematic information;

“it certainly does not capture such things as affect (i.e. attitudes towards the theme) or other nuances that may be very important to literary analysis and interpretation.”
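EF data already carries part-of-speech tags, so a rough version of this noun-only preprocessing can be done without running a tagger yourself. This sketch assumes the tags are Penn Treebank tags (the EF excerpt shows NN, NNP, JJ, DT and so on) and the page layout features → pages → section → tokenPosCount; it keeps common nouns (NN, NNS) and, by default, drops proper nouns (NNP, NNPS), which also removes most character names. It approximates, rather than reproduces, Jockers’s workflow, and the function name is hypothetical.

```python
from collections import Counter

COMMON_NOUNS = frozenset({"NN", "NNS"})   # Penn Treebank: singular and plural common nouns

def noun_counts(ef, keep=COMMON_NOUNS, sections=("body",)):
    """Count only the occurrences of tokens tagged with one of the `keep` tags.

    A token can carry several tags in tokenPosCount (e.g. {"NN": 1, "VB": 3});
    only its occurrences under a kept tag are counted.
    """
    counts = Counter()
    for page in ef["features"]["pages"]:
        for name in sections:
            section = page.get(name) or {}
            for token, by_pos in section.get("tokenPosCount", {}).items():
                n = sum(c for tag, c in by_pos.items() if tag in keep)
                if n:
                    counts[token.lower()] += n
    return counts
```

The resulting per-page or per-volume noun counts could then serve as the “documents” for your own topic model.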

Stoplists and HTRC portal algorithms

• Currently, some (not all) of the portal algorithms allow users to specify a URL where they have put their custom stop list.

• In the future, the workflow will be modified to make this possible for all the portal algorithms.

• Activity (for you to explore later):

– Explore the portal algorithms and see which of them currently allow you to specify your own stop lists

Dunning log-likelihood algorithm

(What is happening under the hood?)

Recapitulation:

Abbreviated plot summaries from the Dickens Fellowship website:

Bleak House: A prolonged law case concerning the distribution of an estate, which brings misery and ruin to the suitors but great profit to the lawyers, is the foundation for this story. Bleak House is the home of John Jarndyce, principal member of the family involved in the law case.

Little Dorrit: Here Dickens plays on the theme of imprisonment, drawing on his own experience as a boy of visiting his father in a debtors' prison. William Dorrit is locked up for years in that prison, attended daily by his daughter, Little Dorrit. Her unappreciated self-sacrifice comes to the attention of Arthur Clennam, recently returned from China, who helps bring about her father's release but is himself incarcerated for a time.

Dunning’s Log-likelihood:

Little Dorrit vs. Bleak House

Little Dorrit = analysis workset

Bleak House = reference workset

Words that are represented “most times more” in Little Dorrit than in Bleak House.

Dunning’s Log-likelihood:

Bleak House vs. Little Dorrit

Bleak House = analysis workset

Little Dorrit = reference workset

Words that are represented “most times more” in Bleak House than in Little Dorrit.

Dunning’s log-likelihood algorithm

in more detail

• Useful references (some of which we will be drawing from):

‒ Schmidt, Benjamin. ‘Comparing Corpuses by Word Use.’ Oct 6, 2011.

‒ Underwood, Ted. ‘Identifying diction that characterizes an author or genre.’ Nov 9, 2011.

• The Dunning “log-likelihood” (“logarithmic likelihood”) statistic provides an idea about: Which words tend to occur most times more in the analysis workset with respect to the reference workset?

• Although the word cloud represents the words with the higher Dunning scores as bigger, the Dunning score is not about simple frequency counts. It is a statistical measure (a log-likelihood ratio) of how unlikely the observed difference in frequency would be if the word were equally common in both worksets; hence the “tend to occur”.
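For reference, the statistic for a single word can be written as a 2×2 comparison (notation introduced here, not taken from the slides): a and b are the word’s counts in the analysis and reference worksets, c and d are their total token counts, and N = c + d. The four observed cells are the word’s counts and the counts of all other tokens in each workset; each expected count E is what that cell would hold if the word were equally frequent in both worksets.

```latex
\begin{aligned}
&O_{11} = a,\quad O_{12} = b,\quad O_{21} = c - a,\quad O_{22} = d - b\\
&R_1 = a + b,\quad R_2 = N - a - b,\quad C_1 = c,\quad C_2 = d,\quad E_{ij} = \frac{R_i\,C_j}{N}\\
&G^2 = 2\sum_{i,j} O_{ij}\,\ln\frac{O_{ij}}{E_{ij}}\qquad\text{(terms with } O_{ij} = 0 \text{ count as } 0\text{)}
\end{aligned}
```

Some corpus-linguistics tools use a shortened form that keeps only the two word cells (O11 and O12). G² itself is non-negative; comparing a/c with b/d tells you in which workset the word is overrepresented.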

Dunning’s log-likelihood statistic

(under the hood)

From Ted Underwood (2011):

“if I want to know what words or phrases characterize [some corpus A in relation to some other corpus B], how do I find out?...

“If you compare ratios (dividing word frequencies in the corpus A that interests you, by the frequencies in a corpus B used as a point of comparison), you’ll get a list of very rare words.

“But if you compare the absolute magnitude of the difference between frequencies [subtraction], you’ll get a list of very common words.

“So the standard algorithm [instead] is Dunning’s log likelihood: a formula that incorporates both absolute magnitude (O is the observed frequency) and a ratio (O/E is the observed frequency divided by the frequency you would expect).”
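A minimal sketch of that computation (standard library only; the function names are hypothetical, and this is not the HTRC Portal’s implementation). It uses the full 2×2 table of the word versus all other tokens, treats 0·ln 0 as 0, and attaches a sign so that words overrepresented in the analysis workset rank first, mirroring the question the slides ask.

```python
import math

def dunning_g2(a, b, c, d):
    """Dunning's log-likelihood G^2 for one word.

    a, b: counts of the word in the analysis and reference corpora
    c, d: total token counts of the analysis and reference corpora
    """
    if c <= 0 or d <= 0 or not (0 <= a <= c and 0 <= b <= d):
        raise ValueError("need c, d > 0, 0 <= a <= c and 0 <= b <= d")
    n = c + d
    observed = ((a, b), (c - a, d - b))     # word / all other tokens
    rows = (a + b, n - a - b)
    cols = (c, d)
    g2 = 0.0
    for i in range(2):
        for j in range(2):
            o = observed[i][j]
            if o > 0:                       # 0 * ln(0) is taken as 0
                e = rows[i] * cols[j] / n   # expected count if frequencies were equal
                g2 += o * math.log(o / e)
    return 2 * g2

def signed_g2(a, b, c, d):
    """G^2, made negative when the word is relatively rarer in the analysis corpus."""
    g2 = dunning_g2(a, b, c, d)
    return g2 if a / c >= b / d else -g2

def rank_words(analysis, reference, top=20):
    """Words most overrepresented in `analysis` relative to `reference` (word -> count)."""
    c, d = sum(analysis.values()), sum(reference.values())
    vocab = set(analysis) | set(reference)
    scores = {w: signed_g2(analysis.get(w, 0), reference.get(w, 0), c, d) for w in vocab}
    return sorted(vocab, key=scores.get, reverse=True)[:top]
```

For example, `rank_words(little_dorrit_counts, bleak_house_counts)` would take two word-count dictionaries you have built yourself, e.g. from EF data. A word with the same relative frequency in both worksets scores 0, which is the O/E part of the formula at work.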

Dunning’s log-likelihood algorithm

(Class Activity: Think about Pedagogical use)

• Activity 3:

• Think about the Little Dorrit / Bleak House Dunning log-likelihood analysis.

• Discuss/brainstorm in small groups (of two) how you may use the Dunning log-likelihood analysis algorithm in connection with a writing assignment for undergraduate students

• Can a Dunning log-likelihood computation complement a “compare-and-contrast” essay-writing task? How can the quantitative analysis help the student write the essay?

• Can your group come up with a prompt for such an assignment?

• Can you think of pairs of books that would be a good fit for such an assignment?

• Can you think of ways to make the Dunning log-likelihood algorithm work in tandem with HathiTrust+Bookworm?

Similar words (to a given word) by chronology:

David Mimno’s WordSim

• Co-occurrence-in-context-based per-year “similar” words finder API, http://mimno.infosci.cornell.edu/wordsim/nearest.html (David Mimno):

• uses HTRC’s 250,000-book prototype

• may eventually work with HTRC’s Extracted Features release (4.8 million pre-1923 volumes)

• Try it out!

Jaimie Murdock and Colin Allen’s

Topic Explorer

• Visualization for document-document relations and topic-document relations

• Interactive

• Supports rapid experimentation for interpretive hypotheses

• On clicking a topic,

• documents will reorder

• according to that topic’s weight in each document

• topic bars will reorder

• according to the topic weights in the highest weighted document

• Keeps the following visible:

• comparative topic distribution

• document composition

• Supports indirect comparison of different topic models (with different numbers of topics)

• Uses multiple windows,

• each with a model with a different number of topics

Jaimie Murdock and Colin Allen’s

Topic Explorer (interface)
