Benchmark Assessment


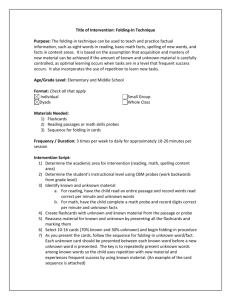

Local AIMSweb® Manager: Taking the Role
An introduction to:
• Course Overview
• Role of a Local AIMSweb Manager (LAM)
• Skills needed to become a successful LAM

Training Outcomes
• Learn the Big Picture:
  – Overview of the AIMSweb System and using Curriculum-Based Measurement (CBM)
  – Overview of Progress Monitoring to affect student achievement and determine the efficacy of interventions
• Learn to Administer and Score:
  – Oral Reading (R-CBM)
  – Reading Comprehension (MAZE)
  – Math Computation (M-CBM)
  – Early Numeracy (TEN)
• Learn to use AIMSweb Software:
  – Benchmark Assessments/Strategic Monitoring
  – Progress Monitoring
  – Management of the AIMSweb System
• Learn the fundamentals of organizing and implementing the AIMSweb Assessment System:
  – Training others in your organization
  – Student database upload
  – Expectations you should have for this course in assisting you with this process

Training Intentions
For the Novice or Advanced AIMSweb User:
– Materials: Receive training, materials, and information that are useful for training others.
– Administration & Scoring Practice: Learn how to conduct training sessions that ensure inter-rater reliability, plus related materials.
– Training Accounts: Learn how to use the AIMSweb software and how to request training accounts for your own trainings.
– Training Scripts: Follow along with pre-scripted scenarios that give you exposure to the software.
These materials may be duplicated and used in your own schools for subsequent training.

Realistic Expectations
– A comprehensive two-day workshop using a train-the-trainer approach.
– DURING THE WORKSHOP: The two days spent here provide you with solid familiarity, practice, and materials. This workshop will give you sufficient tools and exposure to the assessments and software to use as a springboard for further learning and successful adoption of AIMSweb.
– AFTER THE WORKSHOP: Frequent practice with the materials provided, plus self-study, is required to maintain and enhance the skills taught.
– Give yourself permission and time to experiment with the software, practice presenting the PowerPoint slides to others, and review readings as needed. Success comes most readily to those who spend time with the system until they reach a comfort level.
– Do not be frustrated if you leave here feeling less than fully confident with everything presented. This training will provide you with tools to use in the weeks and months to come until you gain fluency with the system; then you will feel most confident when training others.

Helpful Hints
– Take thoughtful notes on the PowerPoint slide presentations. These are the same slides you are welcome to use in your own organization to train others.
– Ask questions. If you’re wondering about something, someone else likely is too!
– Download and store these files in a safe location on your computer for future use.
– Build a support network: Get to know the people seated near you and gather their contact information. Later, it may help to share notes and talk about this training as you begin to implement and/or use AIMSweb.
– Not everyone here is taking this course in order to train others. Some may simply be improving their own knowledge or skills with AIMSweb. That is OK! Use the information presented in whatever ways are most meaningful to you. Everyone is very welcome here!

Continued Support
– AIMSonline Discussion Forum (located within your training accounts)
– Documentation: training scripts, user guides, Help button
– support@edformation.com: use (judiciously) for technical questions that are not answered by the tools already provided to you
– Web link with downloadable materials for this training

Let’s Begin: What Are Local AIMSweb Managers (LAMs)?
• LAMs are individuals who take a leadership position in the use of the AIMSweb system within their organizations.
• As a LAM, you will likely be responsible for training colleagues to use various components of the AIMSweb system, according to your organization’s needs.
• Successful LAMs have a strong background in assessment and standardized test administration.

Examples of LAMs include:
• School Psychologists, Speech Pathologists, other ancillary staff
• Teachers
• Administrators
• Reading Consultants
• Special Education Staff

Successful LAMs
Successful LAMs are able to:
• Gain a solid understanding of the concepts surrounding the use of CBM and AIMSweb
• Understand the importance of training staff well so that trustworthy data are obtained
• Become fluent with the key factors that make AIMSweb training and implementation successful within their organization:
  – Data integrity
  – Not taking on too much at once
  – Listening to the needs of your staff
  – Knowing the hierarchy of support available to you and staff
  – Building logical support systems within your organization

New to AIMSweb? Welcome to the AIMSweb System
AIMSweb is an assessment and reporting system using:
• Curriculum-Based Measurement (CBM) assessments
  – Cover all core academic areas
  – Scientifically researched for stability and validity
  – Designed for formative assessment, continually informing educators of the benefit instructional programs provide
• Data management and reporting software
  – User-friendly, web-based software
  – Used to manage and report student data
  – Reports assist staff in determining the efficacy of instructional programs over time
  – Program evaluation tool: assists educators in determining the value of instructional programs and interventions for populations such as:
    – Individual students
    – Small groups of students
    – Classrooms, grade levels
    – Schools, districts/large organizations

OVERVIEW OF CURRICULUM-BASED MEASUREMENT AS A GENERAL OUTCOME MEASURE
Mark R. Shinn, Ph.D.
Michelle M. Shinn, Ph.D.

Formative Evaluation to Inform Teaching
Summative Assessment: A culminating, mastery measure. Pass/fail-type assessments that summarize the knowledge students have learned. Typical summative assessments include:
• End-of-chapter tests
• High-stakes tests (e.g., state assessments)
• GRE, ACT, SAT, GMAT, etc.
• Driver’s license test
• Final exams
Formative Evaluation: The process of assessing student achievement during instruction to determine whether an instructional program is effective for individual students. It informs teaching:
• When students are progressing, continue using your instructional programs.
• When tests show that students are not progressing, you can change your instructional programs in meaningful ways.

Big Ideas of Benchmark Assessment
It’s about using General Outcome Measures (GOMs) for formative assessment/evaluation to:
• Inform teaching AND
• Ensure accountability.
It’s different from, but related to, summative high-stakes testing/evaluation, which:
• Doesn’t inform teaching.
• Is used mostly for accountability/motivation.

Today’s High-Stakes Evaluation World
High-stakes tests are USUALLY appropriate only for summative evaluation. They are NOT useful for the decisions teachers need to make every day (formative):
• For whom do I need to individualize instruction or find more intensive instructional programs?
• How do I organize my classroom for instructional grouping?
• How do I know that my teaching is “working” for each student so that I can change instruction when necessary?
They are also NOT very useful to administrators who must make decisions about allocating instructional resources, especially in a preventive or responsive model.

High-Stakes Evaluation World (continued)
Reliability/validity issues on high-stakes tests:
• Guessing factor (bubble-in, skipping items, etc.)
• Cheating, at various levels
• Culture-sensitivity concerns
• Test may not match what is taught
• Fatigue
• Enabling behaviors that the test requires may not be present
• Test anxiety
• Political pressures on student performance
Testing often takes place after a year or more of instruction, with long (annual) intervals between tests. Information about success and failure rates arrives too late to make changes. The primary use of high-stakes tests may then become to “assign the blame” to students, their parents, teachers, or schools.

High-Stakes Evaluation World (continued)
High stakes = high cost in terms of:
• Loss of instructional time
• Time for test taking
• Paying for the tests
In summary, typical high-stakes testing is:
• Too little!
• Too late!
• At too high a cost!

An Example: Weight
High Standard: All children will have a healthy weight by the end of third grade.
High-Stakes Assessment: Based on assessing body density.
• Weigh each student.
• Immerse each student in a large tub filled with water and measure the amount of water displaced.
• Divide weight by displacement to get density, a very accurate picture of physical status.

Weight (continued)
After 8-9 YEARS of growth, we would:
1. Place students who are “unhealthy” in remedial programs.
2. Create new health programs.
3. Blame the old health programs for being ineffective.
4. Blame the students (or their families) for over- or under-eating.

Formative Evaluation: Same Standard, Different Assessment
High Standard: All children will have a healthy weight by the end of third grade.
Benchmark Assessment: Monitor weight directly, frequently, and continuously. From birth, measure weight frequently and continuously with a simple, albeit less precise, general outcome measure: weight in pounds, using a scale.

At ANY Point in Development
The child could be weighed and a decision made about healthy weight. This process is:
• Efficient.
• Sufficiently accurate.
• Proactive.
• Cost-effective.
We would know each child’s health status before the high-stakes point!

AIMSweb in a Picture and a Sentence
AIMSweb is a 3-tier progress monitoring system based on direct, frequent, and continuous student assessment. Results are reported to students, parents, teachers, and administrators via a web-based data management and reporting system for the purpose of determining response to instruction.

Common Characteristics of GOMs
The same kind of evaluation technology as other professions. Powerful measures that are:
• Simple
• Accurate
• Efficient indicators of performance that guide and inform a variety of decisions
• A generalizable “thermometer” that allows reliable, valid comparisons across data

General Outcome Measures (GOMs) from Other Fields
• Medicine measures height, weight, temperature, and/or blood pressure.
• The Federal Reserve Board monitors the Consumer Price Index.
• Wall Street measures the Dow Jones Industrial Average.
• Companies report earnings per share.
• McDonald’s measures how many hamburgers it sells.

CBM Is a GOM Used for Scientific Reasons Based on Evidence
• Reliable and valid indicator of student achievement
• Simple, efficient, and of short duration to facilitate frequent administration by teachers
• Provides assessment information that helps teachers plan better instruction
• Sensitive to the improvement of students’ achievement over time
• Easily understood by teachers and parents
• Improves achievement when used to monitor progress

Things to Always Remember About CBM
• Designed to serve as “indicators” of general reading achievement: CBM probes don’t measure everything, but they measure the important things.
• Standardized tests to be given, scored, and interpreted in a standard way
• Researched with respect to psychometric properties to ensure accurate measures of learning

Items to Remember (continued)
• Sensitive to improvement in brief intervals of time
• Also tell us how students earned their scores (qualitative information)
• Designed to be as short as possible to ensure their “do-ability”
• Linked to decision making for promoting positive achievement and problem solving

What Is CBM?
• CBM is a form of Curriculum-Based Assessment (CBA).
• Curriculum-Based Measurement (CBM) is a method of monitoring student progress through direct, continuous assessment of basic skills.
• CBM is used to assess skills such as reading fluency, comprehension, spelling, mathematics, and written expression. Early literacy skills (phonics and phonological awareness) are assessed with similar measures that are downward extensions of CBM.
• CBM probes last from 1 to 4 minutes, depending on the skill being measured, and student performance is scored for speed and accuracy to determine proficiency. Because CBM probes are quick to administer and simple to score, they can be given frequently to provide continuous progress data. The results are charted, allowing timely evaluation based on hard data.

Origins of CBM as General Outcome Measures
Curriculum-Based Measurement (CBM) was developed more than 20 years ago by Stanley Deno at the University of Minnesota through a federal contract to develop a reliable and valid measurement system for evaluating basic skills growth. CBM is supported by more than 25 years of school-based research by the US Department of Education. Starting with reading, researchers have expanded their investigations to additional academic areas over the years. With significant additional research, AIMSweb now offers CBM assessments in 7 areas and 2 languages, with new areas currently being tested and developed.
Supporting documentation can be found in hundreds of articles, book chapters, and books in the professional literature describing the use of CBM to make a variety of important educational decisions.

Skill Areas Currently Assessable via AIMSweb
• Early Literacy [K-1 benchmark, Progress Monitor (PM) any age]
  – Letter Naming Fluency
  – Letter Sound Fluency
  – Phonemic Segmentation Fluency
  – Nonsense Word Fluency
• Early Numeracy (K-1 benchmark, PM any age)
  – Oral Counting
  – Number Identification
  – Quantity Discrimination
  – Missing Number
• Oral Reading (K-8, PM any age)
• MAZE (Reading Comprehension) (1-8, PM any age)
• Math Computation (1-6, PM any age)
• Math Facts (PM any age)
• Spelling (1-8, PM any age)
• Written Expression (1-8, PM any age)
• Early Literacy and Oral Reading in Spanish (K-8)

Advantages of CBM
• Direct measure of student performance.
• Correlates strongly with “best practices” for instruction and assessment, and with research-supported methods for assessment and intervention.
• Focus is on repeated measures of performance. (This cannot be done with most norm-referenced and standardized tests because of practice effects or a limited number of forms.)

Advantages of Using CBM
• Quick to administer, simple, easy, and cost-efficient.
• Performance is graphed and analyzed over time.
• Sensitive to even small improvements in performance. This is KEY, as most standardized/norm-referenced tests do NOT show small, incremental gains.
• CBM allows teachers to do what they already do, better!
• Can have many alternate forms, whereas most standardized tests have at most two forms.
• Frequent monitoring enables staff to see trends in individual and group performance and compare those trends with targets set for their students.

Big Ideas of Benchmark (Tier 1) Assessment
Benchmarking allows us to add systematic Formative Evaluation to current practice.
For Teachers (and Students)
• Early Identification of At-Risk Students
• Instructional Planning
• Progress Monitoring
For Parents
• Opportunities for Communication/Involvement
• Accountability
For Administrators
• Resource Allocation/Planning and Support
• Accountability

Benchmark Testing: Useful for Communicating with Parents
Designed for collaboration and communication with parents. Student achievement is enhanced by continuous teacher-parent communication about achievement growth. A Parent Report is produced for each benchmark testing period.

School Calendar Year 2006-2007: Benchmarking (Tier 1)
Benchmark testing takes place during a 2-week window within each of these periods:
• September 1 to October 15
• January 1 to February 1
• May 1 to June 1

Benchmark (Tier 1) for Oral Reading (R-CBM)
• A set of 3 probes (passages) at grade level (approximately grades 1 through 8).
• Administer the same set, three times per year, to all students.
• Requires 3 minutes per student, 3 times per year.

How the AIMSweb System Works for Benchmark (Tier 1): Oral Reading (R-CBM) as an Example
Research suggests there is no significant practice effect from repeating the same set of three passages across benchmark assessment periods. Using the same passage set for each benchmark increases confidence in the data obtained and reduces extraneous variables.
• Students read aloud for 1 minute from each of the three Edformation Standard Reading Assessment Passages.
• Passages contain meaningful, connected text.
• The number of words read correctly (WRC) and the number of errors are counted for each passage read.
• Scores are reported as WRC/errors.

Benefits of Using Edformation’s Standard Reading Assessment Passages
For additional data on R-CBM passages, review: Standard Reading Assessment Passages for Use in General Outcome Measurement: A Manual Describing Development and Technical Features, by Kathryn B. Howe, Ph.D., & Michelle M. Shinn, Ph.D. The passages:
• Are written to represent the general curriculum or to be “curriculum independent”
• Allow decision making about reading growth, regardless of between-school, between-school-district, or between-teacher differences in reading curriculum
• Are graded to be of equal difficulty
• Have numerous alternate forms for testing over time without practice effects

Sample R-CBM Assessment Passage: Student Copy
Standard Reading Assessment Passage Student Copy:
• No numbers
• Between 250 and 300 words (exception: 1st grade)
• An informative first sentence
• Same font style and size
• Text without pictures

Sample R-CBM Assessment Passage: Examiner Copy
Standard Reading Assessment Passage Examiner Copy: Pre-numbered so passages can be scored quickly and immediately.

Data: Get the MEDIAN Score for the Student’s 3 Passages
• Passage 1: 67/2 (1 min.)
• Passage 2: 85/8 (1 min.)
• Passage 3: 74/9 (1 min.)

Why Use the Median vs. the Average?
Averages are susceptible to outliers when dealing with small sets of numbers. With only three scores, one unusually high or low passage can pull the average well away from typical performance, while the median is unaffected by a single extreme score. The median is therefore a more reliable summary than the average for R-CBM.

Getting the MEDIAN for the 3 Passages
1. Throw out the HIGH and LOW scores for Words Read Correct (85 and 67 are dropped; 74 remains).
2. Throw out the HIGH and LOW scores for the Errors (9 and 2 are dropped; 8 remains). The remaining scores are the MEDIAN: 74/8.
3. Report this score in your AIMSweb account.

Managing Data after Assessment
• Take the median score for each student (e.g., 74/8) and report it in the AIMSweb System.
• AIMSweb instantly generates multiple reports for analysis and various decision-making purposes. A few of the many reports available appear here.

For Teachers: Classroom Report

Box & Whiskers Graphs (Box Plots): A Brief Explanation
AIMSweb commonly uses box plots to report data. This chart will help you become familiar with box plots. Think of a bell curve: box plots are somewhat similar in shape and representation.
Chart labels:
• Outlier
• Above Average Range
• 90th percentile
• 75th percentile
• Average range of population included in sample
• Median (50th percentile)
• Below Average Range
• 10th percentile
• 25th percentile

Sample Reports (screenshots not reproduced)
• Beginning of Year Status Report
• Individual Report: Student
• Know When Things Are Working
• Have Data to Know When Things Need Changing
• Data to Know That Changes Made a Difference
• Data to Know That Things Went Well
• Identifying At-Risk Students
• For Teachers: Classroom Report
• At-a-Glance Views of Student Ranking & Growth
• Follow student progress over time.
• Compare Sub-group Trends
• Compare a School to a Composite
• Many Reporting Options Available

Finally…
Benchmark testing, using simple, general, RESEARCHED outcome measures, provides an ONGOING database to teachers, administrators, and parents for making decisions about the growth and development of basic skills. It is professionally managed by staff in a process that communicates that WE are in charge of student learning.

The End

Administration and Scoring of READING-CURRICULUM-BASED MEASUREMENT (R-CBM) for Use in General Outcome Measurement
Mark R. Shinn, Ph.D.
Michelle M. Shinn, Ph.D.

Overview of Reading-CBM Assessment Training Session
Part of a training series developed to accompany the AIMSweb Improvement System. Its purpose is to provide the background information and data collection procedures necessary to administer and score Reading Curriculum-Based Measurement (R-CBM). Designed to accompany:
• Administration and Scoring of Reading Curriculum-Based Measurement for Use in General Outcome Measurement (R-CBM) Training Workbook
• Standard Reading Assessment Passages
• AIMSweb Web-based Software
• Training Video

Training Session Goals
• Brief review of Curriculum-Based Measurement (CBM) and General Outcome Measurement (GOM):
  – Its Purpose
  – Its Origins
• Learn how to administer and score through applied practice.

General Outcome Measures from Other Fields
• Medicine measures height, weight, temperature, and/or blood pressure.
• The Federal Reserve Board monitors the Consumer Price Index.
• Wall Street measures the Dow Jones Industrial Average.
• Companies report earnings per share.
• McDonald’s measures how many hamburgers it sells.

Common Characteristics of GOMs
• Simple, accurate, and reasonably inexpensive in terms of time and materials.
• Considered so important to doing business well that they are routine.
• Collected on an ongoing and frequent basis.
• Shape or inform a variety of important decisions.

Origins of CBM as General Outcome Measures
Curriculum-Based Measurement (CBM) was developed more than 20 years ago by Stanley Deno at the University of Minnesota through a federal contract to develop a reliable and valid measurement system for evaluating basic skills growth. CBM is supported by more than 25 years of school-based research by the US Department of Education. Supporting documentation can be found in more than 150 articles, book chapters, and books in the professional literature describing the use of CBM to make a variety of important educational decisions.

CBM Was Designed to Provide Educators With:
• The same kind of evaluation technology as other professions.
• Powerful measures that are:
  – Simple
  – Accurate
  – Efficient indicators of student achievement that guide and inform a variety of decisions

How the AIMSweb System Works for Benchmark (Tier 1): Oral Reading (R-CBM)
Research suggests there is no significant practice effect from repeating the same set of three passages across benchmark assessment periods. Using the same passage set for each benchmark increases confidence in the data obtained and reduces extraneous variables.
• Students read aloud for 1 minute from each of the three Edformation Standard Reading Assessment Passages.
• Passages contain meaningful, connected text.
• The number of words read correctly (WRC) and the number of errors are counted for each passage read.
• Scores are reported as WRC/errors.

Benefits of Using Edformation’s Standard Reading Assessment Passages
• Passages are written to represent the general curriculum or to be “curriculum independent.”
• Allow decision making about reading growth, regardless of between-school, between-school-district, or between-teacher differences in reading curriculum.
• Are graded to be of equal difficulty.
• Have numerous alternate forms for testing over time without practice effects.
For additional data on R-CBM passages, review: Standard Reading Assessment Passages for Use in General Outcome Measurement: A Manual Describing Development and Technical Features, by Kathryn B. Howe, Ph.D., & Michelle M. Shinn, Ph.D.

An Example of R-CBM: Illustration 1 (Practice Video; video not reproduced)
Observation Questions
• What did you observe about this child’s reading?
• Is she a good reader?
• What are your reasons for your answer to the second question?
• About how many words did she read correctly?

An Example of R-CBM: Illustration 2 (Practice Video; video not reproduced)
Observation Questions
• What did you observe about this child’s reading?
• Is she a good reader?
• What are your reasons for your answer to the second question?
• About how many words did she read correctly?

Qualitative Features Worth Noting
See R-CBM Workbook: Page 19.

R-CBM Is Used for Scientific Reasons Based on Evidence
• It is a reliable and valid indicator of student achievement.
• It is simple, efficient, and of short duration to facilitate frequent administration by teachers.
• It provides assessment information that helps teachers plan better instruction.
• It is sensitive to the improvement of students’ achievement over time.
• It is easily understood by teachers and parents.
• It improves achievement when used to monitor progress.

Things to Always Remember About R-CBM
• Designed to serve as “indicators” of general reading achievement.
• R-CBM doesn’t measure everything, but it measures the important things.
• Standardized tests to be given, scored, and interpreted in a standard way.
• Researched with respect to psychometric properties to ensure accurate measures of learning.

Items to Remember (continued)
• Sensitive to improvement in short periods of time.
• Also tell us how students earned their scores (qualitative information).
• Designed to be as short as possible to ensure their “do-ability.”
• Linked to decision making for promoting positive achievement and problem solving.

Administration and Scoring of R-CBM
What Examiners Need to Do:
• Before testing students
• While testing students
• After testing students

Things You Need Before Testing
1. Standard Reading Assessment Passage Student Copy:
  • No numbers
  • Between 250 and 300 words (exception: 1st grade)
  • An informative first sentence
  • Same font style and size
  • Text without pictures
  • Obtain from your LAM
2. Standard Reading Assessment Passage Examiner Copy:
  • Pre-numbered so passages can be scored quickly and immediately
  • Obtain from your LAM
3. Tier 1 (Benchmark) R-CBM Probes:
  a. The AIMSweb Manager provides staff with copies of three grade-level probes (teacher and student copies).
  b. FALL: Staff administer the three grade-level probes to each student. Report the median score.
  c. WINTER: Repeat administration of the same three probes to each student. Report the median score.
  d. SPRING: Repeat administration of the same three probes to each student. Report the median score.
4. Additional Assessment Aids Needed Before Testing:
  • A list of students to be assessed
  • Stopwatch (required; digital preferred)
  • Clipboard
  • Pencil
  • Transparencies or paper copies of examiner passages
  • Dry-erase marker or pencil
  • Wipe cloth (for transparencies only)

Setting up the Assessment Environment
Assessment environments are flexible and could include:
• A set-aside place in the classroom
• A reading station in the hallway
• Reading stations in the media center, cafeteria, gym, or empty classrooms

Things You Need to Do While Testing
Follow the standardized directions:
• R-CBM is a standardized test.
• Administer the assessment with consistency.
• Remember it’s about testing, not teaching.
• Don’t teach or correct.
• Don’t practice reading the passages.
• Remember: best reading, not fastest reading.
• Sit across from the student, not beside them.

R-CBM Standard Directions for 1-Minute Administration
1) Place the unnumbered copy in front of the student.
2) Place the numbered copy in front of you, but shielded so the student cannot see what you record.
3) Say: “When I say ‘Begin,’ start reading aloud at the top of this page. Read across the page (DEMONSTRATE BY POINTING). Try to read each word. If you come to a word you don’t know, I will tell it to you. Be sure to do your best reading. Are there any questions?” (PAUSE)
4) Say “Begin” and start your stopwatch when the student says the first word. If the student fails to say the first word of the passage after 3 seconds, tell them the word, mark it as incorrect, and then start your stopwatch.
5) Follow along on your copy. Put a slash (/) through words read incorrectly.
6) At the end of 1 minute, place a bracket (]) after the last word and say, “Stop.”
7) Score and summarize by writing WRC/Errors.

“Familiar” Shortened Directions
Use when students are assessed frequently and know the directions. Say: “When I say ‘Begin,’ start reading aloud at the top of this page.”

Items to Remember
• Emphasize Words Read Correctly (WRC). Get an accurate count.
• 3-Second Rule.
• No Other Corrections.
• Discontinue Rule.
• Be Polite.
• Best, not fastest.
• Interruptions.
• Accuracy of Implementation (AIRS).

Things to Do After Testing
• Score immediately!
• Determine WRC. Put a slash (/) through incorrect words.
• If doing multiple samples, organize your impressions of qualitative features.

What Is a Word Read Correctly?
• Correctly pronounced words within context.
• Incorrect words self-corrected within 3 seconds.

What Is an Error?
• Mispronunciation of the word
• Substitutions
• Omissions
• 3-second pauses or struggles (examiner provides the correct word)

What Is Not Incorrect? (Neither a WRC nor an Error)
• Repetitions
• Dialect differences
• Insertions (consider them qualitative errors)

Example
Juan finished reading after 1 minute at the 145th word, so he read 145 words total. Juan also made 3 errors. Therefore his WRC was 142 (145 - 3), with 3 errors, reported as 142/3.

Calculating R-CBM Scores
• Record the total number of words read.
• Subtract the number of errors.
• Report in the standard format of WRC/Errors (e.g., 72/3).

R-CBM Scoring Rules and Examples
A complete list of scoring rules can be found in the Appendix of your workbook. Please review it and become familiar with the more unusual errors.

Benchmark Data: Obtain the MEDIAN Score for the Student’s 3 Passages
• Passage 1: 67/2 (1 min.)
• Passage 2: 85/8 (1 min.)
• Passage 3: 74/9 (1 min.)

Why Use the Median vs. the Average?
Averages are susceptible to outliers when dealing with small sets of numbers; with only three scores, a single unusually high or low passage can pull the average away from typical performance. The median is a more reliable summary than the average for R-CBM.

Obtaining the MEDIAN for the 3 Passages
1. Throw out the HIGH and LOW scores for Words Read Correct (WRC).
2. Throw out the HIGH and LOW scores for the Errors. The remaining scores are the MEDIAN.
3. Report this score in your AIMSweb account.
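The scoring arithmetic on these slides can be checked with a short script. The following is a minimal Python sketch, not part of AIMSweb, and its function names (`wrc`, `benchmark_median`, `format_score`, `inter_rater_agreement`) are hypothetical. It computes WRC as total words read minus errors (the Juan example), takes the benchmark median separately for WRC and for errors (the 67/2, 85/8, 74/9 example), and applies the inter-rater agreement formula used in this training, Agreements / (Agreements + Disagreements) × 100, with a goal of 95% or better. Because each column is handled independently, the reported pair (74/8) need not match any single passage: 74 comes from passage 3 and 8 from passage 2.

```python
from statistics import median


def wrc(total_words_read: int, errors: int) -> int:
    """Words read correctly: total words attempted in 1 minute minus errors."""
    if not 0 <= errors <= total_words_read:
        raise ValueError("errors must be between 0 and total words read")
    return total_words_read - errors


def benchmark_median(scores: list[tuple[int, int]]) -> tuple[int, int]:
    """Median WRC and median errors across the benchmark passages.

    Each column is handled independently, matching the slide procedure:
    drop the high and low WRC, then drop the high and low error counts.
    With three passages the median is always one of the observed values.
    """
    if len(scores) != 3:
        raise ValueError("this sketch assumes exactly 3 benchmark passages")
    wrc_values = [w for w, _ in scores]
    error_values = [e for _, e in scores]
    return median(wrc_values), median(error_values)


def format_score(wrc_value: int, errors: int) -> str:
    """Standard reporting format: WRC/Errors."""
    return f"{wrc_value}/{errors}"


def inter_rater_agreement(agreements: int, disagreements: int) -> float:
    """Percent agreement between two examiners (training goal: 95% or better)."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("no scored words to compare")
    # Multiply before dividing so whole-number percentages stay exact.
    return agreements * 100 / total


if __name__ == "__main__":
    print(format_score(wrc(145, 3), 3))                                  # Juan: 142/3
    print(format_score(*benchmark_median([(67, 2), (85, 8), (74, 9)])))  # 74/8
    print(f"{inter_rater_agreement(98, 2):.0f}%")                        # Dave: 98%
```

Running the script prints 142/3, 74/8, and 98%, matching the worked examples. Requiring exactly three passages mirrors the benchmark procedure described here; other workflows are not modeled.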
=74/8 Determining Inter-Rater Agreement Example: Dave 2 examiners observed Dave’s reading: 1 scored Dave as 100 WRC 1 scored Dave as 98 WRC • They agreed that Dave read 98 of the words correct. • They disagreed on 2 words correct. Inter-rater Agreement Formula: Agreements/(Agreements + Disagreements) x 100=IRA (98)/ (98 + 2)= 98/100 = .98 See R-CBM .98 x 100 = 98% Workbook: Inter-rater Agreement for Dave is: 98%. Page 14 (Goal is 95% or better.) Prepare to Practice You now have the building blocks to begin R-CBM assessment to ensure reading growth. • Practice to automaticity — You’ll become more efficient. • Determine IRR with AIRS for accuracy and efficiency with a colleague. • Stay in tune by periodically checking AIRS. Prepare to Practice Practice Exercise 1: Let’s Score Practice Exercise 1: Let’s Score This student read 73 WRC/7 Errors Practice Exercise 2: Let’s Score Practice Exercise 2: Let’s Score This student read 97 WRC/3 Errors Practice Exercise 3: Let’s Score Practice Exercise 3: Let’s Score This student read 141 WRC/2 Errors Practice Exercise 4: Let’s Score Practice Exercise 4: Let’s Score This student read 86 WRC/5 Errors Practice Exercise 5: Let’s Score Practice Exercise 5: Let’s Score This student read 88 WRC/2 Errors Practice Exercise 6: Let’s Score Practice Exercise 6: Let’s Score This student read 99 WRC/1 Error Practice Exercise 7: Let’s Score Practice Exercise 7: Let’s Score This student read 96 WRC/6 Errors Practice Exercise 8: Let’s Score Practice Exercise 8: Let’s Score This student read 139 WRC/2 Errors The End Administration and Scoring of READING-MAZE (R-MAZE) for Use in General Outcome Measurement Power Point Authored by Jillyan Kennedy Based on Administration and Scoring of Reading R-MAZE for Use with AIMSweb Training Workbook By Michelle M. Shinn, Ph.D. Mark R. 
Shinn, Ph.D.

Overview of the CBM R-MAZE Assessment Training Session
The purpose is to provide background information, data collection procedures, and practice opportunities to administer and score Reading MAZE.
Designed to accompany:
• Administration and Scoring of Reading R-MAZE for Use in General Outcome Measurement Training Workbook
• Standard R-MAZE Reading Passages
• AIMSweb Web-based Software

Training Session Goals
1. Brief review of Curriculum Based Measurement (CBM) and General Outcome Measurement (GOM):
– Its purpose
– Its origin
2. Learn how to administer and score CBM R-MAZE through applied practice.

R-MAZE Is Used for Scientific Reasons Based on Evidence
• It is a reliable and valid indicator of student achievement.
• It is simple, efficient, and of short duration to facilitate frequent administration by teachers.
• It provides assessment information that helps teachers plan better instruction.
• It is sensitive to the improvement of students’ achievement over time.
• It is easily understood by teachers and parents.
• It improves achievement when used to monitor progress.

Curriculum Based Measurement Reading R-MAZE
CBM R-MAZE is designed to give educators a more complete picture of students’ reading skills, especially when comprehension problems are suspected.
Best for:
• Students in Grades 3 through 8
• Students in Grades 3 through 8 who are performing below grade level
Area: CBM R-MAZE Reading
Timing: 3 minutes
Test Arrangements: Individual, Small Group, or Classroom Group
What Is Scored? Number of correct answers

Curriculum Based Measurement Reading R-MAZE (Continued)
• R-MAZE is a multiple-choice cloze task that students complete while reading silently.
• Students are presented with 150-400 word passages.
• The first sentence is left intact.
• After the first sentence, every 7th word is replaced with three word choices inside parentheses.
• The three choices consist of: 1) a near distracter, 2) the exact match, and 3) a far distracter.

Sample Grade 4 R-MAZE Passage

Examples of R-MAZE (R-MAZE Workbook: Page 9)
Observation Questions
1. What did you observe about Emma’s and Abby’s R-MAZE performances?
2. What other conclusions can you draw?

Things to Always Remember About CBM R-MAZE
• Are designed to serve as “indicators” of general reading achievement.
• Are standardized tests to be given, scored, and interpreted in a standard way.
• Are researched with respect to psychometric properties to ensure accurate measures of learning.

Things to Always Remember About CBM R-MAZE (Continued)
• Are sensitive to improvement in short periods of time.
• Are designed to be as short as possible to ensure their “do-ability.”
• Are linked to decision making for promoting positive achievement and problem solving.

Administration and Scoring of CBM R-MAZE
What examiners need to do . . .
• Before testing students
• While testing students
• After testing students

Items Students Need Before Testing
What the students need for testing:
• CBM R-MAZE practice test
• Appropriate CBM R-MAZE passages
• Pencils

Items Administrators Need Before Testing
What the tester uses for testing:
• Stopwatch
• Appropriate CBM R-MAZE answer key
• Appropriate standardized directions
• List of students to be tested

Additional Assessment Aids
• A list of students to be tested
• Stopwatch (required; digital preferred)

Setting Up the Assessment Environment
Assessment environments are flexible and could include…
• The classroom, if assessing the entire class.
• A cluster of desks or small tables in the classroom for small-group assessment.
• Individual desks or “stations” for individual assessment.

Things You Need to Do While Testing
Follow the standardized directions:
• Attach a cover sheet that includes the practice test so that students do not begin the test right away.
• Do a simple practice test with younger students.
• Monitor to ensure students are circling answers instead of writing them.
• Be prepared to prorate for students who may finish early.
• Try to avoid answering student questions.
• Adhere to the end of timing.

CBM R-MAZE Standard Directions
1) Pass R-MAZE tasks out to students.
2) Have students write their names on the cover sheet, so they do not start early. Make sure they do not turn the page until you tell them to. Say this to the student(s):
When I say ‘Begin’ I want you to silently read a story. You will have 3 minutes to read the story and complete the task. Listen carefully to the directions. Some of the words in the story are replaced with a group of 3 words. Your job is to circle the 1 word that makes the most sense in the story. Only 1 word is correct.
3) Decide if a practice test is needed. Say . . .
Let’s practice one together. Look at your first page. Read the first sentence silently while I read it out loud: ‘The dog (apple, broke, ran) after the cat.’ The three choices are apple, broke, ran. ‘The dog apple after the cat.’ That sentence does not make sense. ‘The dog broke after the cat.’ That sentence does not make sense. ‘The dog ran after the cat.’ That sentence does make sense, so circle the word ran. (Make sure the students circle the word ran.)

CBM R-MAZE Standard Directions (Continued)
Let’s go to the next sentence. Read it silently while I read it out loud: ‘The cat ran (fast, green, for) up the hill.’ The three choices are fast, green, for. Which word is the correct word for the sentence? (The students answer fast.) Yes, ‘The cat ran fast up the hill’ is correct, so circle the correct word fast. (Make sure students circle fast.) Silently read the next sentence and raise your hand when you think you know the answer. (Make sure students know the correct word. Read the sentence with the correct answer.) That’s right. ‘The dog barked at the cat’ is correct. Now what do you do when you choose the correct word? (Students answer ‘Circle it.’
Make sure the students understand the task.) That’s correct, you circle it. I think you’re ready to work on a story on your own.

CBM R-MAZE Standard Directions (Continued)
4) Start the testing by saying . . .
When I say ‘Begin’ turn to the first story and start reading silently. When you come to a group of three words, circle the 1 word that makes the most sense. Work as quickly as you can without making mistakes. If you finish the page/first side, turn the page and keep working until I say ‘Stop’ or you are all done. Do you have any questions?
5) Then say, ‘Begin.’
6) Start your stopwatch.
7) Monitor students to make sure they understand that they are to circle only 1 word.
8) If a student finishes before the time limit, collect the student’s R-MAZE task and record the time on the student’s test booklet.
9) At the end of 3 minutes say: ‘Stop. Put your pencils down. Please close your booklet.’ Collect the R-MAZE tasks.

CBM R-MAZE Familiar Directions
1) After the students have put their names on the cover sheet, start the testing by saying . . .
When I say ‘Begin’ turn to the first story and start reading silently. When you come to a group of three words, circle the 1 word that makes the most sense. Work as quickly as you can without making mistakes. If you finish the page/first side, turn the page and keep working until I say ‘Stop’ or you are all done. Do you have any questions?
2) Then say, ‘Begin.’
3) Start your stopwatch.
4) Monitor students to make sure they understand that they are to circle only 1 word.
5) If a student finishes before the time limit, collect the student’s R-MAZE task and record the time on the student’s test booklet.
6) At the end of 3 minutes say: ‘Stop. Put your pencils down. Please close your booklet.’ Collect the R-MAZE tasks.

Things to Do After Testing
• Score immediately to ensure accurate results!
• Determine the number of words (items) correct.
• Use the answer key and put a slash (/) through incorrect words.
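The after-testing steps above, together with the scoring and prorating rules on the following slides, can be sketched in Python. This is a hypothetical illustration (the names and the sample data are invented): each response is the word the student circled, or None if nothing was circled; items after the last circled word are treated as not reached and are not scored, while skipped items before it count as errors. Prorating follows the slide’s steps: seconds per correct item, then 180 seconds divided by that value.

```python
from typing import Optional


def score_maze(responses: list[Optional[str]], key: list[str]) -> tuple[int, int]:
    """Return (correct, errors) for one R-MAZE probe.

    responses[i] is the word circled for item i, or None if nothing was
    circled; responses and key are assumed to have the same length.
    Only items up to the last circled word count as attempted: skipped items
    before that point are errors, and items after it were not reached.
    """
    circled = [i for i, word in enumerate(responses) if word is not None]
    if not circled:
        return 0, 0
    attempted = circled[-1] + 1
    errors = sum(1 for i in range(attempted) if responses[i] != key[i])
    return attempted - errors, errors


def prorate_maze(correct: int, seconds_taken: float, full_seconds: int = 180) -> float:
    """Prorate the score of a student who finished all items early."""
    if correct == 0:
        return 0.0
    seconds_per_correct = seconds_taken / correct  # e.g., 120 / 40 = 3
    return full_seconds / seconds_per_correct      # e.g., 180 / 3 = 60


if __name__ == "__main__":
    key = ["ran", "fast", "barked", "up", "hill"]
    responses = ["ran", "green", None, "up", None]
    correct, errors = score_maze(responses, key)
    print(f"{correct}/{errors}")  # 2/2 (item 3 skipped, item 5 not reached)
    print(prorate_maze(40, 120))  # 60.0
```

Prorating this way is equivalent to correct × 180 / seconds taken; the two-step form simply mirrors the slide.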
CBM R-MAZE Scoring
What is correct? The student circles the word that matches the correct word on the scoring template.
What is incorrect? An answer is considered an error if the student:
1) Circles an incorrect word, or
2) Omits word selections (skips items), other than items the student was unable to reach before the 3 minutes expired.

Making Scoring Efficient
1) Count the total number of items up to the last circled word.
2) Compare the student answers to the correct answers on the scoring template. Mark a slash [/] through incorrect responses.
3) Subtract the number of incorrect answers from the total number of items attempted.
4) Record the total number of correct answers on the cover sheet followed by the total number of errors (e.g., 35/2).

CBM R-MAZE Prorating
If a student finishes all the items before 3 minutes, the score may be prorated.
1) When the student finishes, record the time and count the number of correct answers. For example, the student may have finished in 2 minutes and correctly answered 40 items.
2) Convert the time taken to seconds (2 minutes = 120 seconds).
3) Divide the number of seconds by the number correct (120/40 = 3 seconds per correct item).
4) Calculate the number of seconds in the full 3 minutes (3 minutes = 180 seconds).
5) Divide the number of full seconds by the value calculated in step 3 (180/3 = 60). The prorated score is 60 correct.

Summary
You now have the building blocks to begin CBM R-MAZE to ensure literacy growth.
• Practice to automaticity: you’ll become more efficient.
• Check IRR using the AIRS for accuracy/efficiency with a colleague.
• Stay in tune by periodically checking AIRS.

Practice Exercise 1: Let’s Score (R-CBM Workbook: Page 17)
Practice Exercise 2: Let’s Score (R-CBM Workbook: Page 19)

THE END.

Math-Curriculum Based Measurement (M-CBM) Training Session
Overview of Math Computation CBM (M-CBM) and Math Facts Probes for Use with AIMSweb
Part of a training series developed to accompany the AIMSweb Improvement System.
The purpose is to provide the background information and data collection procedures necessary to administer and score Math Computation Curriculum-Based Measurement (M-CBM).
Designed to accompany:
• Administration and Scoring of M-CBM Workbook
• Standard Math-CBM probes
• AIMSweb Web-based Software

Training Session Goals
• Brief review of M-CBM: its purpose and its origins.
• Learn how to administer and score through applied practice.

Why Assess Math Computation via CBM?
• Computational skills are critical for math success.
• Using the algorithms for computation helps build the math skills needed to master higher-level math proficiency.
• Broad-range achievement tests in math cover many skills, but few items of each type.

Why Assess Math via M-CBM? (Continued)
• Many broad-range math achievement tests have only one form, so:
– it is difficult to monitor progress repeatedly over time, and
– it is hard to determine where a student’s skill levels start and stop.
• M-CBM provides many narrow-band tests (many items across a grade level or problem type).
• It is simple to administer, score, and chart progress.

M-CBM Methodology
• Research has shown that having students write answers to computational problems for 2-4 minutes is a reliable, valid means of assessing student progress in math computation.
• Appropriate for average-performing students in Grades 1-6.
• Appropriate for students enrolled above Grade 6 who are performing below this level.
M-CBM Methodology (Continued)
• Based on expected math computation skills for Grades 1-6:
– Benchmarking: M-CBM (10 probes available per grade level)
– Strategic Monitoring: M-CBM (40 probes available per grade level)
– Progress Monitoring: M-CBM (40 probes available per grade level), or
– Progress Monitoring: Math Facts (40 probes available per grade level)
• Each probe contains 2 pages of computations (front/back).
• Students complete probes under standardized conditions (see specific directions).
• Administer individually, in a small group, or class-wide: Grades 1-3 = 2 minutes; Grades 4-6 = 4 minutes.

M-CBM Grade-Level Probes
• All M-CBM grade-level probes are based on a prototype probe.
• Prototype probes: all types of problems within the scope of a grade level’s skill spectrum appear in a consistent order/sequence across all probes for that grade level.
A-1: Same type of problem on each page (Grade 2: Probe 1; Grade 2: Probe 2)

M-CBM Sample 2nd Grade Probe (Student Copy)
M-CBM Sample 2nd Grade Probe (Teacher’s Answer Key): scoring is based on the number of DIGITS CORRECT (DC).

M-CBM Math Facts: Addition
Page 1 of 2, Grades 1-3 (fewer problems)
Page 1 of 2, Grades 4-6 (more problems)

M-CBM Math Facts: Probe Options
• Addition (+) facts
• Subtraction (-) facts
• Addition/Subtraction (+ / -) mixed facts
• Multiplication (x) facts
• Division (÷) facts
• Multiplication/Division (x / ÷) mixed facts
• Addition/Subtraction/Multiplication/Division (+, -, x, ÷) mixed facts

AIMSweb Math Fact Probes
Used primarily for instructional planning and short-term progress monitoring (p. 8 in M-CBM Workbook).

M-CBM Administration Setup
Things you will need:
1. Appropriate M-CBM or Math Facts probes
2. Pencils for students
3. Stopwatch or timers
Setting up the testing room:
1. Large group: Monitor carefully to ensure students are not skipping and X-ing out items.
2. Small group/individual: Monitor similarly.
3.
If students are off task, cue with statements such as: “Try to do EACH problem.” or “You can do this kind of problem, so don’t skip.”

M-CBM Directions for Administration
Different M-CBMs require different instructions (4 versions):
Standard Math-Curriculum Based Measurement Probe instructions:
• Grades 1-3: Page 13 of workbook
• Grades 4-6: Page 14 of workbook
Single-Skill Math Fact Probes, Standard Directions:
• Grades 1-6 probes: Page 15 of workbook
Multiple-Skill Math Fact Probes, Standard Directions:
• Grades 1-6 probes: Page 16 of workbook

M-CBM After Testing: Scoring
What Is Correct?
• Grades 1-6: Score Digits Correct (DC). Each correct digit in any answer = 1 point.
• If a problem is “X”-ed out, ignore the X and score it anyway.
• Use the Answer Key for quick scoring.
• See Page 18 of workbook for examples.

M-CBM: How to Score Correct Digits (CD)
Correct Digits: Each correct digit that a student writes is marked with an underline and counted.

M-CBM: How to Score Incomplete Problems
Incomplete Problems: Sometimes students don’t finish a problem. Score for the number of correct digits that are written.

M-CBM: How to Score “X”-ed Out Problems
X-ed Out Problems: Sometimes students start a problem and then cross it out. Sometimes students go back and write answers for problems they have crossed out. Ignore the X and score what you see.

M-CBM: How to Score Reversals
Legibility and Reversed or Rotated Numbers: Sometimes figuring out what number the student wrote can be challenging, especially with younger students or older students with mathematics achievement problems. To make scoring efficient and reliable, we recommend attention to three rules:
1. If it is difficult to determine what the number is at all, count it wrong.
2. If the reversed number is obvious and correct, count it as a correct digit.
3. If the number 6 or 9 is potentially rotated and the digit as written is incorrect, count it as an incorrect digit.
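The answer-only Correct Digits rule can be sketched as follows. This is a hypothetical Python illustration, not AIMSweb code: it assumes whole-number answers, compares the student’s digits with the answer key by place value starting from the ones place, and expects the examiner to have already transcribed what was written (ignoring any X, writing an obvious-and-correct reversed digit as the digit itself, and recording an illegible digit as ‘?’, which never matches).

```python
def correct_digits(student_answer: str, key_answer: str) -> int:
    """Count correct digits (CD) in one answer, aligned by place value.

    Digits are compared right to left (ones, tens, hundreds, ...), so an
    incomplete answer still earns credit for the correct digits it contains.
    Non-digit marks such as '?' (illegible) are never counted.
    """
    count = 0
    for written, expected in zip(reversed(student_answer.strip()),
                                 reversed(key_answer.strip())):
        if written.isdigit() and written == expected:
            count += 1
    return count


def score_probe(items: list[tuple[str, str]]) -> int:
    """Total CD for a probe given (student_answer, key_answer) pairs."""
    return sum(correct_digits(student, key) for student, key in items)


if __name__ == "__main__":
    print(correct_digits("85", "85"))  # 2: both digits correct
    print(correct_digits("5", "85"))   # 1: incomplete, ones digit correct
    print(correct_digits("?5", "85"))  # 1: illegible tens digit counts wrong
    print(score_probe([("85", "85"), ("5", "85"), ("", "12")]))  # 3
```

Right-to-left alignment matches how addition, subtraction, and multiplication answers are usually written; answers written left to right (for example, partial division quotients) or Grade 5-6 critical-process scoring from the answer key would need different handling.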
How to Score M-CBM: Critical Processes (CP) Scoring
Scoring Rules for Answer and Critical Processes: When students’ Grade 5 or Grade 6 M-CBM probes are scored for the number of CDs in the answer and critical processes (rather than the answer only), the examiner uses the answer key that details which digits are to be counted. Each problem has an “assigned CD value” based on what AIMSweb believes is the most conventional method of solving the computational problem. Compare how the same multi-step multiplication problem would be scored using the different methods:
Answer Only:
Answer & Critical Processes:

How to Score M-CBM: Critical Processes (CP) Scoring (Correct vs. Incorrect)
If the student solves the problem correctly, the score is 13 CD. If the student attempts the problem but does not write any of the CDs, the score is 0 CD. (p. 21 in M-CBM Workbook)
Although you don’t need to count every digit written in a correct answer, it is important to write the number of CDs awarded to the problem next to the answer.

How to Score M-CBM: Critical Processes (CP) Scoring (Work Not Shown)
If the answer is correct, as shown below-left, the score is the number of CDs possible shown in the answer key. If the student does not show the work and the answer is incorrect, the examiner can only “score what they see,” as shown below. (p. 21 in M-CBM Workbook)

How to Score M-CBM: Answer & Critical Processes (CP) Scoring (Alignment)
When students’ answers are not aligned correctly according to place value:
• If the answer is correct, ignore the alignment problem and count the digits as correct, as shown below.
• If the answer is incorrect, count the digits as they appear in approximate place value, as shown below, even if a place-value error may seem obvious. (p.
22 in M-CBM Workbook)

The End

Administration and Scoring of Early Numeracy Curriculum-Based Measurement (CBM) for Use in General Outcome Measurement

Overview of TEN-CBM Assessment Training Session
Part of a training series developed to accompany the AIMSweb Improvement System.
The purpose is to provide the background information and data collection procedures necessary to administer and score the Tests of Early Numeracy (TEN).
Designed to accompany:
• Test of Early Numeracy (TEN): Administration and Scoring of AIMSweb Early Numeracy Measures for Use with AIMSweb Training Workbook
• Standard Early Numeracy Measures
• AIMSweb Web-based Software
• Training Video

Training Session Goals
Learn how to administer and score the following through applied practice:
• Oral Counting
• Number Identification
• Quantity Discrimination
• Missing Number

Early Numeracy General Outcome Measures (EN-GOMs)
Oral Counting: Listen and follow along
Number Identification: Listen and follow along
Quantity Discrimination: Listen and follow along
Missing Number: Listen and follow along

Things You Need BEFORE Testing
Three major tasks for efficient and accurate assessment:
1. Understanding the typical timeframe for administering specific tests;
2. Getting the necessary testing materials; and
3. Arranging the test environment.

Recommended AIMSweb Early Numeracy Assessment Schedule

Things to Do BEFORE Testing: Oral Counting
Specific materials arranged:
• Examiner copy for scoring
• Clipboard or other hard surface for recording student answers
• Stopwatch
• Tape recorder (optional) to aid in any scoring questions or for qualitative analysis
The student does not need any materials.

DURING Testing: Oral Counting
Place the examiner copy on the clipboard so the student cannot see it. Say these specific directions to the student:
“When I say start I want you to start counting aloud from 1 like this 1, 2, 3 until I tell you to stop. If you come to a number you don’t know, I’ll tell it to you. Be sure to do your best counting.
Are there any questions? Ready, start.”
• Start your stopwatch. If the student fails to say “1” after 3 seconds, say “one” and continue.
• Follow along on the examiner copy. Score according to the scoring rules.
• After one minute has expired, place a bracket after the last number said and say “Stop.”
Reported as Correct Oral Counts/errors [COC/errors].

Things to Do AFTER Testing: Oral Counting
• Score immediately!
• Determine COC.
• Put a slash (/) through incorrectly counted numbers.

What Is a Correct Oral Count?
• Any number named that comes next in a sequence.
• Repetitions.
• Self-corrections.

What Is an Error?
• Hesitations of three seconds or more (provide the correct number, once only!)
• Omissions of a number in a sequence.

Practice Exercise 1: Oral Counting
“When I say start I want you to start counting aloud from 1 like this 1, 2, 3 until I tell you to stop. If you come to a number you don’t know, I’ll tell it to you. Be sure to do your best counting. Are there any questions? Ready, start.”
Practice Exercise 1: Listen and Score (Oral Counting)
Practice Exercise 1: Compare Your Results (Oral Counting)
Practice Exercise 2: Listen and Score (Oral Counting)
Practice Exercise 2: Compare Your Results (Oral Counting)

Number Identification: Standard Directions
• Student copy in front of student
• Examiner copy on clipboard so student does not see
• Say these specific directions to the student:
“Look at the paper in front of you. It has a number on it (demonstrate by pointing). What number is this?”
CORRECT RESPONSE: “Good. The number is 8. Look at the number next to 8 (demonstrate by pointing). What number is this?”
INCORRECT RESPONSE: “This number is 8 (point to 8). What number is this? Good. Let’s try another one. Look at the number next to 8 (demonstrate by pointing). What number is this?”

Number Identification: Standard Directions (Continued)
CORRECT RESPONSE: “Good. The number is 4.” (Turn the page.)
INCORRECT RESPONSE: “This number is 4 (point to 4). What number is this?
Good.” (Turn the page.)
Continue with these specific directions to the student:
“The paper in front of you has numbers on it. When I say start, I want you to tell me what the numbers are. Start here and go across the page (demonstrate by pointing). If you come to a number you don’t know, I’ll tell you what to do. Are there any questions? Put your finger on the first one. Ready, start.”

Number Identification: Standard Directions (Continued)
• Start your stopwatch. If the student fails to answer the first problem after 3 seconds, tell the student to “try the next one.”
• If the student does not get any correct within the first 5 items, discontinue the task and record a score of zero.
• Follow along on the examiner copy. Put a slash (/) through any incorrect responses.
• The maximum time for each item is 3 seconds. If a student does not provide an answer within 3 seconds, tell the student to “try the next one.”
• At the end of 1 minute, place a bracket ( ] ) after the last item completed and say “Stop.”
Reported as Correct Number Identifications/errors [CND/errors].

What Is a Correct Number Identification?
• Any number the student correctly identifies.

What Is an Error? (Number Identification)
• Substitutions: the child states any number other than the item number.
• Hesitations of three seconds or more (tell the student to try the next one).
• Omissions or skips (if the student skips an entire row, count all items in it as errors).
If a student misses five items consecutively, discontinue testing.

Practice Exercise 2: Number Identification
“The paper in front of you has numbers on it. When I say start, I want you to tell me what the numbers are. Start here and go across the page (demonstrate by pointing). If you come to a number you don’t know, I’ll tell you what to do. Are there any questions? Put your finger on the first one.
Ready, start.”
Practice Exercise 3: Listen and Score (Number Identification)
Practice Exercise 3: Number Identification
Practice Exercise 4: Listen and Score (Number Identification)
Practice Exercise 4: Compare Your Results (Number Identification)

Quantity Discrimination: Standard Instructions
• Place the student copy in front of the student.
• Place the examiner copy on a clipboard and position it so the student cannot see what the examiner records.
• Say these specific directions to the student:
“Look at the piece of paper in front of you. The box in front of you has two numbers in it (demonstrate by pointing). I want you to tell me the number that is bigger.”
CORRECT RESPONSE: “Good. The bigger number is 7. Now look at this box (demonstrate by pointing). It has two numbers in it. Tell me the number that is bigger.”
INCORRECT RESPONSE: “The bigger number is 7. You should have said 7 because 7 is bigger than 4. Now look at this box (demonstrate by pointing). It has two numbers in it. Tell me the number that is bigger.”

Quantity Discrimination: Standard Instructions (Continued)
CORRECT RESPONSE: “Good. The bigger number is 4.” (Turn the page.)
INCORRECT RESPONSE: “The bigger number is 4. You should have said 4 because 4 is bigger than 2.” (Turn the page.)
• Continue with the following:
“The paper in front of you has boxes on it. In the boxes are two numbers. When I say start, I want you to tell me the number in the box that is bigger. Start here and go across the page (demonstrate by pointing). If you come to a box and you don’t know which number is bigger, I’ll tell you what to do. Are there any questions? Put your finger on the first one. Ready, start.”
• Start your stopwatch. If the student fails to answer the first problem after 3 seconds, tell the student to “try the next one.”

Quantity Discrimination: Standard Instructions (Continued)
• If the student does not get any correct within the first 5 items, discontinue the task and record a score of zero.
• Follow along on the examiner copy. Put a slash (/) through any incorrect responses.
• The maximum time for each item is 3 seconds. If a student does not provide an answer within 3 seconds, tell the student to “try the next one.”
• At the end of 1 minute, place a bracket ( ] ) after the last item completed and say “Stop.”
Reported as Quantity Discriminations/errors [QD/errors].

Scoring Rules
Rule 1: If a student states the bigger number, score the item as correct.
Rule 2: If the student states both numbers, score the item as incorrect.
Rule 3: If the student states any number other than the bigger number, score the item as incorrect.
Rule 4: If a student hesitates or struggles with an item for 3 seconds, tell the student to “try the next one.” Score the item as incorrect.
Rule 5: If a student skips an item, score the item as incorrect.
Rule 6: If a student skips an entire row, mark each item in the row as incorrect by drawing a line through the row on the examiner score sheet.
Rule 7: If a student misses 5 items consecutively, discontinue testing.

Practice Exercise 5: Let’s Score!
Practice Exercise 5: Compare Your Results
Practice Exercise 6: Let’s Score!

Missing Number: Standard Instructions
• Place the student copy in front of the student.
• Place the examiner copy on a clipboard and position it so the student cannot see what the examiner records.
• Say these specific directions to the student:
“The box in front of you has two numbers in it (point to first box). I want you to tell me the number that goes in the blank. What number goes in the blank?”
CORRECT RESPONSE: “Good. 1 is the number that goes in the blank. Let’s try another one (point to second box). What number goes in the blank?”
INCORRECT RESPONSE: “The number that goes in the blank is 1. See 1, 2, 3 (demonstrate by pointing). 1 goes in the blank.
Let’s try another one (point to second box). What number goes in the blank?”

Missing Number: Standard Instructions (Continued)
CORRECT RESPONSE: “Good. 7 is the number that goes in the blank.” (Turn the page.)
INCORRECT RESPONSE: “The number that goes in the blank is 7. See 5, 6, 7 (demonstrate by pointing). 7 goes in the blank.” (Turn the page.)
• Continue with the following:
“The piece of paper in front of you has boxes with numbers in them. When I say start you are going to tell me the number that goes in the blank for each box. Start with the first box and go across the row (demonstrate by pointing). Then go to the next row. If you come to one you don’t know, I’ll tell you what to do. Are there any questions? Put your finger on the first one. Ready, start.”
• Start your stopwatch. If the student fails to answer the first problem after 3 seconds, tell the student to “try the next one.”

Missing Number: Standard Instructions (Continued)
• If the student does not get any correct within the first 5 items, discontinue the task and record a score of zero.
• Follow along on the examiner copy. Put a slash (/) through any incorrect responses.
• The maximum time for each item is 3 seconds. If a student does not provide an answer within 3 seconds, tell the student to “try the next one.”
• At the end of 1 minute, place a bracket ( ] ) after the last item completed and say “Stop.”
Reported as Missing Numbers/errors [MN/errors].

Scoring Rules
Rule 1: If a student correctly states the missing number, score the item as correct.
Rule 2: If a student incorrectly states the missing number, score the item as incorrect by placing a slash through the number on the examiner score sheet.
Rule 3: If a student hesitates or struggles with an item for 3 seconds, tell the student to “try the next one” (demonstrate by pointing). Score the item as incorrect.
Rule 4: If a student skips an item, score the item as incorrect.
Rule 5: If a student skips an entire row, mark each item in the row as incorrect by drawing a line through the row on the examiner score sheet.
Rule 6: If a student misses 5 items consecutively, discontinue testing.

Practice Exercise 7: Let’s Score!
Practice Exercise 7: Compare Your Results
Practice Exercise 8: Missing Number
Practice Exercise 8: Compare Your Results (Missing Number)

Quantitative Features Checklist
After the student completes the EN-GOM measures, judge the degree to which the student exhibits the early numeracy skills listed on the checklist.

Accuracy of Implementation (AIRS)
There is one AIRS for each type of early numeracy probe. Find them in the Appendix of the administration and scoring manual.

Summary
You now have the building blocks to begin TEN assessment.
• Practice to automaticity: you’ll become more proficient.
• Get checked out with AIRS for accuracy/efficiency by a colleague.
• Stay in tune by periodically checking AIRS.

The End

Progress Monitoring Strategies for Writing Individual Goals in General Curriculum and More Frequent Formative Evaluation
Mark Shinn, Ph.D.
Lisa A. Langell, M.A., S.Psy.S.

Big Ideas About Frequent Formative Evaluation Using General Outcome Measures and the Progress Monitoring Program
One of the most powerful interventions that schools can use is systematic and frequent formative evaluation. Benchmark assessment is not enough for some students, because they may remain in ineffective programs too long (3+ months). The solution is to write individualized goals and determine a feasible progress monitoring schedule.
The core of frequent progress monitoring is:
1. Survey-Level Assessment
2. Goal setting using logical educational practices
3. Analysis of student need and resources for determining progress monitoring frequency

Formative Assessment
Formative Assessment: The process of assessing student achievement during instruction to determine whether an instructional program is effective for individual students.
• When students are progressing, keep using your instructional programs.
• When tests show that students are not progressing, you can change your instructional programs in meaningful ways.
• Formative assessment has been linked to important gains in student achievement (L. Fuchs, 1986), with effect sizes of .7 and greater.

Systematic formative evaluation requires the use of standard assessment tools…
1. That are of the same difficulty.
2. That are given the same way each time.

More Severe Achievement Problems and/or More Resource-Intensive Programs Require More Frequent Formative Evaluation
• Benchmark testing (3-4 times per year) is not enough for some students. With very low performers, it is not satisfactory to wait this long!
• Programs that are more resource intensive (Title I, English Language Learning, Special Education) should monitor student outcomes more frequently than the benchmark testing schedule.

Formative Evaluation of Vital Signs Requires Quality Tools
• Technical adequacy (reliability and validity);
• Capacity to model growth (able to represent student achievement growth within and across academic years);
• Treatment sensitivity (scores should change when students are learning);
• Independence from specific instructional techniques (instructionally eclectic, so the system can be used with any type of instruction or curriculum);
• Capacity to inform teaching (should provide information to help teachers improve instruction);
• Feasibility (must be doable).

Thinking About a Student’s Data
Sample student: Melissa Smart, 3rd grade student
[Chart: Progress Monitor 8, Melissa Smart; values shown: 110, 92, 77, 50, 34]
Formative Evaluation: Is data alone enough?
Formative Evaluation: Are data and a goal enough?
Formative Evaluation: Are data, goals, and trends enough?
Formative Evaluation Is Impossible Without All the Data: Goals Make Progress Decisions Easier

Current Goal-Setting Practices Are Unsatisfying!
Do you like these IEPs?
I do not like these IEPs
I do not like them, Jeeze Louise
We test, we check
We plan, we meet
But nothing ever seems complete.
Would you, could you
Like the form?
I do not like the form I see
Not page 1, not 2, not 3
Another change
A brand new box
I think we all
Have lost our rocks!

Need a Shift to a Few But Important Goals
Often Ineffective Goal Smorgasbord!
• Student will perform spelling skills at a high 3rd grade level.
• Student will alphabetize words by the second letter with 80% accuracy.
• Student will read words from the Dolch Word List with 80% accuracy.
• Student will master basic multiplication facts with 80% accuracy.
• Student will increase reading skills by progressing through Scribner with 90% accuracy as determined by teacher-made fluency and comprehension probes by October 2006.
• To increase reading ability by 6 months to 1 year as measured by the Woodcock Johnson.
• Student will make one year's growth in reading by October 2006 as measured by the Brigance.
• Student will be a better reader.
• Student will read aloud with 80% accuracy and 80% comprehension.
• Student will make one year's gain in general reading from K-3.
• Students will read 1 story per week.

Improving the Process of Setting Goals for Formative Evaluation
• Set a few, but important, goals.
• Ensure goals are measurable and linked to validated formative evaluation practices.
• Base goal setting on logical educational practices.

Reduce the Number of Goals to a Few Critical Indicators
Reading: In (#) weeks (Student name) will read (#) Words Correctly in 1 minute from randomly selected Grade (#) passages.
Spelling: In (#) weeks (Student name) will write (#) Correct Letter Sequences and (#) Correct Words in 2 minutes from randomly selected Grade (#) spelling lists.
Math Computation: In (#) weeks (Student name) will write (#) Correct Digits in 2 minutes from randomly selected Grade (#) math problems.
Written Expression: In (#) weeks (Student name) will write (#) Total Words and (#) Correct Writing Sequences when presented with randomly selected Grade (#) story starters.

Ensure the Goals Are Measurable and Linked to Validated Formative Evaluation Practices
• Goals should be based on quality tests such as CBM.
• Goals should be based on validated practices, such as how often to test and how many samples to take.

Conducting a Survey-Level Assessment
Students are tested in successively lower levels of the general curriculum, beginning with their current expected grade placement, until a level at which they are successful is determined.

Conducting a Survey-Level Assessment: John, 5th grader (words read correctly / errors)
• Grade 5 passage: 26/12
• Grade 4 passage: 49/7
• Grade 3 passage: 62/4

Base Goal Setting on Logical Educational Practices
Example of a PLEP statement: John currently reads about 26 words correctly from Grade 5 Standard Reading Assessment Passages. He reads Grade 3 reading passages successfully: 62 words correct per minute with 4 errors, which is how well beginning 3rd grade students read this material.

Goal-Setting Strategies
• Obtain current performance information based on Survey-Level Assessment (SLA).
• Know the time frame for the goal.
• Determine a future performance level.

Setting the Time Frame, Goal-Level Material, and Criterion
Time Frame
• End of year (at risk or grade-level expectations): "In 18 weeks…"
• Annual IEP goals (special education): "In 1 year…" or "In 32 weeks…"

Setting the Goal Material
Logical task:
• Matching or not matching expected grade placement
• Title I: fourth grader, Grade 4 material?
• Grade 4: special education student, Grade 4 material?

When Grade-Level Expectations Are Not Appropriate
• Consider the severity of the discrepancy.
• Consider the intensity of the program.

Determining the Criterion for Success: Options to Use
1. Local Benchmark Standards
2. Linkage to High Stakes Tests
3. Normative Growth Rates
4. Developing Your Own Sample of Standards

1. Benchmark Standards: Advantages and Disadvantages
Advantages:
• Easily understood
• Can indicate when a student no longer needs specialized instruction
• Determining Least Restrictive Environment (LRE)
Disadvantages:
• Uncomfortable, especially in low-achievement environments
• Issues of "equity"

2. Linkage to High Stakes Standards: Advantages and Disadvantages
Advantages:
• Reduces problems of equity when local achievement is low
• Increases usefulness of high stakes tests
• Helps specify long-term targets (what a Grade 2 student needs to read to be successful on the Grade 6 test)
Disadvantages:
• Need linkage to a high-quality high stakes test
• Linkage must be established empirically
• Adoption of the assumption that attainment of the target guarantees passing the high stakes test

3. Normative Growth Rates
Criterion for Success = Score on SLA + (Grade Growth Rate × Number of Weeks)
Example: Score on SLA (30) + (Ambitious Grade Growth Rate (2.0) × Number of Weeks (32))
= 30 + (2.0 × 32) = 30 + 64 = annual goal of 94 WRC (words read correctly)

Growth Rate Standards: Advantages and Disadvantages
Advantages:
• Easily understood
Disadvantages:
• May underestimate what can be attained with high-quality instruction

4. Developing Your Own Sample of Standards
Developing a Small Local Norm: Advantages and Disadvantages
Advantages:
• Same advantages as benchmark standards
Disadvantages:
• Same disadvantages as benchmark standards
• Small sample size

How Frequently to Assess?
Balancing IDEAL with FEASIBLE

Making Data-Based Decisions With Progress Monitor
You need at LEAST 4-7 data points before making a programming decision, and you may sometimes want to collect more if you are uncertain. Err on the side of caution.
Criteria to consider:
• Trendline meets AIMline for the ultimate goal? Consider return to LRE.
• Trendline and AIMline will intersect in the relatively near future? Keep the current intervention until the goal is reached.
• Trendline exceeds AIMline? Consider increasing the goal or difficulty level.
• Trendline is not going to intersect AIMline (moves in the opposite direction)? Consider adding an additional intervention, changing a variable, and/or intensifying program changes (LRE).

The End
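To make the goal arithmetic and the decision criteria concrete, here is a minimal Python sketch. It computes the normative-growth-rate criterion (SLA score + growth rate × weeks), draws the AIMline as a straight line from the SLA score to that criterion, fits a trendline, and maps the result onto the four criteria above. Several pieces are assumptions, not AIMSweb's documented behavior: the names `Goal`, `fit_trendline`, and `decide` are hypothetical; the trendline is an ordinary least-squares fit (Progress Monitor may compute trends differently); "relatively near future" is taken to mean "before the goal date"; and the minimum of 4 points is the low end of the slide's 4-7 range.

```python
from dataclasses import dataclass

# Decision labels paraphrasing the slide's criteria (wording is ours, not AIMSweb's).
TOO_FEW = "collect more data before deciding"
GOAL_MET = "trendline meets goal: consider return to LRE"
EXCEEDS = "trendline exceeds aimline: consider raising goal or difficulty"
ON_TRACK = "trendline will meet aimline: keep current intervention"
OFF_TRACK = "trendline will not meet aimline: change or intensify intervention"


@dataclass
class Goal:
    """Hypothetical goal record built with the normative-growth-rate option."""
    sla_score: float      # baseline score from the Survey-Level Assessment
    weekly_growth: float  # chosen (e.g. ambitious) growth rate per week
    weeks: int            # goal time frame in weeks

    @property
    def criterion(self) -> float:
        # Criterion for success = SLA score + (growth rate x number of weeks)
        return self.sla_score + self.weekly_growth * self.weeks

    def aimline(self, week: float) -> float:
        # Assumed straight line from the SLA score (week 0) to the criterion.
        return self.sla_score + self.weekly_growth * week


def fit_trendline(weeks: list[float], scores: list[float]) -> tuple[float, float]:
    """Ordinary least-squares slope and intercept (an assumed trend method)."""
    n = len(weeks)
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def decide(goal: Goal, weeks: list[float], scores: list[float], min_points: int = 4) -> str:
    if len(scores) < min_points:  # slide: need at least 4-7 data points
        return TOO_FEW
    slope, intercept = fit_trendline(weeks, scores)
    last_week = weeks[-1]
    trend_now = slope * last_week + intercept
    if trend_now >= goal.criterion:
        return GOAL_MET
    gap = trend_now - goal.aimline(last_week)  # > 0 means above the aimline
    if gap > 0 and slope >= goal.weekly_growth:
        return EXCEEDS  # above the aimline and not converging back toward it
    if gap >= 0:
        return ON_TRACK  # on the aimline, or above it but converging
    if slope > goal.weekly_growth:
        # Below but converging: week at which the two lines would cross.
        crossing_week = last_week + (-gap) / (slope - goal.weekly_growth)
        if crossing_week <= goal.weeks:  # "near future" assumed = before goal date
            return ON_TRACK
    return OFF_TRACK  # below the aimline and diverging (or crossing too late)
```

With the slide's numbers, `Goal(30, 2.0, 32).criterion` gives 94.0 WRC. Six weekly scores of 32, 34, 36, 38, 40, 42 sit exactly on that AIMline, so `decide` returns the "keep current intervention" label; a flat run of scores near 30 returns the "change or intensify" label. Least squares is used here only because it is simple and widely understood; treat the labels as prompts for team discussion, not automatic decisions.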