

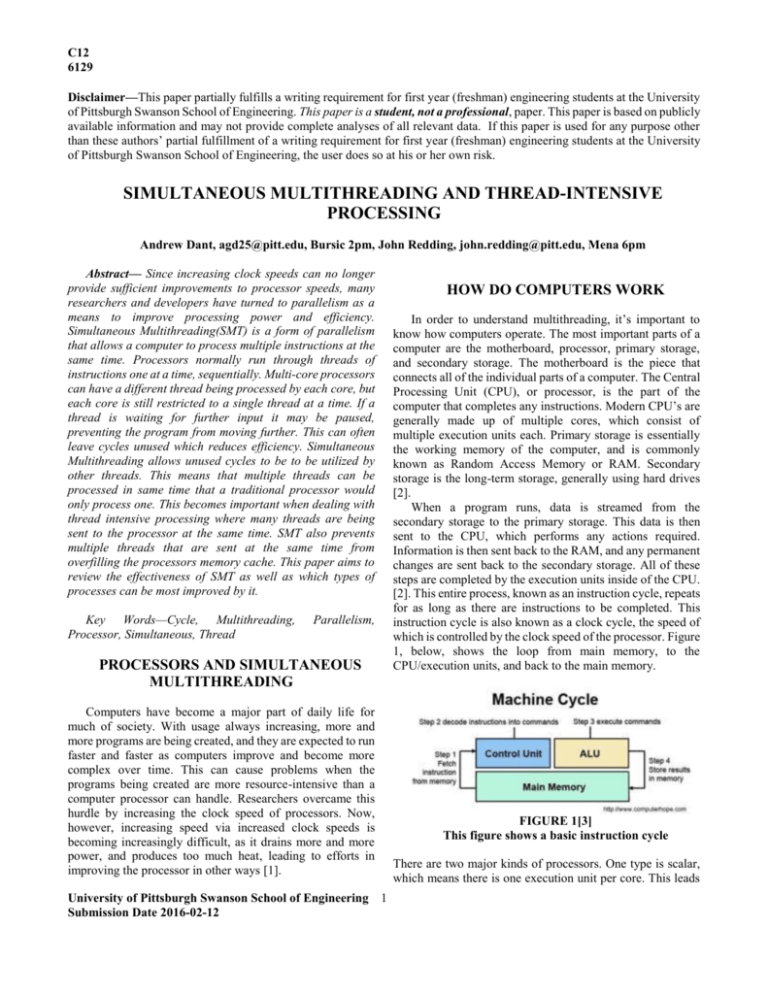

C12 6129

Disclaimer: This paper partially fulfills a writing requirement for first year (freshman) engineering students at the University of Pittsburgh Swanson School of Engineering. This paper is a student, not a professional, paper. This paper is based on publicly available information and may not provide complete analyses of all relevant data. If this paper is used for any purpose other than these authors' partial fulfillment of a writing requirement for first year (freshman) engineering students at the University of Pittsburgh Swanson School of Engineering, the user does so at his or her own risk.

SIMULTANEOUS MULTITHREADING AND THREAD-INTENSIVE PROCESSING

Andrew Dant, agd25@pitt.edu, Bursic 2pm, John Redding, john.redding@pitt.edu, Mena 6pm

Abstract: Since increasing clock speeds can no longer provide sufficient improvements to processor speeds, many researchers and developers have turned to parallelism as a means to improve processing power and efficiency. Simultaneous Multithreading (SMT) is a form of parallelism that allows a processor to work on instructions from multiple threads at the same time. Processors normally run through threads of instructions one at a time, sequentially. Multi-core processors can have a different thread being processed by each core, but each core is still restricted to a single thread at a time. If a thread is waiting for further input it may be paused, preventing the program from moving further. This can often leave cycles unused, which reduces efficiency. Simultaneous Multithreading allows unused cycles to be utilized by other threads. This means that multiple threads can be processed in the same time that a traditional processor would need to process only one. This becomes important when dealing with thread-intensive processing, where many threads are being sent to the processor at the same time. SMT also prevents multiple threads that are sent at the same time from overfilling the processor's memory cache.
This paper aims to review the effectiveness of SMT and to identify which types of processes can be most improved by it.

Key Words: Cycle, Multithreading, Processor, Simultaneous, Thread Parallelism

PROCESSORS AND SIMULTANEOUS MULTITHREADING

Computers have become a major part of daily life for much of society. With usage always increasing, more and more programs are being created, and they are expected to run faster and faster as computers improve and become more complex over time. This can cause problems when the programs being created are more resource-intensive than a computer processor can handle. For many years, researchers overcame this hurdle by increasing the clock speed of processors. Now, however, raising clock speeds further is becoming increasingly difficult, as it draws more and more power and produces too much heat, which has led to efforts to improve the processor in other ways [1].

University of Pittsburgh Swanson School of Engineering, Submission Date 2016-02-12

HOW DO COMPUTERS WORK

In order to understand multithreading, it is important to know how computers operate. The most important parts of a computer are the motherboard, processor, primary storage, and secondary storage. The motherboard is the piece that connects all of the individual parts of a computer. The Central Processing Unit (CPU), or processor, is the part of the computer that carries out instructions. Modern CPUs are generally made up of multiple cores, each of which contains multiple execution units. Primary storage is essentially the working memory of the computer, and is commonly known as Random Access Memory or RAM. Secondary storage is the long-term storage, generally using hard drives [2]. When a program runs, data is streamed from the secondary storage to the primary storage. This data is then sent to the CPU, which performs any actions required. Information is then sent back to the RAM, and any permanent changes are sent back to the secondary storage.
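The data flow just described can be sketched as a toy program. This is only an illustration under simplifying assumptions: the `run` function, the two-operation instruction set, and the dictionary standing in for storage are all hypothetical, and real hardware moves data through caches and registers rather than in whole-dictionary copies.

```python
import operator

# Hypothetical two-operation instruction set used only for this sketch.
OPS = {"add": operator.add, "mul": operator.mul}


def run(program, secondary_storage):
    """Run a toy program and return the updated contents of secondary storage."""
    # 1. Data is streamed from secondary storage into primary storage (RAM).
    ram = dict(secondary_storage)
    for op, dest, left, right in program:
        # 2. The operands are sent from RAM to the CPU.
        a, b = ram[left], ram[right]
        # 3. The CPU performs the required action.
        result = OPS[op](a, b)
        # 4. The result is sent back to RAM.
        ram[dest] = result
    # 5. Permanent changes are written back to secondary storage.
    return dict(ram)


program = [("mul", "c", "a", "b"), ("add", "d", "c", "a")]
print(run(program, {"a": 4, "b": 3}))
```

Each pass through the loop corresponds to one trip from memory to the CPU and back.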
All of these steps are carried out by the execution units inside the CPU [2]. This entire process, known as an instruction cycle, repeats for as long as there are instructions to be completed. The pace of the instruction cycle is set by the clock speed of the processor, and a single instruction cycle may span several clock cycles. Figure 1, below, shows the loop from main memory, to the CPU and its execution units, and back to main memory.

FIGURE 1 [3]: A basic instruction cycle

There are two major kinds of processors. One type is scalar, which means there is one execution unit per core. This leads to a limit in speed and efficiency, as at most one instruction is completed per cycle. Because of this, if one instruction gets hung up due to a data dependency, the entire program stops until the data dependency is cleared. When this happens, the CPU is said to be performing at a sub-scalar level [4]. This sub-scalar performance led to the development of superscalar processors, which have multiple execution units within each core. This allows the CPU to process multiple instructions at the same time, which prevents hung up instructions from halting the entire work flow. Most, if not all, modern processors are superscalar. This becomes very important when implementing different types of parallelism [4].

Execution Units

Computer processors have groups of components, called execution units or functional units, which are responsible for performing the calculations and operations sent to the processor [5]. An individual execution unit completes at most one instruction per cycle. Modern processors implement what is called a superscalar architecture, meaning that there are multiple execution units in each core, allowing multiple instructions to be completed at once by the same processing core. This is a form of instruction-level parallelism, which is covered in more depth later in this paper. Since each execution unit can execute up to one instruction per cycle, a processor with X execution units can be thought of as having X execution slots available per cycle. The frequency of cycles is defined by the clock speed. All processors have a specific clock speed, which refers to the number of clock cycles which occur per second. Modern processors have billions of clock cycles per second, but this does not mean that every execution unit completes an instruction in every one of those cycles. Many operations take multiple cycles to complete, and there are often instructions within a thread which can only be completed in a certain order. This leads to a significant loss in efficiency, since individual operations can not only occupy an execution unit for several cycles, but can also prevent other operations in the thread from occurring during those cycles [5]. Traditional superscalar processors, despite being able to execute multiple instructions at once, can still only draw new instructions from a single thread at a time. If a thread has fewer new instructions available than the number of available execution units, the extra execution units are left completely idle. This shortcoming in traditional superscalar architecture can be greatly improved upon via a method called multithreading [5]. However, in order to understand multithreading, one first needs an understanding of both threads and parallelism.

WHAT IS A THREAD AND WHY DO PROGRAMS USE THEM

A thread, short for thread of execution, is the basic unit of CPU operations. A thread is the smallest sequence of instructions which is independently handled by an operating system's scheduler for execution on a CPU [6]. Many computer processes can be broken up into smaller parts which, while related to one another, can be performed independently of one another by the processor. Because threads are the most basic entities scheduled for execution, all processes are converted into threads before being sent to the CPU. On a traditional, single-threaded computer, there is a sequential order for all threads related to any specific process, and separate processes must progress one at a time. Almost all modern computers and software utilize some form of multithreading, which is a form of parallelism [6]. In computing, parallelism is a broad term for completing multiple tasks at the same time, or finding ways to overlap the execution of operations that do not need to occur sequentially. The fact that all threads are very small, and all share a similar design, means that there are several forms of multithreading and parallelism involving threads. Due to their complexity and importance, multithreading and parallelism will be discussed in more detail later in this paper.

Each thread sent to the processor consists of a set of operations or instructions which it will complete, as well as a number of resources which may be used in the execution of the intended operations. Every thread has a thread ID, a program counter, a set of registers, and a stack [6]. The thread ID is a unique identifier assigned to each thread, so that other threads can interact with it. The program counter specifies which instruction should be executed next. In a single-threaded processor, the processor will execute one instruction, from one thread, from one process at a time sequentially, even if multiple threads from multiple processes need to be completed. Registers are small amounts of physical memory set apart for use by the processor as short-term storage of things such as temporary variables, and each thread is assigned a register or registers to use while being processed. Registers are an extremely important aspect of a processor's physical architecture, as all threads need to store temporary information in registers in order to complete their intended operations. A stack is a very commonly used data structure which utilizes a last in, first out (LIFO) method of adding and removing items, which means that the most recently added item on the stack must be removed first, before any others can be removed. All items on a stack are removed in the reverse of the order in which they were added [6].

While the elements of a thread listed above are all generally thread-specific, there are also resources that can be shared between threads. When an individual process is broken into multiple threads that can be executed independently of one another, that process is said to be multithreaded. All of the threads within a multithreaded process share access to certain pertinent resources, such as code sections, data, and open files [6]. The ability to break an individual process into multiple independently operating threads can be extremely useful, and is the basis for the concept of thread-level parallelism. One example of the power of multithreaded programs, which are extremely common in modern computing, is a word processor, such as Microsoft Word or Google Docs. The word processor may have one thread for displaying graphics, another for logging user input, and another still running a spelling and grammar checker on the words that the user types [6].

WHAT IS PARALLELISM

Parallelism is a term used very broadly when relating to programs. Basically, parallelism is when multiple things are happening within the same timeframe. These things do not need to be happening within the same instant, and in many cases are unable to happen within the same instant. In most cases, one calculation is started, and then put on hold while another is started. Parallelism is generally broken into two main types: instruction-level (ILP) and thread-level (TLP) [7].

Instruction Level

Instruction-level parallelism is one way of running multiple parts of a program together. Transfer of data from the RAM to the processor is not instantaneous, which means there are times where the processor is doing nothing but waiting for input. This decreases overall efficiency.

One method of parallelism is pipelining. This method consists of starting many instructions before finishing any. A good way to think about this is an assembly line of envelope stuffers. The first person starts with a piece of paper. They fold the piece of paper, and pass it to the next person, who puts the paper into an envelope. The next person receives this stuffed envelope, and sticks a shipping label on it. This is then passed to a final person who puts a stamp on it and seals it, putting it in the 'done' pile. This is how pipelining works in a program as well. Multiple instructions line up in sequence, and begin executing one piece at a time. While one instruction is performing its calculation, the next can already be loading its data, and the one before it can be writing its result. This leads to a lower count of wasted cycles due to hung up instructions [8].

Another method of parallelism is superscalar processing. Having multiple execution units is very important, as it allows a CPU to complete multiple instructions truly in parallel. When using pipelining, the CPU can complete multiple instructions during the same timeframe, but not in the same instant. Multiple execution units allow multiple instructions to be executed per clock cycle [9]. One way to imagine this is two buttons, given the problem '4*3+12/4-3+9'. Only one operation can be completed per button, and the operations must be independent. For example, because 4*3 and 12/4 do not interact directly on the first level, both of these can be completed at the same time. When both buttons are used, the problem is reduced to '12+3-3+9'. This is the end of the clock cycle, and the buttons reset. Next, because 12+3 and -3+9 do not directly interact at this level, they can both be calculated at the same time. Both buttons are used again, reducing the problem to '15+6'. Only one button is required for this, giving a final answer of '21'. In this example, a five-step problem was completed in three cycles. This is the benefit of superscalar processors.

Both of these methods are very common. So common, in fact, that they are often used together. Superscalar processors now use pipelining to improve efficiency even further. Instructions are lined up and sent into multiple execution units in a row, building up as they go along [7]. This creates a kind of expansion effect in the middle, where many instructions are being executed at once. This can be achieved either through software or hardware. Software changes would include coding a program to run in parallel, and having the computer mark the instructions to do so. Hardware changes would include more execution units and more registers [7].

FIGURE 2 [7]: Shows the improved efficiency of combining pipelining and superscalar processing

Figure 2, above, shows the timetable a program would follow if pipelining and superscalar processing were combined.

Thread Level

Thread-level parallelism (TLP) is a concept that is similar to instruction-level parallelism (ILP), but on a larger scale. Whereas ILP sorts instructions within a thread, TLP changes between entire threads. If switching to another thread is determined to be more efficient, the next clock cycle will be spent executing that other thread. It is important to note that instructions from different threads cannot be run within the same cycle using this method [9].

There are two major kinds of thread-level parallelism. The first kind is coarse-grained parallelism (CMT) [3]. This type of parallelism works on one thread for a number of cycles before switching to another. At that time, the state of the current thread is saved in registers, and the other thread is immediately started.
This removes the wasted cycles that were previously spent transitioning between threads when one was completed. The method of deciding how many cycles to run varies. Some designs continue one thread until the processor hits a cache miss, while others use a predetermined number of cycles. Below is a diagram of coarse-grained TLP.

FIGURE 3.1 [7]: Compares superscalar (left) to CMT (right)

Figure 3.1, above, shows the workflow of a standard superscalar processor on the left, and the workflow of a superscalar processor using CMT on the right. CMT removes the cycles used on switching between threads.

The other kind of thread-level parallelism is fine-grained parallelism (FMT) [7]. This method switches rapidly between threads. Whereas coarse-grained TLP allows a thread to run for several cycles before switching, fine-grained TLP switches threads every single cycle. This makes each individual thread take longer to complete, but the overall completion time is greatly reduced [7].

FIGURE 3.2 [7]: Compares superscalar (right) to FMT (left)

Figure 3.2, above, shows the workflow of a standard superscalar processor on the right side, and the workflow of a superscalar processor using FMT on the left. FMT changes threads more rapidly than CMT does. This leads to each thread taking more cycles to fully complete, but less time overall for all of the threads to complete.

WHAT IS SIMULTANEOUS MULTITHREADING

Simultaneous multithreading is the full integration of TLP and superscalar processing. Thread-level parallelism can be used to greatly reduce the vertical waste of resources, meaning cycles in which nothing is executed at all. It does nothing, however, for horizontal waste, meaning the execution units left idle within each cycle. Likewise, superscalar processing reduces horizontal waste, but does very little for wasted clock cycles. SMT is the combination of these two technologies, in order to minimize waste, both horizontal and vertical [7].
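The difference between these scheduling approaches can be sketched with a small simulation. This is a toy model under stated assumptions, not a description of any real processor: the 4-wide core (`WIDTH`), the `simulate` and `choose` functions, and the two instruction traces are all hypothetical. Each trace entry `k > 0` means that `k` independent instructions are ready to issue together, and `0` means a one-cycle stall while the thread waits for data. The coarse-grained policy here switches threads only on a stall, and the fine-grained policy prefers a different thread every cycle.

```python
from collections import deque

WIDTH = 4  # issue slots per cycle; an assumed 4-wide core


def choose(policy, threads, ready, current, cycle):
    """Return (indices of threads allowed to issue this cycle, new current thread)."""
    n = len(threads)
    if policy == "smt":
        return ready, current                 # every ready thread may share the slots
    if policy == "superscalar":
        while not threads[current]:           # move on only when a thread is finished
            current = (current + 1) % n
        return ([current] if current in ready else []), current
    # "fine" prefers a different thread each cycle; "coarse" stays on the current one.
    start = cycle % n if policy == "fine" else current
    for step in range(n):
        candidate = (start + step) % n
        if candidate in ready:
            return [candidate], candidate
    return [], current


def simulate(traces, policy):
    """Return the number of cycles needed to finish every thread."""
    threads = [deque(t) for t in traces]
    current = cycles = 0
    while any(threads):
        stalled = [t for t in threads if t and t[0] == 0]
        ready = [i for i, t in enumerate(threads) if t and t[0] > 0]
        issuing, current = choose(policy, threads, ready, current, cycles)
        slots = WIDTH
        for i in issuing:
            take = min(slots, threads[i][0])  # issue as many as the free slots allow
            slots -= take
            threads[i][0] -= take
            if threads[i][0] == 0:
                threads[i].popleft()
        for t in stalled:
            t.popleft()                       # a stall elapses even if the thread is not scheduled
        cycles += 1
    return cycles


TRACES = [[2, 0, 0, 3, 1], [1, 2, 0, 2]]      # hypothetical threads, 11 instructions in total
total = sum(sum(t) for t in TRACES)
for policy in ("superscalar", "coarse", "fine", "smt"):
    cycles = simulate(TRACES, policy)
    print(f"{policy}: {cycles} cycles, {total / (cycles * WIDTH):.0%} of slots used")
```

With these particular traces the sketch needs 9 cycles when the threads run one after another, 6 cycles with either coarse-grained or fine-grained switching, and 5 cycles with SMT. Switching between threads removes the empty stall cycles (vertical waste), and only the SMT policy also fills leftover slots within a cycle (horizontal waste). Other traces will give different numbers.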
With both kinds of waste reduced, performance is greatly increased, with the majority of every cycle being used to complete necessary operations within the threads being processed.

FIGURE 3.3 [7]: Compares CMT (left) with FMT (middle) and SMT (right)

In Figure 3.3, three kinds of parallelism are shown. Column c shows coarse-grained thread-level parallelism. Column d shows fine-grained TLP. Finally, column e shows SMT. This figure is useful for comparing all three types of parallelism, and it shows that SMT leaves fewer execution units and cycles unused.

PROGRESSION FROM SINGLETHREAD PROCESSORS TO SIMULTANEOUS MULTITHREADING

Thread parallelism existed as an idea for much longer than it could be implemented effectively. The first multithreading processor was created in the early 1950s, and was capable of handling two threads at once. This technology was then quickly improved, and by the late 1950s processors existed that could handle up to 33 threads [10]. In the 1960s, superscalar processing was beginning to be developed, with registers and instructions being tagged in order to make them more easily recognizable by execution units [10]. Progress was slowly made over the next 20 years, with four-way multithreading being possible by the late 1980s. In the 1990s, the idea of simultaneous multithreading was just being developed and expanded upon. However, there was no processor truly capable of SMT until the 2000s [10].

IMPLEMENTATION OF SIMULTANEOUS MULTITHREADING

Changes to Physical Architecture

All processors with simultaneous multithreading implement a form of superscalar architecture, along with certain architectural modifications. Most of the components necessary for simultaneous multithreading are components already necessary for conventional superscalar designs. There are, however, certain modifications necessary to accommodate the rapid access of multiple threads and their respective resources. Because threads often share many resources, SMT can cause significant interference between threads within shared physical structures. This interference is most notable in the form of increased short-term memory requirements. Each thread being used has to make memory references to its own set of variables and stored information every time the thread is used. Since more threads are being used, SMT processors have significantly increased memory requirements [11]. This issue can be overcome by having a larger number of registers built into the architecture of SMT processors. SMT processors also benefit from additional pipelining stages for accessing the registers [11].

Changes to Software

Though the necessary changes to the physical architecture of SMT processors are relatively minor, there are numerous ways in which the software being processed can be adjusted to improve the effectiveness of SMT. While simultaneous multithreading can improve efficiency in traditionally coded software to a degree, some changes to how software is programmed are necessary in order to take full advantage of the capabilities of SMT. Many changes to software structure and behavior have been made over time to accommodate the instruction-level and thread-level parallelism employed by current processors, so it is not abnormal for proper implementation of SMT to require some changes on the part of programmers and software designers. Software support for SMT borrows many mechanisms from software support for previously implemented forms of multithreading, but also requires the use of some new methods in order to take full advantage of its hardware capabilities [12].

As discussed in the section on physical architecture, SMT requires a significant increase in register use. An increase in the number of physical registers in the processor is one potential method to combat this shortcoming, but it is also possible to reduce the need for additional registers via simple changes to the software being processed. One study conducted at the University of Washington found that there are multiple kinds of compiler optimizations for traditional processors which can be applied as a means of significantly improving SMT efficiency with only minor adjustments [12]. Another University of Washington study found that, of several methods tried, the most effective way to improve SMT efficiency and memory usage was a simple change to the software that controls short-term memory allocation in order to eliminate inherent contention [11]. The study also found that once this simple change was implemented, several other beneficial changes became available, which the authors believe to be worthy of further research [11].

As a final example, a team of researchers, also at the University of Washington, developed and partially tested a concept for a new method of implementing SMT called mini-threads [13]. The basic concept is to break threads into smaller 'mini-threads' which are capable of sharing architectural registers [13]. The researchers believe that implementing this method could significantly improve SMT processing speeds, while simultaneously decreasing the number of necessary registers [13]. While the concept of mini-threads is not yet implemented on any commercially available processors, the researchers state that they plan to continue to explore the concept as future work [13]. The fact that there are already so many potentially viable options being considered for improving SMT provides strong support for the idea that this already impressive technology can become even more beneficial with future research.
What Kinds of Processes Are Most Affected

Many individual programs are affected by SMT, and entire workflows and activities can be impacted as well. Among these are workflows relying on multitasking, and activities such as video editing and 3D rendering. When editing a video, data is being encoded in tiny sections, frame by frame. These sections are all completely independent of each other; as long as they end up in the right order at the end, it doesn't matter how they are processed. Because of this, as long as the data transfer can be handled, SMT gives a huge benefit. The same is true of rendering a 3D model. Calculations are made per point of the model, resulting in thousands of calculations. This is greatly improved by SMT [14].

MULTICORE PROCESSORS AND THEIR RELATION TO SIMULTANEOUS MULTITHREADING

Multicore processors are CPUs that have multiple cores of execution. Each core is made up of multiple execution units, which means one multicore processor is capable of doing the work of multiple superscalar processors. This opens up another method of parallelism, in which threads are run simultaneously, and completely independently. Below is a diagram showing how a multicore processor compares to a regular superscalar processor [7].

FIGURE 3.4 [7]

Figure 3.4, above, shows the difference between a standard superscalar processor and a multicore processor. Multicore processors are basically multiple processors combined into one. This allows each core to execute instructions completely independently, without switching between threads.

IS THIS THE BEST WAY TO IMPROVE PROCESSING POWER

Alternative Methods of Improving Processor Power

Alternative methods of improving CPU performance include increasing clock speed and increasing the number of cores of execution. Both of these methods have progressed greatly over the last few decades. Increasing clock speed has the side effect of a higher temperature, and can also cause a greater error rate. This means that clock speed recently has plateaued around 4 GHz, with most processors tending to stay around 3 GHz. Without further improvements to cooling, increasing clock speed is no longer a viable way of improving performance. Increasing the number of cores of execution in a processor is a method of improving performance that is still being explored. Every generation of processors has a greater number of cores, both logical and physical. Some high-end server processors have up to 15 cores of execution [15].

One alternative to SMT is completely different from the others: a whole new CPU design. Currently, CPUs function in what is essentially two-dimensional space. Within the past couple of years, a large amount of funding has gone toward researching three-dimensional processors. This could increase the efficiency of CPUs by up to a thousand times, not only in terms of performance, but also power consumption [16].

Advantages of Simultaneous Multithreading

Simultaneous multithreading gives an efficiency boost that is hard to match with other methods of improvement. While clock speeds have been very helpful up to this point, it is no longer reasonable to expect large returns for the effort and resources required to increase the clock speed further. The same can be said of multicore processors. With more cores, transistors must decrease in size further. Eventually we will be unable to fit any more on a chip. This means that we must find another way to improve performance. Should a three-dimensional processor be developed and created in a reasonably cheap manner, it is likely that its benefits would far outweigh those of SMT. However, it is unlikely that such a powerful processor will be developed in the near future, whereas SMT is already being implemented.

Shortcomings of Simultaneous Multithreading

Nothing exists without a downside. The same is true of SMT. Two major issues that currently exist with simultaneous multithreading are bottlenecks occurring when resources are shared, and security flaws. Because simultaneous multithreading executes many threads at one time, data is being pulled in large amounts from RAM and caches. While this is not an issue with smaller processors, when the thread count starts increasing, it is very easy to hit a wall in terms of data transfer. This can lead to threads taking even longer to complete than they usually would. One way to imagine this is the fire alarm being pulled in a crowded building with only two exits. When there is a small number of people, it is very easy for two exits to handle the output required. However, when the building is filled with hundreds of people, a mob forms by the doors, with everybody fighting to get out at once. This leads to a decrease in output, which may have been avoidable by forming a line, and having everybody exit in order. The same can be said for SMT. In some cases, when many threads are going to be using one resource, it is better to perform them sequentially than to have too many threads try to access the same resource at once.

Another major flaw with SMT is the security risk it opens up. When multiple threads share the execution units of a core, they do not have to be from the same program. Two different programs can use the same execution units at any one time. Normally this would be a good thing, as it decreases the time a thread spends waiting to be executed. This sharing of execution units, however, also means that the programs are sharing the same physical registers and caches. This means that data being used by one program can potentially be observed by another program. This could lead to programs gaining access to information that should have been encrypted [17]. Encryption keys exist to access encrypted data. A program carries this key, which means that it must be saved to a cache at some point. Generally this is not a problem. However, when another program uses this cache, and can gain access to the encryption key, that program also has access to any data the intended program does. This flaw generally does not affect personal computers, but it is a serious concern for large-scale servers [18].

WHERE DO WE GO FROM HERE?

Simultaneous multithreading is currently one of the most viable ways to improve the efficiency and performance of future processors. After years of progress being made in parallelism, at both the instruction and thread level, simultaneous multithreading technology is just now becoming available in consumer PCs. SMT is an innovative and versatile technology, and its future in commercial computing is clear. The benefits of this technology far outweigh the drawbacks, and it can continue to be improved through multiple kinds of simple software changes. Simultaneous multithreading will continue to be researched, improved, and implemented in our processors for years to come.

REFERENCES

[1] P. Persson Mattsson (2014, Nov. 13). "Why Haven't CPU Clock Speeds Increased in the Last Few Years?" ComSol (online article) https://www.comsol.com/blogs/havent-cpuclock-speeds-increased-last-years/
[2] "The Instruction Cycle" (website) http://www.cs.uwm.edu/classes/cs315/Bacon/Lecture/HTML/ch05s06.html
[3] R. S. Singh (2014, Apr. 23). "What is clock cycle, machine cycle, instruction cycle in a microprocessor?" Quora (online article) https://www.quora.com/What-is-clock-cycle-machine-cycleinstruction-cycle-in-a-microprocessor
[4] J. Smith, G. Sohi (1995). "The Microarchitecture of Superscalar Processors" (online article) ftp://ftp.cs.wisc.edu/sohi/papers/1995/ieeeproc.superscalar.pdf
[5] G. Torres (2006). "Execution Units" Hardware Secrets (website) http://www.hardwaresecrets.com/inside-intel-coremicroarchitecture/4/
[6] A. Silberschatz, P. Galvin, G. Gagne (2013). "Operating System Concepts, Ninth Edition" Kendallville, Indiana: Courier (print book) Chapters 2-4
[7] P. Mazzucco (2001, June 15). "Multithreading" SLCentral (website) http://www.slcentral.com/articles/01/6/multithreading/print.php
[8] Hawkes (2000). "Enhancing Performance with Pipelining" FSU (website) http://www.cs.fsu.edu/~hawkes/cda3101lects/chap6
[9] E. Karch (2011, Apr. 1). "CPU Parallelism: Techniques of Processor Optimization" MSDN Blogs (online article) http://blogs.msdn.com/b/karchworld_identity/archive/2011/04/01/cpu-parallelism-techinques-of-processoroptimization.aspx
[10] M. Smotherman (April 2005). "History of Multithreading" (website) http://people.cs.clemson.edu/~mark/multithreading.html
[11] J. Lo, S. Eggers, et al. "Tuning Compiler Optimizations for Simultaneous Multithreading." Dept. of Computer Science and Engineering, University of California (online article) http://www.cs.washington.edu/research/smt/papers/smtcompiler.pdf
[12] L. K. McDowell, S. J. Eggers, S. D. Gribble (2003). "Improving Server Software Support for Simultaneous Multithreaded Processors." University of Washington (online article) http://www.cs.washington.edu/research/smt/papers/serverSupport.pdf
[13] J. Redstone, S. Eggers, H. Levy (2003). "Mini-threads: Increasing TLP on Small-Scale SMT Processors." University of Washington (online article) http://www.cs.washington.edu/research/smt/papers/minithreads.pdf
[14] P. Manadhata, V. Sekar (2003). "Simultaneous Multithreading" (online presentation) http://www.cs.cmu.edu/afs/cs/academic/class/15740f03/www/lectures/smt_slides.pdf
[15] "Xeon Processor" Intel (website) http://ark.intel.com/products/75251/Intel-Xeon-ProcessorE7-4890-v2-37_5M-Cache-2_80-GHz
[16] T. Puiu. "3D stacked computer chips could make computers 1,000 times faster" ZME Science (online article) http://www.zmescience.com/research/technology/3dstacked-computer-chips-43243/
[17] A. Fog (2009). "How Good is Hyperthreading?" Agner's CPU Blog (online article) http://www.agner.org/optimize/blog/read.php?i=6&v=t
[18] C. Percival (2005). "Hyper-Threading Considered Harmful" (online article) http://www.daemonology.net/hyperthreading-consideredharmful/

ACKNOWLEDGMENTS

We would like to acknowledge our writing instructor, Keely Bowers, our co-chair, Kyler Madara, and our chair, Sovay McGalliard, for their help in the writing of this paper.