Parallel Computing Concepts: Lecture Notes


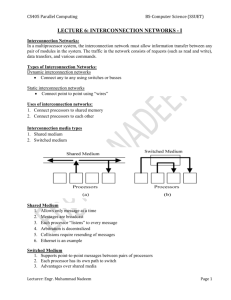

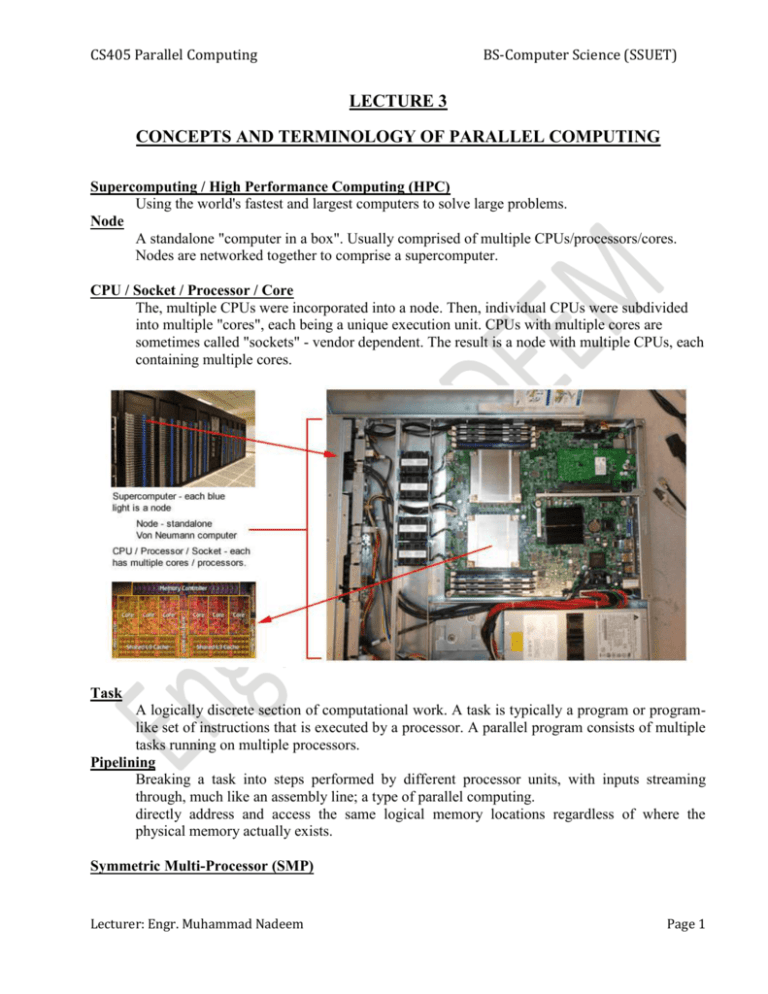

CS405 Parallel Computing, BS-Computer Science (SSUET)

Lecturer: Engr. Muhammad Nadeem

LECTURE 3: CONCEPTS AND TERMINOLOGY OF PARALLEL COMPUTING

Supercomputing / High Performance Computing (HPC): Using the world's fastest and largest computers to solve large problems.

Node: A standalone "computer in a box", usually comprising multiple CPUs/processors/cores. Nodes are networked together to form a supercomputer.

CPU / Socket / Processor / Core: First, multiple CPUs were incorporated into a node. Then, individual CPUs were subdivided into multiple "cores", each being a unique execution unit. CPUs with multiple cores are sometimes called "sockets" (the usage is vendor dependent). The result is a node with multiple CPUs, each containing multiple cores.

Task: A logically discrete section of computational work. A task is typically a program or program-like set of instructions that is executed by a processor. A parallel program consists of multiple tasks running on multiple processors.

Pipelining: Breaking a task into steps performed by different processor units, with inputs streaming through, much like an assembly line; a type of parallel computing.

Shared Memory: A model in which parallel tasks can directly address and access the same logical memory locations, regardless of where the physical memory actually exists.

Symmetric Multi-Processor (SMP): Hardware architecture where multiple processors share a single address space and have access to all resources; shared memory computing.

Communications: Parallel tasks typically need to exchange data. This can be accomplished in several ways, such as through a shared memory bus or over a network. The actual event of data exchange is commonly referred to as communications, regardless of the method employed.

Synchronization: The process of coordinating the behavior of two or more processes, which may be running on different processors.

Lock: A synchronization device that allows only one processor at a time to access data.
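The Lock and Synchronization definitions above can be illustrated with a minimal sketch. It assumes Python's standard `threading` module, with threads standing in for processors; the function name `run_counter` and its parameters are hypothetical and chosen only for this illustration.

```python
import threading

def run_counter(num_threads=4, increments=10_000):
    """Increment one shared counter from several threads, guarded by a lock."""
    state = {"count": 0}          # data shared by all threads
    lock = threading.Lock()       # the synchronization device

    def worker():
        for _ in range(increments):
            # Only one thread at a time may hold the lock, so the
            # read-modify-write below is never interleaved.
            with lock:
                state["count"] += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                  # synchronization: wait for every worker to finish
    return state["count"]

total = run_counter()
print(total)  # 40000
```

Without the lock, two threads could read the same old value and one update could be lost. The time spent acquiring and releasing the lock is coordination work rather than useful computation.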
Granularity: In parallel computing, granularity is a qualitative measure of the ratio of computation to communication.

- Coarse: relatively large amounts of computational work are done between communication events.
- Fine: relatively small amounts of computational work are done between communication events.

Parallel Overhead: The amount of time required to coordinate parallel tasks, as opposed to doing useful work. Parallel overhead can include factors such as:

1. Task start-up time
2. Synchronizations
3. Data communications
4. Software overhead imposed by parallel compilers, libraries, tools, operating system, etc.
5. Task termination time

Massively Parallel: Refers to the hardware that comprises a given parallel system: having many processors. The meaning of "many" keeps increasing, but currently the largest parallel computers can comprise processors numbering in the hundreds of thousands.

Embarrassingly Parallel: Solving many similar but independent tasks simultaneously, with little to no need for coordination between the tasks.

Scalability: Refers to the ability of a parallel system (hardware and/or software) to demonstrate a proportionate increase in parallel speedup with the addition of more processors. Factors that contribute to scalability include:

1. Hardware, particularly memory-CPU bandwidths and network communications
2. Application algorithm
3. Parallel overhead
4. Characteristics of your specific application and coding

Multiprogramming: A computer running more than one program at a time (like running Excel and Firefox simultaneously).

Multiprocessing: A computer using more than one CPU at a time.

Multitasking: The ability of an operating system to execute more than one program apparently simultaneously. In reality, no two programs can execute at the same instant on a single-processor machine.
The CPU switches from one program to the next so quickly that it appears as if all of the programs are executing at the same time.

Multithreading: The ability of an operating system to execute different parts of a program, called threads, simultaneously. The program has to be designed well so that the different threads do not interfere with each other. This concept helps to create scalable applications because threads can be added as and when needed. Individual programs are isolated from each other in terms of their memory and data, but individual threads are not: they all share the same memory and data variables. Hence, implementing multitasking in an operating system is relatively easier than implementing multithreading.

Hardware Multithreading: Increasing utilization of a processor by switching to another thread when one thread is stalled.

Fine-Grained Multithreading: A version of hardware multithreading that switches between threads after every instruction.

Coarse-Grained Multithreading: A version of hardware multithreading that switches between threads only after significant events, such as a cache miss.

Simultaneous Multithreading (SMT): A technique for improving the overall efficiency of superscalar CPUs with hardware multithreading. SMT permits multiple independent threads of execution to better utilize the resources provided by modern processor architectures. The Intel Pentium 4 was the first modern desktop processor to implement simultaneous multithreading.

Concurrent Computing: In concurrent computing, a program is one in which multiple tasks can be in progress at any instant.

Parallel Computing: In parallel computing, a program is one in which multiple tasks cooperate closely to solve a problem.

Distributed Computing: In distributed computing, a program may need to cooperate with other programs to solve a problem.
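The multithreading entry in these notes says that threads share the same memory and data variables and must be designed so that they do not interfere with each other. A minimal sketch of that idea follows, assuming Python's standard `threading` module; the function name `square_all` is hypothetical and used only for illustration.

```python
import threading

def square_all(values):
    """Square each value in its own thread, writing into one shared list."""
    results = [None] * len(values)    # memory shared by every thread

    def worker(index, value):
        # Each thread writes to a distinct slot, so the threads do not
        # interfere with each other and no lock is needed.
        results[index] = value * value

    threads = [
        threading.Thread(target=worker, args=(i, v))
        for i, v in enumerate(values)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                      # wait until every thread has finished
    return results

print(square_all([1, 2, 3, 4]))  # [1, 4, 9, 16]
```

All the tasks here can be in progress at the same instant, which matches the definition of concurrent computing given in these notes. Whether they also execute in parallel depends on the interpreter and the hardware.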