MRA Part 3-Applications (2007)

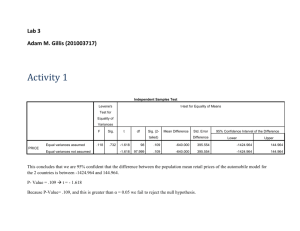

Multiple Regression Analysis: Part 3

Use of categorical variables in MRA

Changing Gears

- What if we wish to include categorical variables in our regression equation?
- For instance, we have two categorical variables (say gender and ethnic group) and two continuous variables (say reading comprehension and visual processing speed) to predict performance.

Regression and Mean Comparisons

- Independent samples t-test: comparing two means
- Tests the null hypothesis of…
- Accomplished via the usual t-statistic formula:

$$t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_p^2}{n_1} + \dfrac{s_p^2}{n_2}}}$$

| | scores | mean | std. dev. |
| --- | --- | --- | --- |
| group 1 | 5 5 4 8 6 2 5 6 5 4 | 5.00 | 1.56 |
| group 2 | 8 9 4 7 7 8 10 9 9 9 | 8.00 | 1.70 |

Calculating t…

- Pooled variance estimate

$$s_p^2 = \frac{9(1.56^2) + 9(1.70^2)}{10 + 10 - 2} \approx 2.667$$

- t-ratio

$$t = \frac{5 - 8}{\sqrt{\dfrac{2.667}{10} + \dfrac{2.667}{10}}} = \frac{-3}{0.7303} = -4.108$$

- Effect size estimate (variance accounted for)

$$r^2 = \frac{t^2}{t^2 + df} = \frac{(-4.108)^2}{(-4.108)^2 + 18} = 0.484$$

Our usual approach in SPSS

- Code groups & enter associated score

| gpid | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| score | 5 | 5 | 4 | 8 | 6 | 2 | 5 | 6 | 5 | 4 | 8 | 9 | 4 | 7 | 7 | 8 | 10 | 9 | 9 | 9 |

- Run an independent samples t-test to get the following…

t-test for Equality of Means

| t | df | Sig. (2-tailed) | Mean Difference | Std. Error Difference | 95% CI of difference: Lower | 95% CI of difference: Upper |
| --- | --- | --- | --- | --- | --- | --- |
| -4.108 | 18 | 0.001 | -3 | 0.730 | -4.534 | -1.466 |

What if we coded groups as 0’s & 1’s?

- Could construct a point-biserial correlation

$$r_{pb} = \frac{(M_{Y_1} - M_{Y_0})\sqrt{pq}}{\sigma_y} = \frac{(3)(.5)}{2.156} = 0.696$$

- Can you guess what 0.696² equals?

| gpid | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| score | 5 | 5 | 4 | 8 | 6 | 2 | 5 | 6 | 5 | 4 | 8 | 9 | 4 | 7 | 7 | 8 | 10 | 9 | 9 | 9 |
| dc | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

Pearson, r_pb and regression

- Taking advantage of the fact that r_pb is merely a Pearson product-moment correlation in disguise…
- Let’s regress y onto our binary variable to get the following

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
| --- | --- | --- | --- | --- |
| 1 | .696 | .484 | .455 | 1.63299 |

a. Predictors: (Constant), dummyc

Coefficients

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
| --- | --- | --- | --- | --- | --- |
| (Constant) | 5.000 | .516 | | 9.682 | .000 |
| dummyc | 3.000 | .730 | .696 | 4.108 | .001 |

a. Dependent Variable: score

- Do these look familiar?

This can be greatly expanded…

- ANOVA can be run using…
  - Dummy Coding
  - Effect Coding
  - Orthogonal Coding
- Multiple categories can be modeled
- N-Way designs can be accommodated
- ANCOVA
- Repeated Measures

Adding a 3rd group…

| | Method 1 | Method 2 | Method 3 |
| --- | --- | --- | --- |
| | 3 | 4 | 6 |
| | 5 | 4 | 7 |
| | 2 | 3 | 8 |
| | 4 | 8 | 6 |
| | 8 | 7 | 7 |
| | 4 | 4 | 9 |
| | 3 | 2 | 10 |
| | 9 | 5 | 9 |
| Means: | 4.75 | 4.625 | 7.75 |

Dummy Coding

- Series of binary variables
- One group receives all zeros
- Other two groups are differentiated by 1’s
- Characteristics…

| Method | Score | d1 | d2 |
| --- | --- | --- | --- |
| 1 | 3 | 0 | 0 |
| 1 | 5 | 0 | 0 |
| … | … | 0 | 0 |
| 1 | 9 | 0 | 0 |
| 2 | 4 | 0 | 1 |
| 2 | 4 | 0 | 1 |
| … | … | 0 | 1 |
| 2 | 5 | 0 | 1 |
| 3 | 6 | 1 | 0 |
| 3 | 7 | 1 | 0 |
| … | … | 1 | 0 |
| 3 | 9 | 1 | 0 |

Submitting this to an MRA…

ANOVA

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
| --- | --- | --- | --- | --- | --- |
| Regression | 50.083 | 2 | 25.042 | 6.053 | .008 |
| Residual | 86.875 | 21 | 4.137 | | |
| Total | 136.958 | 23 | | | |

a. Predictors: (Constant), Dummy Code separating 3 from 1 & 2, Dummy Code separating 2 from 1 & 3
b. Dependent Variable: Exam Score

Coefficients

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
| --- | --- | --- | --- | --- | --- |
| (Constant) | 4.750 | .719 | | 6.605 | .000 |
| Dummy Code separating 2 from 1 & 3 | -.125 | 1.017 | -.025 | -.123 | .903 |
| Dummy Code separating 3 from 1 & 2 | 3.000 | 1.017 | .592 | 2.950 | .008 |

a. Dependent Variable: Exam Score

Comparing group means

- All groups explicitly compared to “uncoded” group.
- To make other comparisons, either
  1. re-run the analysis with a different coding scheme, or
  2. use the following equation, where SE_{y−y'} is the standard error of the estimate:

$$t = \frac{b_i - b_j}{SE_{y-y'}\sqrt{\dfrac{1}{n_i} + \dfrac{1}{n_j}}}$$

- Concerns over Type I error in such comparisons remain

Effect Coding

- Series of variables (vectors) having values of -1, 0, 1
- One group receives all -1’s
- Other two groups differentiated by 0’s & 1’s
- Characteristics…

| Method | Score | e1 | e2 |
| --- | --- | --- | --- |
| 1 | 3 | 1 | 0 |
| 1 | 5 | 1 | 0 |
| … | … | 1 | 0 |
| 1 | 9 | 1 | 0 |
| 2 | 4 | 0 | 1 |
| 2 | 4 | 0 | 1 |
| … | … | 0 | 1 |
| 2 | 5 | 0 | 1 |
| 3 | 6 | -1 | -1 |
| 3 | 7 | -1 | -1 |
| … | … | -1 | -1 |
| 3 | 9 | -1 | -1 |

Solution

Coefficients

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
| --- | --- | --- | --- | --- | --- |
| (Constant) | 5.708 | .415 | | 13.749 | .000 |
| e1 | -.958 | .587 | -.328 | -1.632 | .118 |
| e2 | -1.083 | .587 | -.370 | -1.845 | .079 |

a. Dependent Variable: Exam Score

Characteristics:

- Intercept = __________
- -0.958 represents ___________
- -1.083 represents ____________

Recovering cell means

Y’ = 5.708 + e1(-0.958) + e2(-1.083)

- Thus, someone in group 1… Y’ = 5.708 + 1(-0.958) + 0(-1.083) = 4.75
- For group 2… Y’ = 5.708 + 0(-0.958) + 1(-1.083) = 4.625
- For group 3… Y’ = 5.708 + (-1)(-0.958) + (-1)(-1.083) = 7.749

Recovering the missing coefficients

- If e1 gives us the effect for being in group 1, and…
- e2 gives us the effect for being in group 2…
- What is the effect for being in group 3? How do we get it?
  - Method 1: recode the cells so that a different cell gets all -1’s.
  - Method 2: take advantage of the fact that all b’s must sum to zero*. Thus…
    - e3 + (-0.958) + (-1.083) = 0
    - e3 = 0.958 + 1.083 = 2.041

Unequal group sizes due to population differences

- Unequal group sizes
  - May represent attrition in study, or other problems
  - May reflect existing group size differences in population
- If we wish to preserve information about unequal population sizes…
  - Use weighted effect coding
  - Instead of one group getting all -1’s…
  - Group gets weighted code of -n2/n1, where n1 is the ‘baseline’ group and n2 is the group identified by the vector

Two-Way ANOVA Revisited

| Factor A: Task Difficulty | Factor B: Anxiety Level, Low | Medium | High |
| --- | --- | --- | --- |
| Easy | 3, 1, 1, 6, 4 | 2, 5, 9, 7, 7 | 9, 9, 13, 6, 8 |
| Difficult | 0, 2, 0, 0, 3 | 3, 8, 3, 3, 3 | 0, 0, 0, 5, 0 |

Recall the cell means

| Factor A: Task Difficulty | Factor B: Arousal, Low | Medium | High | Total |
| --- | --- | --- | --- | --- |
| Easy | 3.00 | 6.00 | 9.00 | 6.00 |
| Difficult | 1.00 | 4.00 | 1.00 | 2.00 |
| Total | 2.00 | 5.00 | 5.00 | 4.00 |

Dummy Coding / Effect Coding

Dummy coding

- Task difficulty: Easy = 0, Difficult = 1
- Anxiety, Vector 1: Low = 0, Medium = 0, High = 1
- Anxiety, Vector 2: Low = 0, Medium = 1, High = 0
- Vector 3 = TD x Vector 1
- Vector 4 = TD x Vector 2

Effect coding

- Task difficulty: Easy = 1, Difficult = -1
- Anxiety, Vector 1: Low = 1, Medium = 0, High = -1
- Anxiety, Vector 2: Low = 0, Medium = 1, High = -1
- Vector 3 = TD x Vector 1
- Vector 4 = TD x Vector 2

Results using dummy coding…

Coefficients

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
| --- | --- | --- | --- | --- | --- |
| (Constant) | 3.000 | 1.000 | | 3.000 | .006 |
| Dummy Code for difficulty | -2.000 | 1.414 | -.289 | -1.414 | .170 |
| Dummy Code 1 for anxiety | 3.000 | 1.414 | .408 | 2.121 | .044 |
| Dummy Code 2 for anxiety | 6.000 | 1.414 | .816 | 4.243 | .000 |
| Dummy Code Interaction 1 | -2.4E-015 | 2.000 | .000 | .000 | 1.000 |
| Dummy Code Interaction 2 | -6.000 | 2.000 | -.645 | -3.000 | .006 |

a. Dependent Variable: Performance

Results of Effect Coding

ANOVA

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
| --- | --- | --- | --- | --- | --- |
| Regression | 240.000 | 5 | 48.000 | 9.600 | .000 |
| Residual | 120.000 | 24 | 5.000 | | |
| Total | 360.000 | 29 | | | |

a. Predictors: (Constant), int2, ecanx2, ecdiff, int1, ecanx1
b. Dependent Variable: Performance

Coefficients

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
| --- | --- | --- | --- | --- | --- |
| (Constant) | 4.000 | .408 | | 9.798 | .000 |
| ecdiff | -2.000 | .408 | -.577 | -4.899 | .000 |
| ecanx1 | -2.000 | .577 | -.471 | -3.464 | .002 |
| ecanx2 | 1.000 | .577 | .236 | 1.732 | .096 |
| int1 | 1.000 | .577 | .236 | 1.732 | .096 |
| int2 | 1.000 | .577 | .236 | 1.732 | .096 |

a. Dependent Variable: Performance

Combining Categorical and Continuous Variables

- Type of Treatment by Pre-treatment functioning to predict Outcome
- Race/Ethnicity by Attitudes toward health care to predict wellness visits
- Recent vs. non-recent hire by openness to new experience to predict likelihood of change.

Example: Workplace Deviance & Moral Reasoning

- Research Question: Will scores on a moral reasoning measure that reflect “maintaining norms” (~Kohlberg’s conventional level) interact with organizational injustice to produce workplace deviance?
- Continuous Measure: Maintaining Norms
- Categorical Measure: High vs. Low Organizational Injustice

Results

Coefficients

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. | Tolerance | VIF |
| --- | --- | --- | --- | --- | --- | --- | --- |
| (Constant) | 2.569 | .144 | | 17.828 | .000 | | |
| Condition | .391 | .205 | .186 | 1.909 | .059 | .998 | 1.002 |
| MNxCon | -.100 | .014 | -.007 | -7.143 | .001 | .475 | 2.107 |
| MNCENT | -.019 | .010 | -.266 | -1.886 | .062 | .475 | 2.105 |

a. Dependent Variable: Average Workplace Deviance Score

ANOVA

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
| --- | --- | --- | --- | --- | --- |
| Regression | 11.491 | 3 | 3.830 | 3.689 | .015 |
| Residual | 98.641 | 95 | 1.038 | | |
| Total | 110.132 | 98 | | | |

a. Predictors: (Constant), MNCENT, Condition, MNxCon
b. Dependent Variable: Average Workplace Deviance Score

R² = .104

Note: data based on an actual study; the interaction effect is manufactured for purpose of illustration.

Graph of Interaction

[Figure: Interaction chart for the dummy x continuous variable interaction. Y-axis: Predicted Value (0 to 6); x-axis: two sample points (Low, High); separate lines for dummy code = 0 and dummy code = 1.]

And then there’s contrast coding

- Recall our one-way teaching example
- Two orthogonal contrasts:
  - M1 & M2 vs. M3
  - M1 vs. M2

| | Method 1 | Method 2 | Method 3 |
| --- | --- | --- | --- |
| | 3 | 4 | 6 |
| | 5 | 4 | 7 |
| | 2 | 3 | 8 |
| | 4 | 8 | 6 |
| | 8 | 7 | 7 |
| | 4 | 4 | 9 |
| | 3 | 2 | 10 |
| | 9 | 5 | 9 |
| Means: | 4.75 | 4.625 | 7.75 |

Accomplished by Contrast Coding

- C1: -0.5M1 + -0.5M2 + 1M3
- C2: 1M1 + -1M2 + 0M3

ANOVA

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
| --- | --- | --- | --- | --- | --- |
| Regression | 50.083 | 2 | 25.042 | 6.053 | .008 |
| Residual | 86.875 | 21 | 4.137 | | |
| Total | 136.958 | 23 | | | |

a. Predictors: (Constant), Orthogonal comparison 2 (g1=g2), Orthogonal comparison 1 .5(1)+.5(2)=3
b. Dependent Variable: Exam Score

Coefficients

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. | 95% CI for B: Lower Bound | 95% CI for B: Upper Bound | Zero-order | Partial | Part | Tolerance | VIF |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| (Constant) | 5.708 | .415 | | 13.749 | .000 | 4.845 | 6.572 | | | | | |
| Orthogonal comparison 1 .5(1)+.5(2)=3 | 2.042 | .587 | .604 | 3.477 | .002 | .821 | 3.263 | .604 | .604 | .604 | 1.000 | 1.000 |
| Orthogonal comparison 2 (g1=g2) | .063 | .508 | .021 | .123 | .903 | -.995 | 1.120 | .021 | .027 | .021 | 1.000 | 1.000 |

a. Dependent Variable: Exam Score
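
As a cross-check outside SPSS, the one-way teaching example can be refit by ordinary least squares under each of the three coding schemes. What follows is a minimal sketch in Python with NumPy, not part of the original slides. The names `scores`, `SCHEMES`, and `fit` are illustrative, and the data layout assumes that the first, second, and third runs of eight scores belong to Methods 1, 2, and 3, which agrees with the reported means of 4.75, 4.625, and 7.75. Under that assumption the coefficients should match the B columns of the dummy, effect, and contrast coding outputs, and R² should be identical across schemes (50.083 / 136.958, about .366).

```python
import numpy as np

# Exam scores for the three teaching methods (8 per group).
# Assumed grouping: consistent with the reported group means.
scores = {
    1: [3, 5, 2, 4, 8, 4, 3, 9],
    2: [4, 4, 3, 8, 7, 4, 2, 5],
    3: [6, 7, 8, 6, 7, 9, 10, 9],
}

# Code values per group for each scheme: group -> (vector 1, vector 2).
SCHEMES = {
    # d1 separates group 3, d2 separates group 2; group 1 is "uncoded".
    "dummy": {1: (0, 0), 2: (0, 1), 3: (1, 0)},
    # Group 3 receives all -1's.
    "effect": {1: (1, 0), 2: (0, 1), 3: (-1, -1)},
    # C1: M1 & M2 vs. M3; C2: M1 vs. M2.
    "contrast": {1: (-0.5, 1), 2: (-0.5, -1), 3: (1, 0)},
}


def fit(scheme):
    """Return (coefficients, R squared) from an OLS fit of score on the coded vectors."""
    codes = SCHEMES[scheme]
    rows, y = [], []
    for group, values in scores.items():
        for value in values:
            rows.append((1.0, *codes[group]))  # leading 1.0 is the intercept column
            y.append(value)
    X = np.array(rows, dtype=float)
    y = np.array(y, dtype=float)
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    residual = y - X @ b
    r2 = 1.0 - (residual @ residual) / np.sum((y - y.mean()) ** 2)
    return b, r2


results = {name: fit(name) for name in SCHEMES}
for name, (b, r2) in results.items():
    print(f"{name:8s} b = {np.round(b, 3)}  R^2 = {r2:.3f}")
```

Only the interpretation of the coefficients changes from scheme to scheme: the three sets of vectors span the same space, so the fitted values, R², and overall F are unchanged.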