Progress monitoring - Professional Development Management System

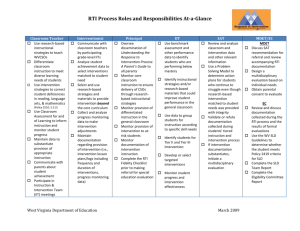

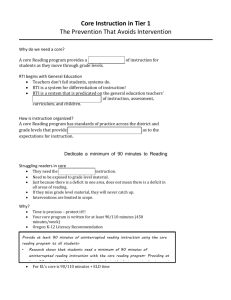

Multiple Sources of Data
RtI Documentation: Understanding, Interpreting and Connecting Data for Educational Decision Making
Andrea Ogonosky, Ph.D., LSSP
aogonosky@msn.com

A complementary relationship between the RTI process and psychoeducational testing exists in evaluating for a potential learning disability.

RtI: Problem Solving
Assessment
• Progress Monitoring, Diagnostics
• Progress Monitoring, Diagnostics
• Universal Screening, Progress Monitoring
Interventions
• Student Instructional Level, Supplemental Interventions: 120 min per week additional
• Student Instructional Level, Supplemental Interventions: 90 min per week additional
• Grade Level Instruction/Support

Fidelity: Intervention Well Checks
• Observe in Tiers I and II/III (ICEL)
• Consult with Teacher
• Review data weekly in PLC/Planning meetings
• Check data collection
• Talk to parent

From the Student Perspective
The Team Goal is to create…
• Academic Learning, Mastery, and Achievement
• Social, Emotional, and Behavioral Development
• Independent Learner
• Self-Manager

Activity
• How are you defining Tier 1 instruction and strategies?
• Is there consistency with this definition throughout your district?
• What information are you using to determine appropriate access to instruction?

Successful Data Collection
• Use of naturally occurring data
• Led by General Education, Supported by Special Education
• Problem-Solving Model Implemented with Integrity
• Systematic decision rules consistently implemented
• Access to Technology to Manage and Document Data-Based Decision Making
• Evidence of Improved Academic and Behavior Outcomes for All Students

It is vitally important that there is an understanding that there is continued documented discussion and consultation between the teacher, the team, and the interventionist(s).

Components Addressed When Using Multiple Data Sources
• The interrelationship between classroom achievement and cognitive processing criteria
  – Classroom achievement
  – Academic Deficit (RtI)
  – Cognitive Processing
  – Behavior

Balancing Assessments
• Assessment systems
• Multiple measures
• Varied types
• Varied purposes
• Varied data sets
• Balanced with needs

Multiple Data Sources
• Criterion Referenced Assessment: Formative, Summative, Screen, Progress Monitor
• Norm Referenced Assessment: Diagnostic, Comparative, Progress Monitor
• Curriculum Based Measurement: Rate of Improvement, Universal Screen, Progress Monitor

Criterion Referenced Tests
• Most common type of test used by teachers.
• Criterion Referenced Tests measure mastery of a subject based on specific preset standards.
• The questions used in the test are meant to show how much a student knows and how that student’s performance compares to expectations.

Problems with Mastery Measurement
• Hierarchy of skills is logical, not empirical.
• Performance can be misleading: assessment does not reflect maintenance or generalization.
• Assessment is designed by teachers or sold with textbooks, with unknown reliability and validity.
• Number of objectives mastered does not relate well to performance on high-stakes tests.

Norm Referenced Tests
• Norm-referenced tests compare a student's score against the scores of a group of students who have already taken the same exam, called the "norm group."
• Scores are often interpreted using percentiles.
• Comparative in nature, aligned to research-based developmental and cognitive levels.
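
To make the percentile interpretation of norm-referenced scores concrete, here is a minimal sketch in Python. It assumes one common convention for percentile rank (the percentage of norm-group scores below the student's score, with ties counted as half); published tests may use a different convention. The function name and the norm-group scores are hypothetical and are not taken from any real assessment.

```python
def percentile_rank(score, norm_scores):
    """Percentile rank of `score` within a norm group.

    Assumed convention: percent of norm-group scores strictly below the
    score, plus half of the scores equal to it.
    """
    if not norm_scores:
        raise ValueError("norm group must contain at least one score")
    below = sum(1 for s in norm_scores if s < score)
    equal = sum(1 for s in norm_scores if s == score)
    return 100.0 * (below + 0.5 * equal) / len(norm_scores)


# Hypothetical norm group of ten students who already took the same test.
norm_group = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
print(percentile_rank(50, norm_group))  # 4 below, 1 tie out of 10
```

A percentile rank describes standing relative to the norm group only; it says nothing about mastery of specific standards, which is what a criterion-referenced measure reports.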

Curriculum Based Measurement
• Describes academic competence at a single point in time
• Quantifies the rate at which students develop academic competence over time
• Used to align and analyze effective instruction and intervention to increase student achievement

Types of Curriculum Based Measurement
• Universal Screening data on all students provides an indication of individual student performance and progress compared to the peer group’s performance and progress.
• Universal Screening data form the basis for examining trends (or patterns) on specific academic or behavior skills.

Types of Curriculum Based Measurement
Progress monitoring documents student growth over time to determine whether the student is progressing as expected in the designated level of instructional intervention. This is generally presented in graph form.

District Decision Rules
• Selection of goal-level material
• Collection of baseline data and setting of realistic or ambitious goals
• Administration of timed, alternate measures weekly
• Application of decision-making rules to graphed data every 6-9 weeks

Activity
• List Formative Assessments your district/campus are currently using.
• List Progress Monitors your district/campus are currently using. What norms?
• List Summative Assessments your district/campus are currently using.
• How do you use this data to aid in determining patterns of strengths and weaknesses?

Dr. O’s Suggestion
Begin with the End in Mind: STAAR, EOC
• RtI Data Analysis
• Achievement Data (Formative, Diagnostic)
• Academic Deficit Verification
• Hypothesis Generation
• Cognitive Processing Data Collection

Component Data Review: Consider the impact of each domain relative to the “problem”

INSTRUCTION
• Instructional decision making regarding selection and use of materials
• Clarity of instructions
• Communication with expectations and cues
• Sequencing of lesson designs
• Pace of instruction
• Variety of practice activities

CURRICULUM
• Long range direction for instruction
• Instructional philosophy
• Instructional materials
• Intent
• Stated outcomes of the content/instruction
• Pace of the steps leading to the outcomes
• General learner criteria as identified in the school improvement plan, LEA curriculum, and benchmarks

ENVIRONMENT
• Physical arrangement of the room
• Furniture/equipment
• Rules
• Management plans
• Routines
• Expectations
• Peer context
• Task pressure
• Peer and family expectations

LEARNER
This is the last domain to consider and is addressed when:
• The curriculum and instruction are appropriate
• The environment is positive
This domain includes student performance data:
• Academic
• Social/emotional

Existing Data Review
– Determine the student’s current grade level/class status: academic progress (grades, unit tests, district benchmark, and work samples)
– Teacher description/quantifiable concern
– Parent Contact(s)
– RtI data
– Medical Information
– Classroom Observations (ICEL)

Analyze Multiple Data Sources
Begin With Universal Screening Data
• Student score relative to cut-score in identified area of concern.
• If below cut-score: possible need for increased intensity of supports (Tier 2)
• If above cut-score: instruction is supported through Tier 1 supports

Analyze Multiple Data Sources
Review Teacher Progress Monitoring Data
• Formative and Summative Assessments
• Are they differentiated to identified area of concern?
• Evidence of alignment to student variables (learning styles)
• How does student compare to class peers in the identified area of concern?

Student Data
• Review student strengths in identified area of concern
• Review student skill deficit/weaknesses in identified area of concern

Determine Student Functional Levels
• Identified assets and weaknesses
• Identified critical life events, milestones
• Identified academic variables such as “speed of acquisition” or retention of information
• Identified issues of attendance, transitions, motivation, access to instruction

Classroom Achievement
Note: analysis of data assumes that the student has received adequate opportunities to learn and reasonable instructional options
• Gather relevant and specific information about the student's performance in the general education curriculum (relative to peers)
• Determine if a pattern of severe delay in classroom achievement in one or more areas has existed over time
• Provide information about the curriculum and corresponding instructional demands

Classroom Achievement
Guiding question: What has been taught to the student and how?
1. Does the student meet the educational standards that apply to all students?
2. What are the academic task requirements for students in the referred student's classroom?
3. Has the student received instruction on the same content as the majority of students in the same grade?
4. What are the student's skills in relation to what has been taught?
5. What is the range of student skill levels?
6. Are there any other students performing at the same level as the referred student?

Classroom Achievement / Academic Deficit: Sources of Data
– Observation: at least 1 within each Tier of support
– Work samples: should be more than worksheets
– Error analysis: is it aligned with focused instruction?
– Criterion-referenced measures
– Task analysis
– Curriculum-based measures such as inventories, quizzes, rubrics, running records, informal reading inventories
– Record review: be careful not to dismiss the importance of historical data

Analyze Multiple Data Sources
Analyze Diagnostic Data
• Student strengths and weaknesses relative to identified area of concern
• What specific skills are lacking that present a barrier for student progress?
• Need to know developmental acquisition of skills relative to the identified area of concern.
• Is the identified skill deficit significantly below grade expectations?
• What effective practices are needed to address this skill deficit?

Analyze Multiple Data Sources
Review Progress Monitoring Data
• What curriculum based measure is your district using?
• What norms are being used to set goals?
• What ROI is expected?

Progress Monitoring
Ongoing and frequent monitoring of progress quantifies rates of improvement and informs instructional practice and the development of individualized programs.

Integrity of Progress Monitoring
Selected progress monitoring tools meet all of the following criteria:
(1) Has alternate forms of equal and controlled difficulty;
(2) specifies minimum acceptable growth;
(3) provides benchmarks for minimum acceptable end-of-year performance;
(4) reliability and validity information for the performance level score are available.
What does your district use?

Rate of Improvement
• Typical Rate of Improvement - the rate of improvement or the rate of change of a typical student at the assessment.
• Targeted Rate of Improvement - the rate of improvement that a targeted student would need to make by the end of the instruction/intervention.
• Attained Rate of Improvement - the actual rate of improvement the student ends up achieving as a function of the intervention.
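
The three rates of improvement defined above can be sketched as simple arithmetic. The formulas below are assumptions for illustration, since the slides do not specify them: typical ROI as benchmark growth per week, targeted ROI as the gap between the student's baseline and the end benchmark per week, and attained ROI as the least-squares slope of the student's weekly scores. All function names and numbers are hypothetical.

```python
def typical_roi(fall_benchmark, spring_benchmark, weeks):
    """Assumed: growth of a typical student between benchmarks, per week."""
    return (spring_benchmark - fall_benchmark) / weeks


def targeted_roi(student_baseline, end_benchmark, weeks):
    """Assumed: weekly growth the student needs to reach the end benchmark."""
    return (end_benchmark - student_baseline) / weeks


def attained_roi(weeks, scores):
    """Assumed: ordinary least-squares slope of scores over weeks."""
    if len(weeks) != len(scores) or len(weeks) < 2:
        raise ValueError("need at least two matched (week, score) points")
    mean_w = sum(weeks) / len(weeks)
    mean_s = sum(scores) / len(scores)
    num = sum((w - mean_w) * (s - mean_s) for w, s in zip(weeks, scores))
    den = sum((w - mean_w) ** 2 for w in weeks)
    if den == 0:
        raise ValueError("weeks must not all be identical")
    return num / den


# Hypothetical example: words read correctly per minute over a 36-week year.
print(typical_roi(40, 76, 36))                             # typical peer
print(targeted_roi(20, 76, 36))                            # student starting at 20
print(attained_roi([0, 1, 2, 3, 4], [20, 22, 24, 26, 28])) # weekly probes
```

Comparing the attained rate with the targeted rate is one way a team might judge whether the gap is closing; the decision rules for acting on that comparison remain a district choice.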

Integrity of Progress Monitoring
Both conditions are met:
(1) Frequency is at least weekly for Tier 1 and 2 students;
(2) Procedures are in place to ensure implementation accuracy (i.e., appropriate students are tested; scores are accurate; decision making rules are applied consistently).

Additional Assessments (Tiers 2 & 3)
Analogy:
• If there is a medical concern, you… go to the doctor
• If the doctor is not sure what is wrong… more tests are given

Question
• What are the tests your campuses are giving in Tiers 2 & 3? Should be diagnostic in nature.
• What about progress monitoring? How are they measuring growth?

Analyze Multiple Data Sources
Review Outcome Data
• Student score relative to peers on district common or benchmark assessments
• Student score relative to peers on end of unit or other identified summative assessments
• Student score relative to peers on state assessments

Align Data Sources
• Universal Screening
• Progress Monitoring
• Diagnostic Assessments
• Outcome Assessments
Does the data tell a clear and concise story of the student’s learning needs?
If there is inconsistency, the team must investigate why.
• Review integrity of instruction
• Alignment to student needs (Tier 1)
• Student variables

Scientific Inquiry
• Triangulation: verification of a hypothesis or identification of a pattern by comparing and contrasting data
• The ARD committee must be confident that the data collectively reveals a pattern consistent with the definition of SLD.
• Data collected aligns with characteristics of other disability conditions.
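
As a rough illustration of aligning data sources, the sketch below flags whether several measures tell the same story about one area of concern. It is not an eligibility rule. It assumes, purely for illustration, that each source can be compared against its own cut-score; the class name, function name, source labels, and all scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Measure:
    source: str       # e.g. "universal screening"
    score: float      # student's score on that measure
    cut_score: float  # hypothetical cut-score for that measure


def triangulate(measures):
    """Compare sources and report whether they agree.

    Returns a dict of {source: is_below_cut} and a short pattern label.
    """
    if not measures:
        raise ValueError("at least one measure is required")
    below = {m.source: m.score < m.cut_score for m in measures}
    count_below = sum(below.values())
    if count_below == len(below):
        pattern = "consistent concern"
    elif count_below == 0:
        pattern = "no concern indicated"
    else:
        pattern = "inconsistent: investigate why"
    return below, pattern


# Hypothetical student record in one area of concern.
student = [
    Measure("universal screening", 18, 25),
    Measure("progress monitoring", 21, 30),
    Measure("diagnostic assessment", 82, 85),
    Measure("outcome assessment", 71, 70),
]
flags, pattern = triangulate(student)
print(pattern)
```

When the label comes back as inconsistent, the slides point to the next step: review integrity of instruction, alignment to student needs, and student variables before drawing conclusions.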

Scientific Inquiry
• Explore potential alternate explanations when data is inconsistent
• Ask (and answer) additional questions if the answer does not lie in the data
• Assessment data not only provides answers to questions regarding eligibility, but also serves to design instructional and behavioral intervention strategies that are likely to improve student learning

Improving SLD Identification Process
• In order to improve the diagnostic process, you need to approach diagnosis and the FIE as a compilation of formal and informal data.
• Problem Identification & Analysis: process of assessment and evaluation for identifying and understanding the causal and maintaining variables of the problem

Cognitive Strengths/Assets and Weaknesses/Deficits
• Measure abilities associated with the area of academic skill deficit and abilities not associated with the academic deficit
  – Broad abilities, narrow abilities, and core cognitive processes
  – Usually select a global measure for this, but can do this with focused assessment of processes if the referral is specific enough
• How efficient is the student’s learning process? If deficiencies are present, they will interfere with/affect learning

Cognitive Patterns
• Some issues to consider:
  – The cognitive deficiency must be normatively based, not person based; a weakness derived from ipsative analysis (intra-individual) is not relevant regardless of statistical significance
  – Low achievement in the absence of any cognitive deficiencies is not consistent with any processing model of LD

Relationship of Cognitive Deficits to Academic Deficits
• Determine the presence of a processing-achievement consistency/concordance
  – There is a need to have measured the narrow abilities related to academic areas explained to describe pattern.
• To do this step, must be familiar with the cognitive processes that are associated with achievement areas.

Diagnostic Conclusions
• The determination of a disability category (especially MR and LD) must be made through the use of professional judgment, including consideration of multiple information/data sources to support the eligibility determination. (TEA, §89.1040 Eligibility Criteria, Frequently Asked Questions)
• Information/data sources = statewide assessment results, formal evaluation test scores, RtI progress monitoring data, informal data (e.g., work samples, interviews, rating scales), anecdotal reports

Why is a Tiered Model Important to SLD Identification?
“Need to Knows”
• Individual Differences in the Processes in the Learner’s Mind or Brain
• Curriculum and Instructional Materials
• Teachers’ Delivery of Instruction / Classroom management

Processing – Disability & Intervention
• Processing strengths and weaknesses associated with diagnosing LD, but also important and associated with other disabilities
• If learning depends on processing, then having information about a student’s strengths and weaknesses can assist in design of interventions, selection of accommodations, and determination of modifications

Main Processing Components to Assess (Dehn, 2005)
• Attention processing
• Auditory processing
• Executive processing
• Fluid reasoning
• Long-term retrieval
• Phonemic awareness
• Planning
• Processing speed
• Short-term memory
• Simultaneous processing
• Successive processing
• Visual processing
• Working memory

Integrity of Diagnostic Data
Cognitive Processing Model (Fletcher, 2003): a multi-test discrepancy approach…and carries with it the problems involved with estimation of discrepancies and cut points.
• What is your district’s philosophy for defining processing strengths and weaknesses?
• Is your staff consistent with interpretation?

Lack of clarity around what is to be accomplished is the biggest barrier to assessment for eligibility that you will encounter.

Flawed logic… school staff understand the role assessment and diagnostic data play in decision making for struggling learners.

Underscoring the Problem
“Most teachers just don’t possess the skills to collect data, draw meaningful conclusions, focus instruction, and appropriately follow up to assess results. That is not the set of skills we were hired to do.”

Convergence of Data: What to Look For
• Increasing intensity of data collection aligned with student need/response to interventions
• Diagnostic data that describes student strengths, weaknesses and response to intervention
• Documentation of supplemental instruction aligned with diagnostic data

Putting It All Together
• Use achievement and RtI data to form hypothesis
• Based upon hypothesis, design normative achievement and cognitive processing assessment battery

When Assessing Consider:
• Rate of learning
• Level of academic performance in one of the 8 areas for SLD
• Memory
• Processing speed
• Attention/concentration
• Visual motor integration
• Behavior
• Executive functioning
• Speech/language
• Primary language
• Phonological processing
• Academic fluency
• Others?

All the data have been collected… What constitutes a convergence of evidence?

Essential Question
How can assessment team and parents feel confident in making an appropriate SLD eligibility decision?

Validating Underachievement
Students who demonstrate reasonable progress in response to research-based strategies and interventions should not be determined eligible under the SLD category even though they may have academic weaknesses.
What is reasonable progress?

Identifying a SLD is a complex task and requires personalized, student-centered problem-solving. It is predicated on a belief that scaffolded, differentiated, high-quality general education instruction can be effective for most children and that only a few students demonstrate the severe and persistent underachievement associated with a SLD.

Good decisions are based on
• A strong RTI framework
• Sufficient data collection and documentation
• Clearly articulated decision-making processes

References
• Essentials of Psychological Assessment Series. Hoboken, New Jersey: John Wiley & Sons.
  – Dehn, M.J. (2006). Essentials of Processing Assessment.
  – Flanagan, D.P. & Alfonso, V.C. (2011). Essentials of Specific Learning Disability Identification.
• Cheramie, G. & Ogonosky, A. (2012). SLD Identification Using Multiple Sources of Data. Presentation to Weatherford ISD.