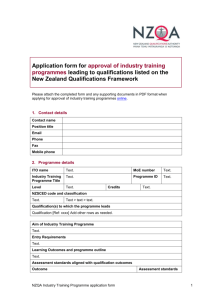

Slides: PPT-File

advertisement

Classification and clustering methods: development and implementation for unstructured document collections

by

Osipova Nataly

St. Petersburg State University

Faculty of Applied Mathematics and Control Processes

Department of Programming Technology

Contents

Introduction

Methods description

Information Retrieval System

Experiments

Contextual Document Clustering

The method was developed in a joint project of the Faculty of Applied Mathematics and Control Processes, St. Petersburg State University, and the Northern Ireland Knowledge Engineering Laboratory (NIKEL), University of Ulster.

Definitions

Document

Terms dictionary

Dictionary

Cluster

Word context

Context or document conditional probability distribution

Entropy

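Document conditional probability distribution

Document x:

| y | tf(y) | $p(y \mid x)$ |
|---|---|---|
| word1 | 5 | 5/m |
| word2 | 10 | 10/m |
| word3 | 6 | 6/m |
| … | … | … |
| wordn | 16 | 16/m |

y – words; tf(y) – frequency of y in document x; p(y|x) = tf(y)/m – conditional probability of y in document x; m – size of document x (the total number of word occurrences in x).

(5/m, 10/m, 6/m, …, 16/m) – the document conditional probability distribution.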
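A minimal Python sketch of the definition above, assuming a document has already been tokenized into dictionary words (tokenization is not described in the slides). The function name `document_distribution` is hypothetical.

```python
from collections import Counter


def document_distribution(tokens):
    """Return p(y|x) = tf(y) / m for a tokenized document x.

    tokens: list of the words of document x (assumed already tokenized).
    """
    tf = Counter(tokens)       # tf(y): frequency of word y in x
    m = sum(tf.values())       # m: document size (total word occurrences)
    return {y: count / m for y, count in tf.items()}


# Usage with a toy document of hypothetical words.
doc = ["word1", "word2", "word2", "word3", "word2"]
p = document_distribution(doc)
print(p)  # {'word1': 0.2, 'word2': 0.6, 'word3': 0.2}
```

Dividing every frequency by the same m is what makes the values sum to 1, so the vector (5/m, 10/m, …) is a proper probability distribution.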

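Word context

Word w occurs in documents x1, x2, …, xk.

Document x1:

| y | tf(y) | $p(y \mid x_1)$ |
|---|---|---|
| word1 | 5 | 5/m1 |
| word2 | 10 | 10/m1 |
| … | … | … |
| wordn1 | 16 | 16/m1 |

Document x2:

| y | tf(y) | $p(y \mid x_2)$ |
|---|---|---|
| word1 | 7 | 7/m2 |
| word3 | 12 | 12/m2 |
| … | … | … |
| wordn2 | 4 | 4/m2 |

Document xk:

| y | tf(y) | $p(y \mid x_k)$ |
|---|---|---|
| word1 | 20 | 20/mk |
| word4 | 9 | 9/mk |
| … | 3 | 3/mk |
| … | … | … |
| wordnk | … | … |

Context of w (frequencies summed over x1, …, xk):

| y | tf(y) | $p(y \mid w)$ |
|---|---|---|
| word1 | 5+7+20=32 | 32/m |
| word2 | 10 | 10/m |
| word3 | 12 | 12/m |
| … | 3 | 3/m |
| … | … | … |
| wordnk | … | … |

m – total size of documents x1, …, xk (m = m1 + m2 + … + mk).

p(y|w) – the context conditional probability distribution of w.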
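The aggregation in the tables above (for example 5 + 7 + 20 = 32 for word1) can be sketched in Python as follows. This assumes documents are given as token lists; the function name `word_context` is hypothetical, and whether w itself is counted in its own context is not stated on the slide (this sketch simply counts every token).

```python
from collections import Counter


def word_context(w, documents):
    """Context conditional probability distribution p(y|w).

    Term frequencies tf(y) are summed over every document x_i that
    contains w and divided by m, the total size of those documents.
    """
    tf = Counter()
    for tokens in documents:
        if w in tokens:
            tf.update(tokens)  # add this document's tf(y) for every word y
    m = sum(tf.values())       # m = m1 + m2 + ... + mk
    return {y: c / m for y, c in tf.items()} if m else {}


docs = [
    ["w", "a", "a"],  # x1 contains w
    ["w", "b"],       # x2 contains w
    ["c", "c"],       # does not contain w, so it is ignored
]
ctx = word_context("w", docs)
print(ctx)  # {'w': 0.4, 'a': 0.4, 'b': 0.2}
```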


Methods

document clustering method

dictionary construction methods

document classification method using a training set

Information retrieval methods:

keyword search method

cluster-based search method

similar documents search method

Contextual Document Clustering

Documents → Dictionary → Narrow context words → Distances calculation → Clusters

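Entropy

Let $p_1, p_2, \dots, p_n$ be the context conditional probability distribution of a word y, with $p_1 + p_2 + \dots + p_n = 1$. Its entropy is

$$H = H(p_1, p_2, \dots, p_n) = -\sum_{i=1}^{n} p_i \log p_i .$$

Entropy is a measure of uncertainty; here it is used to characterize the commonness (or narrowness) of a word's context.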

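Maximum entropy

The entropy of a distribution over n words is largest for the uniform distribution:

$$\max H(y) = H\left(\tfrac{1}{n}, \dots, \tfrac{1}{n}\right) = \log n .$$

For two words ($p_1 + p_2 = 1$):

$$H([p_1, p_2]) = -(p_1 \log p_1 + p_2 \log p_2),$$

so $H(\alpha, 1-\alpha)$ ranges from $H(1, 0) = 0$ to $H(0.5, 0.5) = \log 2$.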
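A short Python sketch of the entropy formula and of selecting narrow-context words by a low entropy. The natural logarithm is an assumption (the slides do not fix the base; another base only rescales H), and `narrow_context_words` with its `threshold` parameter are hypothetical: the slides give no threshold value.

```python
import math


def entropy(p):
    """H(p1, ..., pn) = -sum(p_i * log p_i), natural logarithm.

    Zero probabilities are skipped, following the convention 0 * log 0 = 0.
    """
    return sum(-pi * math.log(pi) for pi in p if pi > 0)


def narrow_context_words(contexts, threshold):
    """Words whose context entropy is below `threshold` (hypothetical parameter).

    contexts: dict word -> {y: p(y|word)}
    """
    return sorted(w for w, dist in contexts.items() if entropy(dist.values()) < threshold)


n = 4
print(entropy([1 / n] * n), math.log(n))  # the uniform distribution attains log n
print(entropy([0.5, 0.5]), math.log(2))   # two words: maximum log 2 at (0.5, 0.5)
print(entropy([1.0, 0.0]))                # a fully concentrated distribution gives 0
```

A low entropy means the context probability mass sits on few words, which is why entropy serves as the narrowness measure.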

Word Context - Document Distance

Let $p_1$ be the context conditional probability distribution of a word y and $p_2$ the conditional probability distribution of a document x. Their average conditional probability distribution is

$$\bar{p} = \tfrac{1}{2} p_1 + \tfrac{1}{2} p_2 .$$

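Word Context - Document Distance

Jensen-Shannon divergence, with $\bar{p} = \tfrac{1}{2} p_1 + \tfrac{1}{2} p_2$:

$$JS_{\{\frac{1}{2},\frac{1}{2}\}}[p_1, p_2] = H(\bar{p}) - \tfrac{1}{2} H(p_1) - \tfrac{1}{2} H(p_2)$$

$$JS_{\{\frac{1}{2},\frac{1}{2}\}}[p_1, p_2] \ge 0, \qquad JS_{\{\frac{1}{2},\frac{1}{2}\}}[p_1, p_2] = 0 \iff p_1 = p_2$$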
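A Python sketch of the Jensen-Shannon divergence above for sparse distributions stored as dicts. Assigning a document to the narrow-context word with the smallest divergence (`nearest_context`) is our reading of how this distance feeds the clustering step, not code taken from the IRS; all function names are hypothetical.

```python
import math


def entropy(p):
    """Shannon entropy of a sparse distribution {word: probability}."""
    return sum(-v * math.log(v) for v in p.values() if v > 0)


def js_divergence(p1, p2):
    """JS[p1, p2] = H(p_avg) - 0.5 H(p1) - 0.5 H(p2), p_avg = 0.5 p1 + 0.5 p2.

    Words missing from a dict are treated as having probability 0.
    """
    words = set(p1) | set(p2)
    p_avg = {y: 0.5 * p1.get(y, 0.0) + 0.5 * p2.get(y, 0.0) for y in words}
    return entropy(p_avg) - 0.5 * entropy(p1) - 0.5 * entropy(p2)


def nearest_context(doc_dist, contexts):
    """Name of the word context with the smallest JS divergence to the document."""
    return min(contexts, key=lambda w: js_divergence(contexts[w], doc_dist))


p = {"a": 0.5, "b": 0.5}
print(js_divergence(p, p))                    # identical distributions: 0
print(js_divergence({"a": 1.0}, {"b": 1.0}))  # disjoint supports: log 2, the maximum
contexts = {"w1": {"a": 0.9, "b": 0.1}, "w2": {"b": 0.9, "c": 0.1}}
print(nearest_context({"a": 0.8, "b": 0.2}, contexts))  # w1
```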

Dictionary construction

Why:

- large volumes: 60,000 documents and 50,000 words ⇒ 15,000 words in a context

- the importance of narrow context words

Dictionary construction

Delete words with:

1. high or low frequency

2. high or low document frequency

3. both 1 and 2
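Rule 3 (filtering by both frequency and document frequency) can be sketched in Python as below. Inclusive bounds are an assumption, and `build_dictionary`, `tf_range` and `df_range` are hypothetical names; the experiments section quotes limits of 5 and 1000 for tf(y) and 2 and 1000 for df(y), which could be passed in the same way.

```python
from collections import Counter


def build_dictionary(documents, tf_range, df_range):
    """Keep words whose total frequency tf(y) and document frequency df(y)
    both lie inside the given inclusive (low, high) ranges.

    documents: list of token lists.
    """
    tf = Counter()
    df = Counter()
    for tokens in documents:
        tf.update(tokens)        # occurrences over the whole collection
        df.update(set(tokens))   # number of documents containing the word
    lo_tf, hi_tf = tf_range
    lo_df, hi_df = df_range
    return {
        y for y in tf
        if lo_tf <= tf[y] <= hi_tf and lo_df <= df[y] <= hi_df
    }


docs = [["a", "a", "b"], ["a", "c"], ["a", "b"]]
# 'a' is dropped (tf = 4 is too high); 'c' is dropped (tf = 1, df = 1 too low).
print(build_dictionary(docs, tf_range=(2, 3), df_range=(2, 3)))  # {'b'}
```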

Retrieval algorithms

keyword search method

cluster-based search method

search by example method

Keyword search method

| Document 1 | Document 2 | Document 3 | Document 4 |
|---|---|---|---|
| word 1 | word 10 | word 15 | word 11 |
| word 2 | word 25 | word 2 | word 21 |
| word 3 | word 30 | word 32 | word 3 |
| … | … | … | … |
| word n1 | word n2 | word n3 | word n4 |

Request: word 2

Result set: document 1, document 3
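The example above can be reproduced with an in-memory inverted index. This is only a sketch: the IRS itself stores the document-word relation in database tables, and `build_index` and `keyword_search` are hypothetical names. Only the words visible on the slide are used; the elided ones are left out.

```python
from collections import defaultdict


def build_index(documents):
    """Map each word to the set of document names that contain it."""
    index = defaultdict(set)
    for name, words in documents.items():
        for w in words:
            index[w].add(name)
    return index


def keyword_search(index, word):
    """Sorted names of the documents containing `word`."""
    return sorted(index.get(word, set()))


docs = {
    "document 1": ["word 1", "word 2", "word 3"],
    "document 2": ["word 10", "word 25", "word 30"],
    "document 3": ["word 15", "word 2", "word 32"],
    "document 4": ["word 11", "word 21", "word 3"],
}
print(keyword_search(build_index(docs), "word 2"))  # ['document 1', 'document 3']
```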

Cluster-based search method

Each cluster of documents is described by its context words:

| Cluster 1 | Cluster 2 | Cluster 3 |
|---|---|---|
| word 1 | word 12 | word 1 |
| word 2 | word 26 | word 23 |
| … | … | … |
| word n1 | word n2 | word n3 |

Request: word 1

Result set: Cluster 1, Cluster 3
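A minimal sketch of the lookup above, assuming each cluster's context words are held in a Python set (a hypothetical in-memory stand-in for the IRS cluster tables; `cluster_search` is a hypothetical name):

```python
def cluster_search(cluster_contexts, word):
    """Clusters whose context words contain the query word.

    cluster_contexts: dict cluster name -> set of context words.
    """
    return [name for name, words in cluster_contexts.items() if word in words]


clusters = {
    "Cluster 1": {"word 1", "word 2"},
    "Cluster 2": {"word 12", "word 26"},
    "Cluster 3": {"word 1", "word 23"},
}
print(cluster_search(clusters, "word 1"))  # ['Cluster 1', 'Cluster 3']
```

Unlike the keyword search, the match is made against a cluster's context rather than a single document, so the answer is a group of thematically related documents.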

Similar documents search

[Figure: the documents of a named cluster (document 1 to document 7) connected by a minimal spanning tree]

Request: document 3

Result set: document 6, document 7
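The slide does not spell out how the tree is queried. A plausible sketch, under the assumption that the answer is the set of neighbours of the request document in the minimal spanning tree of its cluster, uses Prim's algorithm (our choice) over a pairwise distance function. The names and the one-dimensional toy distances are hypothetical; in the IRS the distances would come from the distances table.

```python
import heapq
from collections import defaultdict


def minimal_spanning_tree(nodes, dist):
    """Prim's algorithm; dist(a, b) -> distance. Returns an adjacency dict."""
    nodes = list(nodes)
    tree = defaultdict(set)
    if not nodes:
        return tree
    visited = {nodes[0]}
    heap = [(dist(nodes[0], v), nodes[0], v) for v in nodes[1:]]
    heapq.heapify(heap)
    while heap and len(visited) < len(nodes):
        _, a, b = heapq.heappop(heap)   # cheapest edge leaving the tree
        if b in visited:
            continue
        visited.add(b)
        tree[a].add(b)
        tree[b].add(a)
        for v in nodes:
            if v not in visited:
                heapq.heappush(heap, (dist(b, v), b, v))
    return tree


def similar_documents(tree, doc):
    """Documents adjacent to `doc` in the cluster's spanning tree."""
    return sorted(tree.get(doc, set()))


positions = {"d1": 0.0, "d2": 1.0, "d3": 5.0, "d4": 6.0, "d5": 6.5}
tree = minimal_spanning_tree(positions, lambda a, b: abs(positions[a] - positions[b]))
print(similar_documents(tree, "d3"))  # ['d2', 'd4']
```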

Document classification: method 1

- Test documents → clusters.
- List of topics + training set → topic contexts.
- Distances between topic contexts and cluster contexts → classification result:

cluster 1 – topic 10

cluster 2 – topic 3

…

cluster n – topic 30
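Method 1 can be sketched as follows. Building a topic context by pooling the term frequencies of the topic's training documents (as for word contexts) is an assumption, and the L1 distance is only a stand-in to keep the example short; the system presumably uses the Jensen-Shannon divergence, which can be passed as `distance`. All names and data are hypothetical.

```python
from collections import Counter


def pooled_distribution(token_lists):
    """Sum term frequencies over documents and normalise."""
    tf = Counter()
    for tokens in token_lists:
        tf.update(tokens)
    m = sum(tf.values())
    return {y: c / m for y, c in tf.items()}


def l1_distance(p, q):
    """Stand-in distance between two sparse distributions."""
    words = set(p) | set(q)
    return sum(abs(p.get(y, 0.0) - q.get(y, 0.0)) for y in words)


def classify_clusters(cluster_contexts, training_set, distance=l1_distance):
    """Label each cluster with the topic whose context is nearest.

    training_set: dict topic -> list of token lists.
    """
    topic_contexts = {t: pooled_distribution(docs) for t, docs in training_set.items()}
    return {
        c: min(topic_contexts, key=lambda t: distance(ctx, topic_contexts[t]))
        for c, ctx in cluster_contexts.items()
    }


training = {"topic 3": [["tax", "law"], ["tax"]], "topic 10": [["water", "pipe"]]}
clusters = {"cluster 1": {"water": 0.6, "pipe": 0.4}, "cluster 2": {"tax": 0.7, "law": 0.3}}
print(classify_clusters(clusters, training))  # {'cluster 1': 'topic 10', 'cluster 2': 'topic 3'}
```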

Document classification: method 2

- Test documents + training set → all documents set → clusters.
- Clusters + topics list → classification result:

cluster 1 – topic 10

cluster 2 – topic 3

…

cluster n – topic 30
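One plausible reading of method 2, stated as an assumption: test and training documents are clustered together, and each cluster takes the most frequent topic among its training documents. A sketch of that labelling step only (clustering itself is not repeated here; names and data are hypothetical):

```python
from collections import Counter


def label_clusters_by_training(clusters, training_labels):
    """Assign each cluster the most common topic of its training documents.

    clusters: dict cluster -> list of document ids (test and training mixed).
    training_labels: dict training document id -> topic.
    Clusters without training documents get None.
    """
    result = {}
    for c, doc_ids in clusters.items():
        topics = Counter(training_labels[d] for d in doc_ids if d in training_labels)
        result[c] = topics.most_common(1)[0][0] if topics else None
    return result


clusters = {"cluster 1": ["t1", "tr1", "tr2", "t2"], "cluster 2": ["t3", "tr3"], "cluster 3": ["t4"]}
labels = {"tr1": "topic 10", "tr2": "topic 10", "tr3": "topic 3"}
print(label_clusters_by_training(clusters, labels))
# {'cluster 1': 'topic 10', 'cluster 2': 'topic 3', 'cluster 3': None}
```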


Information Retrieval System

Architecture

Features

Use

Information Retrieval System architecture: database server and client

IRS architecture

Database server (MS SQL Server 2000, hosting the database) – local area network – "thick" client (C#)

IRS architecture

DBMS MS SQL Server 2000:

High-performance

Scalable

Secure

Handles huge volumes of data

T-SQL

Stored procedures

IRS features

The IRS solves the following tasks:

document clustering

keyword search method

cluster-based search method

similar documents search method

document classification using a training set

DB structure

The IRS database consists of the following tables:

documents

all-words dictionary

dictionary

document-word: relations between documents and words

word contexts

words with narrow contexts

clusters

intermediate tables used to build the main tables and to implement retrieval
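A hypothetical sketch of part of this structure, with keyword search expressed as a join over the document-word table. Table and column names are illustrative assumptions, and SQLite (through Python's standard `sqlite3` module) stands in for MS SQL Server 2000 only so that the example runs anywhere; it is not the IRS schema.

```python
import sqlite3

# Hypothetical schema: table and column names are illustrative assumptions.
SCHEMA = """
CREATE TABLE documents     (doc_id INTEGER PRIMARY KEY, title TEXT);
CREATE TABLE all_words     (word_id INTEGER PRIMARY KEY, word TEXT UNIQUE);
CREATE TABLE dictionary    (word_id INTEGER PRIMARY KEY REFERENCES all_words);
CREATE TABLE document_word (doc_id INTEGER REFERENCES documents,
                            word_id INTEGER REFERENCES all_words,
                            tf INTEGER,
                            PRIMARY KEY (doc_id, word_id));
CREATE TABLE word_contexts (word_id INTEGER, context_word_id INTEGER, p REAL,
                            PRIMARY KEY (word_id, context_word_id));
CREATE TABLE narrow_words  (word_id INTEGER PRIMARY KEY, entropy REAL);
CREATE TABLE clusters      (doc_id INTEGER PRIMARY KEY, cluster_word_id INTEGER);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO documents VALUES (?, ?)",
                 [(1, "document 1"), (2, "document 2"), (3, "document 3")])
conn.executemany("INSERT INTO all_words VALUES (?, ?)",
                 [(2, "word 2"), (3, "word 3"), (25, "word 25")])
conn.executemany("INSERT INTO document_word VALUES (?, ?, ?)",
                 [(1, 2, 1), (1, 3, 1), (2, 25, 1), (3, 2, 1)])

# Keyword search as a join over the document-word relation table.
rows = conn.execute(
    """SELECT d.title
       FROM documents AS d
       JOIN document_word AS dw ON dw.doc_id = d.doc_id
       JOIN all_words AS w ON w.word_id = dw.word_id
       WHERE w.word = ?
       ORDER BY d.title""",
    ("word 2",),
).fetchall()
print(rows)  # [('document 1',), ('document 3',)]
```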

Algorithms implementation

[Diagram: components Documents, All words dictionary, Dictionary, Table "document-word", Words contexts, Words with narrow contexts, Clusters and Centroid; retrieval modules Keyword search, Cluster based search and Similar documents search]

Similar documents search

[Figure: pairwise distances between documents 1 to 5 of a named cluster (values from 0.1011 to 0.98154) and the minimal spanning tree built from them]

Similar documents search

Clusters table + Distances table → Tree table → Similar documents search

IRS use



Experiments

The goals of the tests were:

testing the accuracy of the algorithm

comparing different classification methods

evaluating the efficiency of the algorithm

Experiments

60,000 documents

100 topics

Training set size: 5% of the collection

Experiments

Dictionary thresholds: tf(y) between 5 and 1000, df(y) between 2 and 1000

Result analysis

- Russian Information Retrieval Evaluation Seminar

- Macro-averaged recall, precision and F-measure were calculated.
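A Python sketch of macro-averaging from per-category counts. It follows one common convention (F computed per category, then all three measures averaged with equal weight per category); some evaluations instead compute F from the macro-averaged precision and recall, and which variant the seminar used is not stated here. The input format and the name `macro_average` are hypothetical.

```python
def macro_average(per_category):
    """Macro-averaged precision, recall and F-measure.

    per_category: dict category -> (true_positives, false_positives, false_negatives).
    """
    if not per_category:
        raise ValueError("no categories")
    precisions, recalls, fs = [], [], []
    for tp, fp, fn in per_category.values():
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        fs.append(f)
    n = len(per_category)
    return sum(precisions) / n, sum(recalls) / n, sum(fs) / n


scores = macro_average({"A": (8, 2, 2), "B": (1, 1, 3)})
print(scores)  # roughly (0.65, 0.525, 0.567)
```

Because every category carries equal weight, a system that does well only on large categories still gets a low macro-average, which suits a comparison across many topics.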

[Figure: Recall of the evaluated systems (textan and anonymized systems marked xxxx); vertical axis from 0 to 0.6]

[Figure: Precision of the evaluated systems (textan and anonymized systems marked xxxx); vertical axis from 0 to 0.7]

[Figure: F-measure of the evaluated systems (textan and anonymized systems marked xxxx); vertical axis from 0 to 0.35]

Result analysis

Some of the topics into which the test documents were classified:

| № | Category |
|---|---|
| 1 | Family law |
| 2 | Inheritance law |
| 3 | Water industry |
| 4 | Catering |
| 5 | Inhabitants' consumer services |
| 6 | Rent truck |
| 7 | International space law |
| 8 | Territory in international law |
| 9 | Foreign economic relations: participants |
| 10 | Foreign economic transactions |
| 11 | Economy free trade zones. Customs unions. |

Result analysis

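Recall for each category (columns 1 to 11 are the category numbers from the list above). The best result for each category is shown in bold. All values are percentages.

| System / Category | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| textan | 33 | 34 | 35 | **60** | 46 | 26 | 27 | **98** | **75** | 25 | **100** |
| xxxx | 1 | 0 | 0.2 | 3 | 4 | 0 | 0.9 | 0 | 3 | 0 | 2 |
| xxxx | 0 | 0 | 4.3 | 2.3 | 0 | 5 | 0.9 | 8 | 3 | 0 | 0.8 |
| xxxx | **55** | **86** | **75** | 19 | **59** | **51** | **80** | 0 | 41 | **82** | 0 |
| xxxx | 21 | 39 | 2 | 22 | 15 | 6 | 0 | 1.4 | 0 | 5 | 0 |
| xxxx | 40 | 43 | 16 | 11 | 25 | 23 | 10 | 1.4 | 1.2 | 5 | 0 |
| xxxx | 23 | 4 | 2.5 | 1.1 | 18 | 7 | 0.9 | 0 | 1.2 | 10 | 0 |
| xxxx | 2.7 | 0 | 0 | 0 | 1.5 | 0 | 0 | 0 | 0 | 0 | 0 |
| xxxx | 2.2 | 0 | 0 | 0 | 1.5 | 0 | 0 | 0 | 0 | 0 | 0 |
| xxxx | 37 | 21 | 12 | 22 | 18 | 27 | 51 | 0 | 0 | 0 | 0 |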

Thank you for your attention!