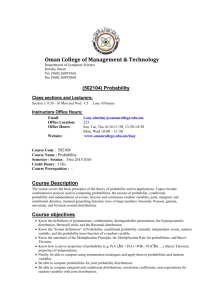

Bayes Theorem and an Application

Stochastic Methods

A Review

Some Terms

Random Experiment: An experiment for which the outcome cannot be predicted with certainty

Each experiment ends in an outcome

The collection of all outcomes is called the sample space, S

An event is a subset of the sample space

Given a random experiment with a sample space, S, a function X that assigns to each element s in S a real number, X(s) = x, is called a random variable.

A boolean random variable is a function from an event to the set {false, true} (or {0.0,1.0}).

Bernoulli/Binomial Experiments

A bernoulli experiment is a random experiment the outcome of which can be classified in one of two mutually exclusive and exhaustive ways

({failure,success}, {false,true}, {0,1}), etc.

A binomial experiment is a bernoulli experiment that:

Is performed n times

The trials are independent

The probability of success on each trial is a contant, p.

The probability of failure on each trial is a constant 1 – p

A random variable counts the number of successes in n trials

Example A

A fair die is cast six times

– Success: a six is rolled

– Failure: all other outcomes

A possible observed sequence is (0,0,1,0): a six has been rolled on the third trial. Call this sequence A.

Since every trial in the sequence is independent, p(A) = 5/6 * 5/6 * 1/6 * 5/6 = (1/6)(5/6) 3

Example A’

Now suppose we want to know the probability of 1 six in any four roll sequence:

(0001),(0010),(0100),(1000)

= 4 * p(A) since there are four ways of selecting 1 position for the 1 success

In General

The number of ways of selecting y positions for y successes in n trials is:

nCy = n! /((n – y)! * y!)

The probability of each of these ways is the probability of success * the probability of failure

– p y * (1-p) n-y

So, if Y is the event of y successes in n trials, p(Y) = n

C y

* p y * (1-p) n-y

This is Exactly the Example

p(Y) = n

C y

* p y * (1-p)

A fair die is cast six times n-y

– Success: a six is rolled

– Failure: all other outcomes

n=4

y = 1

4

C

1

= 4!/(4-1)! * 1! = 4 p y = (1/6) 1

(1-p) 4-1 = (5/6) 3

What is the probability of obtaining

5 heads in 7 flips of a fair coin

The probability of the event X, p(X), is the sum of the probabilities of each individual events

( n

C x

)p x (1-p) n-x

The Event X is 5 successes out of seven tries n = 7, x = 5 p(of a single success) = ½

p(of a single failure) = ½

P(X) = (

7

C

5

)(1/2) 5 (1/2) 2 = .164

The tries can be represented like this:

{0011111}, {0101111} …

There are 21 of the, each with a probability of :(1/2) 5 (1/2) 2

Expectation

If the reward for the occurrence of an event E, with probability p(E), is r, and the cost of the event not occurring, 1-p(E), is c, then the expectation for an event occurring, ex(E), is ex(E) = r x p(E) + c (1-p(E))

Expectation Example

A fair roulette wheel has integers, 0 to 36.

Each player places 5 dollars on any slot.

If the wheel stops on the spot, the player wins $35, else she loses $1

So, p(winning)= 1/37

P(losing) = 36/37 ex(E) = 35(1/37) + (-5)(36/37)

~ $-3.92

Bayes Theorem For Two Events

Recall that we defined conditional probability like this: p ( d | s )

p ( d s ) / p ( s ) 1

We can also express s in terms of d: p ( s | d )

p ( d s ) / p ( d )

2

Multiplying (2) by p(d) we get: p ( d s )

p ( s | d ) p ( d ) 3

Substituting (3) into (1) gives Bayes’ theorem for two events p ( d | s )

p ( s | d ) p ( d ) / p ( s )

If d is a disease and s is a symptom, the theorem tells us that the probability of the disease given the symptom is the probability of the symptom given the disease times the probability of the disease divided by the probability of the symptom

The Chain Rule

𝑝(𝐴

1

∩ 𝐴

2

) = 𝑝 𝐴

1

𝐴

2 𝑝(𝐴

2

)

Since set intersection is commutative 𝑝(𝐴

1

∩ 𝐴

2

) = 𝑝 𝐴

2

𝐴

1 𝑝(𝐴

1

) 𝑙𝑒𝑡 𝑋 = 𝐴 𝑝 𝐴

1

∩ 𝐴

2

1

∩ 𝐴

∩ 𝐴

3

2 then

= 𝑝(𝑋 ∩ 𝐴

3

)

= 𝑝 𝐴

3

= 𝑝 𝐴

3

𝑋 𝑝 𝑋

𝐴

1

∩ 𝐴

2 𝑝(𝐴

1

∩ 𝐴

2

)

= 𝑝 𝐴

3

𝐴

1

∩ 𝐴

2 p(𝐴

1

) 𝑝 𝐴

2

𝐴

1

= p(𝐴

1

) 𝑝 𝐴

2

𝐴

1 𝑝 𝐴

3

𝐴

1

∩ 𝐴

2

Can be generalize for any N sets and proved by induction

Example

Three cards are to be dealt one after another at random and without replacement from a fair deck. What is the probability of receiving a spade, a heart, a diamond in that order

A

1

A

2

A

3

= event of being dealt a spade

= event of being dealt a heart

= event of being dealt a diamond 𝑝 𝐴

1

∩ 𝐴

2

∩ 𝐴

3

= p 𝐴

1 𝑝 𝐴

2

𝐴

1 𝑝 𝐴

3

𝐴

1

∩ 𝐴

2 𝑝 𝐴

1 𝑝 𝐴

2

𝐴

1

13

=

=

52

13

51 𝑝 𝐴

3

𝐴

1

∩ 𝐴

2

=

13

50

Total Probability = 13/52*13/51*13/50

An Application

Def: A probabilistic finite state machine is a finite state machine where each arc is associated with a probability, indicating how likely that path is to be taken. The sum of the probabilities of all arcs leaving a node must sum to 1.0.

A PFSM is an acceptor when one or more states are indicated as the start states and one or more states is indicated as the accept state.

Phones/Phonemes

Def: A phone is a speech sound

Def: A phoneme is a collection of related phones

(allophones) that are pronounced differently in different contexts

So [t] is phoneme.

The [t] sound in tunafish differs from the [t] sound in starfish. The first [t] is aspirated, meaning the vocal chords briefly don’t vibrate, producing a sound like a puff a air. A [t] followed by an [s] is unaspirated

FSA showing the probabilities of allophones in the word “tomato”

More Phonemes

This happens with a [k] and [g]— both are unaspirated, leading to the mishearing of the Jimi Hendrix song:

– ‘Scuse me, while I kiss the sky

– ‘Scuse me, while I kiss this guy

PFSA for the pronunciation of tomatoe

Phoneme Recognition Problem

Computational Linguists have collections of spoken and written language called corpora.

The Brown Corpus and the

Switchboard Corpus are two examples. Together, they contain

2.5 million written and spoken words that we can use as a base

Now Suppose

Our machine identified the phone I

Next the machine has identified the phone ni (as in “knee”)

Turns out that an investigation of the

Switchboard corpus shows 7 words that can be pronounced ni after I

– the,neat, need, new, knee, to, you

How can this be?

Phoneme [t] is often deleted at the end of the word: say “neat little” quickly

[the] can be pronounced like [ni] after in or. Talk like Jersey gangster here or Bob Marley

Strategy

Compile the probabilities of each of the candidate words from the corpora

Applies Baye’s theorem for two events: p ( d | s )

p ( s | d ) p ( d ) / p ( s )

Word Frequency knee 61 the neat

114834

338 need 1417 new 2625

Probability

.000024

.046

.00013

.00056

.001

Apply Simplified Bayes

p (word | [ ni ])

p ([ ni ] | word ) p ( word ) / p ([ ni ])

Since all of the candidtates will be divided by p[ni], we can drop it

But where does p([ni]|word) come from?

Rules of pronunciation variation in English are well-known.

•Run them through the corpora and generate probabilities for each.

•So, for example, that word initial [th] becomes [n] if the preceding word ended in [n] is .15

•This can be done for other pronunciation rules

Result

Word p([ni]|word) p(word)

New .36

Neat .52

.001

.00013

Need .11

Knee 1.0

The 0.0

.00056

.000024

.046

p([ni]|word)p(word)

.00036

.000068

.000062

.000024

0.0

The has a probability of 0.0 since the previous phone was [the] not

[n]

Notice that new seems to be the most likely candidate. This might be resolved at the syntactic level

Another possibility is to look at the probability of two word combinations in the corpora:

“I new” is less probable than “I need”

This is referred to as N-Gram analysis

General Bayes Theorem

Recall Bayes Theorem for two events:

P(A|B) = p(B|A)p(A)/p(B)

We would like to generalize this to multiple events

Example

Suppose:

Bowl A contains 2 red and 4 white chips

Bowl B contains 1 red and 2 white chips

Bowl C contains 5 red and 4 white chips

We want to select the bowls and compute the p of drawing a red chip

Suppose further

P(A) = 1/3

P(B) = 1/6

P(C) = ½

Where A,B,C are the events that A,B,C are chosen

P(R) is dependent upon two probabilities: p(which bowl) then the p(drawing a red chip)

So, p(R) is the union of the probability of mutually exclusive events: p ( R )

p ( A R )

p ( B R )

p ( C R )

p ( A ) p ( R | A )

p ( B ) p ( R | B )

p ( C ) p ( R | C )

( 1 / 3 )( 2 / 6 )

( 1 / 6 )( 1 / 3 )

( 1 / 2 )( 5 / 9 )

4 / 9

Now suppose that the outcome of the experiment is a red chip, but we don’t know which bowl it was drawn from.

So we can compute the conditional probability for each of the bowls.

From the definition of conditional probability and the result above, we know: p ( A | R )

p ( A R ) / p ( R )

p ( A ) p ( R | A ) /( p ( A ) p ( R | A )

p ( B ) p ( R | b )

p ( C ) p ( R | c ))

( 1 / 3 )( 2 / 6 ) /(( 1 / 3 )( 2 / 6 )

( 1 / 6 )( 1 / 3 )

( 1 / 2 )( 5 / 9 ))

2 / 8

We can do the same thing for the other bowls: p(B|R) = 1/8

P(C|R) = 5/8

This accords with intuition. The probability that the red bowl was chosen increases over the original probability, because since it has more red chips, it is the more likely candidate.

The original probabilities are called prior probabilities

The conditional probabilities (e.g., p(A|R)) are called the posterior probabilities.

To Generalize

Let events B

1

,B

2

,…,B m constitute a partition of the sample space S.

That is:

S

B

1

B

2

...

B m and B i

B j

, i

j

Suppose R is an event with B

1 of which > 0,

…B m its prior probabilities, all then R is the union m mutually exclusive events, namely,

R

( B

1

R ) ( B

2

R ) ...

( B m

R )

So p(R)

i m

1 p(B i

R)

i m

1 p ( B i

)( p ( R | B i

) (1)

Now,

If p(A) > 0, we have from the definition of conditional probability that p ( B k

| R )

p ( B k

R ) / p ( R ), k

1,2,..., m (2)

Using equation (1) and replacing p(R) in (2), we have Bayes' theorem p(B k

| R)

p(B k

)P(R | B k

)/( i m

1 p(B i

)(p(R | B i

) , k

1,2,..., m

P(B k

|R) is the posterior probability

Example

Machines A,B,C produce bolts of the same size.

Each machine produces as follows:

Machine A = 35%, with 2% defective

Machine B =25%, with 1% defective

Machine C =40% with 3% defective

Suppose we select one bolt at the end of the day. The probability that it is defective is

: p ( D )

p ( A D )

p ( B D )

p ( C D )

p ( A ) p ( D | A )

p ( B ) p ( D | B )

p ( C ) p ( C | D )

( 35 / 100 )( 2 / 100 )

( 25 / 100 )( 1 / 100 )

( 40 / 100 )( 3 / 100 )

215 / 10 , 000

Now suppose the selected bolt is defective.

The probability that it was produced by machine 3 is: p ( C |

D ) p

( C ) p ( C p ( D

)

| p ( D

C ) /

| C ) /( p ( A ) p ( D p ( D )

|

( 40 / 100 )( 3 / 100 ) /( 215 / 10 , 000 )

120 / 215

A )

p ( B ) p ( D | B )

p ( C ) p ( D | C ))

Notice how the posterior probability increased, once we concentrated on C since C produces both more bolts and a more defective bolts.

Evidence and Hypotheses

We can think of these various events as evidence (E) and hypotheses (H). p(H k

| E)

i m

1 p(E p(E

| H k

)p(H k

)

| H i

)p(H i

)

Where p(H k

|E) is the probability that hypothesis i is true given the evidence, E p(H k

) is the probability that hypothesis I is true overall p(E|H k

) is the probability of observing evidence, E, when H i is true m is the number of hypotheses

Why Bayes Works

The probability of evidence given hypotheses is often easier to determine than the probability of hypotheses given the evidence.

Suppose the evidence is a headache.

The hypothesis is meningitis.

It is easier to determine the number of patients who have headaches given that they have meningitis than it is to determine the number of patients who have meningitis, given that that they have headaches.

Because the population of headache sufferers is

But There Are Issues

When we thought about bowls (hypotheses) and chips (evidence), the probability of a kind of bowl given a red chip required that we compute 3 posterior probabilities for each of three bowls. If we also worked it out for white chips, we would have to compute 3X2 = 6 posterior probabilities.

Now suppose our hypotheses are drawn from the set of m diseases and our evidence from the set of n symptoms, we have to compute mXn posterior probabilities.

But There’s More

Bayes assumes that the hypothesis partitions the set of evidence into disjoint sets.

This is fine with bolts and machines or red chips and bowls, but much less fine with natural phenomena.

Pneumonia and strep probably doesn’t partition the set of fever sufferers (since they could overlap)

That is

We have to use a form of Bayes theorem that that considers any single hypothesis, h i

, in the context of the union of multiple symptoms e i p ( h i

| e

1

e

2

...

e m

)

p ( h i

) p ( e

1

e

2

...

e n

| h i

) /( p ( e

1

e

2

...

e n

)

If n is the number of symptoms and m the number of diseases, this works out to be mxn 2 + n 2 + m pieces of information to collect. In a expert system that is to classify

200 diseases using 2000 symptoms, this is 800,000,000 pieces of information to collect.

Naïve Bayes to the Rescue

Naive Bayes classification assumes that variables are independent.

The probability that a fruit is an apple, given that it is red, round, and firm, can be calculated from the independent probabilities that the observed fruit is red, that it is round, and that it is firm.

The probability that a person has strep, given that he has a fever, and a sore throat, can be calculated from the independent probabilities that a person has a fever and has a sore throat.

In effect, we want to calculate this: p ( h | e

1

e

2

...

e n

)

Since the intersection of sets is a set, Bayes lets us write: p ( h | e

1

e

2

...

e n

)

p ( h ) p (( e

1

e

2

...

e n

) | h ) / p ( e

1

e

2

...

e n

)

Since we only want to classify and the denominator is constant, we can ignore it giving: p ( h | e

1

e

2

...

e n

) K

p ( h ) p (( e

1

e

2

...

e n

) | h )

Independent Events to the Rescue

Assume that all pieces of evidence are independent given a particular hypothesis.

Recall the chain rule: p(A

B ...

N)

p ( A ) p ( B | A ) p ( C | A B ) p ( D | A B C )...

p ( N | A B C D ...

( N

1

)

Since p(B|A) = p(B) and p(C)|A B) = p(C), that is, the events are mutually exclusive, then

p(A

( B ...

N))

p ( A ) p ( B ) p ( C )...

p ( N )

p ( h | e

1

e

2

...

e n

) K

p ( h ) p (( e

1

e

2

...

e n

) | h )

Becomes (with a little hand-waving)

P(h i

1

|h)Xp(e

2

|h i

X…Xp(e n

|h i

)

Leading to the naïve Bayes

Classifier

P(E|H j

) i n

1 p ( E i

| H j

)