Session slides - CIRTL Network


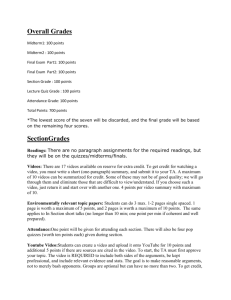

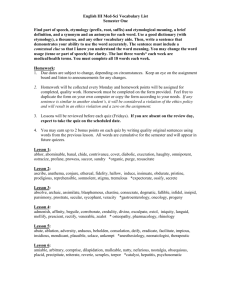

Welcome to the TAR Capstone Series
What to Expect from My TAR Project? Alumni Reflect on the TAR Experience

Speakers:
• Shan He, Post-Doc, Department of Architecture, Iowa State University
• Faizan Zubair, Graduate student, Department of Chemical and Biomolecular Engineering, Vanderbilt University
• Kyle O’Connell, Graduate student, Department of Biology, University of Texas at Arlington

Session begins at 4-5:30 ET/3-4:30 PM CT/2-3:30 MT/1-2:30 PT. Please configure your audio by running the Audio Set Up Wizard: Tools>Audio>Audio Set Up Wizard.

www.cirtl.net

Students’ attitude towards building energy modeling: A pilot study to improve integrated design education
Shan He, Department of Architecture, Iowa State University

Introduction
• Class content:
  – A new energy modeling course in which architecture students develop design arguments based on three aspects of building performance (solar heating, natural ventilation, and daylighting) at different design stages.
• Organization:
  – 3-credit elective that meets once a week, on Fridays, for 2.5 h, combining lecture and lab.
  – As homework, students submit a report on each software tool introduced in the class. At the end of the semester they work on a shared project in groups of 1 to 4 and give a group presentation.
  – 13 architecture students, ranging from undergraduate Year 3 to graduate Year 3.
• Grading:
  – Based on completing work with all 6 software tools introduced and using them to support design decision-making and develop a design argument.

Data Collection Methods
• 1- Questionnaires
  – One pre-survey at the beginning of the semester and one after each session, 7 in total.
  – Likert scale; general attitude towards the class, the software, and the pedagogy.
• 2- Class presentation audio recording
  – At the end of the semester, 1 in total.
  – Records students’ defense of their design arguments.
• 3- Individual interview
  – Close to the end of the semester, only once.
  – Largely follows the questionnaire structure to gain a deeper understanding of students’ answers (especially blank and “not sure” answers).

Timeline

Project Design Timeline
• Oct. 31, 2014: Agree on the research question; continue literature review.
• Nov. 15, 2014: Start IRB and design questionnaires; continue literature review.
• Nov. 30, 2014: Finalize data collection method details; continue literature review.
• Dec. 15, 2014: Finish IRB; continue literature review.
• Jan. 12, 2015: Start TAR with the class.

Survey Implementation Timeline
• Jan. 19, 2015: Introduce the project in class and send out the digital survey.
• Feb. 9, 2015: Switch the digital pre-survey to a printed version and hand it out in class.
• Mar. 27, 2015: Hand out 2 post-surveys in printed version in class.
• Apr. 10, 2015: 1- Hand out the remaining 4 post-surveys in printed version in class; 2- Start individual interviews.
• May 9, 2015: 1- Final presentation; 2- Finish individual interviews.

Conclusion
• 1- Differentiate the class level.
• 2- Change class meetings from once a week to twice a week.
• 3- Integrate with design studio education.

Lessons Learnt for TAR Data Collection
• 1- The limited number of students, low attendance, and off-schedule homework delayed data collection.
  – This may be solved by reorganizing the class meeting time.
• 2- Not being the instructor limits the data collection methods available.
  – Incentives are very important, e.g., a gift card or an optional assignment.
• 3- Not being the instructor or TA can cause technical issues with sharing data, e.g., accessing students’ homework.
• 4- Many “undecided” answers on the software evaluation.
  – A follow-up interview is planned. Next semester, I may be able to collect information from the design studio, using students enrolled in Arch 351 as a control group and those who are not enrolled for comparison.
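The questionnaire and interview plan above relies on blank and “not sure” answers to choose topics for the individual interviews. The following is a minimal Python sketch of that bookkeeping. It assumes each student’s responses are stored as a mapping from item to Likert label. The label set, the 1 to 5 coding, the item names, and the student IDs are all hypothetical and are not taken from the study.

```python
# Hypothetical sketch: code Likert answers and flag blank / "not sure"
# answers for follow-up in individual interviews.
# ASSUMPTION: labels, 1-5 scores, item names and student IDs are illustrative.
from collections import Counter

LIKERT_SCORES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}
UNDECIDED = {"", "not sure"}  # answers worth exploring in an interview


def code_response(answer):
    """Return the 1-5 score for a Likert label, or None if blank/undecided."""
    key = answer.strip().lower()
    if key in UNDECIDED:
        return None
    return LIKERT_SCORES[key]  # raises KeyError for an unexpected label


def interview_followups(responses):
    """Map each student ID to the items they left blank or marked 'not sure'."""
    followups = {}
    for student_id, answers in responses.items():
        undecided_items = [item for item, answer in answers.items()
                           if code_response(answer) is None]
        if undecided_items:
            followups[student_id] = undecided_items
    return followups


def item_summary(responses, item):
    """Count coded scores for one item across students (None = undecided)."""
    return Counter(code_response(answers.get(item, ""))
                   for answers in responses.values())


if __name__ == "__main__":
    # Hypothetical responses from two students to two questionnaire items.
    responses = {
        "S01": {"software_useful": "Agree", "pace": "Not sure"},
        "S02": {"software_useful": "", "pace": "Strongly agree"},
    }
    print(interview_followups(responses))
    print(item_summary(responses, "software_useful"))
```

Coding undecided answers as `None` rather than as a neutral 3 keeps them out of any averages. It also leaves them visible as candidates for interview follow-up.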
Future Work
• 1- Assess students’ understanding of software input and output.
• 2- Design a new survey for all architecture students from undergraduate Year 3 to graduate Year 3.
• 3- Focus group and individual interviews.

CIRTL TAR Capstone Series, October 5th 2015, 3 pm CT

Promoting Active Learning in Engineering Classrooms
Faizan Zubair¹, Cynthia Brame², Paul Laibinis¹
¹Chemical & Biomolecular Engineering; ²Center for Teaching

• Online modules developed to address a teaching and learning “problem”
• Grounded in an understanding of how people learn
• Teaching-as-research: assessment of module impact on student learning
First semester: Design and develop online learning module(s)
Second semester: Implement and assess online learning module(s)

Bloom’s Taxonomy: The Hierarchy of Knowledge
The goal is to enable higher-level learning in class through the design and application of new instructional materials.

Target Classroom: Undergraduate Chemical Engineering Thermodynamics
• Student understanding of thermodynamic efficiency is limited.
  – Students can apply equations for simple systems.
  – For a system of components, students show a lack of understanding.
• We address this disconnect by designing and applying new instructional tools that help enable higher-level learning in the classroom.

Socrative: A versatile platform for interactive teaching
• Real-time feedback
• Short as well as discussion-based questions
• Easy to share results with the class
• Good for group work

Process for Developing Videos
• Recording software:
  – Camtasia
  – Screencast-O-Matic
• VideoPad for video editing
• Wacom board
• Upload the video to YouTube
• Use HapYak to integrate questions

Building Analogies for Thermodynamic Efficiency (Videos 1 & 2)
• Thermodynamic system: electrical energy, internal energy, heat losses to the surroundings
• Currency analogy: market, transaction costs (example: broker fees)

Compute Efficiency of a Unit Component (Video 3)
• Electrical energy supplied: 25 W of power for 60 s, raising the temperature from 20 °C to 50 °C, with heat losses to the surroundings.
• Efficiency:

$$\text{Efficiency} = \frac{\Delta\,\text{Internal Energy}}{\text{Electrical Energy supplied}}$$

Compute Efficiency for a System of Components (Video 4)
• Synchronized audio and video
• Step-wise approach to problem solving
• Assessments for students

Assessing the Effectiveness of the Module (Jan to Apr)
• Implementation: start of the Spring 2015 semester
• Formative assessments as part of the videos
• Assessments:
  – PS #3 (Feb 2nd): Monetary Analogy
  – PS #4 (Feb 9th): Efficiency of the Heating Element
  – Quiz 4 (Mar 9th)
  – PS #6 (Mar 11th): Efficiency of the Rankine Cycle
  – Exam II questions (Mar 19th)
  – Student Attitude Survey (Mar 20th)

Problem on the Homework Assignment
View the video at https://www.youtube.com/watch?v=uSukYclkQqo&feature=youtu.be&hd=1 and answer the following questions:
For the process described in the video, replacing a 100% efficient turbine with an 80% efficient turbine changed the overall process efficiency from 36.6% to 29.36%. Determine the percent increase or decrease in the rate of cooling demand for the process caused by the use of the 80% efficient turbine if:
a) The rate of heat provided to the process remained the same.
b) The net rate of power generation remained the same.
c) Determine the overall process efficiency if instead the pump was 80% efficient and the turbine was 100% efficient. Is your result greater than, less than, or the same as the 29.36% in the video?
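The two efficiency ideas above can be worked numerically. The Video 3 definition needs the change in internal energy, but the slides do not give the mass or heat capacity of what is heated. This sketch therefore assumes, hypothetically, 0.010 kg of water with a constant specific heat of 4184 J/(kg·K). For homework parts a) and b), it assumes a steady-state cycle in which the cooling demand equals the heat rejected, Q_out = Q_in − W_net. Part c) needs cycle data from the video and is not attempted here.

```python
# Sketch of the efficiency calculations discussed in the slides.
# ASSUMPTIONS (not from the slides):
#   - Video 3: the heated body is water with a hypothetical mass of 0.010 kg
#     and constant specific heat 4184 J/(kg*K).
#   - Homework: steady-state cycle, so the cooling demand (heat rejected)
#     is Q_out = Q_in - W_net, and efficiency eta = W_net / Q_in.


def heating_element_efficiency(power_w, time_s, mass_kg, cp_j_per_kg_k,
                               t_initial_c, t_final_c):
    """Efficiency = change in internal energy / electrical energy supplied."""
    electrical_energy_j = power_w * time_s  # e.g. 25 W * 60 s = 1500 J
    delta_u_j = mass_kg * cp_j_per_kg_k * (t_final_c - t_initial_c)
    return delta_u_j / electrical_energy_j


def cooling_demand_change(eta_old, eta_new, hold="heat_in"):
    """Fractional change in heat rejected when cycle efficiency changes.

    hold="heat_in":   Q_in fixed,  so Q_out = Q_in * (1 - eta)
    hold="net_power": W_net fixed, so Q_out = W_net * (1 / eta - 1)
    """
    if hold == "heat_in":
        ratio = (1 - eta_new) / (1 - eta_old)
    elif hold == "net_power":
        ratio = (1 / eta_new - 1) / (1 / eta_old - 1)
    else:
        raise ValueError("hold must be 'heat_in' or 'net_power'")
    return ratio - 1


if __name__ == "__main__":
    eff = heating_element_efficiency(25, 60, 0.010, 4184, 20, 50)
    print(f"Heating element efficiency: {eff:.3f}")  # 0.837 with the assumed mass
    print(f"(a) {cooling_demand_change(0.366, 0.2936, 'heat_in'):+.1%}")    # +11.4%
    print(f"(b) {cooling_demand_change(0.366, 0.2936, 'net_power'):+.1%}")  # +38.9%
```

Holding net power fixed produces the larger increase. With a lower efficiency, more heat must be supplied for the same work, and all of that extra heat has to be rejected.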
Pop Quiz Results
Results didn’t show a difference in student learning early in the semester.

| Year | Quiz 4 total (10 points) |
|---|---|
| 2013 | 6.4 ± 2.3 |
| 2015 | 6.1 ± 2.3 |

Summative Assessment: Test II Results
Student performance on the Power Generation Cycle improved in 2015.

| Score range | 2011 | 2015 |
|---|---|---|
| 80 - 100 | 43% | 63% |
| 50 - 80 | 29% | 14% |
| 0 - 50 | 28% | 23% |
| Average score | 65.0 | 73.0 |

Summative Assessment: Test II Results
Student performance on the Refrigeration Cycle improved in 2015.

| Score range | 2010 | 2015 |
|---|---|---|
| 80 - 100 | 12% | 54% |
| 50 - 80 | 30% | 31% |
| 0 - 50 | 58% | 15% |
| Average score | 54.1 | 73.9 |

Student Perception Survey I
Students found videos to be an effective learning strategy.

| Statement | Strongly Agree | Agree | Neutral | Disagree | Strongly Disagree |
|---|---|---|---|---|---|
| The videos were an effective way to reinforce thermodynamic concepts | 20% | 68% | 10% | 2% | 0% |
| It was helpful to watch the videos on my own time and pace | 32% | 58% | 8% | 2% | 0% |
| The material covered in the videos related well to concepts covered in class and problem sets | 28% | 58% | 14% | 0% | 0% |

“I watched at least one of the videos multiple times to help me understand a particular concept”: 60% of the students said yes!

Student Perception Survey II
Students supported the use of videos for other classes.

| Statement | Strongly Agree | Agree | Neutral | Disagree | Strongly Disagree |
|---|---|---|---|---|---|
| It will be nice to have more videos to understand other thermodynamic concepts | 44% | 48% | 8% | 0% | 0% |
| It will be nice to have videos to illustrate concepts in other chemical engineering classes | 38% | 54% | 8% | 0% | 0% |

Suggested topics for Thermodynamics: departure functions, fugacity, equations of state, generalized correlations.

Conclusions
• Challenges
  – Time management
    • TA: balance research with teaching
    • Students: designed so that no additional time is required of students
    • Faculty: ?
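The perception surveys above report five Likert percentages per statement. A common one-number summary is the share who chose “Strongly Agree” or “Agree”. The following sketch computes that share from the reported percentages. It also checks that each row sums to about 100%, allowing for rounding. The shortened statement labels are paraphrases introduced here for readability.

```python
# Share of respondents choosing "Strongly Agree" or "Agree" for each survey
# statement, using the percentages reported on the Student Perception slides.
# Statement labels are abbreviated paraphrases (not the original wording).
# Tuple order: Strongly Agree, Agree, Neutral, Disagree, Strongly Disagree (%).
SURVEY = {
    "Videos reinforced thermodynamic concepts": (20, 68, 10, 2, 0),
    "Helpful to watch videos at own time and pace": (32, 58, 8, 2, 0),
    "Videos related well to class and problem sets": (28, 58, 14, 0, 0),
    "More videos for other thermodynamic concepts": (44, 48, 8, 0, 0),
    "Videos for other chemical engineering classes": (38, 54, 8, 0, 0),
}


def percent_agree(row):
    """Combined Strongly Agree + Agree percentage for one statement."""
    strongly_agree, agree, *_ = row
    return strongly_agree + agree


def check_totals(survey, tolerance=1):
    """Return statements whose percentages do not sum to ~100 (rounding)."""
    return [statement for statement, row in survey.items()
            if abs(sum(row) - 100) > tolerance]


if __name__ == "__main__":
    for statement, row in SURVEY.items():
        print(f"{percent_agree(row):3d}%  {statement}")
    print("Rows not summing to ~100%:", check_totals(SURVEY))
```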
  – Learning curve: access to a technology specialist
• Content-specific deployment
  – Helpful to know the material well
  – Target the instructional materials to challenging concepts and tie them to homework
  – Knowing the professor helps; recognizes research demands
• Advice
  – Don’t overcommit yourself

Acknowledgements
• Center for Teaching: Cynthia Brame, Assistant Director; Rhet McDaniel, Educational Technologist
• BOLD Cohort Group: Dave Caudel, Emilianne McCranie, Udo Chinyere, Ty McCleery, Mary Keithly
• Funding: National Science Foundation grant DUE-1231286 to the CIRTL Network

Assessing Peer Instruction in an Undergraduate Biology Classroom
Kyle O’Connell, Department of Biology, University of Texas at Arlington

Questions
1) What effects does Peer Instruction (PI) have on student learning?
   a) Do males and females perform differently with PI?
   b) Are there differences in attendance?
   c) Are there differences between quiz and exam performance?
2) What is the relationship between quiz and exam performance?

Does Peer Instruction Improve Student Learning Outcomes?
• Peer Instruction: experimental group
• Traditional quiz: control group
• Same course, but two different sections
• Compare quiz and exam performance

Question 1: What effects does Peer Instruction have on student learning?
a) Are there differences in performance between the sexes? t-test = .801; no difference observed.
b) Are there differences in attendance? t-test = .401; no difference between sections.
Did PI help Section 1 do better on quiz questions than Section 2? t-test = .574
Did PI help Section 1 do better on exam questions than Section 2? t-test = .367

What is the relationship between quiz and exam scores?
• Correlation coefficient = .725
• Correlation coefficient = .289

Conclusions
1. PI quizzes influenced exam performance.
2. Traditional quizzes had little effect on exam scores.
However, PI did not influence quiz scores, exam scores, or attendance.
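The Peer Instruction results above rely on two statistics: a two-sample t-test comparing the sections and a correlation between quiz and exam scores. The slides do not say which t-test variant was used or whether the reported values are t statistics or p-values. The sketch below uses Welch’s unequal-variance t-test as one reasonable choice, which is an assumption. It uses only the Python standard library. A p-value additionally requires the t distribution, for example via `scipy.stats.ttest_ind(a, b, equal_var=False)`. All scores in the usage example are hypothetical.

```python
# Sketch of the two statistics used in the Peer Instruction comparison.
# ASSUMPTION: Welch's t-test is an illustrative choice; the slides do not
# name the variant. Scores below are hypothetical, not study data.
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    se2_a = variance(sample_a) / n_a  # squared standard error, section A
    se2_b = variance(sample_b) / n_b  # squared standard error, section B
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


def pearson_r(x, y):
    """Pearson correlation coefficient between paired scores (e.g. quiz vs exam)."""
    if len(x) != len(y):
        raise ValueError("x and y must be paired (same length)")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical exam scores for two sections.
    pi_section = [78, 85, 69, 92, 74, 88]
    traditional_section = [72, 80, 75, 86, 70, 79]
    t, df = welch_t(pi_section, traditional_section)
    print(f"Welch t = {t:.3f}, df = {df:.1f}")

    # Hypothetical paired quiz and exam scores for one section.
    quiz = [6, 8, 5, 9, 7, 8]
    exam = [70, 84, 66, 90, 75, 81]
    print(f"Pearson r = {pearson_r(quiz, exam):.3f}")
```

Welch’s version does not assume equal variances in the two sections, which is a safer default when section sizes or spreads differ. With equal variances it gives nearly the same result as Student’s t-test.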
Upcoming Events
All-Network Mid-Project Presentations
November 16, 4-5:30 ET/3-4:30 PM CT/2-3:30 MT/1-2:30 PT
Presenters: Current TAR students throughout the CIRTL Network. Stay tuned for a call for speakers!
To sign up to hear about these and other CIRTL events, email info@cirtl.net.