jan-sigir-tutorial - UC Berkeley School of Information

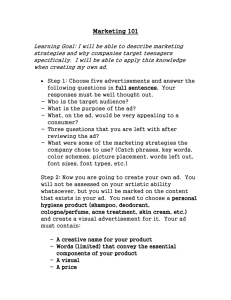

Web Search Tutorial
Jan Pedersen and Knut Magne Risvik
Yahoo! Inc. Search and Marketplace

Agenda
• A Short History
• Internet Search Fundamentals
  – Web Pages
  – Indexing
• Ranking and Evaluation
• Third Generation Technologies

A Short History

Precursors
• Information Retrieval (IR) Systems
  – online catalogs, and News
    • Limited scale, homogeneous text
  – recall focus
  – empirical
    • Driven by results on evaluation collections
  – free text queries shown to win over Boolean
• Specialized Internet access
  – Gopher, WAIS, Archie
    • FTP archives and special databases
    • Never achieved critical mass

First Generation Systems
• 1993: Mosaic opens the WWW
  – 1993 Architext/Excite (Stanford/Kleiner Perkins)
  – 1994 Webcrawler (full text Indexing)
  – 1994 Yahoo! (human edited Directory)
  – 1994 Lycos (400K indexed pages)
  – 1994 Infoseek (subscription service)
• Power systems
  – 1994 AltaVista (DEC Labs, advanced query syntax, large index)
  – 1996 Inktomi (massively distributed solution)

Second Generation Systems
• Relevance matters
  – 1998 Direct Hit (clickthrough based re-ranking)
  – 1998 Google (link authority based re-ranking)
• Size matters
  – 1999 FAST/AllTheWeb (scalable architecture)
• The user matters
  – 1996 Ask Jeeves (question answering)
• Money matters
  – 1997 Goto/Overture (pay-for-performance search)

Third Generation Systems
• Market consolidation
  – 2002 Yahoo! purchases Inktomi
  – 2003 Overture purchases AV and FAST/AllTheWeb
  – 2003 MSN announces intention to build a Search Engine
• Search matures
  – $2B market projected to grow to $6B by 2005
  – required capital investment limits new players
    • Gigablast?
  – traffic focused in a few sites
    • Yahoo!, MSN, Google, AOL
  – consumer use driven by Brand marketing

Web Search Fundamentals

Web Fundamentals
[Figure: User, Browser, and Web Server exchanging a URL, an HTTP Request, and an HTML Page; labeled steps: Page Serving, Page Rendering, Hyper Links]

Definitions
• URLs refer to WWW content
  – referential integrity is not guaranteed
  – roughly 10% of URLs go 404 every month
• HTTP requests fetch content from a server
  – stateless protocol
  – cookies provide partial state
• Web servers generate HTML pages
  – can be static or dynamic (output of a program)
  – markup tags determine page rendering
• HTML pages contain hyperlinks
  – link consists of a URL and anchor text

URLs
• URL Definition
  – http://host:port/path;params?query#fragment
    • fragment is not considered part of the URL
    • params are considered part of the path
  – params are not frequently used
• Examples
  – http://www.cnn.com/
  – http://ad.doubleclick.net/jump;sz=120x60;ptile=6;ord=6981062172
  – http://us.imdb.com/Title?0068646
  – http://www.sky.com/skynews/article/0,,3000012261027,00.html

Dynamic URLs
• URLs with Dynamic Components
  – Path (including params) and host are not dynamic
    • If you change the path and/or host you will get a 404 or similar error
  – Query is dynamic
    • If you change the query part, you will get a valid page back
    • source of potentially infinite number of pages
• Examples
  – http://www.cnn.com/index.html?test
    • Returns a valid 200 page, even if test is not a valid query term
  – http://www.cnn.com/index.html;test
    • Returns a 404 error page
• Not all URLs Follow this Convention:
  – http://www.internetnews.com/xSP/article.php/1378731

Dynamic Content
• Content Depends on External (to URL) Factors
  – Cookies
  – IP
  – Referrer
  – User-Agent
• Examples
  – http://my.yahoo.com/
  – http://forum.doom9.org/forumdisplay.php?s=af9ddb31710c7b314b75262c1031d8af&forumid=65
• Dynamic URLs and Dynamic Content are Orthogonal
  – static URLs can refer to dynamic content
  – dynamic URLs can refer to static content

HTML Sample
<html>
<head>
<title>Andreas S. WEIGEND, PhD</title>
</head>
<body>
<blockquote><font face="Verdana,Tahoma,Arial" size=2>
<h2><font size="4" face="Verdana, Arial, Helvetica, sans-serif">Andreas S. WEIGEND, </font><font size="3" face="Verdana, Arial, Helvetica, sans-serif">Ph.D.</font><font face="Verdana, Arial, Helvetica, sans-serif"><br>
<font size="2">Chief Scientist, Amazon.com</font></font></h2>
</font>
<blockquote>
<p><font face="Verdana, Arial, Helvetica, sans-serif" size="2"><i>&quot;Sophisticated algorithms have always been a big part of creating the Amazon.com customer experience.&quot; (Jeff Bezos, Founder and CEO of Amazon.com)</i></font></p></blockquote>
<p><font face="Verdana, Arial, Helvetica, sans-serif" size="2"> <a href="http://www.amazon.com">Amazon.com</a> might be the world's largest laboratory to study human behavior and decision making. It for sure is a place with very smart people, with a healthy attitude towards data, measurement, and modeling. I am responsible for research in machine learning and computational marketing. Applications range from real-time predictions of customer intent and satisfaction, to personalization and long-term optimization of pricing and promotions.<font size="-2"> [<a href="http://www.weigend.com/amazonjobs.html" onclick="window.open(this.href);return false;">Job openings.</a>] </font> I'm also the point person for academic relations.</font></p>
</blockquote>
<font face="Verdana,Tahoma,Arial" size=2>
<h3> <font face="Verdana, Arial, Helvetica, sans-serif"><i><font size="3"> Schedule Summer 2003</font></i></font></h3>
</font>

Rendered Page
[Figure: the HTML sample as rendered in a browser]

WWW Size
• How many pages are in the WWW?
  – Lawrence and Giles, 1999: 800M pages with most pages not indexed
  – Dynamically generated pages imply effective size is infinite
• How many sites are registered?
  – Churn due to SPAM

Crawling
• Search Engine robot
  – visits every page that will be indexed
  – traversal behavior depends on crawl policy
• Index parameterized by size and freshness
  – freshness is time since last revisit if page has changed
• Batch vs Incremental
  – Batch crawl has several, distinct, batch processing stages
    • discover, grab, index
    • AV discovery phase takes 10 days, grab another 10, etc.
    • sharp freshness curve
  – Incremental crawl
    • crawler constantly operates, intermixing discovery with grab
    • mild drop-off in freshness

Typical Crawl/Build Architecture
[Figure: components shown: Internet, Seed List, Crawl, URL DB, Pagefiles, Grab, Discovery, Alias DB, Index Build, Anchor Text DB, Pagefiles, Filtered Pagefiles, Index, Connectivity DB, Duplicates DB]

Relative Size
[Figure: relative index sizes, from SearchEngineShowdown]
• Google claims 3B
• Fast claims 2.5B
• AV claims 1B

Freshness
[Figure: index freshness, from Search Engine Showdown]
• Note hybrid indices; subindices with differing update rates

Query Language
• Free text with implicit AND and implicit proximity
  – Syntax-free input
• Explicit Boolean
  – AND (+)
  – OR (|)
  – AND NOT (-)
• Explicit Phrasing (“”)
• Filters
  – domain: host:
  – link: url:
  – filetype: title:
  – image: anchor:

Query Serving Architecture
[Figure: the query “travel” passes from a Load Balancer to front ends FE1 … FE8, to query integrators QI1 … QI8, and on to a grid of index nodes Node1,1 … Node4,N]
• Index divided into segments each served by a node
• Each row of nodes replicated for query load
• Query integrator distributes query and merges results
• Front end creates an HTML page with the query results

Query Evaluation
[Figure: the query “travel” enters the Query Evaluator (ranking, display), which reads two tables: Terms to Posting, and Doc ID to Doc Data]
• Index has two tables:
  – term to posting
  – document ID to document data
• Postings record term occurrences
  – may include positions
• Ranking employs posting
  – to score documents
• Display employs document info
  – fetched for top scoring documents

Scale
• Indices typically cover billions of
pages
  – terabytes of data
• Tens of millions of queries served every day
  – translates to hundreds of queries per second
• Users require rapid response
  – query must be evaluated in under 300 msecs
• Data Centers typically employ thousands of machines
  – Individual component failures are common

Search Results Page
• Blended results
  – multiple sources
• Relevance ranked
• Assisted search
  – Spell correction
• Specialized indices
  – via Tabs
• Sponsored listing
  – monetization
• Localization
  – Country language experience

Relevance Evaluation

Relevance is Everything
• The Search Paradigm: 2.4 words, a few clicks, and you’re done
  – only possible if results are very relevant
• Relevance is ‘speed’
  – time from task initiation to resolution
  – important factors:
    • Location of useful result
    • UI Clutter
    • latency
• Relevance is relative
  – context dependent
    • e.g. ‘football’ in the UK vs the US
  – task dependent
    • e.g. ‘mafia’ when shopping vs researching

Relevance is Hard to Measure
• Poorly defined, subjective notion
  – depends on task, user context, etc.
• Analysts have Focused on Easier-to-Measure Surrogates
  – index size, traffic, speed
  – anecdotal relevance tests
    • e.g.
Vanity queries
• Requires Survey Methodology
  – averaged over queries
  – averaged over users

Survey Methodologies
• Internal expert assessments
  – assessments typically not replicated
  – models absolute notion of relevance
• External consumer assessments
  – assessments heavily replicated
  – models statistical notion of relevance
• A/B surveys
  – compare whole result sets
  – visual relevance plays a large role
• URL surveys
  – judge relevance of particular URL for query

A/B Test Design
• Strategy:
  – Compare two ranking algorithms by asking panelists to compare pairs of search results
• Queries:
  – 1000 semi-random queries, filtered for family-friendly, understandability
    • Users can select from a list of 20 queries
• URLs
  – Top 10 search results from 2 algorithms
• Voting:
  – 5 point scale, 7 replications
  – Each user rates 6 queries, one of which is a control query
    • Control query has AV results on one side, random URLs on the other
    • Reject voters who take less than 10 seconds to vote

[Figure: Query selection screen]

[Figure: Rating screen]

A/B Test Scoring
• Test ran until we had 400 decisive votes
  – Margin of error = 5%
• Compute:
  – Majority Vote: count of queries where more than half of the users said one engine was “somewhat better” or “much better”
  – Total Vote: count of users that rated a result set “somewhat better” or “better” for each engine
• Compare percentages
  – test if one system ‘out votes’ the other
  – determine if the difference is statistically significant

Results
• Control Votes (error bar = 1/sqrt(160) = 7.9%)

| | Majority (queries with winner) | Unanimous (queries with winner) | “a little better” (all accepted votes) | “much better” (all accepted votes) |
|---|---|---|---|---|
| Good | 98.1% | 51.5% | 24.4% | 59.1% |
| Bad | 0% | 0% | 4.7% | 1.7% |
| Same | 1.9% | 0.6% | 10.1% | |

The “Same” row has a single value under All accepted votes.

• Test One: AV vs SE1 (error bar = 1/sqrt(400) = 5%)

| | Majority (queries with winner) | Unanimous (queries with winner) | “a little better” (all accepted votes) | “much better” (all accepted votes) |
|---|---|---|---|---|
| AltaVista | 37.6% | 6.1% | 24.4% | 11.0% |
| SE1 | 37.3% | 8.0% | 22.2% | 10.7% |
| Same | 25.1% | 2.6% | 31.7% | |

Results
• Test Two: AV vs SE2 (with UI issue)
(columns: Queries with winner, then All accepted votes)

| | Majority (queries with winner) | Unanimous (queries with winner) | “a little better” (all accepted votes) | “much better” (all accepted votes) |
|---|---|---|---|---|
| AltaVista | 58.5% | 13.4% | 26.5% | 16.3% |
| SE2 | 28.1% | 4.6% | 21.8% | 8.9% |
| Same | 13.4% | 0.9% | 26.4% | |

• Test Three: SE1 vs SE2

| | Majority (queries with winner) | Unanimous (queries with winner) | “a little better” (all accepted votes) | “much better” (all accepted votes) |
|---|---|---|---|---|
| SE1 | 35.4% | 4.7% | 28.2% | 13.2% |
| SE2 | 40.6% | 4.1% | 29.0% | 15.6% |
| Same | 24.0% | 1.9% | 13.8% | |

Ranking
• Given 2.4 query terms, search 2B documents and return 10 highly relevant in 300 msecs
  – Problem queries:
    • Travel (matches 32M documents)
    • John Ellis (which one)
    • Cobra (medical or animal)
• Query types
  – Navigational (known item retrieval)
  – Informational
• Ingredients
  – Keyword match (title, abstract, body)
  – Anchor Text (referring text)
  – Quality (link connectivity)
  – User Feedback (clickrate analysis)

The Components of Relevance
• First Generation:
  – Keyword matching
    • Title and abstract worth more
• Second Generation:
  – Computed document authority
    • Based on link analysis
  – Anchor text matching
    • Webmaster voting
• Development Cycle:
  – Tune Ranking
  – Evaluate Metrics

Connectivity

Connectivity Goals
• An indicator of authority
  – As measured by static links
  – Each link is a ‘vote’ in favor of a site
  – Webmasters are the voters
• Not all links are equal
  – Links from authoritative sites are worth more
    • Introduces an interesting circularity
  – Votes from sites with many links are discounted
    • Use your vote wisely
  – Discount navigational links
• Not all links are editorial
  – Account for link SPAM

Connectivity Network
• What is authority score for nodes A and B?
• Inlink computes:
  – A = 3
  – B = 2
• Page Rank Computes
  – A = .225
  – B = .295
[Figure: link network with labeled nodes A and B]

Definitions
• Connectivity Graph
  – Nodes are pages (or hosts)
  – Directed edges are links
  – Graph edges can be represented as a transition matrix, A
    • The ith row of A represents the links out from node i
• Authority score
  – Score associated with each node
  – Some function of inlinks to node and outlinks from node
    • Simplest authority score is inlink count

Page Rank (Without Random Jump)
[Figure: example network with edge weights of 1/2, node scores of .1, and scores A = .25, B = .3]
• Contribution averaged over all outlinks
• Node score is the sum of contributions
  $r(i) = \sum_{j : j \to i} \frac{r(j)}{e(j)}$
• Fixed point equation
  $r = A r$
  – If A is normalized
    • Each row sums to 1.0

Page Rank Implications
• A is a stochastic matrix
  – r(i) can be interpreted as a probability
    • Suppose a surfer takes an outlink at random
    • r(i) is the long run probability of landing at a particular node
  – Solution to fixed point equation is the principal eigenvector
    • principal eigenvalue is 1.0
• Solution can be found by iteration
  – If $A r = r$ then $A^n r = r$
  – Start with random initial value for r
  – Iterate multiplication by A
    • Contribution of smaller eigenvalues will drop out
  – Final value is a good estimate of the fixed point solution

Page Rank (with random jump)
[Figure: example network with random jump probability $\epsilon = 0.1$ and edge weights of 1/2]
• What’s the score for a node with no in-links?
• Revised equation, with $\epsilon$ the random jump probability and $N$ the number of nodes
  $r(i) = \frac{\epsilon}{N} + (1 - \epsilon) \sum_{j : j \to i} \frac{r(j)}{e(j)}$
• Fixed point equation
  $r = (\epsilon U + (1 - \epsilon) A)\, r$, where $U_{i,j} = \frac{1}{N}$
• Probability interpretation
  – As before with chance $\epsilon$ of jumping randomly
[Figure: example network scores with random jump: A = .225, B = .293]

Eigenrank
• Separates internal from external links
  – Internal transition matrix I
  – External transition matrix E
• Introduces a new parameter
  – $\epsilon$ is the random jump probability
  – $\alpha$ is the probability of taking an internal link
  – $(1 - \epsilon - \alpha)$ is the probability of taking an external link

Eigenrank
[Figure: example network with $\epsilon = 0.1$, $\alpha = 0.1$; scores A = .2, B = .202]
• Revised equation
  $r(i) = \frac{\epsilon}{N} + \alpha \sum_{j : j \to i,\ \text{internal}} \frac{r(j)}{e(j)} + (1 - \epsilon - \alpha) \sum_{j : j \to i,\ \text{external}} \frac{r(j)}{e(j)}$
• Fixed point equation
  $r = (\epsilon U + \alpha I + (1 - \epsilon - \alpha) E)\, r$, where $U_{i,j} = \frac{1}{N}$
• Probability interpretation
  – $\epsilon$ chance of random jump
  – $\alpha$ chance of internal link
  – $(1 - \epsilon - \alpha)$ chance of external link

Computational Issues
• Nodes with no outlinks
  – Transition matrix with zero row
    • Internal or external
  – Leave out of computation(?)
  – Redistribute mass to random jump(?)
• Currently mass is redistributed
  – Complex formula that prefers external links

Kleinberg
• Two scores
  – Authority score, a
  – Hub score, h
• Fixed Point equations
  – Authority: $a = A h = A A^{T} a$
  – Hub: $h = A^{T} a = A^{T} A h$
  – Principal eigenvectors are solutions

SPAM
• Manipulation of content purely to influence ranking
  – Dictionary SPAM
  – Link sharing
  – Domain hi-jacking
  – Link farms
• Robotic use of search results
  – Meta-search engines
  – Search Engine optimizers
  – Fraud

Third Generation Technologies

Handling Ambiguity
[Figure: Results for query: Cobra]

Impression Tracking
Incoherent URLs are those that receive high rank for a large diversity of queries. Many incoherent URLs indicate SPAM or a bug (as in this case).

Clickrate Relevance Metric
Average highest rank clicked perceptibly increased with the release of a new rank function.
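
The Page Rank iteration with random jump from the connectivity slides can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation behind the slides: the function name `pagerank`, the four-node graph, and the choice to spread the score of nodes without outlinks uniformly over all nodes are hypothetical. With $\epsilon$ as the random jump probability and $e(j)$ the number of outlinks of node $j$, it repeats the update $r(i) = \epsilon/N + (1-\epsilon)\sum_{j \to i} r(j)/e(j)$ from a uniform starting vector.

```python
def pagerank(outlinks, epsilon=0.1, iterations=100):
    """Iterate r(i) = eps/N + (1 - eps) * sum over j->i of r(j)/e(j).

    outlinks maps every node to the list of nodes it links to; every
    link target must also appear as a key (hypothetical input format).
    """
    nodes = sorted(outlinks)
    n = len(nodes)
    # Start from a uniform distribution; any vector summing to 1 works.
    r = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        # Every node first receives its share of the random jump.
        nxt = {v: epsilon / n for v in nodes}
        for j in nodes:
            targets = outlinks[j]
            if targets:
                # Contribution of j is averaged over all of its outlinks.
                share = (1.0 - epsilon) * r[j] / len(targets)
                for i in targets:
                    nxt[i] += share
            else:
                # Assumption: a node with no outlinks hands its mass
                # back uniformly, like an extra random jump.
                share = (1.0 - epsilon) * r[j] / n
                for i in nodes:
                    nxt[i] += share
        r = nxt
    return r


# Hypothetical example graph: D links out but nothing links to D.
graph = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
scores = pagerank(graph)
for node in sorted(scores, key=scores.get, reverse=True):
    print(node, round(scores[node], 4))
```

In this toy graph node D has no in-links, so it only ever receives the random jump share and its score is $\epsilon/N = 0.1/4 = 0.025$. The scores sum to 1 at every step, which matches the probability interpretation of $r$.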

User Interface
• Ranked result lists
  – Document summaries are critical
    • Hit highlighting
    • Dynamic abstracts
    • url
  – No recent innovation
    • Graphical presentations not well fit to the task
• Blending
  – Predefined segmentation
    • e.g. Paid listing
  – Intermixed with results from other sources
    • e.g. News

Future Trends
• Question Answering
  – WWW as language model
    • Enables simple methods
    • e.g. Dumais et al. (SIGIR 2002)
• New contexts
  – Ubiquitous Searching
    • Toolbars, desktop, phone
  – Implicit Searching
    • Computed links
• New Tasks
  – E.g. Local/Country Search

Bibliography
• Modeling the Internet and the Web: Probabilistic Methods and Algorithms by Pierre Baldi, Paolo Frasconi, and Padhraic Smyth. John Wiley & Sons; May 28, 2003.
• Mining the Web: Analysis of Hypertext and Semi Structured Data by Soumen Chakrabarti. Morgan Kaufmann; August 15, 2002.
• The Anatomy of a Large-scale Hypertextual Web Search Engine by S. Brin and L. Page. 7th International WWW Conference, Brisbane, Australia; April 1998.
• Websites:
  – http://www.searchenginewatch.com/
  – http://www.searchengineshowdown.com/
• Presentations
  – http://infonortics.com/searchengines/sh03/slides/evans.pdf