lecture02b

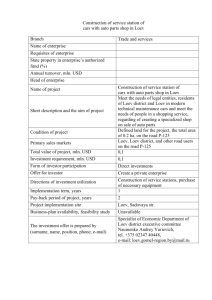


CSE 326 Data Structures:

Complexity

Lecture 2: Wednesday, Jan 8, 2003


Overview of the Quarter

• Complexity and analysis of algorithms

• List-like data structures

• Search trees

• Priority queues

• Hash tables

(Midterm around here)

• Sorting

• Disjoint sets

• Graph algorithms

• Algorithm design

• Advanced topics

Complexity and analysis of algorithms

• Weiss Chapters 1 and 2

• Additional material

– Cormen, Leiserson, Rivest (on reserve at the Eng. library)

– Graphical analysis

– Amortized analysis

Program Analysis

• Correctness

– Testing

– Proofs of correctness

• Efficiency

– Asymptotic complexity: how running time scales as a function of the size of the input

Proving Programs Correct

• Often takes the form of an inductive proof

• Example: summing an array

    int sum(int v[], int n)
    {
        if (n == 0) return 0;
        else return v[n-1] + sum(v, n-1);
    }

What are the parts of an inductive proof?


Inductive Proof of Correctness

    int sum(int v[], int n)
    {
        if (n == 0) return 0;
        else return v[n-1] + sum(v, n-1);
    }

Theorem: sum(v,n) correctly returns the sum of the first n elements of array v for any n ≥ 0.

Basis Step (n=0): sum(v,0) returns 0, which is the sum of zero elements.

Inductive Hypothesis (n=k): Assume sum(v,k) returns the sum of the first k elements of v.

Inductive Step (n=k+1): sum(v,k+1) returns v[k]+sum(v,k), which by the hypothesis is the sum of the first k+1 elements of v.
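As a minimal sketch of how the inductive argument carries over to a loop, the C code below pairs the recursive sum from the slide with an iterative version whose loop invariant plays the role of the inductive hypothesis. The name `sum_iter` and the `const` qualifiers are additions for illustration and are not part of the original slide.

```c
/* Recursive sum from the slide: sum of the first n elements of v. */
int sum(const int v[], int n)
{
    if (n == 0) return 0;               /* basis step */
    return v[n - 1] + sum(v, n - 1);    /* inductive step */
}

/* Iterative variant (hypothetical helper, not in the slides). */
int sum_iter(const int v[], int n)
{
    int s = 0;
    /* Invariant: before the iteration with index k,
     * s == v[0] + ... + v[k-1], the sum of the first k elements. */
    for (int k = 0; k < n; k++)
        s += v[k];
    /* On exit k == n, so s is the sum of the first n elements. */
    return s;
}
```

For example, with `int v[] = {3, 1, 4, 1, 5};` both `sum(v, 5)` and `sum_iter(v, 5)` return 14.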


Inductive Proof of Correctness

Binary search in a sorted array v[0], v[1], ..., v[n-1]: given x, find m s.t. x = v[m].

    int find(int x, int v[], int n)
    {
        int left = -1;
        int right = n;
        while (left+1 < right) {
            int m = (left+right) / 2;
            if (x == v[m]) return m;
            if (x < v[m]) right = m;
            else left = m;
        }
        return -1; /* not found */
    }

Exercise 1: prove that it is correct.

Exercise 2: compute m, k s.t. v[m-1] < x = v[m] = v[m+1] = ... = v[k] < v[k+1]
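One possible approach to Exercise 2, offered as a sketch and not as the intended solution, is to run two binary searches that differ only in how they treat elements equal to x. The function names `first_at_least`, `last_at_most`, and `find_range` are hypothetical; the comments state the loop invariant each search maintains.

```c
/* Smallest index i with v[i] >= x, or n if there is none (v sorted ascending). */
int first_at_least(int x, const int v[], int n)
{
    int left = -1, right = n;
    /* Invariant: v[i] < x for all i <= left; v[i] >= x for all i >= right. */
    while (left + 1 < right) {
        int m = left + (right - left) / 2;
        if (v[m] < x) left = m;
        else right = m;
    }
    return right;
}

/* Largest index i with v[i] <= x, or -1 if there is none. */
int last_at_most(int x, const int v[], int n)
{
    int left = -1, right = n;
    /* Invariant: v[i] <= x for all i <= left; v[i] > x for all i >= right. */
    while (left + 1 < right) {
        int m = left + (right - left) / 2;
        if (v[m] <= x) left = m;
        else right = m;
    }
    return left;
}

/* Stores in *m and *k the first and last index holding x.
 * Returns 1 if x occurs in v, 0 otherwise (then *m and *k are untouched). */
int find_range(int x, const int v[], int n, int *m, int *k)
{
    int lo = first_at_least(x, v, n);
    int hi = last_at_most(x, v, n);
    if (lo > hi) return 0;   /* no element equals x */
    *m = lo;
    *k = hi;
    return 1;
}
```

On the array {1, 2, 2, 2, 5, 7} with x = 2 this yields m = 1 and k = 3; with x = 3 it reports that x is absent.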


Proof by Contradiction

• Assume the negation of the goal, show this leads to a contradiction

• Example: there is no program that solves the “halting problem”

– Determines if any other program runs forever or not

Alan Turing, 1937

    Program NonConformist (Program P)
        If ( HALT(P) = “never halts” ) Then
            Halt
        Else
            Do While (1 > 0)
                Print “Hello!”
            End While
        End If
    End Program

• Does NonConformist(NonConformist) halt?

• Yes? That means HALT(NonConformist) = “never halts”, a contradiction

• No? That means HALT(NonConformist) = “halts”, a contradiction

Defining Efficiency

• Asymptotic complexity: how running time scales as a function of the size of the input

• Two problems:

– What is the “input size”?

– How do we express the running time? (The Big-O notation)

Input Size

• Usually: length (in characters) of input

• Sometimes: value of input (if it is a number)

• Which inputs?

– Worst case: tells us how well an algorithm works (an upper bound that holds for every input)

– Best case: tells us how badly an algorithm works (a lower bound that holds for every input)

– Average case: useful in practice, but there are technical problems here (next)


Average Case Analysis

• Assume inputs are randomly distributed according to some “realistic” distribution

• Compute expected running time

$$E(T, n) = \sum_{x \in \mathrm{Inputs}(n)} \mathrm{Prob}(x) \cdot \mathrm{RunTime}(x)$$

• Drawbacks

– Often hard to define realistic random distributions

– Usually hard to perform math

Input Size

• Recall the function: find(x, v, n)

• Input size: n (the length of the array)

• T(n) = “running time for size n”

• But T(n) needs clarification:

– Worst case T(n): it runs in at most T(n) time for any x,v

– Best case T(n): it takes at least T(n) time for any x,v

– Average case T(n): average time over all v and x
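To make the three readings of T(n) concrete, the sketch below instruments the binary search `find` to count loop iterations and then searches for every element of the array. It assumes that "running time" is measured in loop iterations and that the average is taken over successful searches with each element equally likely; both are simplifying assumptions, and `find_counted` and `search_cost` are hypothetical names.

```c
/* Binary search as in find(x, v, n), instrumented to count loop iterations.
 * Returns the index of x in v, or -1; *iterations receives the count. */
int find_counted(int x, const int v[], int n, int *iterations)
{
    int left = -1;
    int right = n;
    *iterations = 0;
    while (left + 1 < right) {
        int m = (left + right) / 2;
        (*iterations)++;
        if (x == v[m]) return m;
        if (x < v[m]) right = m;
        else left = m;
    }
    return -1; /* not found */
}

/* Searches for every element of v in turn and records the fewest, the most,
 * and the total number of iterations. Assumes n >= 1 and distinct elements. */
void search_cost(const int v[], int n, int *best, int *worst, int *total)
{
    *best = n + 1;   /* larger than any possible iteration count */
    *worst = 0;
    *total = 0;
    for (int i = 0; i < n; i++) {
        int steps;
        find_counted(v[i], v, n, &steps);
        if (steps < *best) *best = steps;
        if (steps > *worst) *worst = steps;
        *total += steps;
    }
}
```

On a sorted array of 15 distinct values this reports best = 1, worst = 4, and total = 49, so the average over successful searches is 49/15, about 3.27 iterations.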



Amortized Analysis

• Instead of a single input, consider a sequence of inputs:

– This is interesting when the running time on some input depends on the result of processing previous inputs

• Worst case analysis over the sequence of inputs

• Determine average running time on this sequence

• Will illustrate in the next lecture
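Until then, a common textbook example gives the flavor; it is chosen here as an assumption and is not necessarily the illustration used in the course. Incrementing a binary counter can flip many bits in one step, yet over a sequence of increments starting from zero the average cost per increment stays below 2 bit flips. The names `increment` and `total_flips` are hypothetical.

```c
/* Adds 1 to a binary counter stored least-significant bit first.
 * Returns the number of bits changed, used here as the cost of the operation. */
int increment(unsigned char bits[], int nbits)
{
    int flips = 0;
    int i = 0;
    while (i < nbits && bits[i] == 1) {   /* carry: trailing 1s become 0 */
        bits[i] = 0;
        flips++;
        i++;
    }
    if (i < nbits) {                       /* the first 0 becomes 1 */
        bits[i] = 1;
        flips++;
    }
    return flips;
}

/* Runs `count` increments from zero on a 32-bit counter. Returns the total
 * cost and stores the cost of the most expensive increment in *worst. */
long total_flips(int count, int *worst)
{
    unsigned char bits[32] = {0};
    long total = 0;
    *worst = 0;
    for (int j = 0; j < count; j++) {
        int cost = increment(bits, 32);
        if (cost > *worst) *worst = cost;
        total += cost;
    }
    return total;
}
```

For 16 increments the most expensive single step flips 5 bits, but the whole sequence flips only 31 bits, which is less than 2 per increment.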


Definition of Order Notation

• Upper bound (Big-O): $T(n) = O(f(n))$

There exist constants $c > 0$ and $n'$ such that $T(n) \le c\,f(n)$ for all $n \ge n'$

• Lower bound (Omega): $T(n) = \Omega(g(n))$

There exist constants $c > 0$ and $n'$ such that $T(n) \ge c\,g(n)$ for all $n \ge n'$

• Tight bound (Theta): $T(n) = \Theta(f(n))$

When both hold: $T(n) = O(f(n))$ and $T(n) = \Omega(f(n))$

Other notations: $o(f)$, $\omega(f)$; see Cormen et al.

Example: Upper Bound

Claim: $n^2 + 100n = O(n^2)$

Proof: Must find $c$, $n'$ such that for all $n \ge n'$,

$$n^2 + 100n \le c\,n^2$$

Let's try setting $c = 2$. Then

$$n^2 + 100n \le 2n^2$$

$$100n \le n^2$$

$$100 \le n$$

So we can set $n' = 100$ and reverse the steps above.

Using a Different Pair of Constants

Claim: $n^2 + 100n = O(n^2)$

Proof: Must find $c$, $n'$ such that for all $n \ge n'$,

$$n^2 + 100n \le c\,n^2$$

Let's try setting $c = 101$. Then

$$n^2 + 100n \le 101n^2$$

$$n + 100 \le 101n \quad \text{(divide both sides by } n\text{)}$$

$$100 \le 100n$$

$$1 \le n$$

So we can set $n' = 1$ and reverse the steps above.
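A finite numerical check is no substitute for the proofs, but it can catch a wrong choice of constants. The sketch below tests the inequality n² + 100n ≤ c·n² for every n from a chosen threshold up to a limit; the function name `bound_holds` and the limit are assumptions made for illustration.

```c
/* Returns 1 if n^2 + 100n <= c * n^2 holds for every n with n0 <= n <= limit,
 * and 0 as soon as a counterexample is found. Assumes limit is small enough
 * that c * n * n fits in a long long. */
int bound_holds(long long c, long long n0, long long limit)
{
    for (long long n = n0; n <= limit; n++) {
        if (n * n + 100 * n > c * n * n)
            return 0;
    }
    return 1;
}
```

Both pairs of constants pass, for instance `bound_holds(2, 100, 100000)` and `bound_holds(101, 1, 100000)` return 1, while `bound_holds(2, 99, 100000)` returns 0 because the threshold 100 cannot be lowered when c = 2.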

Example: Lower Bound

Claim: $n^2 + 100n = \Omega(n^2)$

Proof: Must find $c$, $n'$ such that for all $n \ge n'$,

$$n^2 + 100n \ge c\,n^2$$

Let's try setting $c = 1$. Then

$$n^2 + 100n \ge n^2$$

$$n \ge 0$$

So we can set $n' = 0$ and reverse the steps above.

Thus we can also conclude $n^2 + 100n = \Theta(n^2)$

Conventions of Order Notation

Order notation is not symmetric: write $2n^2 + n = O(n^2)$ but never $O(n^2) = 2n^2 + n$

The expression $O(f(n)) = O(g(n))$ is equivalent to $f(n) = O(g(n))$

The expression $\Omega(f(n)) = \Omega(g(n))$ is equivalent to $f(n) = \Omega(g(n))$

The right-hand side is a "cruder" version of the left:

$$18n^2 = O(n^2) = O(n^3) = O(2^n)$$

$$18n^2 = \Omega(n^2) = \Omega(n \log n) = \Omega(n)$$

Which Function Dominates?

| f(n) | g(n) |
|---|---|
| $n^3 + 2n^2$ | $100n^2 + 1000$ |
| $n^{0.1}$ | $\log n$ |
| $n + 100n^{0.1}$ | $2n + 10 \log n$ |
| $5n^5$ | $n!$ |
| $n^{-15}\,2^n/100$ | $1000n^{15}$ |
| $8^{2\log n}$ | $3n^7 + 7n$ |

Question to class: is f = O(g)? Is g = O(f)?

Race I

$f(n) = n^3 + 2n^2$ vs. $g(n) = 100n^2 + 1000$

Race II

$n^{0.1}$ vs. $\log n$
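A short computation shows how late a small power overtakes the logarithm in this race. The sketch assumes that log means log base 2 and only examines inputs of the form n = 2^k, so that the comparison can be done exactly in integers; `crossover_exponent` is a hypothetical name.

```c
/* For n = 2^k:  n^0.1 > log2(n)  <=>  2^(k/10) > k  <=>  2^k > k^10.
 * Returns the smallest k in [2, 63] for which 2^k > k^10, or -1 if none.
 * The search starts at k = 2 because for tiny n (k = 1) the power is
 * briefly ahead: 2^0.1 is about 1.07, which exceeds log2(2) = 1. */
int crossover_exponent(void)
{
    for (int k = 2; k <= 63; k++) {
        unsigned long long pow2 = 1ULL << k;
        unsigned long long k10 = 1;
        for (int i = 0; i < 10; i++)
            k10 *= (unsigned long long)k;   /* at most 63^10, fits in 64 bits */
        if (pow2 > k10)
            return k;
    }
    return -1;
}
```

Under these assumptions the function returns 59: the logarithm stays ahead until n reaches 2^59, roughly 5.8 × 10^17, even though the power wins asymptotically.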

Race III

$n + 100n^{0.1}$ vs. $2n + 10 \log n$

Race IV

$5n^5$ vs. $n!$

Race V

$n^{-15}\,2^n/100$ vs. $1000n^{15}$

Race VI

$8^{2\log n}$ vs. $3n^7 + 7n$

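$$16n^3 \log_8(10n^2) + 100n^2 = O(n^3 \log(n))$$

• Eliminate low order terms

• Eliminate constant coefficients

$$\begin{aligned}
& 16n^3 \log_8(10n^2) + 100n^2 \\
\Rightarrow\ & 16n^3 \log_8(10n^2) \\
\Rightarrow\ & n^3 \log_8(10n^2) \\
\Rightarrow\ & n^3 \left[\log_8(10) + \log_8(n^2)\right] \\
\Rightarrow\ & n^3 \log_8(10) + n^3 \log_8(n^2) \\
\Rightarrow\ & n^3 \log_8(n^2) \\
\Rightarrow\ & n^3 \cdot 2 \log_8(n) \\
\Rightarrow\ & n^3 \log_8(n) \\
\Rightarrow\ & n^3 \log_8(2) \log(n) \\
\Rightarrow\ & n^3 \log(n)
\end{aligned}$$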

Common Names

Slowest Growth

constant: $O(1)$

logarithmic: $O(\log n)$

linear: $O(n)$

log-linear: $O(n \log n)$

quadratic: $O(n^2)$

exponential: $O(c^n)$ (c is a constant > 1)

hyperexponential: $O\left(2^{2^{\cdot^{\cdot^{\cdot^{2}}}}}\right)$ (a tower of n exponentials)

Fastest Growth

Other names:

superlinear: $O(n^c)$ (c is a constant > 1)

polynomial: $O(n^c)$ (c is a constant > 0)

Sums and Recurrences

Often the function f(n) is not given explicitly but expressed as:

• A sum, or

• A recurrence

Need to obtain an analytical (closed-form) formula first

Sums

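$$f(n) = 1 + 2 + \dots + n = \sum_{i=1}^{n} i = \frac{n(n+1)}{2} = O(n^2)$$

$$f(n) = 1 + 3 + 5 + \dots + (2n-1) = \sum_{i=1}^{n} (2i-1) = n^2 = O(n^2)$$

$$f(n) = 1^2 + 2^2 + \dots + n^2 = \sum_{i=1}^{n} i^2 = \frac{n(n+1)(2n+1)}{6} = O(n^3)$$

$$f(n) = 1^3 + 2^3 + \dots + n^3 = O(?)$$

$$f(n) = 1^4 + 5^4 + 9^4 + \dots + (4n-3)^4 = \sum_{i=1}^{n} (4i-3)^4 = O(??)$$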
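The three closed forms stated above can be spot-checked by adding the terms one at a time, as in the sketch below. The function names are hypothetical, and the two sums left as questions for the class are deliberately not included.

```c
/* 1 + 2 + ... + n, added term by term. */
long long sum_integers(int n)
{
    long long s = 0;
    for (int i = 1; i <= n; i++) s += i;
    return s;
}

/* 1 + 3 + 5 + ... + (2n - 1), added term by term. */
long long sum_odds(int n)
{
    long long s = 0;
    for (int i = 1; i <= n; i++) s += 2LL * i - 1;
    return s;
}

/* 1^2 + 2^2 + ... + n^2, added term by term. */
long long sum_squares(int n)
{
    long long s = 0;
    for (int i = 1; i <= n; i++) s += (long long)i * i;
    return s;
}

/* Returns 1 if all three closed forms agree with the term-by-term sums
 * for every n in [0, limit], and 0 otherwise. */
int closed_forms_match(int limit)
{
    for (int n = 0; n <= limit; n++) {
        long long N = n;
        if (sum_integers(n) != N * (N + 1) / 2) return 0;
        if (sum_odds(n) != N * N) return 0;
        if (sum_squares(n) != N * (N + 1) * (2 * N + 1) / 6) return 0;
    }
    return 1;
}
```

For n = 100 the three sums are 5050, 10000, and 338350, matching the formulas.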


More Sums

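$$f(n) = 1 + 3 + 3^2 + \dots + 3^n = \sum_{i=0}^{n} 3^i = \frac{3^{n+1} - 1}{3 - 1} = O(3^n)$$

Sometimes sums are most easily estimated with integrals:

$$f(n) = \frac{1}{1} + \frac{1}{2} + \frac{1}{3} + \dots + \frac{1}{n} = \sum_{i=1}^{n} \frac{1}{i} \le 1 + \int_1^n \frac{1}{x}\,dx = 1 + \ln(n) - \ln(1) = O(\ln(n))$$

$$f(n) = \frac{1}{1^2} + \frac{1}{2^2} + \frac{1}{3^2} + \dots + \frac{1}{n^2} = \sum_{i=1}^{n} \frac{1}{i^2} \le 1 + \int_1^n \frac{1}{x^2}\,dx = 1 + \frac{1}{1} - \frac{1}{n} = O(1)$$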

Recurrences

• f(n) = 2f(n-1) + 1, f(0) = T

• Telescoping

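$$\begin{aligned}
f(n)+1 &= 2\,(f(n-1)+1) \\
f(n-1)+1 &= 2\,(f(n-2)+1) && \times\, 2 \\
f(n-2)+1 &= 2\,(f(n-3)+1) && \times\, 2^2 \\
&\;\;\vdots \\
f(1)+1 &= 2\,(f(0)+1) && \times\, 2^{n-1}
\end{aligned}$$

Multiply each line by the factor on its right and add; the intermediate terms cancel:

$$f(n)+1 = 2^n\,(f(0)+1) = 2^n\,(T+1)$$

$$f(n) = 2^n\,(T+1) - 1$$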
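The closed form can be checked against the recurrence directly. In this sketch `f_iter` unrolls f(n) = 2f(n-1) + 1 from f(0) = t, and `f_closed` evaluates 2^n (t+1) - 1; both names are hypothetical, and the code assumes n and t are small enough that the result fits in 64 bits.

```c
/* f(n) = 2 f(n-1) + 1 with f(0) = t, evaluated by iterating the recurrence. */
unsigned long long f_iter(unsigned long long t, int n)
{
    unsigned long long f = t;
    for (int i = 0; i < n; i++)
        f = 2 * f + 1;
    return f;
}

/* Closed form obtained by telescoping: f(n) = 2^n (t + 1) - 1.
 * Assumes 0 <= n < 64 and that the shifted value does not overflow. */
unsigned long long f_closed(unsigned long long t, int n)
{
    return ((t + 1) << n) - 1;
}
```

With t = 0 and n = 10 both functions return 1023; with t = 3 and n = 5 both return 127.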


Recurrences

• Fibonacci: f(n) = f(n-1)+f(n-2), f(0)=f(1)=1

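try $f(n) = A c^n$. What is $c$?

$$A c^n = A c^{n-1} + A c^{n-2}$$

$$c^2 - c - 1 = 0$$

$$c_{1,2} = \frac{1 \pm \sqrt{1 + 4}}{2} = \frac{1 \pm \sqrt{5}}{2}$$

$$f(n) = A \left(\frac{1 + \sqrt{5}}{2}\right)^n + B \left(\frac{1 - \sqrt{5}}{2}\right)^n = O\left(\left(\frac{1 + \sqrt{5}}{2}\right)^n\right)$$

Constants A, B can be determined from f(0), f(1); they are not interesting for us for the Big-O notation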
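The growth rate can be observed numerically without solving for A and B. The sketch below computes Fibonacci numbers with the convention f(0) = f(1) = 1 and returns the ratio of consecutive terms, which for large n should nearly satisfy the characteristic equation c² - c - 1 = 0. The names `fib` and `growth_ratio` are hypothetical, and n is assumed small enough (below about 90) to avoid 64-bit overflow.

```c
/* Fibonacci numbers with the convention f(0) = f(1) = 1. */
unsigned long long fib(int n)
{
    unsigned long long a = 1, b = 1;   /* a = f(i), b = f(i+1) */
    for (int i = 0; i < n; i++) {
        unsigned long long next = a + b;
        a = b;
        b = next;
    }
    return a;
}

/* Ratio f(n+1) / f(n); for large n it approaches the larger root c of
 * c^2 - c - 1 = 0, which is the base of the exponential growth. */
double growth_ratio(int n)
{
    return (double)fib(n + 1) / (double)fib(n);
}
```

For instance, `fib(10)` is 89, and plugging `r = growth_ratio(40)` into `r * r - r - 1` gives a value within 1e-9 of zero.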


Recurrences

• f(n) = f(n/2) + 1, f(1) = T

• Telescoping:

f(n) = f(n/2) + 1

f(n/2) = f(n/4) + 1

...

f(2) = f(1) + 1 = T + 1

f(n) = T + log n = O(log n)
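As a quick check of the telescoped result, the sketch below evaluates the recurrence recursively and compares it with T plus the base-2 logarithm. It assumes log means log base 2 and uses integer division, so for n that is not a power of two the logarithm is rounded down; `f_halving` and `floor_log2` are hypothetical names.

```c
/* f(n) = f(n/2) + 1 with f(1) = t, for n >= 1.
 * n/2 is integer division, so odd n are rounded down. */
int f_halving(int n, int t)
{
    if (n <= 1) return t;
    return f_halving(n / 2, t) + 1;
}

/* floor(log2(n)) for n >= 1, computed by repeated halving. */
int floor_log2(int n)
{
    int k = 0;
    while (n > 1) {
        n /= 2;
        k++;
    }
    return k;
}
```

With T = 7, `f_halving(1024, 7)` returns 17, which equals 7 + log2(1024); in general `f_halving(n, t)` equals `t + floor_log2(n)`.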
