SINPASE fMRI course

Dr Cyril Pernet, University of Edinburgh

Dr Gordon Waiter, University of Aberdeen

Overview

Matlab® environment: images are matrices

MRI and fMRI: image format and software

Computational Neuro-anatomy: theory

Computational Neuro-anatomy: practice

Statistics: theory

fMRI Single level analysis in practice

fMRI Random effects analysis

Other software - visualization

Data provided by the FIL: http://www.fil.ion.ucl.ac.uk/spm/

Matlab® environment:

images are matrices

Read data and do basic operations

Matlab (1)

Command window: A = 3+5

Workspace: whos

History

Browser

Matlab (2)

load MRI_3D → look in the workspace

size(MRI_3D) returns the dimensions (here nb of voxels)

imagesc(MRI_3D(:,:,54))

(:,:,54) = all rows, all columns, index 54 along dimension 3

imagesc(MRI_3D(:,:,54)')

imagesc(flipud(MRI_3D(:,:,54)'))

colormap('gray')

Matlab (3)

MRI images are matrices (tables) with 3 dimensions, but they can have 4 dimensions (fMRI) or more.

Operations on matrices

A = [1 2 3 4 5]; B = A' (transpose)

A*B → matrix multiplication: sum of the products row × column (here a single number, 55)

C = [1 1 1 1 1];

A+C → addition / subtraction work element by element

A.*C → element-by-element multiplication uses .* (and division ./)

Exercise: subtract slice 54 from slice 55 and image it

imagesc(flipud(MRI_3D(:,:,55)'-MRI_3D(:,:,54)'))
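The matrix operations above can be checked directly at the command line. This is a minimal sketch using only the vectors defined on the slide; the variable names inner, added and elementwise are illustrative and not part of the course material.

```matlab
A = [1 2 3 4 5];        % row vector (1 x 5)
B = A';                 % transpose: column vector (5 x 1)
C = [1 1 1 1 1];

inner = A*B;            % matrix product (1 x 5)*(5 x 1): sum of A(i)*B(i)
added = A + C;          % element-by-element addition
elementwise = A.*C;     % element-by-element multiplication

disp(inner)             % 55
disp(added)             % 2 3 4 5 6
disp(elementwise)       % 1 2 3 4 5
```

Note that B*A (5 x 1 times 1 x 5) would instead give a 5 x 5 matrix, which is why the order matters for matrix multiplication but not for .* operations.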

Matlab (4)

Exercise: Make a script (Matlab Editor) to look at the whole volume

using an axial view

Possible functions to use: for, imagesc, squeeze, pause … and the Matlab help

Loop For/End

for z = 1:108

% do this and that for each z

end

Matlab (5)

for z = 50:220

imagesc(squeeze(MRI_3D(:,z,:)))

colormap('gray')

title(['this is the slice ',num2str(z)])

pause(0.1)

end

MRI and fMRI:

image format and software

Image format

DICOM format (.dcm)

‘Standard’ format coming from all scanners

Stands for Digital Imaging and Communications in Medicine

Part 10 of the DICOM standard describes a file format for the

distribution of images

A single DICOM file contains both a header (which stores

information about the patient's name, the type of scan, image

dimensions, etc), as well as all of the image data (which can

contain information in three dimensions).

Manufacturers tend to output in DICOM but also put lots of

useful information in the ‘private’ part of the header

Image format

Analyze format (.img .hdr)

Analyze is an image processing program, written by The

Biomedical Imaging Resource at the Mayo Foundation.

There are two Analyze formats. One, by far the more

common, is Analyze 7.5 (this is one format used by SPM), the

other is Analyze AVW, the format used in the latest version of

the Analyze program

An Analyze (7.5) format image consists of two files, an image

(.img) and a header file (.hdr). The .img file contains the numbers

that make up the information in the image. The .hdr file contains

information about the img file, such as the volume represented

by each number in the image (voxel size) and the number of

pixels in the X, Y and Z directions. This header contains fields

of text, floating point, integer and other information.

Image format

NIfTI format (.img .hdr or .nii)

Stands for Neuroimaging Informatics Technology Initiative

(The National Institute of Mental Health and the National

Institute of Neurological Disorders and Stroke)

Facilitates inter-operation of functional MRI data analysis

software packages

Headers now include:

- affine coordinate definitions relating voxel index (i,j,k) to spatial location (x,y,z);

- codes to indicate spatio-temporal slice ordering for FMRI;

- "complete" set of 8-128 bit data types;

- standardized way to store vector-valued datasets over 1-4 dimensional domains;

- etc.
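The affine coordinate definition in the header can be sketched as a 4 x 4 matrix applied to a voxel index in homogeneous coordinates. The matrix below is hypothetical (3 mm isotropic voxels and an arbitrary origin), not read from any real header, and the index convention (0- or 1-based, which depends on the software) is ignored here.

```matlab
% Hypothetical affine: 3 mm isotropic voxels, origin placed at voxel (27,38,19)
voxel_size = 3;
origin = [27 38 19];
M = [voxel_size 0 0 -voxel_size*origin(1); ...
     0 voxel_size 0 -voxel_size*origin(2); ...
     0 0 voxel_size -voxel_size*origin(3); ...
     0 0 0 1];

ijk = [30 38 19];            % voxel index (i,j,k)
xyz = M * [ijk 1]';          % homogeneous coordinates
xyz = xyz(1:3)';             % spatial location (x,y,z) in mm

ijk_back = M \ [xyz 1]';     % inverse mapping: from mm back to voxel index
ijk_back = ijk_back(1:3)';

disp(xyz)                    % 9 0 0
```

Flipping the sign of the first diagonal element is one way a left/right flip can be encoded, which is why checking the orientation after import matters.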

1. Importing Data

Matlab has DICOM tools in the Image Processing Toolbox, but there is also plenty of free software, including SPM

Type spm → select fMRI

Import from the directory 3D_dicom

Save as one file

1. Importing Data

Select the newly imported

image

Surf to the front, near the

eye

Check orientation

Check the voxel size!!

edit spm_defaults

defaults.analyze.flip = 1; % input data left = right

1. Importing Data

Now simply double click on the nii file: this should bring up MRIcron

Again, surf to the front near the eyes: is the white spot at a different location? Check outside the brain: MRIcron is telling you that you are on the right side

BE AWARE OF THE ORIENTATION

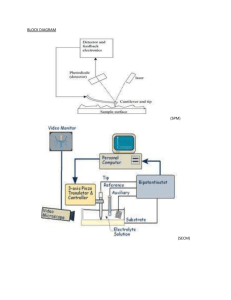

Computational Neuroanatomy: theory

[Figure: preprocessing pipeline, with labels: fMRI time-series; slice timing and realignment; normalisation (anatomical reference); smoothing (kernel); statistics]

Slice timing: Why?

During scanning, the slices of a volume are acquired one after the other within each TR (x sec), and one wants to correct for this delay between slices.

Data can be acquired in ascending/descending order, in this case

one realigns first, otherwise one would apply the same time

correction to voxels possibly coming from different slices, i.e.

acquired at different times.

Often one acquires first slices 1, 3, 5, 7, 9 … then 2, 4,6, 8, …

(interleaved mode), in this case slice timing is done first

otherwise the realignment would move voxels possibly coming

from different slices, i.e. acquired at different times (max TR/2),

onto the same plane and the slice timing would then be wrong.

Realignment: Why?

Subjects will always move in the scanner.

Movement may be related to the tasks performed.

When identifying areas in the brain that appear activated due to

the subject performing a task, it may not be possible to discount

artefacts that have arisen due to motion.

The sensitivity of the analysis is determined by the amount of

residual noise in the image series, so movement that is unrelated

to the task will add to this noise and reduce the sensitivity.

Normalization: Why?

Inter-subject averaging

extrapolate findings to the population as a whole

increase activation signal above that obtained from single

subject

increase number of possible degrees of freedom allowed in

statistical model

Enable reporting of activations as co-ordinates within a known

standard space

e.g. MNI

Smoothing: Why?

Smoothing is used for 3 reasons:

- Potentially increase signal to noise. This depends on the relative size of the smoothing kernel and of the effects to be detected (matched filter theorem: smoothing kernel = expected signal). Practically, FWHM of about 3 × voxel size; one may consider varying the kernel size if interested in different brain regions (e.g. hippocampus vs. parietal cortex).

- Inter-subject averaging.

- Increase validity of SPM.

In SPM, smoothing is a convolution with a

Gaussian kernel, and the Kernel is defined in

terms of FWHM (full width at half maximum).
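The link between the FWHM and the standard deviation of the Gaussian kernel is FWHM = sigma × sqrt(8 ln 2). The sketch below is a 1D illustration only (SPM smooths in 3D); the 6 mm FWHM and the 3 mm voxel size are the values used elsewhere in this course, and the variable names are illustrative.

```matlab
fwhm_mm  = 6;                              % kernel width in mm
voxel_mm = 3;                              % voxel size in mm

sigma_mm  = fwhm_mm / sqrt(8*log(2));      % FWHM = sigma*sqrt(8*ln 2)
sigma_vox = sigma_mm / voxel_mm;           % same width expressed in voxels

x = -6:6;                                  % kernel support in voxels
kernel = exp(-x.^2 / (2*sigma_vox^2));
kernel = kernel / sum(kernel);             % normalise so the total signal is preserved

signal = zeros(1,41);
signal(21) = 1;                            % a single 'active' voxel
smoothed = conv(signal, kernel, 'same');   % smoothing = convolution with the kernel

disp(sigma_mm)                             % about 2.55 mm
```

After smoothing, the single voxel is spread over its neighbours while the sum of the signal stays the same.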

Computational Neuroanatomy: practice

Single subject processing

Protocol – Imaging parameters

spm_data_set\Auditory_data_block_design

Each acquisition consisted of 64 contiguous slices (3 × 3 × 3 mm voxels).

Acquisition took 6.05s, with the scan to scan repeat time (TR) set

to 7s.

96 acquisitions were made (TR=7s) from a single subject, in

blocks of 6, giving 16 blocks of 42 s.

The condition for successive blocks alternated between rest and

auditory stimulation, starting with rest. Auditory stimulation was

bi-syllabic words presented binaurally at a rate of 60 per minute.

The functional data starts at acquisition 4, image fM00223_004.

2. Slice timing

Session – Specify files --> select all functional images -> 96 files

Number of slices? 64

TR? 7

TA? 6.05

Slice order? [64:-2:1, 64-1:-2:1]

Reference slice? 2

OUTPUT: ‘a’ images .. afM00XX.img / .hdr
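The slice order entered above can be inspected in Matlab to see when the reference slice is acquired. This is a sketch under one assumption that is not stated on the slide: slices are taken to be evenly spaced in time over TA. The variable names are illustrative.

```matlab
nslices   = 64;
TR        = 7;        % sec
TA        = 6.05;     % sec
ref_slice = 2;

% Interleaved descending order: even slices first, then odd slices
slice_order = [64:-2:1, 64-1:-2:1];

% Temporal position of the reference slice within the volume
position = find(slice_order == ref_slice);

% Assumption: evenly spaced slices over TA
t_ref = (position - 1) * TA / (nslices - 1);

fprintf('slice %d is acquired in position %d of %d, about %.2f s into the TR\n', ...
        ref_slice, position, nslices, t_ref);
```

With this order, slice 2 is the last of the even slices, so it is acquired close to the middle of the acquisition.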

3. Realignment

New session → select the slice timed images afM00XX.img

For each session during the scanning, create a session!

Creates the mean of all realigned EPI images. You do not have to write the data: the parameters (which come from the ‘estimate’ step) are kept in the header

Created in your directory:

- the mean image

- a spm.ps file

- a .txt file = translation and rotation parameters

4. Normalize

We could normalize on the EPI template but since we

have the subjects’ anatomical scan, we can use it to

normalize on the T1 template.

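[Diagram: the T1 is coregistered to the mean EPI image (T1 like EPI); the normalization to the MNI T1 template is computed from the coregistered T1 and applied to the EPI images (realign and normalize)]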

4a. Coregister

Target image?

mean EPI – this doesn’t change

Source image?

High resolution T1 (nsM00223_002.img)

We want this to be like the EPI

Other images?

nothing here

Reslice option interpolation

trilinear is fine, could improve using b-spline

(come back to this later)

4a. Coregister

Output:

rnsM00223_002.img

4b. Normalization

4b. Normalize

Data → New Subject

Source Image → rns...img (compute from the coregistered T1)

Images to write → all the af…img (normalize all EPI images)

we can add the mean.img

we can add the rns…img (normalize the T1)

Estimation options:

Template image SPM5\Template\T1.nii

Writing options: interpolation

4b. Normalization

Output:

wa…img

4c. Check the data

Check Reg: several images at once

4c. Check the data

Select wmean…img and the T1 template

5. Smoothing

Select the normalized images

Smooth at 6 mm

Output: s…img

A word on

interpolation

Writing down the images

What is interpolation?

Interpolation is a process for estimating values that lie between

known data points

exit spm and open/run the script called interp_ex.m

z is a 2D matrix and one interpolates between the ‘points’ defined

by z – there are many options, here we use nearest neighbor,

bilinear, and bicubic interpolation methods – observe the

difference in the results
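The comparison described above can be sketched with interp2, which is part of base Matlab. This is not the course script interp_ex.m (whose content is not shown here); the matrix z and the query grid are made up for illustration.

```matlab
z = magic(5);                               % a small 2D matrix of known values

% Query grid 4 times finer than the original one
[xq, yq] = meshgrid(1:0.25:5, 1:0.25:5);

z_nearest = interp2(z, xq, yq, 'nearest');  % value of the closest known point
z_linear  = interp2(z, xq, yq, 'linear');   % bilinear: weighted mean of the 4 neighbours
z_cubic   = interp2(z, xq, yq, 'cubic');    % bicubic: smoother, uses more neighbours

% Single query points: xq indexes columns, yq indexes rows
v_lin  = interp2(z, 1.5, 1, 'linear');      % halfway between z(1,1) = 17 and z(1,2) = 24
v_near = interp2(z, 1.2, 1, 'nearest');     % closest point is z(1,1)

% To compare visually:
% subplot(1,3,1); imagesc(z_nearest); subplot(1,3,2); imagesc(z_linear); subplot(1,3,3); imagesc(z_cubic)
```

Nearest neighbour keeps the original values but looks blocky; bilinear and bicubic create new intermediate values, which is why every resampling step slightly changes the data.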

5. Writing down the images

Writing down the images

Why is it better to compute (estimate) everything 1st and then

apply?

While one can compute and write the realigned data and then compute and write the normalized data, I suggest here to estimate the realignment parameters and then normalize. The realignment only uses a rigid-body transformation (translations and rotations in x, y, z, a special case of an affine transformation) and this info is stored in the header. Then we compute how to normalize an image (translation, rotation, zoom, shear and non-linear warping) and multiply the two transformation matrices. As a result a voxel (and its content) is ‘cut’ (resampled) only once instead of twice.
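Multiplying the two transformations can be illustrated with 4 x 4 matrices in homogeneous coordinates. The numbers below are hypothetical, and only the affine part of the normalization is represented (the non-linear warping cannot be written as a single matrix).

```matlab
% Hypothetical realignment: rotation of 2 degrees around z plus a small translation
theta = 2*pi/180;
M_realign = [cos(theta) -sin(theta) 0  1.5; ...
             sin(theta)  cos(theta) 0 -0.5; ...
             0           0          1  0.8; ...
             0           0          0  1];

% Hypothetical affine part of the normalization: zooms, shears, translations
M_norm = [1.10 0.02 0    -3; ...
          0    0.95 0.01  2; ...
          0    0    1.05  4; ...
          0    0    0     1];

% Combine first, apply once
M_total = M_norm * M_realign;

p = [10 20 30 1]';                         % a point in homogeneous coordinates
p_two_steps = M_norm * (M_realign * p);    % realign, then normalize
p_one_step  = M_total * p;                 % single combined transformation

disp(norm(p_two_steps - p_one_step))       % numerically zero
```

The coordinates are identical either way; the benefit of the combined matrix is that the image values are interpolated once instead of twice.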

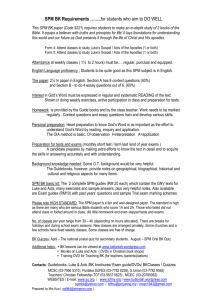

Statistical modelling:

theory

[Figure: analysis pipeline, with labels: fMRI time-series; slice timing and realignment; normalisation (anatomical reference); smoothing (kernel); General Linear Model (design matrix, model fitting, parameter estimates); statistic image; Statistical Parametric Map; multiple comparisons correction; corrected p-values]

General Linear Model

Regression, t-tests, ANOVAs, ANCOVAs … are all instances of the same linear model.

Regression:

- simple: searching to explain the data (y) by a single predictor (x) such as y = βx + b

- multiple: searching to explain the data (y) by several predictors (x1, x2, …) such as y = β1x1 + β2x2 + b

Linear models can be solved by the least squares method, i.e. one looks for the coefficients (betas) that minimize the error, i.e. the sum of squared differences between the model (βx+b) and the data (y)

General Linear Model

Dummy coding:

Instead of a continuous variable, we have categorical variables.

For the data points (y) we have 2 groups, i.e. 1 predictor (x) coding the group, e.g. x = [1 1 1 1 -1 -1 -1 -1], and we still use the equation y = βx + b (= t-test)

or we can have several groups/conditions (x1 = [1 1 1 1 -1 -1 -1 -1], x2 = [1 1 -1 -1 1 1 -1 -1], x1x2 = [1 1 -1 -1 -1 -1 1 1]) such as y = β1x1 + β2x2 + β12x1x2 + b (ANOVA)
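The claim that a regression on a dummy-coded predictor is a t-test can be checked numerically. The data below are made up for illustration, and the classic two-sample t value is computed by hand (pooled variance) so that no toolbox is needed.

```matlab
% Made-up data: 4 observations per group
y = [5.1 4.8 5.6 5.3 3.9 4.2 3.7 4.4]';
x = [1 1 1 1 -1 -1 -1 -1]';            % dummy coding of the 2 groups

% Linear model y = beta*x + b
X = [x ones(8,1)];
b = pinv(X'*X)*X'*y;                   % b(1) = half the group difference, b(2) = grand mean

% t value for the group effect from the model
res    = y - X*b;
dof    = length(y) - rank(X);
sigma2 = (res'*res) / dof;             % residual variance
c      = [1 0]';                       % contrast picking the group effect
t_glm  = (c'*b) / sqrt(sigma2 * (c'*pinv(X'*X)*c));

% Classic two-sample t-test with pooled variance
g1  = y(x == 1);
g2  = y(x == -1);
sp2 = ((numel(g1)-1)*var(g1) + (numel(g2)-1)*var(g2)) / (numel(g1)+numel(g2)-2);
t_classic = (mean(g1) - mean(g2)) / sqrt(sp2 * (1/numel(g1) + 1/numel(g2)));

fprintf('t from the linear model: %.4f, classic t: %.4f\n', t_glm, t_classic);
```

Both routes give the same t value with the same degrees of freedom (here 6), which is the sense in which the t-test is one instance of the linear model.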

General Linear Model

Using a matrix notation we can write any model like

Y = Xβ + e

Y a vector with one value per data point,

X the design matrix where each column is a vector representing groups/conditions/continuous predictors

β a vector (length = nb of columns of X) of the coefficients to apply on X in order to minimize e, the error (what is not modeled/explained)

Matrix algebra offers a unique solution for all models:

$\beta = (X^T X)^{-1} X^T Y$

using the pseudoinverse in matlab: betas = pinv(X'*X)*X'*Y

General Linear Model

Exercise 1: multiple regression with 4 covariates

Load the data called reg_eg.mat: load ('reg_eg')

Y = reg_eg(:,1); X = reg_eg(:,2:6); imagesc(X); X

% model: Y = X*B + e

B = pinv(X'*X)*X'*Y; Yhat = X*B;

plot(Y); hold on; plot(Yhat,'r')

ss_total = norm(Y - mean(Y)).^2; ss_effect = norm(Yhat - mean(Yhat)).^2; ss_error = ss_total - ss_effect;

Rsquare = ss_effect/ss_total

f = (ss_effect/(rank(X)-1)) / (ss_error/(length(Y)-rank(X)))

General Linear Model

Exercise 2: ANOVA with 4 groups

clear all; close all; clc; load('anova_eg');

Y = anova_eg(:,1); X = anova_eg(:,2:6); imagesc(X);

% model: Y = X*B + e

B = pinv(X'*X)*X'*Y; Yhat = X*B;

plot(Y); hold on; plot(Yhat,'r')

ss_total = norm(Y - mean(Y)).^2; ss_effect = norm(Yhat - mean(Yhat)).^2; ss_error = ss_total - ss_effect;

Rsquare = ss_effect/ss_total

f = (ss_effect/(rank(X)-1)) / (ss_error/(length(Y)-rank(X)))

EVERY ANALYSIS DEPENDS ON X AND THE DOF
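For readers without the course files, the same computations can be tried on simulated data. Everything below is made up (the covariates, the true coefficients and the noise are deterministic so that the result is reproducible); only the formulas follow the two exercises.

```matlab
% Simulated design: 4 covariates plus a constant column, 20 data points
t = (1:20)';
X = [sin(t) cos(2*t) mod(t,3) t/20 ones(20,1)];

% Simulated data: known coefficients plus a small deterministic 'noise' term
B_true = [2 -1 0.5 3 1]';
Y = X*B_true + 0.1*cos(7*t);

% Same steps as in the exercises
B    = pinv(X'*X)*X'*Y;
Yhat = X*B;

ss_total  = norm(Y - mean(Y)).^2;
ss_effect = norm(Yhat - mean(Yhat)).^2;
ss_error  = ss_total - ss_effect;

Rsquare = ss_effect/ss_total;
f = (ss_effect/(rank(X)-1)) / (ss_error/(length(Y)-rank(X)));

% Degrees of freedom depend only on X and on the number of data points
df_effect = rank(X) - 1;
df_error  = length(Y) - rank(X);

fprintf('R2 = %.4f, F(%d,%d) = %.2f\n', Rsquare, df_effect, df_error, f);
```

Because X contains a constant column, ss_error computed as ss_total - ss_effect equals the sum of squared residuals norm(Y - Yhat)^2.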

General Linear Model

Application to fMRI: massive univariate approach

For each voxel of the brain we have a time series (data points Y)

and we know what happened during this time period

(experimental conditions X).

We also know that after a stimulus or a response, the blood flow increases, peaking at around 5 sec, then decreases (hemodynamic response)

We thus model the data such as $Y(t) = [u(t) \otimes h(\tau)]\,\beta + e(t)$

where u represents the occurrence of a stimulus or a response and h(τ) the hemodynamic response, with τ the peristimulus time and ⊗ the convolution

General linear convolution model

$X(t) = u(t) \otimes h(\tau)$

u = [0 0 1 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 ...
     0 1 0 0 0 0 0 0 0 0];

h = spm_hrf(1)

X = conv(u,h);
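The convolution above needs spm_hrf, which is only available with SPM on the path. As a stand-in, the sketch below builds a simple double-gamma response by hand. The shapes (6 and 16) and the undershoot ratio (1/6) are assumptions chosen to give a peak around 5 s; the result is not guaranteed to match the output of spm_hrf(1).

```matlab
% Stimulus function from the slide: events at samples 3, 12, 20 and 31 (1 s sampling)
u = zeros(1,39);
u([3 12 20 31]) = 1;

% Hand-made hemodynamic response (assumed shape, see text above)
t    = 0:32;                                     % peristimulus time in sec
gpdf = @(t,a) t.^(a-1) .* exp(-t) / gamma(a);    % gamma density, shape a, scale 1
h    = gpdf(t,6) - gpdf(t,16)/6;                 % main response minus late undershoot
h    = h / sum(h);

% Regressor = stimulus function convolved with the response
X = conv(u, h);
X = X(1:numel(u));                               % keep one value per time point

[~, ipeak] = max(h);
fprintf('response peaks %d s after the event\n', t(ipeak));
% plot(X)   % each event produces a delayed, smooth bump
```

Each event in u is replaced by a copy of h starting at that event, so the first bump in X peaks 5 samples after sample 3.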

General linear convolution model

y(t) = X(t)β + e(t)

X has now two conditions u1 and u2 …

And we search the beta parameters to fit Y

[Figure: the time series Y shown as the columns of the design matrix X weighted by β1 and β2, plus u and the error e]

Single subject stat

modelling: practice

5. Single subject stats

Directory select a directory to store your data

Timing parameters

Unit for the design: scan

Interscan interval: 7 (TR)

Microtime onset: 3

(TA = 6.05 and we slice timed on the middle slice)

Data and Design ‘New Subject/Session’

Scans: images swaf…4.img to swaf…99.img

5. Single subject stats

Data and Design ‘New Subject/Session’

Scans: start at 4 to avoid artefacts .. (you should always discard

initial scans) = 96 files

Conditions New condition

Name: ‘activation’

Onsets: 6:12:84 (= every 12 scans from 6 to 84)

Durations: 6

Multiple regressors: select the realignment

parameters (.txt file)

The rest is implicitly modelled (goes into the mean)

5. Single subject stats

[Figure: design matrix with columns for the activations, the movements and the mean; model summary]

5. Single subject stats

Contrast estimate (effect size)

Fitted response (model or Y-error)

5. Single subject stats

SPM outputs

beta_000X.img / hdr: beta values in each voxel

con_000X.img / hdr: contrast (combination of betas)

spmT_000X.img / hdr: statistics image

Also

mask.img / hdr: area analyzed by SPM (binary image)

ResMS: residual mean square

RPV: Resels-Per-Voxel image; image of roughness

Multiple comparisons

correction: theory

What Problem?

4-Dimensional Data

1,000 multivariate observations, each with 100,000 elements

100,000 time series, each with 1,000 observations

Massively Univariate Approach

100,000 hypothesis tests

Massive MCP!

Solutions for MCP

Height Threshold

Familywise Error Rate (FWER)

Chance of any false positives; Controlled by Bonferroni &

Random Field Methods

False Discovery Rate (FDR)

Proportion of false positives among rejected tests

Set level statistic

Bayes Statistics

From single univariate to massive univariate

Univariate stat → Functional neuroimaging

1 observed data → Many voxels

1 statistical value → Family of statistical values

Type 1 error rate (chance to be wrong rejecting H0) → Family-wise error rate

Null hypothesis → Family-wise null hypothesis

Height Threshold

Choose locations where a test statistic Z (T, χ², ...) is large, i.e. threshold the image of Z at a height z

The problem is how to choose this threshold z to exclude false positives with a high probability (e.g. 0.95)?

To control for family-wise error one must take into account the nb of tests

Bonferroni

10000 Z-scores ; alpha = 5%

alpha corrected = .000005 ; z-score = 4.42

[Figure: 100 × 100 voxel image]

Bonferroni

10000 Z-scores ; alpha = 5%

2D homogeneous smoothing – 100 independent observations

alpha corrected = .0005 ; z-score = 3.29

[Figure: 100 × 100 voxel image]
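The two Bonferroni thresholds above can be recomputed in base Matlab. The conversion from a one-tailed p value to a z-score uses erfcinv so that no statistics toolbox is needed; the helper name z_from_p is illustrative.

```matlab
alpha = 0.05;

% One-tailed p value to z-score: z = sqrt(2)*erfcinv(2*p)
z_from_p = @(p) sqrt(2) * erfcinv(2*p);

% 10000 independent voxels
p_voxels = alpha / 10000;
z_voxels = z_from_p(p_voxels);

% Same image after smoothing, assuming 100 independent observations
p_indep = alpha / 100;
z_indep = z_from_p(p_indep);

fprintf('10000 tests: p = %g, z = %.2f\n', p_voxels, z_voxels);
fprintf('  100 tests: p = %g, z = %.2f\n', p_indep,  z_indep);
```

The fewer independent observations there are, the lower the threshold needed to keep the family-wise error at 5%, which is the motivation for estimating the effective number of independent observations in smooth images.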

Random Field Theory

10000 Z-scores ; alpha = 5%

Gaussian kernel smoothing –

How many independent observations ?

[Figure: 100 × 100 voxel image]

Random Field Theory

RFT relies on theoretical results for smooth statistical maps (hence the need for smoothing). It allows finding a threshold in a set of data where it is not easy to find the number of independent variables. It uses the expected Euler characteristic (EC density):

1. Estimation of the smoothness = number of resels (resolution elements) = f(nb voxels, FWHM)

2. Expected Euler characteristic = number of clusters above the threshold

3. Calculation of the threshold

Random Field Theory

The Euler characteristic can be seen as the number of blobs in an image after thresholding

At high threshold, EC = 0 or 1 per resel: E[EC] ≈ pFWE

$E[EC] = R \cdot (4 \ln 2) \cdot (2\pi)^{-3/2} \cdot Z_t \cdot e^{-Z_t^2/2}$ for a 2D image, more complicated in 3D

Random Field Theory

For 100 resels, the equation gives E[EC] = 0.049 for a threshold

Z of 3.8, i.e. the probability of getting one or more blobs where

Z is greater than 3.8 is 0.049

[Figure: 100 × 100 voxel image]

If the resel size is much larger than the voxel size then E[EC]

only depends on the nb of resels; otherwise it also depends on the

volume, surface and diameter of the search area (i.e. shape and

volume matter)
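The numerical example for 100 resels can be reproduced with the 2D formula E[EC] = R (4 ln 2) (2π)^(-3/2) Z_t exp(-Z_t²/2). This is a direct evaluation of that formula only; the variable names are illustrative.

```matlab
R  = 100;     % number of resels
Zt = 3.8;     % threshold

% Expected Euler characteristic for a 2D Gaussian random field
expected_EC = R * (4*log(2)) * (2*pi)^(-3/2) * Zt * exp(-Zt^2/2);

fprintf('E[EC] = %.3f for Z = %.1f and %d resels\n', expected_EC, Zt, R);

% Scanning thresholds shows how quickly E[EC] drops as Z increases
Z = 3:0.2:4.4;
EC_curve = R * (4*log(2)) * (2*pi)^(-3/2) .* Z .* exp(-Z.^2/2);
```

Compared with the Bonferroni threshold for 10000 voxels (z = 4.42), the random field threshold of 3.8 is lower because it accounts for the smoothness of the image.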

False discovery Rate

Whereas the family-wise approach corrects for any false positive, the

FDR approach aims at correcting among positive results only.

1. Run an analysis with alpha = x%

2. Sort the resulting data

3. Threshold the resulting data to remove the false positives

(theoretical problem: threshold any voxels whatever their spatial

positions)
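The three steps above can be sketched with one common FDR procedure, the Benjamini-Hochberg step-up rule. This choice is an assumption, since the slides do not name the procedure, and the p values below are made up for illustration.

```matlab
% Made-up p values from 10 tests
p = [0.001 0.008 0.039 0.041 0.042 0.06 0.074 0.205 0.212 0.216];
q = 0.05;                        % desired false discovery rate
m = numel(p);

% Sort the p values and compare each one to its rank-dependent threshold
[p_sorted, order] = sort(p);
below = p_sorted <= (1:m) * q / m;

% Keep everything up to the largest p value that passes
k = find(below, 1, 'last');
reject_fdr = false(1, m);
if ~isempty(k)
    reject_fdr(order(1:k)) = true;
end

% For comparison: Bonferroni on the same p values
reject_bonf = p <= q / m;

fprintf('FDR keeps %d tests, Bonferroni keeps %d\n', sum(reject_fdr), sum(reject_bonf));
```

The procedure only looks at the sorted p values, which illustrates the theoretical problem noted above: the spatial positions of the voxels play no role.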

False discovery Rate

Signal+Noise

FWE correction

FDR correction

Levels of inference:

theory

Levels of inference

3 levels of inference can be considered:

Voxel level (prob associated at each voxel)

Cluster level (prob associated to a set of voxels)

Set level (prob associated to a set of clusters)

The 3 levels are nested and based on a single probability of

obtaining c or more clusters (set level) with k or more voxels

(cluster level) above a threshold u (voxel level): Pw(u,k,c)

Both voxel and cluster levels need to address the multiple

comparison problem. If the activated region is predicted in

advance, the use of corrected P values is unnecessary and

inappropriately conservative – a correction for the number of

predicted regions (Bonferroni) is enough

Levels of inference

Set level: we can reject H0 for an omnibus

test, i.e. there are some significant clusters of

activation in the brain.

Cluster level: we can reject H0 for an

area of a size k, i.e. a cluster of

‘activated’ voxels is likely to be true for a

given spatial extent.

Voxel level: we can reject H0 at each

voxel, i.e. a voxel is ‘activated’ if

exceeding a given threshold

Inference in practice

Levels of inference and MCC

From single subjects to

random effects: theory

From single subjects to random effects

Why random effect (also called pseudo-mixed)?

Basic stats: compute the mean for each condition for each

subject and do the stats on these means (inter-subject variance)

Random effect: compute the beta parameters for each condition

(intra-subject variance) and do the stats on these beta parameters

(inter-subject variance)
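The second step of the random effect approach can be sketched as a one-sample t-test on one beta (or contrast) value per subject, here for a single voxel. The 12 values are made up for illustration; the same test is also written as a linear model with a single constant column.

```matlab
% Made-up beta values at one voxel, one per subject (12 subjects)
betas = [0.8 1.2 0.5 1.0 0.9 1.4 0.3 0.7 1.1 0.6 1.3 0.9]';
n = numel(betas);

% Classic one-sample t-test: is the mean beta different from 0?
t_classic = mean(betas) / (std(betas) / sqrt(n));

% Same test as a linear model with a constant regressor
X      = ones(n, 1);
b      = pinv(X'*X)*X'*betas;             % estimate = mean beta across subjects
res    = betas - X*b;
sigma2 = (res'*res) / (n - rank(X));      % inter-subject variance
t_glm  = b / sqrt(sigma2 * pinv(X'*X));

fprintf('t(%d) = %.3f\n', n - 1, t_glm);
```

Only the variability between subjects enters the error term here, which is what allows the result to be generalised to the population.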

From single subjects to

random effects: practice

Face data set

Repetition priming experiment performed using event-related

fMRI

2x2 factorial study with factors ‘fame’ and ‘repetition’ where

famous and non-famous faces were presented twice against a

checkerboard baseline

Four event-types of interest; first and second presentations of

famous and non-famous faces, which are denoted N1, N2, F1

and F2

TR=2s

TA = 1.92s

24 descending slices (64x64 3x3mm2)

3mm thick with a 1.5mm gap

Single subject modelling

Here we consider a more sophisticated model in which we use

the hrf and its derivatives.

In the folder, spm_data_set\Face_data_event_related_design\

single_subject\Preprocessed, you can find the smoothed,

normalized, realigned, and slice timed images

Try to model by yourself

Single subject modelling

Stimulus Onsets Times

sots.mat

Cond 1 to 4 are F1, F2,

N1, N2 with the

respective onset time

sot{1} sot{2} sot{3}

and sot{4}

Single subject modelling

Directory stats

Timing parameters: Units (sec), Interscan interval (2),

Microtime resolution (24), Microtime onset (12)

Data and design ‘New Subject/Session’: Scan (all EPI

swa…img), Condition create 4 conditions, each time enter

name and sot (F1 sot{1} F2 sot{2} N1 sot{3} N2 sot{4}),

Multiple regressors: enter the .txt file from realignment

Factorial Design create 2 factors (famous, level =2, and

repetition, level =2)

Basis function: Canonical HRF, model derivatives (time and

dispersion)

Single subject modelling

Result select the SPM.mat

Because we specified the factorial design, the variance has been

partitioned in a specific way and contrasts are already there

Select contrast nb 5

Single subject modelling

Define a new F contrast called ‘effect of interest’

(any effect of any regressor and combinations) ok done

Single subject modelling

Plot contrast estimates and 90% CI select effect of

interest

F1

F2

N1

N2

Single subject modelling

Plot event-related response / fitted response

Single subject modelling

In the matlab workspace

Y = fitted response, y = adjusted response

Exercise: plot the 4 fitted responses on 1 figure

Each time save using e.g. N1 = Y; then N2 = Y; .. then plot(N1);

hold on; plot(N2,'--') …

Multi-subjects

All data were analyzed and are stored in the folder

Face_data_event_related_design\multisubjects\cons_informed

We have all the con images from 12 subjects, data were modelled

with Famous and Non famous faces (2 conditions only) with the hrf

and the two derivatives (12*6 files)

Random effects

Analyze Faces vs baseline

For each subject we have the con images for the hrf, the 1st

derivative and 2nd derivative

A full analysis will thus be a repeated measure ANOVA (we have

3 measures per subject)

SPM can correct for non-sphericity, i.e. it takes into account the correlation between regressors; here the regressors are correlated by construction

Random effects

Design Full factorial

create a ‘new factor’

Name: Basis functions

Levels: 3

Independence: No

Specify cells → create 3 cells, 1 per level of the factor (cell 1 = con 3 to 14 / cell 2 = 15 to 26 / cell 3 = 27 to 38)

Run

Estimate

Random effects

In the contrast manager, enter a new F contrast: effect of interest (eye(3))

Evaluate and look at the result with a correction FWE @ .05

Random effects

In the contrast manager, enter a new T contrast for the hrf only: 1 0 0

Evaluate and look at the result with a correction FWE @ .05

Random effects

Select an image to display (e.g. SPM/canonical/T1)

Add blobs → select the SPM.mat and the 2 contrasts

Random effects

The display is ‘surfable’

MNI and voxel coordinates available

Voxel value

Random effects

F Contrast [0 1 0]: time dispersion (+/- 2 sec); + = earlier response (- = delayed)

F Contrast [0 0 1]: duration dispersion; + = narrower response (- = wider)

Random effect

Paired t-test

12 pairs

3 vs 39

4 vs 40

…

Independence: No

Variance: equal

Visualizing the data

SPM and other software

Rendering with SPM

Segmentation

Input → coregistered image in \spm_data_set\Face_data_event_related_design\single_subject\Structural → rs…img

Output

grey / white matter tissues

bias corrected image

affine normalization

parameters (and inverse)

Rendering with SPM

Select the grey and white matter (c1 and c2) images and save the rendering

Rendering with SPM

Select the SPM.mat from the individual subject and then 2 sets / contrasts

Rendering in SPM

For random effects analysis you can use the render in

SPM/render which is in the MNI space

For individual subjects, best is to not normalize and make a

render .. Results are better with smaller voxel size (i.e. you could

interpolate the data @ 1mm3)

Overall, better to use slices / section – poor rendering

capabilities although new plug-ins are appearing ..

Surface visualization

with Caret

What does it do?

Surface visualization

Display experimental data (activation maps, connectivity patterns, etc)

View data in flexible combinations (cortical surface, flat maps, contours,

outlines, etc)

Surface manipulation and data analysis

generate inflated maps, spherical maps, and flat maps

probabilistic maps, surface based analysis

On-line search for fMRI maps, comparisons, etc ..

The Software

http://brainmap.wustl.edu/caret

my experience is that it works better with linux / mac but windows

does the job

From David Van Essen lab – Washington University in St Louis –

School of Medicine – Dpt of Anatomy & Neurobiology

Most famous paper from this guy?

Felleman, D.J. and Van Essen, D.C. (1991) Distributed hierarchical

processing in primate visual cortex, Cerebral Cortex, 1: 1-47.

Caret

File → Open Spec File …

In Caret\HUMAN.COLIN.ATLAS\LEFT_HEM\…spec

Caret

Attributes → Map Volume(s) to Surface(s) … leave the Metric option ticked and press next

Caret

Add Volumes From Disk → select the spmT_0005.hdr file from the single subject analysis (Face data set)

Caret

Map to Caret

Caret

Choose your algorithm

Average Voxel

Neighbor Box Size 1

Next ..

Caret

Individual visualization

with Anatomist

Brain Visa / Anatomist

Caret as well as BrainVisa / Anatomist works better with clean

data …

1st: data are usually better handled with isotropic voxels (same dimensions in x, y, z), so it is better to acquire isotropic voxels, at least for the T1

2nd: there is usually a bias in one direction (often Z), i.e. for a given concentration of tissue, the gray scale is slightly different

Solution: correct and resample

My favourite tools for this: FSL

Quick Tour into FSL

Depending on what you want to do

ApplyXFM reinterpolation using the identity matrix

FSL brings the interface BET / SUSAN / FSL View

Back to BrainVisa / Anatomist

Back to Anatomist

Anatomist

Resources

IdoImaging: http://idoimaging.com/index.shtml

SPM: http://www.fil.ion.ucl.ac.uk/spm/

Caret: http://brainvis.wustl.edu/wiki/index.php/Caret:About

FSL: http://www.fmrib.ox.ac.uk/fsl/

BrainVisa / Anatomist: http://brainvisa.info/

Cambridge imaging wiki: http://imaging.mrccbu.cam.ac.uk/imaging/CbuImaging

Russ Poldrack’s wiki on Matlab:

http://www.poldracklab.org/teaching/psych254

Digital signal processing in general:

http://www.dspguide.com/pdfbook.htm

Maths in general: http://mathworld.wolfram.com/