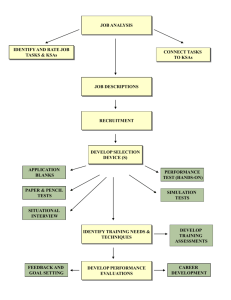

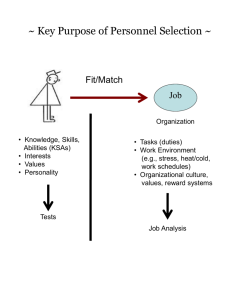

Stability of Job Analysis Findings and Test Plans over Time

Calvin C. Hoffman, PhD
Los Angeles County Sheriff’s Department
Presented to PTCSC on April 14th, 2010
Coauthors: Carlos Valle, Gabrielle Orozco-Atienza, & Chy Tashima.

INTRODUCTION
• Job analysis (JA) provides foundation for many human resources activities [recruitment, placement, training, compensation, classification, and selection (Gatewood & Feild, 2001)].
• In content validation research, JA is used to minimize the “inferential leaps” in selecting or developing selection instruments.

INTRODUCTION
• There is little guidance on how often to revalidate or revisit the validity of selection systems (Bobko, Roth, & Buster, 2005).
  – Uniform Guidelines (1978) - “There are no absolutes in the area of determining the currency of a validity study.”
  – SIOP Principles - “…organizations should develop policies requiring periodic review of validity of selection materials and methods.”

INTRODUCTION
• Our position is that if “revalidation” is needed, researchers must pay attention to job analysis.
• For example:
  – Changes in duties?
  – Changes in technology?
  – HR systems changes?
  – Changes in required KSAs?

SETTING
• Extensive litigation regarding sergeant promotional process.
• Consent decree governed all aspects of JA, selection system design, selection system operation, and actual promotions for over 25 years.
• Organizational policy requires updating job analysis every five years.
  – Policy does not consider important factors such as costs and legal context.

SETTING
• 2006 Sergeant Exam - Conducted extensive multimethod JA.
• 2009 Sergeant Exam - Unsure about need for additional JA given recency of JA data.
• SIOP Principles - “The level of detail required of an analysis of work is directly related to its intended use and the availability of information about the work. A less detailed analysis may be sufficient when there is already information descriptive of the work” (p.11).

CURRENT STUDY
• Few changes in sergeant job were expected during the three-year span.
  – Could conclude that new JA is not needed, and reuse the existing 2006 test plan.
  – Given history of litigation surrounding this exam, the Principles would support conducting an additional JA.
• Choosing to err on the side of caution, we performed a slightly abbreviated JA to support 2009 exam.

CURRENT STUDY
• Study examined the stability of the JA data over a three-year span. Focuses on the similarity of:
  – task and KSA ratings by two independent groups of incumbents
  – the test plans for the written job knowledge test.

METHOD - 2006 JA
• Structured JA interviews were conducted onsite with incumbents, along with job observation, facility tours, and “desk observation”.
• From these data sources, a work-oriented job analysis questionnaire (JAQ) was drafted consisting of major tasks and KSAs.
• JAQ survey (incumbents), SME linkage ratings.

METHOD - 2009 JA
• Did not conduct additional JA interviews.
• Relied on the existing 2006 JAQ as a starting point for the 2009 JA effort.
• Otherwise, followed same process.

METHOD
Participants

| Exam | Invited | Participated | Response Rate | Sampling Method |
|---|---|---|---|---|
| 2006 Exam | 91 | 69* | 76% | Incumbents (Sergeants) chosen by Personnel Captain. |
| 2009 Exam | 65 | 49* | 75% | Incumbents (stratified random sample) chosen by researchers. |

*Both JAQs were administered online via a web survey.

METHOD
• SMEs (2006 N = 13, 2009 N = 10) in both studies performed linkage ratings to establish relationship between task domains and KSA domains using a 4-point relevance scale.
  – JAQ x linkage ratings data were further reviewed and fine-tuned by SMEs.
• JAQ data helped determine relative weight and content of test plans (written test, appraisal of promotability, and structured interview).
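
The response rates on the Participants slide follow directly from the invited and participated counts. A minimal sketch of that arithmetic, using only the counts reported on the slide (the function and variable names are illustrative, not from the original study):

```python
# Counts as reported on the Participants slide.
invited = {"2006 Exam": 91, "2009 Exam": 65}
participated = {"2006 Exam": 69, "2009 Exam": 49}


def response_rate(n_participated: int, n_invited: int) -> float:
    """Return the proportion of invited incumbents who completed the JAQ."""
    return n_participated / n_invited


rates = {
    exam: response_rate(participated[exam], invited[exam])
    for exam in invited
}

for exam, rate in rates.items():
    # Prints "2006 Exam: 76%" and "2009 Exam: 75%".
    print(f"{exam}: {rate:.0%}")
```

Both samples responded at nearly the same rate, so any difference between the two sets of ratings is unlikely to come from response rate alone; the sampling method did differ between the two studies.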

RESULTS - TASKS

| | 2006 Test | 2009 Test |
|---|---|---|
| Mean Task Importance Rating | 3.7 | 3.4 |

• Correlation: r = .83
• Dependent t-test: t(28) = 7.65, p < .001, two-tailed, d = 1.74
• Independent t-test: t(56) = 3.23, p < .01, two-tailed, d = .85

RESULTS - KSAs

| | 2006 Exam | 2009 Exam |
|---|---|---|
| Mean KSA Importance Rating | 3.8 | 3.7 |

• Correlation: r = .96
• Dependent t-test: t(29) = 3.94, p < .001, two-tailed, d = 1.54
• Independent t-test: t(56) = 0.90, p > .05, two-tailed, d = .23

RESULTS - TEST PLAN

| | Total Items | Knowledge Domains from JA | Domains Omitted from Test Plan | M KSA Importance Rating |
|---|---|---|---|---|
| 2006 Test | 102 | 31 | 6 | 3.8 |
| 2009 Test | 104 | 30 | 3* | 3.7 |

• Recall v. Reference agreement: 84%
• Correlation: r = .85

*Of six knowledge domains omitted in 2006, three knowledge domains were retained in 2009, for a total of 13 items. All were included as Reference items in 2009.

DISCUSSION
• JA data were highly stable over time, despite the significant mean differences observed.
• Mean task importance ratings (r = .83)
  – About 1.0 standard deviation larger than meta-analytic findings of intrarater reliability (rate-rerate) of JA ratings data reported by Dierdorff and Wilson (2003), r = .68 (n = 7,392; k = 49) over an average of 6 months.
• Mean KSA importance ratings (r = .96)
  – No comparison could be made because no estimate of KSA stability over time could be located in the literature.
• Test plans (r = .85)
  – Number of items allocated to specific knowledge domains was highly similar.

DISCUSSION
• Differences between mean task ratings might be attributable to:
  – differences in the selection of JAQ respondents
  – decreased sensitivity in organization regarding sergeant promotional exam (i.e., no new lawsuits!).

DISCUSSION
• Findings did not translate into major differences in the test plans resulting from the JA efforts even with:
  – different SMEs,
  – different survey respondent selection methods,
  – significant differences in mean task and KSA ratings.
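
The pattern reported above, a high correlation between the two rating profiles alongside a significant mean difference, can be illustrated with a short sketch. It assumes that tasks (or KSAs) are the unit of analysis, which the reported degrees of freedom suggest but the slides do not state. The ratings below are hypothetical, not the study's data, and Cohen's d is computed with a pooled standard deviation, which may not be the formula the authors used.

```python
import math
from statistics import mean, stdev

# Hypothetical mean importance ratings for the same six tasks in two JA efforts.
ratings_2006 = [4.2, 3.9, 3.5, 3.1, 4.0, 3.6]
ratings_2009 = [3.9, 3.7, 3.1, 2.9, 3.8, 3.2]


def pearson_r(x, y):
    """Profile similarity: do the two efforts rank the items the same way?"""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def paired_t(x, y):
    """Dependent t-test on item-by-item differences; returns (t, df)."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1


def pooled_sd(x, y):
    """Pooled standard deviation of two samples."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * stdev(x) ** 2 + (ny - 1) * stdev(y) ** 2) / (nx + ny - 2)
    return math.sqrt(pooled_var)


def independent_t(x, y):
    """Independent-samples t-test with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    se = pooled_sd(x, y) * math.sqrt(1 / nx + 1 / ny)
    return (mean(x) - mean(y)) / se, nx + ny - 2


def cohens_d(x, y):
    """Standardized mean difference using the pooled standard deviation."""
    return (mean(x) - mean(y)) / pooled_sd(x, y)


r = pearson_r(ratings_2006, ratings_2009)
t_dep, df_dep = paired_t(ratings_2006, ratings_2009)
t_ind, df_ind = independent_t(ratings_2006, ratings_2009)
d = cohens_d(ratings_2006, ratings_2009)

print(f"r = {r:.2f}")
print(f"dependent t({df_dep}) = {t_dep:.2f}")
print(f"independent t({df_ind}) = {t_ind:.2f}")
print(f"d = {d:.2f}")
```

The correlation is insensitive to a uniform shift in ratings, while the dependent t-test is sensitive to exactly that shift. This is why the two statistics can point in different directions without contradicting each other.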

DISCUSSION
• Although five new domains were included in the 2009 test, they were incorporated as Reference questions wherein candidates are provided resource material to answer questions.

CONCLUSION
• We considered costs and risks in determining whether to revalidate our selection system.
  – The greater the number of intervening years between validation studies, the higher the risk the organization assumes.
  – The shorter the intervals of time between revalidation efforts, the costlier and more burdensome they are for the organization.
• We were conservative due to the legal context. Might have followed a different path if guidelines regarding revalidation efforts were clearer.

CONCLUSION
• We encourage researchers and practitioners to conduct and share any research findings that might help in creating detailed practice guidelines on revalidation efforts.
• More information is needed to close the disconnect between the requirement to maintain currency of validity information and the lack of clear guidance regarding how often is “often enough.”

Questions?
• Copies of slides and the conference paper are available:
  – Email request to: choffma@lasd.org