
Learner Error Corpora

Grammatical Error Detection

Grammatical Error Correction

Evaluation of Error Detection/Correction

[Figure: Native Language system, Interlanguage and Foreign Language system, linked by Transformation]

A learner corpus is a computerized textual database of

the language produced by foreign language learners

Benefits

Researchers gain access to learners’ interlanguage

May lead to development of language learning tools

Error tagged corpora

Deals with real errors made by language learners

Well-formed corpora

Language corpora with well-formed constructs

BNC, WSJ

N-gram corpora

Artificial error corpora

Error tagged corpora are expensive

Well-formed corpora do not deal with errors

Artificially modify well-formed corpora to turn them into error corpora
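A minimal sketch of turning a well-formed sentence into an artificial error example, assuming preposition substitution is the only error type. The confusion set `PREPOSITIONS`, the `rate` parameter and the function name are hypothetical choices for illustration, not a published procedure.

```python
import random

# Hypothetical confusion set: prepositions that may be swapped for one another.
PREPOSITIONS = ["in", "on", "at", "of", "to", "with", "for", "by"]


def inject_preposition_errors(tokens, rate, rng):
    """Replace each preposition by a different one with probability `rate`.

    Returns the corrupted tokens and the list of (position, original, replacement)
    edits, so the error annotation is produced together with the error.
    """
    corrupted, edits = [], []
    for i, tok in enumerate(tokens):
        if tok.lower() in PREPOSITIONS and rng.random() < rate:
            wrong = rng.choice([p for p in PREPOSITIONS if p != tok.lower()])
            corrupted.append(wrong)
            edits.append((i, tok, wrong))
        else:
            corrupted.append(tok)
    return corrupted, edits


sentence = "He is fond of books on the shelf".split()
noisy, edits = inject_preposition_errors(sentence, rate=0.5, rng=random.Random(0))
print(" ".join(noisy), edits)
```

Because the edits are recorded while corrupting, each (noisy, original) pair can serve as training data without manual annotation.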

NUCLE : NUS Corpus of Learner English

About 1,400 essays written by university-level students,

totalling about 1.2 million words.

Completely annotated with error categories and

corrections.

Annotation performed by English instructors at

NUS Centre for English Language Communication

(CELC).

Annotation Task

Select arbitrary, contiguous text spans using the

cursor to identify grammatical errors.

Classify errors by choosing an error tag from a dropdown menu.

Correct errors by typing the correction into a text box.

Comment to give additional explanations if necessary.

Writing, Annotation, and Marking Platform (WAMP)

27 error categories with 13 error groups
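Each annotation produced by the task above consists of a token span, an error tag, a correction and an optional comment. A minimal sketch of such a record and of applying the corrections to obtain the corrected sentence; the field names are hypothetical and do not reflect the actual WAMP or NUCLE file format, and the tags "Prep", "ArtOrDet" and "SVA" are used only as example labels.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    start: int          # index of the first token of the error span
    end: int            # index one past the last token (exclusive)
    error_type: str     # error tag chosen from the tag set
    correction: str     # replacement text; "" deletes the span
    comment: str = ""   # optional explanation for the learner


def apply_corrections(tokens, annotations):
    """Return the corrected token list (spans are assumed not to overlap).

    Spans are applied from right to left so that replacing one span does not
    shift the indices of spans further to the left.
    """
    corrected = list(tokens)
    for ann in sorted(annotations, key=lambda a: a.start, reverse=True):
        corrected[ann.start:ann.end] = ann.correction.split()
    return corrected


print(apply_corrections("He is fond with beer".split(),
                        [Annotation(3, 4, "Prep", "of")]))
# ['He', 'is', 'fond', 'of', 'beer']
```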

NICT-JLE

“Error Annotation for Corpus of Japanese Learner

English” – Izumi et al.

CoNLL shared task data

▪ http://www.comp.nus.edu.sg/~nlp/conll13st.html

HOO data

▪ http://clt.mq.edu.au/research/projects/hoo/hoo2012/index.html

Precision Grammar: formal grammar

designed to distinguish ungrammatical from

grammatical sentences.

Constraint Dependency Grammar (CDG)

▪ every grammatical rule is given as a constraint on word-to-word modifications

Resource: Structural disambiguation with constraint propagation, Hiroshi Maruyama,

ACL’90

CDG grammar $G = \langle \Sigma, R, L, C \rangle$

$\Sigma$: finite set of terminal symbols (words)

$R = \{r_1, r_2, \ldots, r_k\}$: finite set of role-ids

$L = \{a_1, a_2, \ldots, a_t\}$: finite set of labels

$C$: a constraint that an assignment $A$ should satisfy

A sentence $s = w_1 w_2 \ldots w_n$ is a finite string over $\Sigma$.

Each word $i$ in a sentence $s$ has $k$ roles $r_1(i), r_2(i), \ldots, r_k(i)$.

Roles are variables that take a pair $\langle a, d \rangle$ as their value, where $a \in L$ and the modifiee $d$ is either a position $1 \le d \le n$ or the special symbol $nil$.

[Figure: an n × k grid of roles, with the words W1 … Wn as columns and the r1-role … rk-role of each word as rows]

Analyzing a sentence means assigning values to these n × k roles.

Definitions

Assume $x$ is the $r_j$ role of word $i$.

▪ $pos(x)$: the position $i$
▪ $rid(x)$: the role-id $r_j$
▪ $lab(x)$: the label of $x$
▪ $mod(x)$: the modifiee of $x$
▪ $word(i)$: the terminal symbol occurring at position $i$

A constraint has the form $\forall x_1 x_2 \ldots x_p : P_1 \land P_2 \land \cdots \land P_m$, where

▪ $x_1, x_2, \ldots, x_p$ range over the set of roles in an assignment $A$.
▪ Each $P_i$ is a subformula built from the following vocabulary:
  ▪ Variables: $x_1, x_2, \ldots, x_p$
  ▪ Constants: $\Sigma \cup L \cup R \cup \{nil, 1, 2, \ldots\}$
  ▪ Function symbols: $word()$, $pos()$, $rid()$, $lab()$, $mod()$
  ▪ Predicate symbols: $=, <, >, \in$
  ▪ Logical connectors: $\land, \lor, \neg, \Rightarrow$

Definitions

The arity of a subformula 𝑃𝑖 depends on the

number of variables that it contains

The degree of the grammar is the size of the set of role-ids $R$.

A non-null string 𝑠 over the alphabet Σ is

generated iff there exists an assignment

𝐴 that satisfies the constraint 𝐶.


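$G_1 = \langle \Sigma_1, R_1, L_1, C_1 \rangle$

$\Sigma_1 = \{D, N, V\}$

$R_1 = \{\mathrm{governor}\}$

$L_1 = \{\mathrm{DET}, \mathrm{SUBJ}, \mathrm{ROOT}\}$

$C_1 = \forall x y : role;\; P_{11} \land P_{12} \land P_{13} \land P_{14}$ (commas inside the parentheses below denote conjunction)

• $P_{11}$: “A determiner (D) modifies a noun (N) on the right with the label DET”

$word(pos(x)) = D \Rightarrow (lab(x) = \mathrm{DET},\; word(mod(x)) = N,\; pos(x) < pos(mod(x)))$

• $P_{12}$: “A noun modifies a verb (V) on the right with the label SUBJ”

$word(pos(x)) = N \Rightarrow (lab(x) = \mathrm{SUBJ},\; word(mod(x)) = V,\; pos(x) < pos(mod(x)))$

• $P_{13}$: “A verb modifies nothing and its label should be ROOT”

$word(pos(x)) = V \Rightarrow (lab(x) = \mathrm{ROOT},\; mod(x) = nil)$

• $P_{14}$: “No two words can modify the same word with the same label.”

$(mod(x) = mod(y),\; lab(x) = lab(y)) \Rightarrow x = y$

Example: $[\mathrm{A}_1]_D\ [\mathrm{dog}_2]_N\ [\mathrm{runs}_3]_V$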
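To make the definitions concrete, here is a brute-force sketch that enumerates every assignment of ⟨label, modifiee⟩ values to the single governor role of each word and keeps those satisfying P11 to P14 of G1. Words are represented only by their categories (D, N, V), positions are 1-based as above, and the function names are hypothetical; a real CDG parser uses constraint propagation rather than enumeration.

```python
from itertools import product

LABELS = ["DET", "SUBJ", "ROOT"]   # L1
NIL = None                         # the special modifiee symbol nil


def unary_ok(i, value, cats):
    """Check P11, P12 and P13 for the governor role of word i (1-based)."""
    lab, mod = value
    word = cats[i - 1]
    if word == "D":   # P11: D modifies an N on its right with label DET
        return lab == "DET" and mod is not NIL and cats[mod - 1] == "N" and i < mod
    if word == "N":   # P12: N modifies a V on its right with label SUBJ
        return lab == "SUBJ" and mod is not NIL and cats[mod - 1] == "V" and i < mod
    if word == "V":   # P13: V modifies nothing and is labelled ROOT
        return lab == "ROOT" and mod is NIL
    return False


def parse_g1(cats):
    """Return every assignment (one <label, modifiee> per word) satisfying C1."""
    n = len(cats)
    domain = [(lab, mod) for lab in LABELS for mod in [NIL, *range(1, n + 1)]]
    parses = []
    for assignment in product(domain, repeat=n):
        if not all(unary_ok(i, assignment[i - 1], cats) for i in range(1, n + 1)):
            continue
        # P14: no two roles may share both the modifiee and the label
        if len({(mod, lab) for lab, mod in assignment}) < n:
            continue
        parses.append(assignment)
    return parses


print(parse_g1(["D", "N", "V"]))   # "A dog runs"
```

The category strings D D N V (two determiners competing for one noun, violating P14) and N D V (no noun to the right of the determiner) receive no assignment, which is exactly how a precision grammar rejects them.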

CDG parsing

Assign values to the $n \times k$ roles from the finite set $L \times \{nil, 1, 2, \ldots, n\}$

This is a constraint satisfaction problem (CSP)

Use constraint propagation (filtering) to solve the CSP:

1. Form an initial constraint network using a “core” grammar.
2. Remove local inconsistencies by filtering.
3. If any ambiguity remains, add new constraints and go to Step 2.

Put the block on the floor on the table in the room

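$V_1\ NP_2\ PP_3\ PP_4\ PP_5$

$G_2 = \langle \Sigma_2, R_2, L_2, C_2 \rangle$

$\Sigma_2 = \{V, NP, PP\}$

$R_2 = \{\mathrm{governor}\}$

$L_2 = \{\mathrm{ROOT}, \mathrm{OBJ}, \mathrm{LOC}, \mathrm{POSTMOD}\}$

$C_2 = \forall x y : role;\; P$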

Constraints

[Figure: the constraints of G2 drawn as arc diagrams over x, y, mod(x) and mod(y)]

Total number of possible parse trees?

Catalan number, which grows exponentially

Explicit representation is not feasible
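A small sketch of why explicit enumeration does not scale: it counts the non-crossing ways of attaching each PP in "V NP PP … PP" to an earlier word (with the NP fixed as the object of V) and prints the counts next to the Catalan numbers. Ignoring labels and semantic constraints is a simplifying assumption, and the function names are hypothetical.

```python
from itertools import product
from math import comb


def catalan(n):
    """n-th Catalan number, C(2n, n) / (n + 1)."""
    return comb(2 * n, n) // (n + 1)


def crosses(arc1, arc2):
    """Arcs are (head, dependent) with head < dependent; shared endpoints do not cross."""
    (a, i), (b, j) = sorted([arc1, arc2], key=lambda arc: arc[1])
    return a < b < i


def count_attachments(num_pps):
    """Non-crossing structures for V(1) NP(2) PP(3) ... with every PP attached leftwards."""
    pp_positions = list(range(3, 3 + num_pps))
    total = 0
    for heads in product(*(range(1, p) for p in pp_positions)):
        arcs = [(1, 2)] + list(zip(heads, pp_positions))
        if not any(crosses(x, y) for k, x in enumerate(arcs) for y in arcs[k + 1:]):
            total += 1
    return total


for m in range(1, 6):
    print(m, count_attachments(m), catalan(m + 1))
```

For the small cases checked (one to three PPs), the counts are 2, 5 and 14, matching the Catalan numbers C(2), C(3) and C(4); the sentence above with three PPs already has 14 unlabelled attachment structures.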

Constraint network for implicit representation of

the parse trees

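Initial constraint network (domain of each role):

▪ $V_1$: $\{R_{nil}\}$
▪ $NP_2$: $\{O_1\}$
▪ $PP_3$: $\{L_1, P_2\}$
▪ $PP_4$: $\{L_1, P_2, P_3\}$
▪ $PP_5$: $\{L_1, P_2, P_3, P_4\}$

where $R_{nil} = \langle \mathrm{ROOT}, nil \rangle$, $L_1 = \langle \mathrm{LOC}, 1 \rangle$, $O_1 = \langle \mathrm{OBJ}, 1 \rangle$ and $P_i = \langle \mathrm{POSTMOD}, i \rangle$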

A constraint network is said to be arc consistent if no constraint matrix contains a row or a column made up only of zeros

A node (role value) whose row or column is all zeros has no support and is removed from the solution

Removing one value can make other values inconsistent

The process is propagated until the network becomes arc consistent.

The network in the example is already arc consistent
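The filtering step can be sketched as an AC-3 style loop: for each directed pair of nodes, remove every value that has no compatible value at the other node (an all-zero row in the constraint matrix), and re-check the neighbours of any node whose domain shrank. In this sketch constraints are Python predicates rather than explicit 0/1 matrices, and the toy network is hypothetical.

```python
from collections import deque


def filter_network(domains, constraints):
    """Make a binary constraint network arc consistent.

    domains:     dict node -> iterable of candidate values
    constraints: dict (u, v) -> predicate(value_u, value_v); a False result
                 corresponds to a 0 in the u-v constraint matrix.
    Returns a new dict of filtered domains.
    """
    domains = {node: set(values) for node, values in domains.items()}
    arcs = {}
    for (u, v), pred in constraints.items():
        arcs[(u, v)] = pred
        arcs[(v, u)] = lambda y, x, p=pred: p(x, y)   # same matrix, transposed
    queue = deque(arcs)
    while queue:
        u, v = queue.popleft()
        pred = arcs[(u, v)]
        # values of u whose row in the u-v matrix is all zeros
        unsupported = {a for a in domains[u] if not any(pred(a, b) for b in domains[v])}
        if unsupported:
            domains[u] -= unsupported
            # shrinking u may leave values of u's other neighbours unsupported
            queue.extend((w, x) for (w, x) in arcs if x == u and w != v)
    return domains


toy = filter_network(
    {"X": {1, 2, 3}, "Y": {1, 2, 3}, "Z": {1, 2, 3}},
    {("X", "Y"): lambda x, y: x < y, ("Y", "Z"): lambda y, z: y < z},
)
print(toy)
```

In the toy run, removing 3 from Y (no larger value of Z supports it) later removes 2 from X, which is the propagation described above.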

Two more constraints

𝑓𝑒(𝑝𝑜𝑠(𝑥)) extracts semantic

features of 𝑥

Put the block on the floor on the table in the room

$V_1\ NP_2\ PP_3\ PP_4\ PP_5$

Filtering with the two new constraints ($V_1$ keeps $\{R_{nil}\}$ and $NP_2$ keeps $\{O_1\}$ throughout):

▪ $P_3$ is removed from $PP_4$: $PP_4$ becomes $\{L_1, P_2\}$
▪ $P_3$ is removed from $PP_5$: $PP_5$ becomes $\{L_1, P_2, P_4\}$
▪ $L_1$ is removed from $PP_3$: $PP_3$ becomes $\{P_2\}$
▪ Propagation leaves the final network $PP_3$: $\{P_2\}$, $PP_4$: $\{L_1\}$, $PP_5$: $\{P_4\}$

Resulting parse: the block is the OBJ of put; on the floor is a POSTMOD of block; on the table is the LOC of put; in the room is a POSTMOD of table

All constraints are treated with the same priority:

failure to satisfy any of the specified constraints marks an utterance as ungrammatical

Natural language, however, shows gradations of grammaticality

Weighted constraints can model robustness, the ability to deal with unexpected and possibly erroneous input:

Weighted Constraint Dependency Grammar (WCDG)
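In WCDG as usually described, each constraint carries a weight (penalty) between 0 and 1, and an analysis is scored by multiplying the weights of the constraints it violates, so a weight of 0 acts as a hard constraint. A minimal sketch of that scoring, assuming a toy analysis represented as a dict of boolean properties; the constraint names and weights are hypothetical.

```python
from math import prod


def wcdg_score(analysis, constraints):
    """Product of the weights of all violated constraints (1 if none is violated).

    constraints: list of (name, weight, check) with weight in [0, 1], where
    check(analysis) returns True when the constraint is satisfied.
    """
    return prod(weight for _, weight, check in constraints if not check(analysis))


constraints = [
    ("root-exists", 0.0, lambda a: a["has_root"]),          # hard constraint
    ("subj-verb-agreement", 0.5, lambda a: a["sv_agree"]),   # soft constraint
    ("det-noun-agreement", 0.9, lambda a: a["dn_agree"]),    # soft constraint
]

# e.g. an analysis of "He go home": a root exists, but subject and verb disagree
analysis = {"has_root": True, "sv_agree": False, "dn_agree": True}
print(wcdg_score(analysis, constraints))   # 0.5
```

Because the score is graded rather than binary, an ungrammatical sentence still receives an analysis, and the violated soft constraints point to where the error is.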

Different error detection tasks

Grammatical vs Ungrammatical

Detecting errors for targeted categories

▪ Preposition errors

▪ Article errors

Agnostic to error category

Approaches

Error detection as classification

Error detection as sequence labelling

Generic steps

Decide on the error category

Pick a learning algorithm

Identify discriminative features

Train the algorithm with training data

▪ Error corpora
  ▪ The model encodes the error contexts
  ▪ Flags an error when it finds a matching error context in the learner response
▪ Well-formed corpora
  ▪ Learns models of correct usage for the targeted categories
  ▪ Flags an error in case of a mismatch
▪ Artificial error corpora

Types of preposition errors

Selection error [They arrived to the town]

Extraneous use [They came to outside]

Omission error [He is fond this book]

Tasks

Classifier prediction

Training a model

What are the features?

Resource: The Ups and Downs of Preposition Error Detection in ESL Writing, Tetreault and

Chodorow, COLING’08

Cast error detection task as a classification problem

Given a model classifier and a context:

System outputs a probability distribution over all prepositions

Compare weight of system’s top preposition with writer’s

preposition

Error occurs when:

Writer’s preposition ≠ classifier’s prediction

And the difference in probabilities exceeds a threshold
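The decision rule can be sketched as follows, assuming the classifier output is available as a dict from preposition to probability. The probabilities and the threshold below are made-up values for illustration, not figures from Tetreault and Chodorow.

```python
def flag_preposition(probs, writer_prep, threshold):
    """Return True if the writer's preposition should be flagged as an error.

    probs: classifier probability for each candidate preposition in this context.
    An error is flagged only when the classifier's top choice differs from the
    writer's preposition AND the probability gap exceeds the threshold.
    """
    top = max(probs, key=probs.get)
    if top == writer_prep:
        return False
    gap = probs[top] - probs.get(writer_prep, 0.0)
    return gap > threshold


# Hypothetical classifier outputs for two contexts
fond = {"of": 0.90, "in": 0.03, "at": 0.02, "by": 0.03, "with": 0.02}
home = {"at": 0.40, "around": 0.30, "by": 0.15, "in": 0.10, "of": 0.05}
print(flag_preposition(fond, "with", threshold=0.3))    # True
print(flag_preposition(home, "around", threshold=0.3))  # False
```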

Develop a training set of error-annotated ESL

essays (millions of examples?):

Too labor intensive to be practical

Alternative:

Train on millions of examples of proper usage

Determining how “close to correct” writer’s

preposition is

Prepositions are influenced by:

Words in the local context, and how they interact

with each other (lexical)

Syntactic structure of context

Semantic interpretation

1. Extract lexical and syntactic features from well-formed (native) text
2. Train a MaxEnt model on the feature set to output a probability distribution over a set of prepositions
3. Evaluate on an error-annotated ESL corpus by:
  ▪ Comparing the system’s preposition with the writer’s preposition
  ▪ If they differ, using thresholds to determine the “correctness” of the writer’s preposition

Feature   Description
PV        Prior verb
PN        Prior noun
FH        Headword of the following phrase
FP        Following phrase
TGLR      Middle trigram (pos + words)
TGL       Left trigram
TGR       Right trigram
BGL       Left bigram

He will take our place in the line.

For the preposition “in”: PV = take, PN = place, FH = line

MaxEnt does not model the interactions

between features

Build “combination” features of the head

nouns and commanding verbs

PV, PN, FH

3 types: word, tag, word+tag

Each type has four possible combinations

Maximum of 12 features

Class     Components   +Combo:word
p-N       FH           line
N-p-N     PN-FH        place-line
V-p-N     PV-FH        take-line
V-N-p-N   PV-PN-FH     take-place-line

“He will take our place in the line.”
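The combination features in the table can be generated mechanically from PV, PN and FH. A minimal sketch, assuming each component is given as a (word, tag) pair with Penn-style tags; the feature-name format and the choice to skip a class when one of its components is missing are assumptions.

```python
def combo_features(pv, pn, fh):
    """Build the +Combo features from the prior verb, prior noun and following head.

    Each argument is a (word, tag) pair, or None when absent from the context.
    Returns up to 12 features: 4 combination classes x 3 types (word, tag, word+tag).
    """
    classes = {
        "p-N": [fh],
        "N-p-N": [pn, fh],
        "V-p-N": [pv, fh],
        "V-N-p-N": [pv, pn, fh],
    }
    feats = {}
    for name, parts in classes.items():
        if any(part is None for part in parts):
            continue  # skip a class if one of its components is missing
        feats[f"{name}:word"] = "-".join(word for word, _ in parts)
        feats[f"{name}:tag"] = "-".join(tag for _, tag in parts)
        feats[f"{name}:word+tag"] = "-".join(f"{word}_{tag}" for word, tag in parts)
    return feats


# "He will take our place in the line." (tags assumed)
feats = combo_features(("take", "VB"), ("place", "NN"), ("line", "NN"))
print(feats["V-N-p-N:word"])   # take-place-line
```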

Typical way that non-native speakers check if

usage is correct:

“Google” the phrase and alternatives

Google N-gram corpus

Queries provided frequency data for the

+Combo features

Top three prepositions per query were used

as features for ME model

Maximum of 12 Google features

Class     Combo:word        Google features
p-N       line              P1 = on, P2 = in, P3 = of
N-p-N     place-line        P1 = in, P2 = on, P3 = of
V-p-N     take-line         P1 = on, P2 = to, P3 = into
V-N-p-N   take-place-line   P1 = in, P2 = on, P3 = after

“He will take our place in the line”

Thresholds allow the system to skip cases

where the top-ranked preposition and what

the student wrote differ by less than a prespecified amount

[Figure: classifier probabilities for of, in, at, by and with in “He is fond with beer”: FLAG AS ERROR]

[Figure: classifier probabilities for of, in, around, by and with in “My sister usually gets home around 3:00”: FLAG AS OK]

Errors consist of a sub-sequence of tokens in a longer token sequence.

Some sub-sequences are errors while others are not

Advantage: Error category independent

Sequence modelling tasks in NLP

Parts-of-speech tagging

Information Extraction

Resource: High-Order Sequence Modeling for Language Learner Error Detection, Michael

Gamon, 6th Workshop on Innovative Use of NLP for Building Educational Applications

Many NLP problems can be viewed as sequence

labeling.

Each token in a sequence is assigned a label.

Labels of tokens are dependent on the labels of

other tokens in the sequence, particularly their

neighbors (not i.i.d).

[Figure: a token sequence (foo bar blam zonk zonk bar blam) with a label assigned to each token]

Slides from Raymond J. Mooney

Annotate each word in a sentence with a

part-of-speech.

Lowest level of syntactic analysis.

John saw the saw and decided to take it to the table.

PN V Det N Con V Part V Pro Prep Det N

Useful for subsequent syntactic parsing

and word sense disambiguation.

Identify phrases in language that refer to specific types of

entities and relations in text.

Named Entity Recognition (NER) is the task of identifying names of people, places, organizations, etc. in text.

Michael Dell is the CEO of Dell Computer Corporation and lives in Austin Texas.

(people: Michael Dell; organizations: Dell Computer Corporation; places: Austin Texas)

Extract pieces of information relevant to a specific

application, e.g. used car ads:

make model year mileage price

For sale, 2002 Toyota Prius, 20,000 mi, $15K or best offer.

Available starting July 30, 2006.

Classify each token independently, but use information about the surrounding tokens (a sliding window) as input features.

John saw the saw and decided to take it to the table.

Classifier output, one token at a time: PN V Det N Conj V Part V Pro Prep Det N
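A minimal sketch of sliding-window features, assuming lower-cased word identities only; a real tagger would add suffixes, capitalization and similar cues, and the feature names are hypothetical.

```python
def window_features(tokens, i, size=2):
    """Features for classifying tokens[i] from a window of `size` tokens on each side."""
    feats = {"w0": tokens[i].lower()}
    for offset in range(1, size + 1):
        left, right = i - offset, i + offset
        feats[f"w-{offset}"] = tokens[left].lower() if left >= 0 else "<s>"
        feats[f"w+{offset}"] = tokens[right].lower() if right < len(tokens) else "</s>"
    return feats


tokens = "John saw the saw and decided to take it to the table .".split()
# The two occurrences of "saw" get different context features, which is what
# lets an independent classifier tag the first as V and the second as N.
print(window_features(tokens, 1))
print(window_features(tokens, 3))
```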

Hidden Markov Model

A finite state automaton with stochastic state transitions and observations

Start in a state and emit an observation, transition to a new state and emit another observation, and so on until a final state is reached

▪ State transition probability $P(s \mid s')$
▪ Observation probability $P(o \mid s)$
▪ Initial state distribution $P_0(s)$

[Figure: state $s_{t-1}$ transitions to state $s_t$, which emits observation $o_t$]
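Decoding an HMM means finding the most probable state sequence for the observations; the standard dynamic-programming solution is the Viterbi algorithm. A compact sketch with made-up two-state parameters; it multiplies raw probabilities rather than adding log probabilities, which is only safe for short sequences.

```python
def viterbi(obs, states, start_p, trans_p, emit_p):
    """Most probable state sequence for `obs` under an HMM.

    start_p[s] = P0(s), trans_p[r][s] = P(s | r), emit_p[s][o] = P(o | s).
    """
    # best[t][s] = (probability of the best path ending in s at time t, previous state)
    best = [{s: (start_p[s] * emit_p[s].get(obs[0], 0.0), None) for s in states}]
    for t in range(1, len(obs)):
        column = {}
        for s in states:
            prev, p = max(((r, best[t - 1][r][0] * trans_p[r][s]) for r in states),
                          key=lambda pair: pair[1])
            column[s] = (p * emit_p[s].get(obs[t], 0.0), prev)
        best.append(column)
    # follow the back-pointers from the best final state
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for t in range(len(obs) - 1, 0, -1):
        state = best[t][state][1]
        path.append(state)
    return path[::-1]


states = ["N", "V"]
start_p = {"N": 0.7, "V": 0.3}
trans_p = {"N": {"N": 0.3, "V": 0.7}, "V": {"N": 0.6, "V": 0.4}}
emit_p = {"N": {"dogs": 0.8, "run": 0.2}, "V": {"dogs": 0.1, "run": 0.9}}
print(viterbi(["dogs", "run"], states, start_p, trans_p, emit_p))  # ['N', 'V']
```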

Maximum Entropy Markov Model (MEMM)

Combines the transition and observation functions into a single function

$P(s \mid s', o)$

[Figure: state $s_t$ conditioned on both the previous state $s_{t-1}$ and the observation $o_t$]
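In an MEMM the single function P(s | s', o) is a maximum-entropy (log-linear) model: a softmax over weighted features of the previous state, the observation and the candidate state. A minimal sketch with two hypothetical feature templates and hand-set weights; in practice the weights are learned from labelled data.

```python
import math


def memm_distribution(prev_state, obs, states, weights):
    """P(s | prev_state, obs) as a softmax over log-linear feature scores.

    weights maps feature keys to real numbers; missing features score 0.
    """
    scores = {
        s: weights.get(("prev", prev_state, s), 0.0) + weights.get(("obs", obs, s), 0.0)
        for s in states
    }
    z = sum(math.exp(v) for v in scores.values())   # normalise per (prev_state, obs)
    return {s: math.exp(v) / z for s, v in scores.items()}


weights = {("prev", "N", "V"): 1.0, ("obs", "run", "V"): 2.0, ("obs", "run", "N"): 0.5}
print(memm_distribution("N", "run", ["N", "V"], weights))
```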

NER annotation convention

“O”: outside an NE

“B”: beginning of an NE

“I”: inside an NE

Michael/B Dell/I is/O the/O CEO/O of/O Dell/B Computer/I Corporation/I and lives in Austin Texas.

Learner error annotation

Only “O” and “I” are used

▪ Most of the error spans are short
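Turning a tag sequence back into spans is needed, for example, when evaluating detected errors. A sketch that accepts both the BIO convention used for NER and the O/I convention used for learner errors; treating an "I" that follows an "O" as the start of a new span is an assumption made so that O/I-tagged input works (two adjacent error spans then merge into one).

```python
def tags_to_spans(tags):
    """Convert B/I/O tags into (start, end) token spans, end exclusive."""
    spans, start = [], None
    for i, tag in enumerate(list(tags) + ["O"]):      # sentinel closes a final span
        starts_new = tag == "B" or (tag == "I" and start is None)
        if (tag == "O" or starts_new) and start is not None:
            spans.append((start, i))
            start = None
        if starts_new:
            start = i
    return spans


tokens = "Michael Dell is the CEO of Dell Computer Corporation".split()
tags = ["B", "I", "O", "O", "O", "O", "B", "I", "I"]
print([" ".join(tokens[a:b]) for a, b in tags_to_spans(tags)])
```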

Language model features

How close or far is the learner’s utterance from

ideal language usage?

String features

whether a token is capitalized (initial capitalization or all capitals)

token length in characters

number of tokens in the sentence

Linguistic analysis features

Features from constituency parse tree

All features are calculated for each token 𝑤 of

the tokens 𝑤1 , 𝑤2 , … 𝑤𝑘 in a sentence

Basic LM features

All LM scores are log probabilities

Unigram probability of $w$

Average n-gram probability of all n-grams in the sentence that contain $w$:

$$Avg = \frac{\sum_{i=1}^{n} \sum_{j=pos(w)-i+1}^{pos(w)} P(w_j \ldots w_{j+i-1})}{\sum_{i=1}^{n} \sum_{j=pos(w)-i+1}^{pos(w)} 1}$$
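A direct transcription of the Avg formula, assuming the language model is available as a function from an n-gram (a tuple of tokens) to its log probability; `toy_lm` is a made-up stand-in, not a real model. N-grams that would run past either end of the sentence are skipped, which the formula leaves implicit.

```python
def avg_ngram_score(tokens, pos, n, lm):
    """Average LM score of all 1..n-grams of `tokens` that contain tokens[pos] (0-based)."""
    scores = []
    for i in range(1, n + 1):                       # n-gram order
        for j in range(pos - i + 1, pos + 1):       # start positions that cover pos
            if j >= 0 and j + i <= len(tokens):     # stay inside the sentence
                scores.append(lm(tuple(tokens[j:j + i])))
    return sum(scores) / len(scores)


def toy_lm(ngram):
    """Hypothetical LM: 2 nats per token, plus a heavy penalty for the pair 'fond with'."""
    penalty = 8.0 if any(a == "fond" and b == "with" for a, b in zip(ngram, ngram[1:])) else 0.0
    return -2.0 * len(ngram) - penalty


print(avg_ngram_score("He is fond with beer".split(), 3, 2, toy_lm))   # -6.0
print(avg_ngram_score("He is fond of beer".split(), 3, 2, toy_lm))
```

The erroneous "with" gets a lower average than "of" in the same slot, which is the signal this feature is meant to carry.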

Ratio features

A token that is part of an unlikely combination of otherwise likely smaller n-grams is a likely error

$$ratio = \frac{\text{average prob. of } x\text{-grams containing } w}{\text{average prob. of } y\text{-grams containing } w}, \quad x > y$$

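Drop features

Drop or increase in n-gram probability across token $w$:

$$\Delta(w_i) = P(w_i w_{i+1}) - P(w_{i-1} w_i)$$

Entropy delta features

$$\text{forward entropy}(w_i) = \frac{\mathrm{LM\_Score}(w_i \ldots w_n)}{\text{no. of tokens}(w_i \ldots w_n)}$$

$$\text{backward entropy}(w_i) = \frac{\mathrm{LM\_Score}(w_0 \ldots w_i)}{\text{no. of tokens}(w_0 \ldots w_i)}$$

$$\text{forward sliding entropy}(w_i) = \mathrm{LM\_Score}(w_i \ldots w_n) - \mathrm{LM\_Score}(w_{i+1} \ldots w_n)$$

Similarly backward sliding entropy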
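The drop and entropy-delta features can be sketched in the same setting, assuming a sequence's LM score is the sum of its bigram log probabilities. The bigram table and its numbers are hypothetical.

```python
# Hypothetical bigram log probabilities; unseen bigrams get DEFAULT.
BIGRAMS = {("he", "goes"): -1.0, ("goes", "to"): -1.0, ("to", "school"): -1.0,
           ("he", "go"): -7.0, ("go", "to"): -1.5}
DEFAULT = -10.0


def logp(a, b):
    """Log probability of the bigram (a, b)."""
    return BIGRAMS.get((a, b), DEFAULT)


def lm_score(seq):
    """Log probability of a token sequence as a sum of its bigram log probabilities."""
    return sum(logp(a, b) for a, b in zip(seq, seq[1:]))


def drop(tokens, i):
    """Delta(w_i) = P(w_i w_{i+1}) - P(w_{i-1} w_i); needs 0 < i < len(tokens) - 1."""
    return logp(tokens[i], tokens[i + 1]) - logp(tokens[i - 1], tokens[i])


def forward_entropy(tokens, i):
    return lm_score(tokens[i:]) / len(tokens[i:])


def backward_entropy(tokens, i):
    return lm_score(tokens[:i + 1]) / len(tokens[:i + 1])


def forward_sliding_entropy(tokens, i):
    return lm_score(tokens[i:]) - lm_score(tokens[i + 1:])


tokens = "he go to school".split()
print(drop(tokens, 1))                      # 5.5: the bigram into "go" is far less likely
print(forward_sliding_entropy(tokens, 0))   # -7.0: the cost of starting with "he go"
```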

A “good” n-gram is likely to have a much higher probability than an n-gram with the same tokens in random order

$$ratio_{random} = \frac{P(\text{n-gram containing } w)}{\text{sum of unigram probs of the tokens the n-gram contains}}$$

Minimum ratio to random

Average ratio to random

Overall ratio to random $= sum_1 / sum_2$

▪ $sum_1 = \sum_{i=2}^{n} \sum_{j=pos(w)-i+1}^{pos(w)} P(w_j \ldots w_{j+i-1})$

▪ $sum_2$ = sum of unigram probs of the tokens that the above n-grams contain

Overlap to adjacent ratio

An erroneous word may cause the n-grams that contain it to be less likely than adjacent n-grams that do not contain it

$$\text{overlap-to-adjacent ratio} = \frac{\text{sum of probs of n-grams including } w_i}{\text{sum of probs of n-grams adjacent to } w_i \text{ but excluding it}}$$

Features extracted from syntactic parse trees

Label of the parent and grandparent node (some of the labels denote complex constructs, e.g., SBAR)

number of sibling nodes

number of siblings of the parent

length of path to the root
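A sketch of these parse-tree features for each token, assuming the constituency tree is given as nested tuples `(label, child, ...)` with `(POS, word)` pairs at the bottom, and measuring parent, siblings and path length from the token's POS node. Both the representation and that starting point are assumptions.

```python
def tree_features(tree):
    """Per-token features from a constituency tree given as nested tuples."""
    results = []

    def walk(node, ancestors):
        label, *children = node
        if len(children) == 1 and isinstance(children[0], str):   # POS node over a word
            parent = ancestors[-1] if ancestors else None
            grand = ancestors[-2] if len(ancestors) >= 2 else None
            results.append({
                "token": children[0],
                "parent": parent[0] if parent else None,
                "grandparent": grand[0] if grand else None,
                "siblings": len(parent) - 2 if parent else 0,        # other children of parent
                "parent_siblings": len(grand) - 2 if grand else 0,   # other children of grandparent
                "path_to_root": len(ancestors),                      # edges from POS node to root
            })
            return
        for child in children:
            walk(child, ancestors + [node])

    walk(tree, [])
    return results


tree = ("S",
        ("NP", ("PRP", "He")),
        ("VP", ("VBD", "said"),
               ("SBAR", ("IN", "that"),
                        ("S", ("NP", ("PRP", "she")), ("VP", ("VBD", "left"))))))
for feats in tree_features(tree):
    print(feats)
```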

GEC Approaches

Rule-based

Classification

Language Modelling

SMT

Hybrid

Whole sentence error correction

Pipeline based approach

▪ Design classifiers for different error categories

▪ Deploy classifiers independently

▪ Relations between errors are ignored

▪ Example: A cats runs

▪ An article classifier may propose to delete ‘a’

▪ A noun number classifier may propose to change ‘cats’ to ‘cat’

Resource: Grammatical Error Correction Using Integer Linear Programming, Yuanbin Wu

and Hwee Tou Ng

Joint Inference

In most cases errors interact

Errors need to be corrected jointly

Steps

▪ For every possible correction, assign a score to the corrected sentence (how grammatical it is)

▪ Select the set of corrections that yields the maximum score

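Integer Linear Programming

$$\text{maximize } \mathbf{c}^{T}\mathbf{x} \quad \text{(objective function)}$$

$$\text{subject to } A\mathbf{x} \le \mathbf{b} \quad \text{(constraints)}$$

$$\mathbf{x} \ge 0,\ \mathbf{x} \in \mathbb{Z}^{n} \quad \text{(output variable space)}$$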

GEC “Given an input sentence, choose a set

of corrections which results in the best output

sentence”

ILP formulation of GEC

Encode the output space using integer variables.

▪ Corrections that a word needs

Express inference objective as a linear objective

function.

▪ Maximize the grammaticality of corrections

Introduce constraints to refine the feasible output space

▪ Constraints guarantee that the corrections do not

conflict with each other

What corrections at which positions?

Location of error

Error type

Correction

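First order variables

$$Z^{k}_{l,p} \in \{0, 1\}$$

▪ $p \in \{1, 2, \ldots, |s|\}$ is a position in the sentence $s$
▪ $l \in L$ is an error type
▪ $k \in \{1, 2, \ldots, C(l)\}$ is a correction of type $l$

$Z^{k}_{l,p} = 1$: the word at position $p$ should be corrected to $k$, which is of error type $l$.

$Z^{k}_{l,p} = 0$: correction $k$ is not applied to the word at position $p$.

$Z^{\epsilon}_{l,p} = 1$: deletion of the word at position $p$.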

Objective: to find the best combination of corrections

The number of combinations of corrections is exponential

Approximate with a decomposable assumption:

Measuring the output quality of multiple corrections can be decomposed into measuring the quality of the individual corrections

Let $s \xrightarrow{Z^{k}_{l,p}} s'$, and let $w_{l,p,k} \in \mathbb{R}$ measure the grammaticality of $s'$

Objective

$$\max \sum_{l,p,k} w_{l,p,k} \times Z^{k}_{l,p}$$

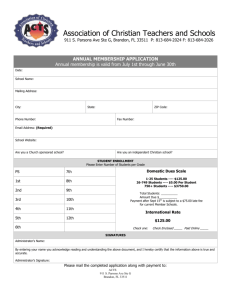

Overview of IBM Watson technology

Overview of IBM Watson services on IBM Bulemix

Overview and demo of key Watson toolkit

Date and Time

08.02.2015, 10:30AM-1:30PM

Venue

V1, Vikramshila

Specific instructions

Please enter the venue by 10:20AM

Bring your laptops if possible

For an individual correction $Z^{k}_{l,p}$, the quality of $s'$ depends on

Language model score: $h(s', LM)$

Classifier confidence: $f(s', t)$

Disagreement score: $g(s', t)$

▪ Difference between the maximum confidence score and the score of the word that is being corrected

$$w_{l,p,k} = \nu_{LM}\, h(s', LM) + \sum_{t \in E} \lambda_t\, f(s', t) + \sum_{t \in E} \mu_t\, g(s', t)$$

Constraint to avoid conflict

For each error type $l$, only one output $k$ is allowed at any applicable position $p$:

$$\sum_{k} Z^{k}_{l,p} = 1 \quad \forall \text{ applicable } l, p$$

Final ILP formulation

$$\max \sum_{l,p,k} w_{l,p,k} \times Z^{k}_{l,p}$$

$$\text{s.t.} \quad \sum_{k} Z^{k}_{l,p} = 1 \quad \forall \text{ applicable } l, p$$

$$Z^{k}_{l,p} \in \{0, 1\}$$

A cats sat on the mat

Possible corrections and related variables

Constraint

$$Z^{a}_{Art,1} + Z^{the}_{Art,1} + Z^{\epsilon}_{Art,1} = 1$$

Computing weights

Language model score, classifier confidence score, disagreement score

Classifiers: article (ART), preposition (PREP), noun number (NOUN)

Correction: $s \xrightarrow{Z^{singular}_{Noun,2}} s'$, $s'$ = A cat sat on the mat.

Weight for $Z^{singular}_{Noun,2}$:

$$w_{Noun,2,singular} = \nu_{LM}\, h(s', LM) + \lambda_{ART} f(s', ART) + \lambda_{PREP} f(s', PREP) + \lambda_{NOUN} f(s', NOUN) + \mu_{ART} g(s', ART) + \mu_{PREP} g(s', PREP) + \mu_{NOUN} g(s', NOUN)$$

$$f(s', ART) = \frac{1}{2}\left(f_t(s'[1], 1, ART) + f_t(s'[5], 5, ART)\right) = \frac{1}{2}\left(f_t(a, 1, ART) + f_t(the, 5, ART)\right)$$

$$g(s', ART) = \max(g_1, g_2)$$

$$g_1 = \max_{k} f_t(k, 1, ART) - f_t(a, 1, ART)$$

$$g_2 = \max_{k} f_t(k, 5, ART) - f_t(the, 5, ART)$$

$$w_{Noun,2,singular} = \nu_{LM}\, h(s', LM) + \lambda_{ART}\, \frac{f_t(a, 1, ART) + f_t(the, 5, ART)}{2} + \lambda_{PREP}\, f_t(on, 4, PREP) + \lambda_{NOUN}\, \frac{f_t(cat, 2, NOUN) + f_t(mat, 6, NOUN)}{2} + \mu_{ART}\, g(s', ART) + \mu_{PREP}\, g(s', PREP) + \mu_{NOUN}\, g(s', NOUN)$$
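The weight of one first-order variable can be computed directly from these formulas. A sketch assuming each classifier's confidences f_t are given as a table `conf[t][position][candidate]`; every number below, including h(s', LM) and the ν, λ, μ values (μ chosen negative so that disagreement lowers the weight), is made up for illustration.

```python
def confidence(words, positions, conf):
    """f(s', t): average confidence of classifier t in the words s' has at its positions."""
    return sum(conf[p][words[p]] for p in positions) / len(positions)


def disagreement(words, positions, conf):
    """g(s', t): largest gap between the classifier's best candidate and the word in s'."""
    return max(max(conf[p].values()) - conf[p][words[p]] for p in positions)


def weight(h_lm, f, g, nu, lam, mu):
    """w = nu * h(s', LM) + sum_t lambda_t f(s', t) + sum_t mu_t g(s', t)."""
    return nu * h_lm + sum(lam[t] * f[t] for t in f) + sum(mu[t] * g[t] for t in g)


# s' = "A cat sat on the mat" (1-based positions); "" stands for the deletion candidate
s_prime = {1: "a", 2: "cat", 3: "sat", 4: "on", 5: "the", 6: "mat"}
conf = {  # hypothetical classifier outputs
    "ART": {1: {"a": 0.3, "the": 0.6, "": 0.1}, 5: {"a": 0.1, "the": 0.8, "": 0.1}},
    "PREP": {4: {"on": 0.7, "in": 0.2, "at": 0.1}},
    "NOUN": {2: {"cat": 0.9, "cats": 0.1}, 6: {"mat": 0.95, "mats": 0.05}},
}
f = {t: confidence(s_prime, c.keys(), c) for t, c in conf.items()}
g = {t: disagreement(s_prime, c.keys(), c) for t, c in conf.items()}
w = weight(h_lm=-12.0, f=f, g=g, nu=0.1,
           lam={"ART": 1.0, "PREP": 1.0, "NOUN": 1.0},
           mu={"ART": -1.0, "PREP": -1.0, "NOUN": -1.0})
print(f, g, w)
```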

Modification count constraints

Most of a sentence is usually grammatical

▪ The number of modifications allowed for a particular error category $l$ can be constrained to $N_l$

▪ $\sum_{p,k} Z^{k}_{Art,p} \le N_{Art}$, where $k \ne s[p]$

▪ $\sum_{p,k} Z^{k}_{Prep,p} \le N_{Prep}$, where $k \ne s[p]$

▪ $\sum_{p,k} Z^{k}_{Noun,p} \le N_{Noun}$, where $k \ne s[p]$

Article-Noun agreement constraints

A noun in plural form cannot have a (or an) as its article.

▪ A noun in plural form is mutually exclusive with having the article a (or an)

▪ $Z^{a}_{Art,p_1} + Z^{plural}_{Noun,p_2} \le 1$

Dependency relation constraints

Subject-verb relation and determiner-noun relation

▪ If $w$ belongs to a set of verbs or determiners (are, were, these, all) that take a plural noun, then the noun $n$ is required to be plural

▪ $Z^{plural}_{Noun,p} = 1$
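For a sentence this small the ILP can be checked by exhaustive search: choose exactly one correction per (error type, position), which enforces the sum-to-one constraints by construction, drop choices that violate the extra constraints, and keep the highest total weight. This is a stand-in for an ILP solver that only works for tiny candidate sets; the weights are hypothetical, chosen so that the unconstrained optimum keeps the ungrammatical "A cats".

```python
from itertools import product


def solve_by_enumeration(candidates, constraints=()):
    """Exhaustive stand-in for the ILP.

    candidates:  dict (error_type, position) -> {correction: weight}; picking exactly
                 one correction per key enforces sum_k Z^k_{l,p} = 1.
    constraints: predicates over a full choice {(l, p): k}; all must hold.
    """
    keys = list(candidates)
    best, best_score = None, float("-inf")
    for combo in product(*(candidates[key] for key in keys)):
        choice = dict(zip(keys, combo))
        if not all(ok(choice) for ok in constraints):
            continue
        score = sum(candidates[key][choice[key]] for key in keys)
        if score > best_score:
            best, best_score = choice, score
    return best, best_score


# "A cats sat on the mat": keeping the original word is one of the candidates
candidates = {
    ("Art", 1): {"a": 0.5, "the": 0.3, "ε": 0.1},
    ("Noun", 2): {"singular": 0.2, "plural": 0.6},
}


def agreement(choice):
    """Article-noun agreement: Z^a_{Art,1} + Z^plural_{Noun,2} <= 1."""
    return (choice[("Art", 1)] == "a") + (choice[("Noun", 2)] == "plural") <= 1


print(solve_by_enumeration(candidates))               # a + plural: "A cats ..."
print(solve_by_enumeration(candidates, [agreement]))  # the + plural: "The cats ..."
```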

A motivating case

$s$ = A cat sat on the mat, $s'$ = Cats sat on the mat

$s \rightarrow s_I \rightarrow s'$ via $Z^{\epsilon}_{Art,1}$ and $Z^{plural}_{Noun,2}$

$w_{Art,1,\epsilon}$ will be small due to the missing article

$w_{Noun,2,plural}$ will be small due to the low LM score of ‘A cats’

Relaxing the decomposable assumption

Combine multiple corrections into a single correction

▪ Instead of considering the corrections A/$\epsilon$ and cat/cats separately, consider A cat/$\epsilon$ cats together

▪ Higher order variables

Let $Z = \{Z_u \mid Z_u = Z^{k}_{l,p}, \forall l, p, k\}$ be the set of first order variables

Let $w_u = w_{l,p,k}$ be the weight of $Z_u = Z^{k}_{l,p}$

A second order variable:

$$X_{u,v} = Z_u \land Z_v, \quad Z_u \triangleq Z^{k_1}_{l_1,p_1}, \quad Z_v \triangleq Z^{k_2}_{l_2,p_2}$$

Example: $X_{u,v} = Z^{\epsilon}_{Art,1} \land Z^{plural}_{Noun,2}$, with $s \xrightarrow{X_{u,v}} s'$, $s'$ = Cats sat on the mat.

The weight for a second order variable is defined in the same way as for first order variables

Why?

$$w_{u,v} = \nu_{LM}\, h(s', LM) + \sum_{t \in E} \lambda_t\, f(s', t) + \sum_{t \in E} \mu_t\, g(s', t)$$

New constraints for enforcing consistency between first and second order variables

New objective function
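The consistency constraints are not spelled out here. One standard way to tie a binary variable to the conjunction of two others with linear inequalities is X_{u,v} ≤ Z_u, X_{u,v} ≤ Z_v and X_{u,v} ≥ Z_u + Z_v − 1; this is an assumption about the formulation, not a quotation of it. The check below enumerates all 0/1 values and confirms that these inequalities leave exactly one feasible X for each (Z_u, Z_v), namely their AND.

```python
from itertools import product


def consistent(x, z_u, z_v):
    """Linear constraints linking a second order variable to two first order ones."""
    return x <= z_u and x <= z_v and x >= z_u + z_v - 1


# For every 0/1 setting of (Z_u, Z_v), list the values of X_{u,v} the constraints allow
feasible = {
    (z_u, z_v): [x for x in (0, 1) if consistent(x, z_u, z_v)]
    for z_u, z_v in product((0, 1), repeat=2)
}
print(feasible)   # {(0, 0): [0], (0, 1): [0], (1, 0): [0], (1, 1): [1]}
```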

Statistical Machine Translation for GEC

$$\hat{E} = \arg\max_{E} P(E)\, P(F \mid E)$$

Model GEC as SMT

▪ E = corrected sentence and F = erroneous sentence

▪ Parallel corpora: learner error corpora

GEC is only as good as the SMT system

Increasing the size of parallel corpora covering the targeted types of errors is expensive

A workaround

SMT systems are considered to be meaning preserving

Generate alternate surface renderings of the

meaning expressed in erroneous sentence

Select the most fluent one

Resource: Exploring Grammatical Error Correction with Not-So-Crummy Machine Translation,

Madnani et al.

[Figure: the erroneous sentence is translated by bilingual MT systems 1 … n into pivot languages PL1 … PLn and back, giving round-trip translations RT1 … RTn, which are then either combined (Combine) or one of them is chosen (Select)]

Find the most fluent alternative

Use an n-gram language model

Issue

Language model does not care about preserving sentence

meaning

No single translation is error free in general

To increase the likelihood of whole-sentence

correction

Combine evidence of corrections produced by

each independent translation model

Steps: combination-based approach

Align (original, round-trip translation) pairs

Combine the aligned pairs to form a word lattice

Decode for the best candidate

The task: Align each sentence pair <original,

round trip translation>

Alignment:

▪ For a (hypothesis, reference) pair, perform the edit operations that transform the hypothesis sentence into the reference sentence

▪ Each edit operation involves a cost

▪ Best alignment is that with minimal cost

▪ Also used as machine translation metric

Word error rate (WER)

Levenshtein distance between the <hypothesis, reference> pair

Edit operations: match, insertion, deletion and substitution

Fails to model reordering of words or phrases in translation
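WER can be computed with the standard dynamic program for Levenshtein distance over tokens, normalised by the reference length. A minimal sketch; whitespace tokenisation is an assumption.

```python
def levenshtein(hyp, ref):
    """Minimum number of insertions, deletions and substitutions turning hyp into ref."""
    # d[i][j] = edit distance between hyp[:i] and ref[:j]
    d = [[0] * (len(ref) + 1) for _ in range(len(hyp) + 1)]
    for i in range(len(hyp) + 1):
        d[i][0] = i
    for j in range(len(ref) + 1):
        d[0][j] = j
    for i in range(1, len(hyp) + 1):
        for j in range(1, len(ref) + 1):
            cost = 0 if hyp[i - 1] == ref[j - 1] else 1   # match or substitution
            d[i][j] = min(d[i - 1][j] + 1,                # delete hyp[i-1]
                          d[i][j - 1] + 1,                # insert ref[j-1]
                          d[i - 1][j - 1] + cost)
    return d[-1][-1]


def wer(hyp, ref):
    return levenshtein(hyp, ref) / len(ref)


print(wer("a cat sat on mat".split(), "the cat sat on the mat".split()))
```

Swapping two words ("a b" against "b a") already costs two edits, which is the reordering weakness that TER addresses next.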

Translation Edit Rate (TER)

Introduce shift operation

Resource: TERp System Description, Snover et al.

Toolkit: TER Compute, http://www.cs.umd.edu/~snover/tercom/

REF: saudi arabia denied this week information published in the american new york times

HYP: this week the saudis denied information published in the new york times

WER is too harsh when the output is distorted from the reference

With WER, no credit is given to the system when it generates the right string in the wrong place

TER shifts reflect the editing action of moving a string from one location to another

WER alignment:

REF: **** **** SAUDI ARABIA denied THIS WEEK information published in the AMERICAN new york times

HYP: THIS WEEK THE SAUDIS denied **** **** information published in the ******** new york times

TER alignment:

REF: SAUDI ARABIA denied this week information published in the AMERICAN new york times

HYP: @ THE SAUDIS denied [this week] information published in the ******** new york times

Edits:

Shift “this week” to after “denied”

Substitute “Saudi Arabia” for “the Saudis”

Insert “American”

1 Shift, 2 Substitutions, 1 Insertion

Optimal sequence of edits (with shifts) is very

expensive to find

Use a greedy search to select the set of shifts

At each step, calculate min-edit (Levenshtein) distance (number

of insertions, deletions, substitutions) using dynamic

programming

Choose shift that most reduces min-edit distance

Repeat until no shift remains that reduces min-edit distance

After all shifting is complete, the number of

edits is the number of shifts plus the

remaining edit distance
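The greedy search can be sketched as below. It is a simplification of TER, not the tercom implementation: the only shift constraint enforced is that the shifted phrase must also occur in the reference, phrases are capped at `max_phrase` tokens, and a shift is taken only if it strictly lowers the edit distance.

```python
def levenshtein(hyp, ref):
    """Token-level edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        cur = [i]
        for j, r in enumerate(ref, start=1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (h != r)))
        prev = cur
    return prev[-1]


def occurs_in(phrase, seq):
    n = len(phrase)
    return any(seq[k:k + n] == phrase for k in range(len(seq) - n + 1))


def greedy_ter(hyp, ref, max_phrase=10):
    """Return (number_of_shifts, remaining_edit_distance) after greedy shifting."""
    hyp = list(hyp)
    dist, shifts = levenshtein(hyp, ref), 0
    while True:
        best = None
        for i in range(len(hyp)):
            for j in range(i + 1, min(i + max_phrase, len(hyp)) + 1):
                phrase = hyp[i:j]
                if not occurs_in(phrase, ref):      # only shift strings found in ref
                    continue
                rest = hyp[:i] + hyp[j:]
                for k in range(len(rest) + 1):      # try every destination
                    candidate = rest[:k] + phrase + rest[k:]
                    d = levenshtein(candidate, ref)
                    if d < dist and (best is None or d < best[0]):
                        best = (d, candidate)
        if best is None:            # no shift reduces the edit distance any further
            return shifts, dist
        dist, hyp = best
        shifts += 1


ref = "saudi arabia denied this week information published in the american new york times".split()
hyp = "this week the saudis denied information published in the new york times".split()
shifts, edits = greedy_ter(hyp, ref)
print(shifts, edits, shifts + edits)   # 1 3 4
```

On the running example this reproduces the count above: one shift, then three remaining edits (two substitutions and one insertion).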

REF: DOWNER SAID " IN THE END , ANY bad AGREEMENT will NOT be an agreement we CAN SIGN . "

HYP: HE OUT " EVENTUALLY , ANY WAS *** bad , will *** be an agreement we WILL SIGNED . ”

Shift constraints:

▪ Shifted words must exactly match the reference words at the destination position

▪ The hypothesis words at the original position must not already match the corresponding reference words

▪ The reference words that correspond to the destination position must be misaligned before the shift


TER-Plus (TERp)

Three more edit operations

▪ Stem match, synonym match, phrase substitution

allows shifts if the words being shifted are exactly

the same, are synonyms, stems or paraphrases of

each other, or any such combination

both experience and books are very important about living .

related to the life experiences and the books are very important .

TERp alignment after shifting "life" (top: original sentence, middle: round-trip translation, bottom: edit operation):

----     ----  both  experience   and  ---  books  are  very  important  about  living
related  to    the   experiences  and  the  books  are  very  important  -----  life
[I]      [I]   [S]   [T]          [M]  [I]  [M]    [M]  [M]   [M]        [D]    [Y]*

[I] Insertion

[D] Deletion

[S] Substitution

[M] Match

[T] Stemming

[Y] WordNet Synonym

* Shifting


The task: combine all round-trip translations using their alignments to the original sentence

We need a data structure for the combination: a word lattice

Word Lattice

A directed acyclic graph with a single start point and edges labeled with a word and a weight

A word lattice can represent an exponential number of sentences in polynomial space
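The "exponential number of sentences in polynomial space" claim is easy to check by counting paths. The sketch below stores a lattice as a list of `(src, dst, word, weight)` edges (an assumed representation) and counts start-to-end paths with dynamic programming in topological order. The helper `two_way_lattice` is hypothetical: it builds k positions with two alternative words each, so it has 2k edges but 2^k sentences.

```python
from collections import defaultdict
from graphlib import TopologicalSorter


def count_paths(edges, start, end):
    """Count start-to-end paths in a lattice of (src, dst, word, weight) edges."""
    incoming = defaultdict(list)  # dst -> one src entry per edge (parallel edges count)
    preds = defaultdict(set)      # node -> predecessor set, for topological sorting
    for src, dst, _word, _weight in edges:
        incoming[dst].append(src)
        preds[dst].add(src)
        preds.setdefault(src, set())
    paths = defaultdict(int)
    paths[start] = 1
    for node in TopologicalSorter(preds).static_order():
        if node != start:
            paths[node] = sum(paths[src] for src in incoming[node])
    return paths[end]


def two_way_lattice(k):
    """Hypothetical lattice: k positions, two alternative words at each."""
    return [(i, i + 1, w, 1.0) for i in range(k) for w in (f"a{i}", f"b{i}")]
```

With k = 20 the lattice has 40 edges but encodes 2^20 = 1,048,576 distinct sentences.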

Create the backbone of the lattice from the original sentence:

1 --both/1--> 2 --experience/1--> 3 --and/1--> 4

For each round-trip translation, map its alignment onto the lattice

Action for edits: each insertion, deletion, substitution, stemming, synonymy, and paraphrase operation leads to the creation of new nodes

Action for matches: duplicate nodes are merged

Combining weights: edges produced by different translations between the same pair of nodes are merged, and their weights are added
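The three rules can be sketched as follows, under several assumptions that are not spelled out in the slides: each translation's alignment is given as a list of `(op, word)` columns in original-sentence order after shifts; new nodes are keyed by `(branching node, op, word)`, so identical edits made by different translations become duplicate nodes and merge; deletions become edges with a hypothetical `<eps>` label; and each backbone edge starts with weight 1, as the `both/1` labels suggest. The function name `build_lattice` is illustrative.

```python
from collections import defaultdict

EPS = "<eps>"  # label for deletion and rejoining edges (an assumed convention)


def build_lattice(original, alignments):
    """Combine aligned translations into a weighted word lattice.

    original:   words of the original sentence (the backbone)
    alignments: one list per translation of (op, hyp_word) columns in original
                order after shifts; op is 'M', 'S', 'T', 'Y', 'I' or 'D'
    Returns {(src, dst, label): summed weight}.
    """
    n = len(original)
    edges = defaultdict(int)
    for i, word in enumerate(original):
        edges[(("b", i), ("b", i + 1), word)] += 1  # backbone edges, weight 1
    for columns in alignments:
        cur, pos = ("b", 0), 0
        for op, word in columns:
            if op == "M":
                dst = ("b", pos + 1)        # match: land on the shared backbone node
            else:
                dst = ("e", cur, op, word)  # edit: new node; identical edits share it
            if op != "I":
                pos += 1                    # every op except insertion consumes a word
            # Same (src, dst, label) from another translation merges; weights add.
            edges[(cur, dst, EPS if op == "D" else word)] += 1
            cur = dst
        if cur != ("b", n):
            edges[(cur, ("b", n), EPS)] += 1  # rejoin the end of the backbone
    return dict(edges)
```

If two translations both substitute `the` for `both` and then match `experience and`, they share one new node after `the`, and the edge weights on their common path add up.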

Original: Both experience and books are very important about living.

Russian: And the experience, and a very important book about life.

---  both  experience  ---  and  books  are  very  important  about  living
and  the   experience  ,    and  book   a    very  important  about  life
[I]  [S]   [M]         [I]  [M]  [T]*   [S]  [M]   [M]        [M]    [Y]

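An FST is a tuple $(Q, \Sigma, \Gamma, I, F, \delta, P)$

$Q$: a finite set of states

$\Sigma$: a finite set of input symbols

$\Gamma$: a finite set of output symbols

$I: Q \to \mathbb{R}^+$ (initial-state probabilities)

$F: Q \to \mathbb{R}^+$ (final-state probabilities)

$\delta \subseteq Q \times (\Sigma \cup \{\epsilon\}) \times (\Gamma \cup \{\epsilon\}) \times Q$: the transition relation between states

$P: \delta \to \mathbb{R}$ (transition probabilities)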
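A direct encoding of the tuple makes the definition concrete. In the sketch below, $Q$, $\Sigma$ and $\Gamma$ are left implicit, `arcs` maps each transition in $\delta$ to its probability $P$, and `pair_weight` sums, over all accepting paths, the product of initial, transition and final probabilities for mapping an input string to an output string. The class and function names are hypothetical, epsilon is encoded as the empty string, and the machine in the usage example is invented, reusing the arc labels `a:x/.5`, `ε:y/.5`, `c:z/.7` and final weight `.3` from the figure below.

```python
from dataclasses import dataclass
from functools import lru_cache

EPS = ""  # epsilon label (assumed encoding for this sketch)


@dataclass
class WFST:
    initial: dict  # I: state -> initial-state probability
    final: dict    # F: state -> final-state probability
    arcs: dict     # P: (src, in_sym, out_sym, dst) -> probability; keys form delta


def pair_weight(fst: WFST, inp: str, out: str) -> float:
    """Total probability of mapping inp to out, summed over all accepting paths.
    Assumes there are no cycles made only of eps:eps arcs."""
    @lru_cache(maxsize=None)
    def go(q, i, j):
        # Stop here if both strings are consumed and q is final.
        total = fst.final.get(q, 0.0) if (i, j) == (len(inp), len(out)) else 0.0
        for (src, a, b, dst), p in fst.arcs.items():
            if src != q:
                continue
            if a != EPS and (i == len(inp) or inp[i] != a):
                continue
            if b != EPS and (j == len(out) or out[j] != b):
                continue
            total += p * go(dst, i + (a != EPS), j + (b != EPS))
        return total

    return sum(w * go(q, 0, 0) for q, w in fst.initial.items())


fst = WFST(initial={0: 1.0}, final={1: 0.3},
           arcs={(0, "a", "x", 0): 0.5, (0, EPS, "y", 1): 0.5, (1, "c", "z", 1): 0.7})
```

Here `ac` is translated to `xyz` along a single path with probability 0.5 × 0.5 × 0.7 × 0.3 = 0.0525, while the pair (`ac`, `xz`) gets probability 0.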

Acceptors (FSAs)

▪ Unweighted: string → {false, true} (example arcs: c, a). Grammatical?

▪ Weighted: string → numbers (example arcs: c/.7, a/.5, ε/.5; final weight .3). How grammatical? Better, how likely? Accepted with confidence?

Transducers (FSTs)

▪ Unweighted: string → strings (example arcs: c:z, a:x, ε:y). Markup, correction, translation

▪ Weighted: string → (string, num) pairs (example arcs: c:z/.7, a:x/.5, ε:y/.5; final weight .3). Good markups, good corrections, good translations. Accepted + translated with confidence?

Greedy best first

Both experience and books are very important about life

1-Best

Convert the TERp lattice edge weights to edge costs by multiplying the weights by -1

Output the shortest path through the TERp lattice

Both experience and the books are very important about life (cost: -59)
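The two decoding strategies can be contrasted on a small lattice of `(src, dst, word, weight)` edges. The slides do not define greedy best-first, so the sketch assumes it means following the heaviest outgoing edge from each node. For 1-best, weights are negated into costs as described, and the lowest-cost path is found by dynamic programming in topological order, which stays correct with negative costs on a DAG (Dijkstra's algorithm would not). The function names and the toy lattice are hypothetical.

```python
from collections import defaultdict
from graphlib import TopologicalSorter


def greedy_best_first(edges, start, end):
    """Follow the heaviest outgoing edge from each node (assumed definition)."""
    out = defaultdict(list)
    for src, dst, word, weight in edges:
        out[src].append((weight, word, dst))
    node, words = start, []
    while node != end:
        _weight, word, node = max(out[node], key=lambda e: e[0])
        words.append(word)
    return words


def one_best(edges, start, end):
    """Negate weights into costs and return (words, cost) of the cheapest path."""
    incoming = defaultdict(list)
    preds = defaultdict(set)
    for src, dst, word, weight in edges:
        incoming[dst].append((src, word, -weight))  # cost = -1 * weight
        preds[dst].add(src)
        preds.setdefault(src, set())
    best = {start: (0.0, [])}  # node -> (cost, words)
    for node in TopologicalSorter(preds).static_order():
        for src, word, cost in incoming[node]:
            if src in best:
                c = best[src][0] + cost
                if node not in best or c < best[node][0]:
                    best[node] = (c, best[src][1] + [word])
    cost, words = best[end]
    return words, cost


toy = [(0, 1, "a", 5), (0, 2, "b", 4), (1, 3, "c", 1), (2, 3, "d", 4)]
```

On `toy` the strategies disagree: greedy takes `a` (weight 5) and is then stuck with `c`, for a total weight of 6, whereas 1-best returns `b d` with cost -8.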

Language Model ranked

Find the n-best (lowest-cost) list from the TERp lattice

Rank the list using an n-gram language model

Suggest the top-ranked candidate as the correction
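The ranking step can be sketched with a toy language model. Below, `train_bigram_lm` builds an add-one smoothed bigram model and returns a function giving a sentence's log-probability, and `lm_rank` sorts an n-best list by that score. Both names are hypothetical, the training corpus in the usage example is invented, and extracting the n-best list from the lattice is assumed to have happened already; a real system would use a proper n-gram LM toolkit.

```python
import math
from collections import Counter


def train_bigram_lm(corpus):
    """Add-one smoothed bigram LM (toy); returns sentence -> log-probability."""
    unigrams, bigrams = Counter(), Counter()
    vocab = {"</s>"}
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        vocab.update(tokens[1:])
        unigrams.update(tokens[:-1])  # counts of each word used as a history
        bigrams.update(zip(tokens, tokens[1:]))
    v = len(vocab)

    def logprob(sentence):
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        return sum(math.log((bigrams[(a, b)] + 1) / (unigrams[a] + v))
                   for a, b in zip(tokens, tokens[1:]))

    return logprob


def lm_rank(nbest, logprob):
    """Sort n-best hypotheses by LM log-probability, best first."""
    return sorted(nbest, key=logprob, reverse=True)
```

For instance, a model trained on `["books are important"] * 3` ranks `books are important` above `important are books`, so the former would be suggested.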

Product Re-ranked

Find the n-best (lowest-cost) list from the TERp lattice

Multiply the cost of each hypothesis by its LM score

Rank the hypotheses by this product and choose the best
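The slides do not say how the LM score is expressed, so the sketch below assumes lattice costs are negated weights (lower is better) and the LM score is a positive probability (higher is better). Under those assumptions a smaller, more negative product is better. `product_rerank` and the scores in the usage example are hypothetical.

```python
def product_rerank(nbest, lm_score):
    """Pick the hypothesis with the smallest cost * LM score.

    nbest:    list of (hypothesis, lattice_cost); costs are negated weights
    lm_score: function returning a positive LM probability (assumed)
    """
    return min(nbest, key=lambda item: item[1] * lm_score(item[0]))[0]
```

With costs -59 and -58 and LM scores 0.1 and 0.2, the products are -5.9 and -11.6, so the second hypothesis wins even though the first has the lower lattice cost.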

Language Model Composition

Convert the edge weights in the TERp lattice into probabilities

▪ Weighted Finite State Transducer (WFST) representation ($\mathit{WFST}_{\mathrm{lattice}}$)

Train an n-gram finite-state language model as a WFST ($\mathit{WFST}_{\mathrm{LM}}$)

Compose: $\mathit{WFST}_{\mathrm{comp}} = \mathit{WFST}_{\mathrm{lattice}} \circ \mathit{WFST}_{\mathrm{LM}}$

The shortest path through $\mathit{WFST}_{\mathrm{comp}}$ is suggested as the correction

Relevant Toolkit: OpenFst Toolkit, http://openfst.org/twiki/bin/view/FST/WebHome
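What composition followed by shortest path computes can be sketched without OpenFst for a bigram LM. The states of the composed machine are pairs (lattice node, previous word), and a path's cost is $-\log P_{\mathrm{lattice}} - \log P_{\mathrm{LM}}$. The sketch assumes that edge weights are turned into probabilities by normalizing the weights leaving each node, that the lattice has no epsilon edges, and that `lm_logprob(prev, word)` is a hypothetical bigram scoring function; `compose_and_decode` is an illustrative name, not an OpenFst operation.

```python
import math
from collections import defaultdict
from graphlib import TopologicalSorter


def compose_and_decode(edges, start, end, lm_logprob):
    """Lowest-cost path through (lattice composed with a bigram LM).

    edges:      (src, dst, word, weight) lattice edges with positive weights
    lm_logprob: (prev_word, word) -> log P(word | prev_word)
    Returns (cost, words).
    """
    out, total, preds = defaultdict(list), defaultdict(float), defaultdict(set)
    for src, dst, word, weight in edges:
        out[src].append((dst, word, weight))
        total[src] += weight  # normaliser: outgoing weights become probabilities
        preds[dst].add(src)
        preds.setdefault(src, set())
    states = defaultdict(dict)  # node -> {previous word: (cost, words)}
    states[start]["<s>"] = (0.0, [])
    for node in TopologicalSorter(preds).static_order():
        for prev, (cost, words) in states[node].items():
            for dst, word, weight in out[node]:
                c = cost - math.log(weight / total[node]) - lm_logprob(prev, word)
                if word not in states[dst] or c < states[dst][word][0]:
                    states[dst][word] = (c, words + [word])
    # Close each surviving hypothesis with the end-of-sentence LM probability.
    return min((cost - lm_logprob(prev, "</s>"), words)
               for prev, (cost, words) in states[end].items())
```

On a lattice where `both` outweighs `the` (3 vs 1) before `books`, a bigram LM that strongly prefers `the books` can still make `the books` the lowest-cost output, which is the effect composition is meant to achieve.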

Resources

OpenFst Toolkit

▪ http://openfst.org/twiki/bin/view/FST/WebHome

Tutorials

▪ http://www.openfst.org/twiki/pub/FST/FstSltTutorial/part1.pdf

▪ http://www.openfst.org/twiki/pub/FST/FstSltTutorial/part2.pdf

Compile

fstcompile -isymbols=T.isyms -osymbols=T.osyms T.txt T.fst

Printing

fstprint -isymbols=T.isyms -osymbols=T.osyms T.fst > T.txt

Drawing

fstdraw -isymbols=T.isyms -osymbols=T.osyms T.fst > T.dot

Visualization format

dot -Tpng T.dot > T.png
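`fstcompile` reads a text file with one arc per line (`src dst ilabel olabel weight`) followed by final-state lines (`state weight`). The start state is the source state of the first line. Symbol tables map each symbol to an integer, with `<eps>` conventionally assigned 0. The hypothetical helper below produces the contents of `T.txt`, `T.isyms` and `T.osyms` from a list of arcs. Weights are written as given and are interpreted in the arc type's semiring. For the default standard arc type these are tropical costs, typically negative log probabilities.

```python
def to_openfst_text(arcs, finals, start):
    """Return (fst_text, isyms_text, osyms_text) for fstcompile.

    arcs:   list of (src, dst, in_sym, out_sym, weight)
    finals: {state: final weight}
    start:  start state; its arcs are written first so it becomes the initial state
    """
    ordered = sorted(arcs, key=lambda a: a[0] != start)  # stable: start arcs first
    fst_lines = [f"{s}\t{d}\t{i}\t{o}\t{w}" for s, d, i, o, w in ordered]
    fst_lines += [f"{q}\t{w}" for q, w in finals.items()]

    def symtab(symbols):
        syms = ["<eps>"] + sorted(set(symbols) - {"<eps>"})
        return "\n".join(f"{sym}\t{k}" for k, sym in enumerate(syms)) + "\n"

    return ("\n".join(fst_lines) + "\n",
            symtab(a[2] for a in arcs),
            symtab(a[3] for a in arcs))
```

Writing the three strings to `T.txt`, `T.isyms` and `T.osyms` makes them suitable inputs for the `fstcompile` command in this list.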

$ fstunion a.fst b.fst out.fst

$ fstconcat a.fst b.fst out.fst

Function composition: $f \circ g$

(1, c, a, 0.3, 1)

(2, a, b, 0.6, 2)
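Reading the two tuples as arcs of the form (source, input, output, weight, destination), an assumption, since the slide does not label the fields, composition pairs an arc of f with an arc of g whenever f's output symbol equals g's input symbol. The sketch below does this for epsilon-free machines, with composed states as (f state, g state) pairs and weights multiplied as in the probability semiring. It enumerates all arc pairs without pruning unreachable states, and initial and final weights (also products over state pairs) are omitted. `compose_arcs` is a hypothetical name.

```python
from itertools import product


def compose_arcs(f_arcs, g_arcs):
    """Epsilon-free composition of arc lists (f first, then g).

    Arcs are (src, in_sym, out_sym, weight, dst). Two arcs combine when f's
    output equals g's input; the composed arc maps f's input to g's output.
    """
    composed = []
    for (p, a, b, w1, p2), (q, b2, c, w2, q2) in product(f_arcs, g_arcs):
        if b == b2:
            composed.append(((p, q), a, c, w1 * w2, (p2, q2)))
    return composed
```

Applied to the two tuples above, this yields a single arc from state (1, 2) to (1, 2) that reads c, writes b, and has weight 0.3 × 0.6 = 0.18.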

Learner error corpora

Grammatical error detection

Grammatical error correction

Evaluating error detection and correction systems