Speech Processing

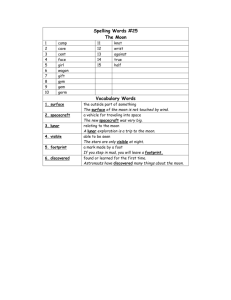

Presented by Erin Palmer

Speech processing is widely used today
▪ Can you think of some examples?
  ▪ Phone dialog systems (bank, Amtrak)
  ▪ Computer’s dictation feature
  ▪ Amazon’s Kindle (TTS)
  ▪ Cell phone GPS
  ▪ Others?
▪ Speech processing:
  ▪ Speech Recognition
  ▪ Speech Generation (Text to Speech)

Text?
▪ Easy: each letter is an entity, and words are composed of letters
▪ The computer stores each letter (character) to form words (strings)

Images?
▪ Slightly more complicated: each pixel has RGB values, stored in a 2D array

But what about speech?

Unit: phoneme
▪ A phoneme is an interval of speech that represents a single unit sound
▪ Denoted by slashes: /k/ in kit
▪ In English, the correspondence between phonemes and letters is poor
  ▪ /k/ is the same sound in kit and cat
  ▪ /ʃ/ is the "sh" sound in shell

All Phonemes of the English Language
▪ In the English language there is a total of:
  ▪ 26 letters
  ▪ 43 phonemes

Waveform
▪ Constructed from raw speech by sampling the air pressure at regular points in time (how often is set by the sample rate)
▪ The sampled points are connected by a curve
▪ The signal is quantized, so it needs to be smoothed, and that is the waveform that is output

Spectrogram
▪ Shows amplitude as a function of frequency and time
  ▪ time (x-axis) vs. frequency (y-axis)
▪ Gray-scale indicates the energy at each particular point
  ▪ so color is the 3rd dimension
▪ Areas of the spectrogram look denser where the amplitudes are greater
▪ The regions with the greatest energy are where the vowels were pronounced, for example /ee/ in “speech”.
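To make the waveform and spectrogram ideas concrete, here is a minimal sketch in Python. It samples two pure tones, quantizes each sample to a 16-bit integer, and computes a magnitude spectrum for each short frame with a naive discrete Fourier transform. The sample rate, frame size, tone frequencies, and all function names are assumptions chosen for illustration; practical tools would use a fast Fourier transform and a window function instead.

```python
import cmath
import math

SAMPLE_RATE = 8000   # samples per second (assumed for this sketch)
FRAME_SIZE = 200     # samples per frame, so each bin is 8000 / 200 = 40 Hz wide
HOP = 100            # step between frame starts (frames overlap by half)


def sample_tone(freq_hz, n_samples, sample_rate=SAMPLE_RATE):
    """Sample a pure sine tone and quantize each sample to a 16-bit integer."""
    return [
        round(32767 * math.sin(2 * math.pi * freq_hz * t / sample_rate))
        for t in range(n_samples)
    ]


def frame_spectrum(frame):
    """Magnitude of the DFT for bins 0..N/2 (naive O(N^2) version)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(samples, frame_size=FRAME_SIZE, hop=HOP):
    """One magnitude spectrum per frame: rows are time, columns are frequency."""
    starts = range(0, len(samples) - frame_size + 1, hop)
    return [frame_spectrum(samples[s:s + frame_size]) for s in starts]


def peak_frequency(spectrum, frame_size=FRAME_SIZE, sample_rate=SAMPLE_RATE):
    """Frequency in Hz of the bin with the most energy."""
    k = max(range(len(spectrum)), key=lambda i: spectrum[i])
    return k * sample_rate / frame_size


# A 400 Hz tone followed by a 1200 Hz tone, 400 samples each.
signal = sample_tone(400, 400) + sample_tone(1200, 400)
spec = spectrogram(signal)

print(peak_frequency(spec[0]))    # first frame lies entirely in the 400 Hz tone
print(peak_frequency(spec[-1]))   # last frame lies entirely in the 1200 Hz tone
```

Each row of `spec` is one time step and each column is one frequency bin; drawing the magnitudes in gray-scale gives the kind of picture the Spectrogram slide describes.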
▪ The spectrogram also shows a distinct pattern for each phoneme

Intensity
▪ Measure of the loudness of how one talks
  ▪ Through the course of a word, the intensity goes up then down
  ▪ In between words, the intensity goes down to zero

Pitch
▪ Measure of the fundamental frequency of the speaker’s speech
▪ It is measured within one word
▪ The pitch doesn’t change too drastically
  ▪ A good way to detect an error is to see how drastically the pitch changes
▪ In statements the pitch stays roughly constant; in a question or an exclamation, it goes up on the thing being asked or exclaimed about

Waveform in practice
▪ The waveform is used to do various speech-related tasks on a computer
▪ Stored in the .wav format
▪ Speech recognition and TTS both use this representation, as all other information can be derived from it

The problem of language understanding is very difficult!
▪ Training is required
▪ What constitutes good training?
  ▪ Depends on what you want!
  ▪ Better recognition = more samples
▪ Speaker-specific models: 1 speaker generates lots of examples
  ▪ Good for this speaker, but horrible for everyone else
▪ More general models: area-specific
  ▪ The more speakers the better, but limited in scope, for instance only technical language

Speech recognition consists of 2 parts:
1. Recognition of the phonemes
2. Recognition of the words

The two parts are done using the following techniques:
▪ Method 1: Recognition by template
▪ Method 2: Using a combination of:
  ▪ HMM (Hidden Markov Models)
  ▪ Language Models

How is it done?
▪ Record templates from a user and store them in a library
▪ Record the sample when used and compare it against the library examples
▪ Select the closest example

Uses:
▪ Voice dialing system on a cell phone
▪ Simple command and control
▪ Speaker ID

Matching
▪ Matching is done in the frequency domain
▪ Different utterances of the same word might still vary quite a bit
▪ Solution: use shift-matching (commonly known as dynamic time warping)
▪ Fill in a grid with one square per pair (template frame i, sample frame j). For each square compute:
  ▪ Cost(i, j) = Dist(template[i], sample[j]) + smallest_of(Cost(i-1, j), Cost(i, j-1), Cost(i-1, j-1))
  ▪ Remember which choice you took in each square so the best path can be traced back

Issues
▪ What happens with no matches?
  ▪ Need to deal with the "none of the above" case
▪ What happens when there are a lot of templates?
  ▪ Harder to choose
  ▪ Costly
▪ Choose templates that are very different from each other

Advantages
▪ Works well for a small number of templates (<20)
▪ Language independent
▪ Speaker specific
▪ Easy to train (the end user controls it)

Disadvantages
▪ Limited by the number of templates
▪ Speaker specific
▪ Needs actual training examples

Main problem: there are a lot of words!
▪ What if we used one phoneme per template?
▪ That would work better in terms of generality, but some issues still remain
▪ A better model: HMMs for the Acoustic Model, plus Language Models

Want to go from Acoustics to Text
▪ Acoustic Modeling:
  ▪ Recognize all forms of phonemes
  ▪ Probability of phonemes given acoustics
▪ Language Modeling:
  ▪ Expectation of what might be said
  ▪ Probability of word strings
▪ Need both to do recognition

Acoustic Modeling
▪ Similar to templates for each phoneme
▪ Each phoneme can be said very many ways
▪ Can average over multiple examples:
  ▪ Different phonetic contexts, e.g. “sow” vs. “see”
  ▪ Different people
  ▪ Different acoustic environments
  ▪ Different channels

Markov Process
▪ The future can be predicted from the past
▪ P(X_{t+1} | X_t, X_{t-1}, …, X_{t-m})

Hidden Markov Models
▪ The state is unknown
▪ A probability is given for each state
▪ So: given observation O and model M
  ▪ Efficiently find P(O|M)
  ▪ Find the sum of all path probabilities
  ▪ Each path probability is the product of the probabilities along its state sequence
▪ Finding the single most likely state sequence is called decoding
  ▪ Use dynamic programming to find the best path

Using HMMs for recognition
▪ Use one HMM for each phone type
▪ Each observation gives a probability distribution over possible phone types
▪ Thus we can find the most probable sequence
▪ The Viterbi algorithm is used to find the best path

Not all phones are equi-probable!
▪ Find sequences that maximize P(W | O)
▪ Bayes’ Law: P(W | O) = P(W) P(O|W) / P(O)
  ▪ HMMs give us P(O|W)
  ▪ Language model: P(W)
  ▪ P(O) is the same for every candidate W, so it can be ignored when comparing

Language Models
▪ What are the most common words?
▪ Different domains have different distributions
  ▪ Computer Science textbook
  ▪ Kids’ books
▪ Context helps prediction

Suppose you have the following data:
▪ Source: “Goodnight Moon” by Margaret Wise Brown

In the great green room
There was a telephone
And a red balloon
And a picture of –
The cow jumping over the moon
…
Goodnight room
Goodnight moon
Goodnight cow jumping over the moon

Let’s build a language model!
▪ Can have uni-gram (1-word) and bi-gram (2-word) models
▪ But first we have to preprocess the data!

Data Preprocessing
▪ First remove all line breaks and punctuation
  ▪ In the great green room There was a telephone And a red balloon And a picture of The cow jumping over the moon Goodnight room Goodnight moon Goodnight cow jumping over the moon
▪ For the purposes of speech recognition we don’t care about capitalization, so get rid of that!
  ▪ in the great green room there was a telephone and a red balloon and a picture of the cow jumping over the moon goodnight room goodnight moon goodnight cow jumping over the moon

Now we have our training data!
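The preprocessing steps can be sketched in a few lines of Python. This is a minimal sketch, assuming that every character other than letters, digits, and whitespace counts as punctuation; the names `RAW_TEXT` and `preprocess` are made up for this example.

```python
import re

# The excerpt as it appears on the slide, including the dash and the ellipsis.
RAW_TEXT = """In the great green room
There was a telephone
And a red balloon
And a picture of –
The cow jumping over the moon
…
Goodnight room
Goodnight moon
Goodnight cow jumping over the moon"""


def preprocess(text):
    """Strip punctuation, drop line breaks, lowercase, and split into words."""
    # Assumption: anything that is not a word character or whitespace
    # is treated as punctuation and replaced by a space.
    no_punctuation = re.sub(r"[^\w\s]", " ", text)
    # split() with no arguments also swallows the line breaks.
    return no_punctuation.lower().split()


tokens = preprocess(RAW_TEXT)
print(" ".join(tokens))
print(len(tokens))  # 33 words of training data
```

Splitting on whitespace at the end removes the line breaks for free, so the result is one flat list of lowercase words.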
▪ Note: for text recognition, things like sentences and punctuation matter, but we usually replace those with tags, e.g. <sentence>I have a cat</sentence>

Uni-gram model
▪ Now count up how many of each word we have (uni-gram)
▪ Then compute the probability of each word (count / total) and voila!

Word        Count   Probability
in          1       0.03
the         4       0.12
great       1       0.03
green       1       0.03
room        2       0.06
there       1       0.03
was         1       0.03
a           3       0.09
telephone   1       0.03
and         2       0.06
red         1       0.03
balloon     1       0.03
picture     1       0.03
of          1       0.03
cow         2       0.06
jumping     2       0.06
over        2       0.06
moon        3       0.09
goodnight   3       0.09
TOTAL       33      1

(Probabilities are rounded to two decimals.)

What are bigram models? And what are they good for?
▪ More dependent on the context, so they would avoid word combinations like
  ▪ “telephone room”
  ▪ “I green like”
▪ Can also use grammars, but the process of generating those is pretty complex

How can we improve?
▪ Look at more than just 2 words (tri-grams, etc.)
▪ Replace words with types
  ▪ “I am going to <City>” instead of “I am going to Paris”

Microsoft’s Dictation tool

Speech Synthesis
▪ Text Analysis
  ▪ Strings of characters to words
▪ Linguistic Analysis
  ▪ From words to pronunciations and prosody
▪ Waveform Synthesis
  ▪ From pronunciations to waveforms

What can pose difficulties?
▪ Numbers
▪ Abbreviations and letter sequences
▪ Spelling errors
▪ Punctuation
▪ Text layout

AT&T’s speech synthesizer
▪ http://www.research.att.com/~ttsweb/tts/demo.php#top

Windows TTS

Some of the slides were adapted from:
▪ www.speech.cs.cmu.edu/15-492
▪ Wikipedia.com
▪ Amanda Stent’s Speech Processing slides
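Returning to the “Goodnight Moon” language model: the uni-gram and bi-gram counts can be reproduced with a short Python sketch. This is one simple way to do it, assuming no smoothing (unseen word pairs get probability 0); the names `bigram_prob` and `sequence_score` are hypothetical helpers for this example only.

```python
from collections import Counter

# Preprocessed training data from the slides (lowercase, no punctuation).
TRAINING_TEXT = (
    "in the great green room there was a telephone and a red balloon "
    "and a picture of the cow jumping over the moon goodnight room "
    "goodnight moon goodnight cow jumping over the moon"
)
tokens = TRAINING_TEXT.split()

# Uni-gram model: P(word) = count(word) / total number of words.
unigram_counts = Counter(tokens)
total = sum(unigram_counts.values())
unigram_prob = {word: count / total for word, count in unigram_counts.items()}

# Bi-gram model: P(word | prev) = count(prev word) / count(prev as a context).
bigram_counts = Counter(zip(tokens, tokens[1:]))
context_counts = Counter(tokens[:-1])


def bigram_prob(prev, word):
    """P(word | prev); 0.0 for pairs never seen in training (no smoothing)."""
    if context_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, word)] / context_counts[prev]


def sequence_score(words):
    """Score a word sequence: uni-gram for the first word, bi-grams after."""
    score = unigram_prob.get(words[0], 0.0)
    for prev, word in zip(words, words[1:]):
        score *= bigram_prob(prev, word)
    return score


print(unigram_counts["the"], total)        # 4 33
print(round(unigram_prob["the"], 2))       # 0.12
print(bigram_prob("the", "moon"))          # 0.5
print(bigram_prob("telephone", "room"))    # 0.0
```

With this model “goodnight moon” gets a higher score than “telephone room”, which is the kind of unlikely word combination a bi-gram model is meant to rule out. A practical system would add smoothing so that unseen pairs are unlikely rather than impossible.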