Systeemsoftware_Chapter_14

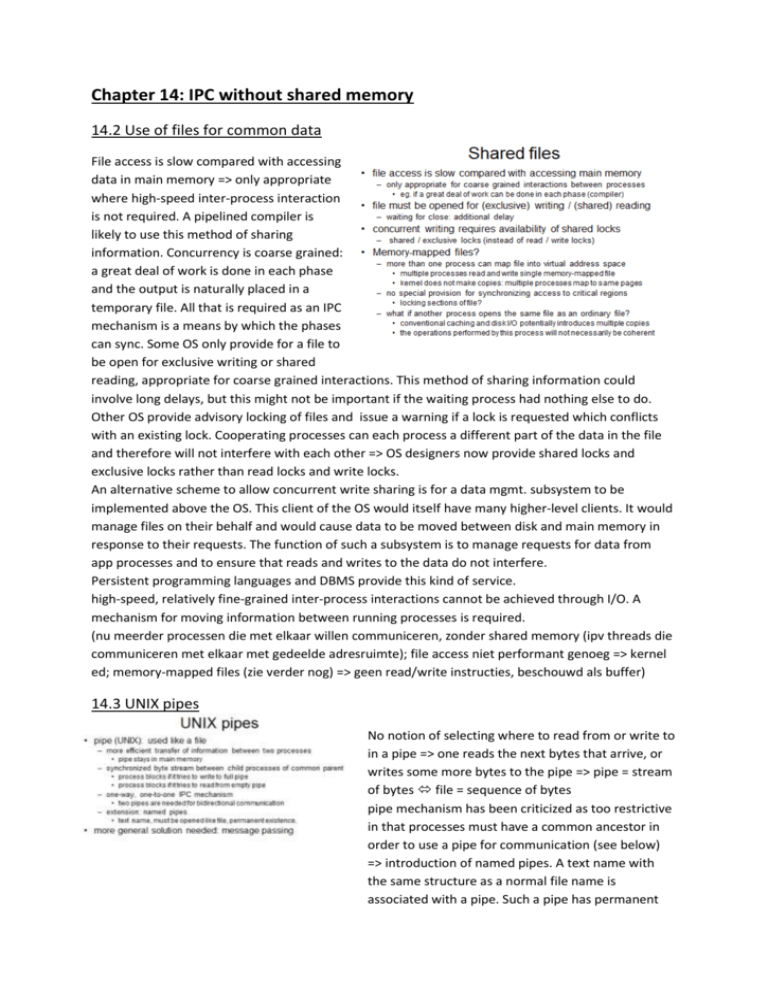

Chapter 14: IPC without shared memory

14.2 Use of files for common data

File access is slow compared with accessing data in main memory => files are only appropriate where high-speed inter-process interaction is not required. A pipelined compiler is likely to use this method of sharing information: concurrency is coarse grained, a great deal of work is done in each phase, and the output of each phase is naturally placed in a temporary file. All that is required as an IPC mechanism is a means by which the phases can synchronize.

Some OSs only allow a file to be open either for exclusive writing or for shared reading, which suits coarse-grained interactions. This way of sharing information can involve long delays, but that may not matter if the waiting process has nothing else to do.

Other OSs provide advisory locking of files and issue a warning if a lock is requested that conflicts with an existing lock. Cooperating processes may each work on a different part of the data in a file, even when writing, and therefore not interfere with each other => OS designers now provide shared locks and exclusive locks rather than read locks and write locks.

An alternative scheme to allow concurrent write sharing is to implement a data management subsystem above the OS. This client of the OS would itself have many higher-level clients: it manages files on their behalf and moves data between disk and main memory in response to their requests. Its function is to handle requests for data from application processes and to ensure that reads and writes to the data do not interfere. Persistent programming languages and DBMSs provide this kind of service.

High-speed, relatively fine-grained inter-process interactions cannot be achieved through I/O => a mechanism for moving information between running processes is required.
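A minimal sketch of shared versus exclusive advisory locks, using Python's `fcntl.flock` (POSIX only; assumes a local Linux or BSD/macOS file system). The helper names `try_lock` and `demo_locks` are hypothetical. Three separate `open()` calls on the same file stand in for three cooperating processes, because `flock()` treats separate open file descriptions independently.

```python
import fcntl
import os
import tempfile


def try_lock(f, mode):
    """Try to take an flock() lock without blocking; return True on success."""
    try:
        fcntl.flock(f.fileno(), mode | fcntl.LOCK_NB)
        return True
    except BlockingIOError:  # EWOULDBLOCK: conflicts with a lock already held
        return False


def demo_locks(path):
    """Show shared vs exclusive advisory locks on one file."""
    results = {}
    with open(path, "r+b") as a, open(path, "r+b") as b, open(path, "r+b") as c:
        results["shared_a"] = try_lock(a, fcntl.LOCK_SH)
        results["shared_b"] = try_lock(b, fcntl.LOCK_SH)     # shared + shared: compatible
        results["exclusive_c"] = try_lock(c, fcntl.LOCK_EX)  # conflicts with the shared locks

        # Advisory only: c holds no lock, yet the OS does not stop it writing.
        c.write(b"ignored the locks")
        c.flush()

        fcntl.flock(a.fileno(), fcntl.LOCK_UN)
        fcntl.flock(b.fileno(), fcntl.LOCK_UN)
        results["exclusive_c_after_unlock"] = try_lock(c, fcntl.LOCK_EX)
    return results  # closing the files releases any remaining locks


if __name__ == "__main__":
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        print(demo_locks(path))
    finally:
        os.remove(path)
```

The write through `c` succeeds even though other handles hold shared locks: advisory locks only constrain processes that actually ask for them.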
(Now: several processes that want to communicate with each other without shared memory, as opposed to threads that communicate through a shared address space. File access is not efficient enough => kernel etc.; memory-mapped files (see later) => no read/write instructions, the file is treated as a buffer.)

14.3 UNIX pipes

There is no notion of selecting where to read from or write to in a pipe: a process reads the next bytes that arrive, or writes some more bytes to the pipe => a pipe is a stream of bytes, whereas a file is a sequence of bytes that can be accessed at any position.

The pipe mechanism has been criticized as too restrictive, in that processes must have a common ancestor in order to use a pipe for communication (see below) => named pipes were introduced. A text name with the same structure as a normal file name is associated with the pipe. Such a pipe exists permanently in the UNIX namespace and can be opened and used by processes under the same access control policies that exist for files (there is no longer a requirement for a family relationship between the processes).

(A pipe as it exists in Java is not an exact representation of how pipes are used here => in Java it serves communication between threads, i.e. again shared memory. A pipe is limited in size and can only be used between processes on the same system; we are looking for something more general => message passing.)

Fig: a pipe set up so that A can pass data to B (see also Ch13).

(Fig right) A process is created in UNIX by means of a fork system call, which causes a new address space to be created. The address space of the parent is replicated for the child. Any files the parent has opened, or pipes the parent has created, become available to its children. The children can be programmed to communicate via the pipes they inherit. The processes that may use a pipe are therefore the children, grandchildren, or in general the descendants of the process that created the pipe. The figure is an example of a pipe in use by two processes. (The identifiers of a pipe also go into the file table, as if the pipe were a file (which it is not!)
– typical of UNIX.)

14.4 Asynchronous message passing

(Right) Typical message: a structured message. The message transport system uses the message header to deliver the message to the correct destination. The contents of the message (the message body) are of no concern to the message transport system; they are agreed between sender and receiver. Application protocols associated with specific process interactions determine how a sender should structure messages so that the receiver can interpret them correctly (= a form of security check).

The type field of the message might: indicate whether the message is an initial request for some service or a reply to some previous request; indicate the priority of the message; or be a subaddress within the destination process, indicating a particular service required.

There is an implicit assumption here that the source and destination fields of the message each indicate a single process. That might be the case, but a more general and flexible scheme is often desirable.

Outline of a message passing mechanism: A wants to send a message to B. Assumption: each knows the appropriate name for the other. A builds up the message, then executes the SEND primitive with parameters indicating to whom the message should be delivered and the address at which the message can be found (in a data structure in A's address space). B reaches the point in its code where it needs to synchronize with and receive data from A, and executes WAIT (or perhaps RECEIVE) with parameters indicating from whom the message is expected and the address (in B's address space) where the message should be put.
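The SEND/WAIT outline can be sketched in Python, with threads standing in for processes and a shared service object standing in for the kernel's message buffers. `Message`, `MessageService`, `send`, `wait` and the `msg_type` values are hypothetical names; the header/body split follows the typical message described above. In this sketch SEND never delays the sender (the message is buffered per destination), and WAIT names a single expected sender, in line with the assumption that each side knows the other's name.

```python
import threading
from dataclasses import dataclass
from typing import Any


@dataclass
class Message:
    # Header: read by the message transport system to deliver the message.
    source: str
    destination: str
    msg_type: str  # e.g. "request" or "reply" (hypothetical values)
    # Body: opaque to the transport system; its layout is agreed by sender and receiver.
    body: Any


class MessageService:
    """Hypothetical asynchronous message service.

    send() never blocks: the message is buffered for its destination.
    wait() blocks the receiver until a message from the named sender is buffered.
    """

    def __init__(self):
        self._buffers = {}  # destination name -> list of buffered Message objects
        self._cond = threading.Condition()

    def send(self, msg):
        with self._cond:
            self._buffers.setdefault(msg.destination, []).append(msg)
            self._cond.notify_all()  # the message doubles as a wake-up-waiting signal

    def wait(self, receiver, sender, timeout=None):
        def take():
            # Remove and return the oldest buffered message from `sender`, if any.
            pending = self._buffers.get(receiver, [])
            for i, m in enumerate(pending):
                if m.source == sender:
                    return pending.pop(i)
            return None

        with self._cond:
            msg = self._cond.wait_for(take, timeout)  # blocked until take() succeeds
        if msg is None:
            raise TimeoutError(f"{receiver}: no message from {sender}")
        return msg
```

A message sent before the receiver executes WAIT simply stays in the buffer; a receiver that waits first is blocked and becomes runnable when a matching message arrives.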
Assumptions: each knows the identity of the other; it is appropriate for the sender to specify a single recipient; it is appropriate for the receiver to specify a single sender; A and B agree on the size and contents of the message (language-level type checking could be helpful here).

(The sender never blocks => there must be buffer space somewhere. It looks as if the message is copied twice (with PAGING, a page can be remapped instead of copied). If B is already waiting, the message can be transferred directly; if not, the message first goes into a buffer.)

The sending process is not delayed on SEND => messages must be buffered if the destination process has not yet executed WAIT for that message. The message acts as a wake-up-waiting signal as well as passing data. If a process executes WAIT and there is no message in the buffer, it is blocked; when the message arrives it becomes runnable again, and the message is copied from the sender's address space into the receiver's address space, if necessary via a buffer maintained by the message passing service.

14.5 Variations on basic message passing

14.5.2 Request and reply primitives

(Figure above) The message system may distinguish between requests and replies, and this could be reflected in the message passing primitives:
SEND-REQUEST (receiver-id, message)
WAIT-REQUEST (sender-id, space for message)
SEND-REPLY (original-sender-id, reply-message)
WAIT-REPLY (original-receiver-id, space for reply-message)
Typical interaction: after sending a request, the sender process is free to carry on working in parallel with the receiver until it needs to synchronize with the reply. It might be better to enforce that SEND-REQUEST and WAIT-REPLY are used as an indivisible pair, perhaps provided to the user as a single REQUEST-SERVICE primitive.
This would avoid the complexity of parallel operation, and would avoid context-switching overhead in the common case where the sender has nothing to do in parallel with the service being carried out and, when scheduled, immediately blocks waiting for the reply, as shown in [fig left]. REQUEST-SERVICE corresponds to a procedure call. (Request and reply are combined because a process that sends a request waits for the answer anyway, and blocks.) If processes are single-threaded, the requesting process blocks until a reply is returned. If processes are multi-threaded and threads can be created dynamically, a new thread may be created to make the request for service.

14.5.3 Multiple ports per process

Extending the idea of allowing a process to select precisely which messages it is prepared to receive, and in what order, a process may be able to specify a number of ports on which it will receive messages. With this facility, a port could be associated with each of the functions a process provides as a service to other processes, and a port could be allocated to receive replies to requests this process has made (shown in the figure). Again, unnecessary blocking must be avoided. This may be achieved either by allowing a process to poll its ports to test for waiting messages, or by supporting the ability to specify a set of ports on which it is prepared to receive a message. A priority ordering may be possible, or a nondeterministic selection may be made from the ports that have messages available.
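A minimal, single-threaded sketch of a process with several input ports, using the bounded-buffer example from the notes: producers send items to a `put` port, and consumers send requests to a `get` port, each request carrying the consumer's own reply port. `BufferManager`, `acceptable_ports` and `step` are hypothetical names, and `deque`s stand in for ports. Instead of blocking on one port, the process examines all ports, applies a guard to each (no `get` while the buffer is empty, no `put` while it is full) and serves the first acceptable port in a fixed priority order; `step` returning `None` marks the point where a real process would block.

```python
from collections import deque


class BufferManager:
    """Hypothetical buffer-manager process with one input port per operation.

    'put' port: messages from producers (the item to store).
    'get' port: requests from consumers (each message is the consumer's reply port).
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = deque()
        self.ports = {"put": deque(), "get": deque()}

    def acceptable_ports(self):
        """Ports with a waiting message AND a true guard, in priority order."""
        guards = {
            "put": len(self.items) < self.capacity,  # room for another item
            "get": len(self.items) > 0,              # something to hand out
        }
        return [p for p in ("put", "get") if self.ports[p] and guards[p]]

    def step(self):
        """Handle one message; return the port used, or None if the process
        would have to block because no acceptable message is waiting."""
        ready = self.acceptable_ports()
        if not ready:
            return None
        port = ready[0]  # fixed priority; random.choice(ready) would model nondeterminism
        msg = self.ports[port].popleft()
        if port == "put":
            self.items.append(msg)
        else:
            msg.append(self.items.popleft())  # reply on the consumer's reply port
        return port
```

Usage: with `capacity=1`, a waiting `get` request is ignored until a producer has delivered an item, and a second `put` is held back until the buffer has been emptied again.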
(A port is coupled to a specific operation, e.g. messages from producers arrive via port 1 and messages from consumers via port 2. Process P does not simply block when there is nothing in port 0 => it checks its ports to see whether any of them contain a message. Take a buffer: if the buffer is empty, we do not look at port 2 (consumers), because there is nothing in the buffer to hand out; we do look at port 1 (producers) => cf. the rendezvous principle from Ada.)

14.5.4 Input ports, output ports and channels

(A step further: the sender only needs to know the local port to which it sends, not who the receiver is => the binding is not programmed but configured; sender and receiver are decoupled.) Only at configuration time is it specified which other process's input port is associated with this output port, or which other process is to receive from this channel => greater flexibility.
Fig 1: binding of a single output port to a single input port.
Fig 2: many-to-one binding.

14.5.5 Global ports

(The sender sends a message to a mailbox => it only needs to know the mailbox, not the receiver. Conversely, receivers fetch messages from the mailbox without knowing the sender. Any to one of many: only one receiver has to process the message, and it does not matter which one. An extra level of indirection: senders and receivers no longer need to know each other, only the mailbox.) Equivalent server processes may take request-for-work messages from the mailbox, so a new server can easily be introduced or a crashed server removed.
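A sketch combining 14.5.4 and 14.5.5, with threads standing in for processes: each client writes only to its own output port, and a separate configuration step binds all those ports to one global port (a `queue.Queue` acting as the mailbox), from which several equivalent server threads take work. `OutputPort`, `bind`, `server`, `run` and the `None` shutdown message are hypothetical. Which server handles a given request is not determined, and adding a server only means starting another thread on the same queue.

```python
import queue
import threading


class OutputPort:
    """A sender's local output port. The program only calls send();
    what the port is bound to is decided separately, at configuration time."""

    def __init__(self):
        self._target = None

    def bind(self, target):
        self._target = target  # configuration step, not part of the sender's code

    def send(self, msg):
        if self._target is None:
            raise RuntimeError("output port is not bound")
        self._target.put(msg)


def server(name, global_port, results):
    """Equivalent server: takes any request-for-work message from the global port."""
    while True:
        request = global_port.get()
        if request is None:  # hypothetical shutdown message
            return
        results.put((name, request * request))


def run(num_clients=3, num_servers=2, jobs_per_client=4):
    global_port = queue.Queue()  # the mailbox shared by all servers
    results = queue.Queue()
    servers = [
        threading.Thread(target=server, args=(f"S{i}", global_port, results))
        for i in range(num_servers)
    ]
    for s in servers:
        s.start()

    # Configuration: bind every client's output port to the same global port
    # (many-to-one binding). Clients never learn which server does the work.
    ports = [OutputPort() for _ in range(num_clients)]
    for p in ports:
        p.bind(global_port)

    for c, p in enumerate(ports):
        for j in range(jobs_per_client):
            p.send(c * jobs_per_client + j)

    for _ in servers:  # one shutdown message per server, queued after all the work
        global_port.put(None)
    for s in servers:
        s.join()

    out = []
    while not results.empty():
        out.append(results.get())
    return out
```

Because the queue is FIFO and the shutdown messages are queued last, every request is taken by some server before any server stops.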
(The same effect is achieved as with many-to-many producer-consumer buffering using a monitor or programmed with semaphores.)

14.5.6 Broadcast and multicast

(One to many => the message must be processed by several receivers. Top figure: very similar to the bounded buffer problem with producers and consumers.) It is possible to achieve 'from anyone' (many to one) or 'to everyone' (broadcast) simply by using a special name in the message header.
Channels: the ability to receive messages from a specific group of processes.
Global ports: the ability to send to a group of anonymous, identical processes. Global ports also support the ability to receive messages from 'anyone', or from a selected group, depending on how the name of the global port is used and controlled.
An alternative approach is to support the naming of groups of processes in a system as well as individual processes. The semantics of sending to a group name and receiving from a group name must be defined very carefully. In the previous discussion, a send to a global port implied that one anonymous process would take the message and process it. This is a common requirement. A different requirement is indicated by the term multicast: in this case the message is sent to every process in the group. The figure illustrates this distinction.

14.6 Implementation of asynchronous message passing

(A message is a structured object: how big can such an object be? Enough space is needed in the kernel to buffer the various messages => how much buffer space do you provide? 4 GB is the maximum size of the (virtual) address space on a 32-bit system, so how could a message be 4 GB?
It would not fit in the kernel (for buffering); 4 GB is the absolute maximum size of a message, it cannot be larger, and in practice you do not pass a 4 GB message => paging techniques: the receiver is notified that there is a message and then requests it page by page.)

14.7 Synchronous message passing

(Fig 1: synchronous. Disadvantage: the sender can block; advantage: the message no longer has to be copied via an intermediate buffer.)

A message system in which the sender is delayed on SEND until the receiver executes WAIT avoids the overhead of message buffer management. Such a system is outlined in the figure above. The message is copied once, from sender to receiver, when they have synchronized. The behaviour of such a system is easier to analyse, since synchronizations are explicit.

Fig 2: possible synchronization delay. In practice, explicit synchronization is not feasible in all process interactions; e.g. an important system service process must not be committed to waiting in order to send a reply to one of its many client processes. The client cannot be relied on to be waiting for the reply. Processes whose sole function is managing buffers are often built to avoid this synchronization delay in synchronous systems. We have removed message buffering from the message passing service only to find it rebuilt at the process level. Advantage: the kernel has less overhead than in asynchronous systems, and the time taken to manage message buffers is used only by the processes that need them, avoiding system overhead for the others. (Multi-threading: if one thread blocks, another can continue => this is a solution on one condition: that you do not receive too many requests.)

Buffer management scheme: clients interact with the server through an interface process, which manages 2 buffers (1 for requests, 1 for replies). The interface process answers to the well-known system name for the service. It never synchronizes with a specific process, but takes any incoming message. Server messages can be given priority over client messages.
The real service is carried out by a process that is anonymous to the clients => the scheme could be used to allow a number of server processes to perform the service, each taking work from the interface process.
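A minimal sketch of synchronous message passing, with threads standing in for processes: `send` returns only after a receiver has taken the message, so the channel keeps no message buffer and sender and receiver synchronize explicitly. `SyncChannel`, `send` and `receive` are hypothetical names built on `threading.Condition`.

```python
import threading


class SyncChannel:
    """Hypothetical unbuffered channel: send() blocks until a receiver has
    taken the message, so sender and receiver synchronize explicitly."""

    def __init__(self):
        self._cond = threading.Condition()
        self._send_lock = threading.Lock()  # one sender occupies the hand-over slot at a time
        self._slot = None
        self._full = False

    def send(self, msg):
        with self._send_lock:
            with self._cond:
                self._slot, self._full = msg, True
                self._cond.notify_all()                      # wake a waiting receiver
                self._cond.wait_for(lambda: not self._full)  # sender delayed until received

    def receive(self):
        with self._cond:
            self._cond.wait_for(lambda: self._full)  # receiver blocks until a sender arrives
            msg, self._slot, self._full = self._slot, None, False
            self._cond.notify_all()                  # release the blocked sender
            return msg
```

An interface process of the kind described above could, for instance, be a thread that repeatedly calls `receive` on the service's well-known channel and keeps its own request and reply queues; that is the buffering moved from the message service to the process level.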