Eurostat - typology of validation rules

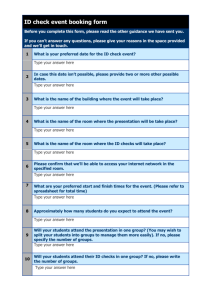


European Commission – Eurostat/B1, Eurostat/E1, Eurostat/E6

WORKING DOCUMENT – Pending further analysis and improvements

Based on deliverable 2.4, Contract No. 40107.2011.001-2011.567 ‘VIP on data validation general approach’

Exhaustive and detailed typology of validation rules – v 0.1306

September 2013

Project: ESS.VIP.BUS Common data validation policy. Document: Exhaustive and detailed typology of validation rules. Version: 0.1309.

Document Service Data

| Item | Value |
|---|---|
| Type of document | Deliverable |
| Reference | 2-4 - Exhaustive and detailed typology of validation rules_v01306.doc |
| Version | 0.1306 |
| Status | Draft |
| Created by | Angel SIMÓN |
| Date | 23.04.2013 |
| Distribution | European Commission Eurostat/E6, Eurostat/B1, Eurostat/E1 – For Internal Use Only |
| Reviewed by | Angel SIMÓN |
| Approved by | |
| Remark | Pending further analysis and improvements |

Document Change Record

| Version | Date | Change |
|---|---|---|
| 0.1304 | 23.04.2013 | Initial release based on deliverable from contractor AGILIS |
| 0.1305 | 30.05.2013 | Content reorganization and improvement |
| 0.1306 | 17.06.2013 | Content improvement following suggestions from Mr P. Diaz |
| 0.1309 | 11.09.2013 | Content improvements from suggestions proposed in the Workshop on ESS.VIP Validation on 10.09.2013 |

Contact Information: EUROSTAT, Ángel SIMÓN, Unit E-6: Transport statistics, BECH B4/334, Tel.: +352 4301 36285, Email: Angel.SIMON@ec.europa.eu

Table of contents

1. Executive summary
2. Introduction
3. Validation rules
4. Typology of validation rules
   - 4.1 File structure
     - 4.1.1 Filename check
     - 4.1.2 File type check
     - 4.1.3 Delimiters checks
     - 4.1.4 Format check
   - 4.2 Checks within datasets
     - 4.2.1 Type Check
     - 4.2.2 Length Check
     - 4.2.3 Presence Check
     - 4.2.4 Allowed character checks
     - 4.2.5 Uniqueness Check
     - 4.2.6 Range Check
   - 4.3 Checks inter-datasets
     - 4.3.1 Referential integrity
     - 4.3.2 Code List Check
     - 4.3.3 Cardinality checks
     - 4.3.4 Mirror checks
     - 4.3.5 Time series checks
     - 4.3.6 Revised data integrity Check
     - 4.3.7 Model – based Consistency Check
   - 4.4 Other checks
     - 4.4.1 Consistency checks
     - 4.4.2 Control Check
     - 4.4.3 Conditional Checks

1. Executive summary

This document presents an exhaustive typology of validation rules for statistical purposes. Twenty types of validation rules have been identified.
These types fall into the following groups:

| File structure | 1 file checks | >1 file checks | 1-n file checks |
|---|---|---|---|
| Filename | Type | Referential integrity | Consistency |
| File type | Length | Code list | Control |
| Delimiters | Presence | Cardinality | Conditional |
| Format | Allowed character | Mirror | |
| | Uniqueness | Time series | |
| | Range | Revised data integrity | |
| | | Model-based consistency | |

Table 1: Validation rule types by group

In general, a validation rule may be classified under one or several types, in some cases from different groups. From a practical point of view, this document can be used as a checklist for setting up a validation procedure in a statistical domain, helping to identify the validation rules to apply to datasets in order to maximise data quality.

Validation levels¹ study the validation process from various perspectives, including the complexity of the validation and the source of the data to be checked. The proposed typology is fully compatible with those validation levels and complements them, giving a better view of the validation rules in a statistical domain in line with one of the principles of the VIP on Validation: “be concrete, be useful”. The following table shows the relationship between the validation levels and the proposed validation typology.

¹ Validation levels are defined in the document “Definition of ‘validation levels’ and other related concepts” by the VIP on Validation in 2011 and revised during 2013.

2. Introduction

The aim of this document is to present, as exhaustively as possible, all the validation rules currently applied to the data received by Eurostat. The validation rules are grouped according to the source of the information they require: no records (file structure only), a single dataset, or several datasets.
Some validation types cannot be classified by source of information because, depending on the case, they can be applied within a dataset or across datasets. A single validation rule often covers more than one type of check, since some checks are implicit: for example, an “Allowed character” check may implicitly include a “Type” check.

The present list of validation types can serve as a checklist when preparing the validation rules for a domain. However, not every domain or dataset validation process needs all the types described: most validation processes will have a set of rules covering only some of them.

3. Validation rules

The validation rules can be divided into four groups:

a. Checks on the file structure: this group involves consistency and reasonability tests applied by the data manager before integration into the database system. Consistency tests verify that file naming conventions, data formats, field names and file structure are consistent with project conventions. Discrepancies are reported to the measurement investigator for remediation.

b. 1 file checks: this group is the first step in data analysis. Its validation tests involve testing measurement assumptions, comparing measurements and testing internal consistency.

c. >1 file checks: this group of data validation usually takes place after the data have been assembled in the database². Rules in this group need to access other files, including external sources of information. Time series analysis and mirror checks are two special types of checks involving more than one file.

d. 1-n file checks: all checks not falling into the previous groups. Depending on its practical implementation, a check of this type needs one or more files.

² Intra-dataset checks may also take place before the data are assembled in the database.
[Graph 1: Typology groups. Groups shown: Group 1: File structure; Group 2: Intra-dataset; Group 3: Inter-datasets; Group 4: Other checks. Elements shown: data structure, target dataset data, reference datasets data.]

When a validation fails, it may produce one of three types of error:

- Fatal error: the data are rejected;
- Warning: the data can be accepted, with some corrections or explanations from the data provider;
- Information: the data are accepted.

4. Typology of validation rules

4.1 File structure

File structure checks are performed before the data are analysed. Errors raised by rules in this category are usually “Fatal”, as they may cause IT systems to reject the files. By definition, all rules in this group correspond to level 0 validation rules. This category comprises the following types:

- Filename checks
- File type checks
- Delimiters checks
- Format checks

4.1.1 Filename check

Checks that the filename is consistent with the file naming conventions agreed for each domain. This validation implicitly also checks that the filename length is consistent with those conventions. These rules may have a technical origin, e.g. operating-system limits on filename length.

Example (Road freight transport statistics):

| Table | File naming convention | File name / File name length |
|---|---|---|
| A1 (Vehicle related data) | Country code (2 characters) + Year (2 digits) + Quarter code (2 characters) + ‘ROAD’ + Table name | IT07Q2ROADA1.dat / 16 |

4.1.2 File type check

Checks the type of the data file. This validation is important because both sender and receiver rely on the compatibility and integrity of the data file; e.g. a system may require input data in CSV format.
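The filename and file type checks are simple enough to automate directly. The sketch below is a minimal illustration, assuming the road freight convention of 4.1.1 (two-letter country code, two-digit year, quarter code "Q1" to "Q4", the literal "ROAD" and a table name such as "A1") and assuming that, as in the road freight example for the file type check, only DAT and ZIP files are accepted. The pattern, the constants and the function names are hypothetical.

```python
import os
import re

# Hypothetical pattern for the road freight naming convention (4.1.1):
# country code (2 letters) + year (2 digits) + quarter code (Q1-Q4)
# + literal "ROAD" + table name (assumed: one letter followed by one digit).
FILENAME_PATTERN = re.compile(r"[A-Z]{2}\d{2}Q[1-4]ROAD[A-Z]\d\.(dat|zip)")
EXPECTED_LENGTH = 16  # length of e.g. "IT07Q2ROADA1.dat", extension included

# File types assumed to be accepted (4.1.2): generic data files or ZIP archives.
ALLOWED_EXTENSIONS = {".dat", ".zip"}


def check_filename(name: str) -> list[str]:
    """Return the filename check failures (an empty list means the name passes)."""
    errors = []
    if len(name) != EXPECTED_LENGTH:
        errors.append(f"length {len(name)}, expected {EXPECTED_LENGTH}")
    if FILENAME_PATTERN.fullmatch(name) is None:
        errors.append("does not follow the naming convention")
    return errors


def check_file_type(name: str) -> bool:
    """Extension-based file type check; a stricter check would inspect the content."""
    _, extension = os.path.splitext(name)
    return extension.lower() in ALLOWED_EXTENSIONS


print(check_filename("IT07Q2ROADA1.dat"))    # []
print(check_filename("IT2007Q2ROADA1.dat"))  # both the length and the pattern checks fail
print(check_file_type("IT07Q2ROADA1.csv"))   # False
```

Checking only the extension is the cheapest option; it trusts the sender's naming and would need to be complemented by a content check where that trust is not warranted.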
Example (Road freight transport statistics):

| Table | File | File format |
|---|---|---|
| A1 (Vehicle related data) | All data files referring to table A1 | DAT (a generic "data" file) or ZIP (used for data file compression) |

4.1.3 Delimiters checks

Checks that only the expected characters are used as field or record delimiters. For example, a CSV file may only allow a comma (or semicolon) as field separator.

Example (Farm structure survey statistics):

| Table | Target | Delimiter check |
|---|---|---|
| Any table | Any data field | A plus sign ‘+’ is used as field separator |
| Any table | Any data record | A plus sign ‘+’ followed by a line feed character is used as record separator |

4.1.4 Format check

Checks that the data follow a specified format (template), e.g. each record must contain ten fields. In the example below, a check on field headings is classified under this type, because such checks can easily be wrongly classified as inter-dataset checks.

Example (Rail transport statistics):

| Table | Target | Format check |
|---|---|---|
| A1 – A9 (Annual statistics on goods transport – detailed reporting) | Any data file corresponding to tables A1 – A9 | The first record of each file must contain the correct field names, in the specified order |
| A1 – A9 | Any data record | Each record must include 18 fields |

4.2 Checks within the file

In this group of validation rules, the data in the file are analysed for internal coherence. The type of error (Fatal error, Warning, Information) should be decided case by case, but these checks usually carry less weight than file structure checks. The rules in this group fall under validation levels 0 and 1.
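Since the severity of a within-file check is decided case by case, a validation procedure can store it as a property of each individual rule rather than of the rule type. A minimal sketch, assuming rules are Python predicates over a record held as a dictionary; the `Severity` values mirror the three error types of Section 3, while `Rule`, `failures`, `outcome` and the two example rules (including the field name "Age") are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Severity(Enum):
    """The three error types listed in Section 3."""
    FATAL = "Fatal error"        # the data are rejected
    WARNING = "Warning"          # accepted with corrections or explanations
    INFORMATION = "Information"  # the data are accepted


@dataclass
class Rule:
    name: str
    severity: Severity             # chosen case by case for each rule
    check: Callable[[dict], bool]  # returns True when the record passes


def failures(record: dict, rules: list[Rule]) -> list[tuple[str, Severity]]:
    """Return (rule name, severity) for every rule the record fails."""
    return [(rule.name, rule.severity) for rule in rules if not rule.check(record)]


def outcome(failed: list[tuple[str, Severity]]) -> str:
    """Derive an overall outcome from the most severe failure."""
    severities = {severity for _, severity in failed}
    if Severity.FATAL in severities:
        return "rejected"
    if Severity.WARNING in severities:
        return "accepted pending corrections or explanations"
    return "accepted"


# Hypothetical rules: a length check treated as fatal, a range check as a warning.
rules = [
    Rule("Year has 4 digits", Severity.FATAL, lambda r: len(r["Year"]) == 4),
    Rule("Age of vehicle below 30", Severity.WARNING, lambda r: int(r["Age"]) < 30),
]
failed = failures({"Year": "2013", "Age": "35"}, rules)
print(failed)           # [('Age of vehicle below 30', <Severity.WARNING: 'Warning'>)]
print(outcome(failed))  # accepted pending corrections or explanations
```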
The following types of validation rules belong to this group:

- Type checks
- Length checks
- Presence checks
- Allowed character checks
- Uniqueness checks
- Range checks

4.2.1 Type Check

A type check ensures that each field contains data of the correct type. If a field's type is set to number, only numbers (e.g. 10, 12, 14) are accepted, and text such as ‘ten’ or ‘twelve’ is rejected.

Example (Social protection statistics):

| Table | Field | Valid type |
|---|---|---|
| ESSPROS_QI (Qualitative information on social protection expenditure by social protection scheme) | Updated_Info | Date |
| ESSPROS_QI | Organisation | Text |
| ESSPROS_PB (Number of pension beneficiaries) | 1142111 (Means-tested survivors’ pension beneficiaries) | Number |

4.2.2 Length Check

Validation rules of this type check that the data in a field (dimension, measure or attribute) contain the specified number of characters.

Example (Road freight transport statistics):

| Table | Field | Valid length (characters/digits) |
|---|---|---|
| A1 | Year | 4 |
| A1 | Quarter | 2 |

4.2.3 Presence Check

Checks that important data are actually present and have not been omitted. These checks do not ensure that the fields were filled in correctly.

Example (Road freight transport statistics): some fields in road freight transport data files, for instance the survey year, are mandatory.

| Table | Field | Mandatory presence |
|---|---|---|
| A1 | Year | Yes |
| A1 | Quarter | Yes |
| A1 | QuestN (Questionnaire number) | Yes |

4.2.4 Allowed character checks

These checks ascertain that only expected characters are present in a field. For example, data attributes (flags) may contain “p”, “e” or “i”, but no other arbitrary character. Similarly, “periodicity” may be restricted to values such as “Q”, “Y”, “M” or “5Y”.
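Because one rule often bundles several of these checks (an allowed character check implying a type check, for example), they are convenient to implement together. A minimal sketch, assuming records arrive as dictionaries of raw text values; the field specifications in `SPECS` are hypothetical, loosely modelled on the road freight fields (Year, Quarter, QuestN) and on the flag values “p”, “e” and “i”.

```python
# Hypothetical field specifications, loosely based on the examples of 4.2.1-4.2.4.
SPECS = {
    "Year":    {"type": int, "length": 4, "mandatory": True},
    "Quarter": {"type": str, "length": 2, "mandatory": True},
    "QuestN":  {"type": int, "mandatory": True},
    "Flag":    {"type": str, "allowed": set("pei"), "mandatory": False},
}


def check_record(record: dict[str, str]) -> list[str]:
    """Apply presence, type, length and allowed character checks to one record."""
    errors = []
    for field, spec in SPECS.items():
        value = record.get(field, "")
        # Presence check: mandatory fields must not be empty.
        if value == "":
            if spec["mandatory"]:
                errors.append(f"{field}: missing")
            continue
        # Type check: the raw text must convert to the declared type.
        try:
            spec["type"](value)
        except ValueError:
            errors.append(f"{field}: not of type {spec['type'].__name__}")
        # Length check: exact number of characters.
        if "length" in spec and len(value) != spec["length"]:
            errors.append(f"{field}: length {len(value)}, expected {spec['length']}")
        # Allowed character check: every character must belong to the allowed set.
        if "allowed" in spec and not set(value) <= spec["allowed"]:
            errors.append(f"{field}: unexpected characters")
    return errors


print(check_record({"Year": "2013", "Quarter": "Q2", "QuestN": "17", "Flag": "p"}))  # []
print(check_record({"Year": "13", "Quarter": "Q2", "QuestN": "ten", "Flag": "x"}))
# ['Year: length 2, expected 4', 'QuestN: not of type int', 'Flag: unexpected characters']
```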
Example (Farm structure survey statistics):

| Table | Field | Valid character check |
|---|---|---|
| Any table | Any numerical field | A full stop, not a comma, is used as decimal separator |
| Any table | Any data field | The character ‘:’ is used for non-available data |

4.2.5 Uniqueness Check

Uniqueness checks are integrity rules that check that each value in specific fields is unique (there are no duplicates) throughout the dataset. They can also be applied to a combination of several fields (e.g. Country, Year, Type of transport).

Example (Road freight transport statistics):

| Table | Table key (combination of fields) | Unique |
|---|---|---|
| A1 | Rcount (Reporting country) + Year + Quarter + QuestN | Yes (each combination of reporting country, year, quarter and questionnaire number must be unique in the table) |

4.2.6 Range Check

Checks that the data lie within a specified range of values, e.g. the month of a person's date of birth should lie between 1 and 12. A range may also have a single limit, upper or lower, e.g. data should not be greater than 2 (<= 2). The table below shows fields to which range checks are applied:

Example (Road freight transport statistics):

| Table | Field | Valid range (minimum, maximum) |
|---|---|---|
| A1 (Vehicle-related data) | A1.3 (Age of vehicle) | 0 < Value < 30 |
| A1 | Year | > 1998 |

4.3 Checks inter-files

Inter-file checks may require more than one file. In some cases, one file is verified against values contained in other tables of codes or values; in other cases, the files are linked and their information must remain coherent. In both cases, the dataset being evaluated is called the “target dataset”, and the datasets or files it is evaluated against are called the “reference datasets”.
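Many target-versus-reference checks reduce to a set comparison: collect the key combinations of the target dataset and look for those absent from the reference dataset, which is essentially the referential integrity check of 4.3.1. A minimal sketch, assuming both datasets are lists of dictionaries; the function names, field names ("Rcount", "Year", "TransportType") and records are hypothetical, modelled on the inland waterways example (A1 checked against B1).

```python
from typing import Iterable, Sequence


def key_of(record: dict, fields: Sequence[str]) -> tuple:
    """Build the (possibly composite) key of a record."""
    return tuple(record[field] for field in fields)


def missing_in_reference(target: Iterable[dict], reference: Iterable[dict],
                         fields: Sequence[str]) -> set[tuple]:
    """Keys of the target dataset that do not occur in the reference dataset."""
    reference_keys = {key_of(record, fields) for record in reference}
    return {key_of(record, fields) for record in target} - reference_keys


# Hypothetical records and field names (target A1, reference B1).
KEY = ("Rcount", "Year", "TransportType")
a1 = [
    {"Rcount": "NL", "Year": 2012, "TransportType": "national"},
    {"Rcount": "DE", "Year": 2012, "TransportType": "international"},
]
b1 = [{"Rcount": "NL", "Year": 2012, "TransportType": "national"}]
print(missing_in_reference(a1, b1, KEY))  # {('DE', 2012, 'international')}
```

Building the reference keys as a set keeps the check linear in the size of both datasets; both files must, as noted above, be available at the same time.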
Rules in this group usually fall under validation levels 2 and 3, but in some cases they can cover validation levels 4 or even 5. There are seven types of validation rules in this category:

- Referential integrity
- Code list check
- Cardinality checks
- Mirror checks
- Time series checks
- Revised data integrity check
- Model-based consistency check

4.3.1 Referential integrity

Referential integrity ensures that all the values in a field or group of fields (usually the values of one or more dimensions) are contained in another dataset. It is used to keep virtual links between files or datasets with related data. For referential integrity checks, both files, the target and the referenced one, must be available. A special case of referential integrity is the “Code list check”, where the referenced dataset acts as a dictionary table for the values in the checked file.

Example: In inland waterways transport statistics, data files A1, B1 and C1 should contain data referring to existing information in tables D1 and D2.

| Table 1 | Table 2 | Fields in Table 1 that should contain the same values as those in Table 2 |
|---|---|---|
| A1 | B1 | Reporting country + Year + Type of transport |
| A1 | C1 | Reporting country + Year + Type of transport |
| B1 | D1 | Reporting country + Year + Type of transport |
| C1 | D2 | Reporting country + Year |

4.3.2 Code List Check

A code list check is a special case of referential integrity: its validation rules check that all the values of a field are stored in another file or dataset that acts as a data dictionary. These checks are very useful for keeping the values assigned to a field coherent. They require a maintained, up-to-date reference table of values.
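A code list check is a membership test of each value against the dictionary. A minimal sketch, assuming the code list fits in memory as a set; `COUNTRY_CODES` is a small hypothetical excerpt of ISO 3166-1 alpha-2 codes, and the field name "origin" and the records are made up for illustration.

```python
# Hypothetical excerpt of a reference code list (ISO 3166-1 alpha-2 country codes);
# a real check would load the complete, maintained dictionary.
COUNTRY_CODES = {"BE", "DE", "FR", "IT", "LU", "NL"}


def code_list_errors(records: list[dict], field: str,
                     code_list: set[str]) -> list[tuple[int, object]]:
    """Return (row index, value) for each value of `field` not found in the code list.

    A missing value (None) is reported as well, since it is not in the list."""
    return [
        (row, record.get(field))
        for row, record in enumerate(records)
        if record.get(field) not in code_list
    ]


declarations = [{"origin": "DE"}, {"origin": "XX"}, {"origin": "FR"}, {}]
print(code_list_errors(declarations, "origin", COUNTRY_CODES))  # [(1, 'XX'), (3, None)]
```

Keeping the code list as a set makes each lookup an average constant-time operation, so the cost of the check grows linearly with the number of records rather than with the size of the dictionary.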
Example (External trade statistics):

| Dataset | Field | Valid list of entries |
|---|---|---|
| INTRASTAT | Country of origin | Valid ISO country codes |
| INTRASTAT | Commodity code | Combined Nomenclature for arrivals and dispatches (8-digit codes) |

4.3.3 Cardinality checks

Checks that each record has a valid number of related records. In some cases the cardinality is a fixed number, while in other cases it is given in one of the fields of the record being analysed. The related records can be in the same dataset or in another one. For example, in a hypothetical census with household data and personal data, if a household record states that three persons live in the household, there must be three associated records in the personal data for that household (cardinality = 3).

4.3.4 Mirror checks

These checks compare the consistency between two partner declarations.

A classic example of mirror checks comes from INTRASTAT. Member States (MSs) report monthly the arrivals of goods from other MSs and the dispatches of goods to other MSs. Therefore, for each combination of [Reference month, Type of goods, Dispatching MS, Receiving MS] there are two data items: the dispatch declared by the dispatching country as reporting country, and the arrival declared by the receiving country as reporting country. In principle, the statistical values and quantities in the two items must be equal. In practice this is not always the case, because of different reporting thresholds in different MSs and different recording dates for shipments that straddle two months. The principle nevertheless holds and is used in actual validation.
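The INTRASTAT principle above can be sketched as a comparison of two dictionaries keyed by the same flow. A minimal sketch, assuming each declaration is keyed by (reference month, type of goods, dispatching MS, receiving MS); the relative tolerance `rel_tol=0.05` is a hypothetical stand-in for the threshold and timing differences mentioned above, and all names and values are illustrative.

```python
# (reference month, type of goods, dispatching MS, receiving MS)
FlowKey = tuple[str, str, str, str]


def mirror_discrepancies(dispatches: dict[FlowKey, float], arrivals: dict[FlowKey, float],
                         rel_tol: float = 0.05) -> dict[FlowKey, str]:
    """Compare the two partner declarations of each flow.

    Flags flows declared by one partner only, and flows whose values differ by
    more than rel_tol times the larger of the two values."""
    issues = {}
    for key in dispatches.keys() | arrivals.keys():
        dispatched, arrived = dispatches.get(key), arrivals.get(key)
        if dispatched is None or arrived is None:
            issues[key] = "declared by one partner only"
        elif abs(dispatched - arrived) > rel_tol * max(abs(dispatched), abs(arrived)):
            issues[key] = f"dispatch {dispatched} vs arrival {arrived}"
    return issues


# Hypothetical declarations for January 2013.
dispatched_flows = {("2013-01", "goods A", "DE", "FR"): 100.0,
                    ("2013-01", "goods B", "DE", "FR"): 50.0}
arrived_flows = {("2013-01", "goods A", "DE", "FR"): 98.0,
                 ("2013-01", "goods B", "DE", "FR"): 40.0,
                 ("2013-01", "goods A", "IT", "FR"): 10.0}
result = mirror_discrepancies(dispatched_flows, arrived_flows)
for key, issue in sorted(result.items()):
    print(key, issue)
```

Here the DE-to-FR flow of goods A passes (a 2% difference within the 5% tolerance), while the goods B flow and the flow declared only by France are flagged.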
Example 1 (Road freight transport statistics):

| Table | Field | Mirror field 1 | Mirror field 2 | Mirror check |
|---|---|---|---|---|
| A2 | A2.2 (Weight of goods) | A2.3 (Place of loading (for a laden journey): either country code or full region code with country) | A2.4 (Place of unloading (for a laden journey): either country code or full region code with country) | A2.2[A2.3] = A2.2[A2.4] + Round_Err (the weight of goods loaded in an origin region should be equal to the sum of the weight of goods in all records having the same region as destination, plus a rounding error) |

Example 2 (Air transport statistics):

| Table | Field | Mirror field 1 | Mirror field 2 | Mirror check |
|---|---|---|---|---|
| A1 | Passengers | Total passengers on board at departure (reporting country) | Total passengers on board at arrival (partner country) | Passengers[Mirror field 1] = Passengers[Mirror field 2] + Deviation |

4.3.5 Time series checks

Time series checks detect suspicious changes in the data over time. They can be associated with outlier detection and, in some cases, can take the seasonality of the data into account.
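Most of the examples that follow compare the current value with an earlier one through the ratio X(t)/X(t-1) and accept it when the ratio falls in a valid range. A minimal sketch; the function name is hypothetical, the bounds (0.82, 1.22) are taken from the structural business statistics example for V11110, and treating the bounds as inclusive is an assumption. The `lag` parameter is also an assumption, shown as one way to allow for seasonality.

```python
def ratio_outliers(series: list[float], low: float, high: float, lag: int = 1) -> list[int]:
    """Return the indices t where series[t] / series[t - lag] falls outside [low, high].

    lag=1 compares consecutive periods, as in the X(t)/X(t-1) rules; a larger lag
    (e.g. 4 for quarterly data) compares with the same period one year earlier.
    A zero base value is flagged because the ratio is undefined."""
    flagged = []
    for t in range(lag, len(series)):
        base = series[t - lag]
        if base == 0 or not (low <= series[t] / base <= high):
            flagged.append(t)
    return flagged


print(ratio_outliers([100, 110, 150, 120], 0.82, 1.22))  # [2, 3]
```

The rise from 110 to 150 (ratio about 1.36) and the drop from 150 to 120 (ratio 0.8) are both flagged; note that a single outlier typically triggers two consecutive flags with `lag=1`.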
Example 1 (Maritime transport statistics):

| Table | Field | Indicator | Valid range (minimum, maximum) |
|---|---|---|---|
| A1 | Gross weight of goods | A1(t) / A1(t-1) | (Low limit, High limit) |

Example 2 (Structural business statistics):

| Table | Variable | Valid range (minimum, maximum) |
|---|---|---|
| Series 1A | V11110(t) / V11110(t-1) | (0.82, 1.22) |

Example 3 (External trade):

| Table | Variable | Valid range (minimum, maximum)³ | Model specifying the valid range |
|---|---|---|---|
| INTRASTAT | Invoice value | (Low limit, High limit) | MAD |
| INTRASTAT | Total value declared | (Low limit, High limit) | MAD |

Example 4 (National accounts): the growth rates of GDP at market prices (first rule) and of final consumption expenditure (second rule) should lie within the usual 2-sigma limits around the average growth rate.

| Table | Variable | Valid range |
|---|---|---|
| V101.EE.B1GM.CLV00MF.QNW | B1GM(t) / B1GM(t-1) | Average growth rate ± 2σ |
| V102.EE.P3.CLV00MF.QSW | P3(t) / P3(t-1) | Average growth rate ± 2σ |

4.3.6 Revised data integrity Check

The revised data integrity check applies to revised datasets. This validation compares the revised data with the initial data and, if necessary⁴, investigates the sources of significant discrepancies. The acceptable levels of discrepancy are either ad hoc or model-specified.

Example (National accounts statistics): revisions of gross domestic product at market prices are checked to stay below a threshold of 0.5%.

| Table 1 (initial data) | Table 2 (revised data) | Condition |
|---|---|---|
| V.EE.B1GM.CLV00MF.QSW | V101.EE.B1GM.CLV00MF.QSW | (ValueT2 – ValueT1) <= 0.005 * ValueT1 |

4.3.7 Statistical model-based Consistency Check

These rules compare quantitative data with limits derived from other data of the same reference period, e.g. limits set at a number of standard deviations around the mean, or limits derived from a regression model linking two variables. Models can also derive limits from historical data, against which current data are then compared.
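The two kinds of model limits mentioned in this section's examples, 2-sigma limits around the mean (national accounts) and limits based on the median absolute deviation (external trade), can be sketched with the standard `statistics` module. A minimal sketch; the function names, the default multipliers `k` and the data are hypothetical, and the regression-based variant is not shown.

```python
import statistics


def mean_sd_limits(values: list[float], k: float = 2.0) -> tuple[float, float]:
    """Limits at k standard deviations around the mean (k=2 gives 2-sigma limits)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation, needs at least 2 values
    return mean - k * sd, mean + k * sd


def median_mad_limits(values: list[float], k: float = 3.0) -> tuple[float, float]:
    """Limits at k median absolute deviations (MAD) around the median."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    return median - k * mad, median + k * mad


def outside(values: list[float], limits: tuple[float, float]) -> list[float]:
    """Values falling outside the (inclusive) limits."""
    low, high = limits
    return [v for v in values if not (low <= v <= high)]


values = [10, 11, 9, 10, 12, 10, 50]  # hypothetical data for one reference period
print(median_mad_limits(values))                   # (7.0, 13.0)
print(outside(values, mean_sd_limits(values)))     # [50]
print(outside(values, median_mad_limits(values)))  # [50]
```

Both variants flag 50 here, but the MAD limits are much tighter: the median and the MAD are barely affected by the outlier, whereas the outlier itself inflates the standard deviation (about 15 here) and widens the 2-sigma limits.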
Example 3 of the time series checks (section 4.3.5) can be seen as a mixed rule of both types, time series and statistical model, since it uses the median absolute deviation (MAD) to determine the thresholds within which values are acceptable over a defined period.

³ The lower and upper limits of the valid range are derived from the median absolute deviation (MAD) over a given period, for outlier detection.

⁴ For some datasets, revision is a normal process, so detecting revisions is a 'processing' step rather than a pure validation step. However, in order to validate the data, the revised figures should be detected and tested against some thresholds.

4.4 Checks intra or inter files

Some types of validation rules use one or more files, depending on their practical implementation and on the nature of the validation performed. In these cases, two different rules, one checking a single file and one checking several files, can belong to the same type. The rules in this group may cover all validation levels except level 0, since they are not meant to check file formats. They have been grouped as:

- Consistency checks
- Control checks
- Conditional checks

4.4.1 Consistency checks

Consistency checks are a wide range of validation rules ensuring the coherence of the fields in a dataset. They usually form a large set of rules that analyse the data for coherence and, in some cases, for the plausibility of the values in the file. They can compare fields with each other, compare data with a predefined range, or compare values with parts of the filename. The values checked for consistency may also be located in different files or datasets; in that case they are inter-dataset checks.
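Two of the comparisons listed above, a field against part of the filename and a ratio between two variables against fixed bounds, can be sketched as follows. A minimal sketch, assuming the road freight naming convention of 4.1.1 (e.g. "IT07Q2ROADA1.dat", with a two-digit year and a quarter code such as "Q2") and assuming the Quarter field holds the same two-character code; the function names are hypothetical, and the bounds used in the usage line (0.85, 1.15) follow the structural business statistics rule for V12150/V12120.

```python
def filename_consistency(filename: str, record: dict) -> list[str]:
    """Compare the Year and Quarter fields of a record with the filename.

    Assumes characters 3-4 of the filename hold a two-digit year and
    characters 5-6 the quarter code, as in "IT07Q2ROADA1.dat"."""
    file_year, file_quarter = filename[2:4], filename[4:6]
    errors = []
    if str(record["Year"])[-2:] != file_year:
        errors.append("Year differs from filename year")
    if record["Quarter"] != file_quarter:
        errors.append("Quarter differs from filename quarter")
    return errors


def ratio_within(numerator: float, denominator: float, low: float, high: float) -> bool:
    """Plausibility check of the form low < numerator / denominator < high."""
    return denominator != 0 and low < numerator / denominator < high


print(filename_consistency("IT07Q2ROADA1.dat", {"Year": 2007, "Quarter": "Q2"}))  # []
print(filename_consistency("IT07Q2ROADA1.dat", {"Year": 2008, "Quarter": "Q2"}))
# ['Year differs from filename year']
print(ratio_within(105.0, 100.0, 0.85, 1.15))  # True
```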
This is why consistency checks can be classified as intra-dataset as well as inter-dataset checks.

Example 1 (Road freight transport statistics):

| Table | Field 1 | Field 2 | Consistency rule |
|---|---|---|---|
| A1 | Year | Filename year | Field1 = Field2 |
| A1 | Quarter | Filename quarter | Field1 = Field2 |

Example 2 (Structural business statistics):

| Table | Variables | Consistency rule |
|---|---|---|
| Series 1A | V12150/V12120 | 0.85 < V12150/V12120 < 1.15 |
| Series 2A | V13310/V16130 | 0.85 < V13310/V16130 < 1.18 |

Example 3 (Rail transport statistics):

| Tables | Variables | Consistency rule |
|---|---|---|
| C1, E2 | C1-11 – E2-12 | 0.05 <= C1-11 – E2-12 <= 0.2 |
| C3, E2 | C3-12 – E2-09 | 0.05 <= C3-12 – E2-09 <= 0.2 |

4.4.2 Control Check

In some domains, control fields link values at record or dataset level through an implicit formula that must hold, e.g. total population must equal male population plus female population. Such a field is called a control total (key figure) field. Control totals are used to verify the integrity of the data content.
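A control total check compares a total field with the sum of its component fields. A minimal sketch; the function name and the record are hypothetical, and the `abs_tol` parameter for rounding differences is an assumption (with the default 0.0 the check demands exact equality).

```python
import math


def control_total_ok(record: dict, total: str, components: list[str],
                     abs_tol: float = 0.0) -> bool:
    """Check that record[total] equals the sum of the component fields.

    abs_tol allows for rounding differences; 0.0 demands exact equality."""
    component_sum = sum(record[field] for field in components)
    return math.isclose(record[total], component_sum, rel_tol=0.0, abs_tol=abs_tol)


# Hypothetical record following the pattern Total = Women + Men.
beneficiaries = {"Total": 100, "Women": 55, "Men": 44}
print(control_total_ok(beneficiaries, "Total", ["Women", "Men"]))             # False
print(control_total_ok(beneficiaries, "Total", ["Women", "Men"], abs_tol=1))  # True
```

The same function covers multi-component rules such as S1000000 = S1100000 + S1200000 + S1400000 by passing the three component field names.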
Example 1 (Social protection statistics):

| Table | Variables | Control rule |
|---|---|---|
| ESSPROS_PB (Pension beneficiaries) | Total, Women, Men | Total = Women + Men (in all records) |
| ESSPROS_QD (Quantitative data) | S1000000 (Total social protection expenditure), S1100000 (Social protection benefits), S1200000 (Administration costs), S1400000 (Other expenditure) | S1000000 = S1100000 + S1200000 + S1400000 |

Example 2 (Migration statistics):

| Table | Key fields | Control check |
|---|---|---|
| IMM1CTZ | Sex (Total, Male, Female) | Total = Male + Female |
| IMM7CTB | Citizenship (TOTAL, NATIONALS, NON-NATIONALS, UNK_GR) | TOTAL = NATIONALS + NON-NATIONALS + UNK_GR |
| IMM6CTZ | Country of birth (TOTAL, NATIVE-BORN, FOREIGN-BORN, UNK_GR) | TOTAL = NATIVE-BORN + FOREIGN-BORN + UNK_GR |

4.4.3 Conditional Checks

Conditional checks perform different checks depending on whether a pre-specified condition evaluates to true or false.

Example (Road freight transport statistics):

| Table | Condition | Conditional check |
|---|---|---|
| A2 | A1.2 like ‘3XX’ | A1.5 <= 0.85 * A1.4 |
| A2 | A2.1 = 1 | A2.2 <= A1.5 |
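Conditional checks can be expressed as (condition, check) pairs, where the check is evaluated only for records that satisfy the condition. A minimal sketch of the two road freight rules above; it assumes that ‘3XX’ means “3 followed by any two characters” and that each record already carries both the A1 (vehicle) and A2 fields it needs. The rule list, the function name and the records are hypothetical.

```python
import re
from typing import Callable

# Each rule: (description, condition, check); the check applies only when the condition holds.
ConditionalRule = tuple[str, Callable[[dict], bool], Callable[[dict], bool]]

RULES: list[ConditionalRule] = [
    ("A1.2 like '3XX' => A1.5 <= 0.85 * A1.4",
     lambda r: re.fullmatch(r"3..", str(r["A1.2"])) is not None,  # assumes X = any character
     lambda r: r["A1.5"] <= 0.85 * r["A1.4"]),
    ("A2.1 = 1 => A2.2 <= A1.5",
     lambda r: r["A2.1"] == 1,
     lambda r: r["A2.2"] <= r["A1.5"]),
]


def failed_conditional_rules(record: dict) -> list[str]:
    """Descriptions of the rules whose condition holds but whose check fails."""
    return [desc for desc, condition, check in RULES
            if condition(record) and not check(record)]


print(failed_conditional_rules(
    {"A1.2": "312", "A1.4": 100.0, "A1.5": 80.0, "A2.1": 1, "A2.2": 95.0}))
# ['A2.1 = 1 => A2.2 <= A1.5']
```

Both conditions hold for this record; the first check passes (80 <= 85) while the second fails (95 > 80), so only the second rule is reported.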