Slide

advertisement

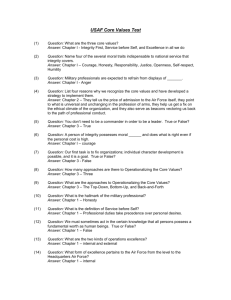

Convex Optimization: Part 1 of Chapter 7

Discussion

Presenter: Brian Quanz

A KTEC Center of Excellence

1

About today’s discussion…

• Chapter 7 – no separate discussion of

convex optimization

• Discusses with SVM problems

• Instead:

• Today: Discuss convex optimization

• Next Week: Discuss some specific convex optimization problems

(from text), e.g. SVMs

A KTEC Center of Excellence

2

About today’s discussion…

• Mostly follow alternate text:

• Convex Optimization, Stephen Boyd and Lieven Vandenberghe

– Borrowed material from book and related course notes

– Some figures and equations shown here

• Available online: http://www.stanford.edu/~boyd/cvxbook/

• Nice course lecture videos available from Stephen Boyd online:

http://www.stanford.edu/class/ee364a/

• Corresponding convex optimization tool (discuss later) - CVX:

http://www.stanford.edu/~boyd/cvx/

A KTEC Center of Excellence

3

Overview

Why convex? What is convex?

Key examples of linear and quadratic programming

Key mathematical ideas to discuss:

->Lagrange Duality

->KKT conditions

Brief concept of interior point methods

CVX – convex opt. made easy

A KTEC Center of Excellence

4

Mathematical Optimization

• All learning is some optimization problem

-> Stick to canonical form

• x = (x1, x2, …, xp ) – opt. variables ; x*

• f0 : Rp -> R – objective function

• fi : Rp -> R – constraint function

A KTEC Center of Excellence

5

Optimization Example

• Well familiar with: regularized regression

• Least squares

• Add some constraints, ridge, lasso

A KTEC Center of Excellence

6

Why convex optimization?

• Can’t solve most OPs

• E.g. NP Hard, even high polynomial time too slow

• Convex OPs

• (Generally) No analytic solution

• Efficient algorithms to find (global) solution

• Interior point methods (basically Iterated Newton) can be used:

– ~[10-100]*max{p3 , p2m, F} ; F cost eval. obj. and constr. f

• At worst solve with general IP methods (CVX), faster specialized

A KTEC Center of Excellence

7

What is Convex

Optimization?

• OP with convex objective and constraint

functions

• f0 , … , fm are convex = convex OP that has

an efficient solution!

A KTEC Center of Excellence

8

Convex Function

• Definition: the weighted mean of function

evaluated at any two points is greater than

or equal to the function evaluated at the

weighted mean of the two points

A KTEC Center of Excellence

9

Convex Function

• What does definition mean?

• Pick any two points x, y and evaluate along the function,

f(x), f(y)

• Draw the line passing through the two points f(x) and

f(y)

• Convex if function evaluated on any point along the line

between x and y is below the line between f(x) and f(y)

A KTEC Center of Excellence

10

Convex Function

A KTEC Center of Excellence

11

Convex Function

Convex!

A KTEC Center of Excellence

12

Convex Function

Not Convex!!!

A KTEC Center of Excellence

13

Convex Function

• Easy to see why convexity allows for

efficient solution

• Just “slide” down the objective function as

far as possible and will reach a minimum

A KTEC Center of Excellence

14

Local Optima is Global (simple proof)

A KTEC Center of Excellence

15

Convex vs. Non-convex Ex.

Affine – border

case of convexity

• Convex, min. easy to find

A KTEC Center of Excellence

16

Convex vs. Non-convex Ex.

• Non-convex, easy to get stuck in a local min.

• Can’t rely on only local search techniques

A KTEC Center of Excellence

17

Non-convex

• Some non-convex problems highly multi-modal,

or NP hard

• Could be forced to search all solutions, or hope

stochastic search is successful

• Cannot guarantee best solution, inefficient

• Harder to make performance guarantees with

approximate solutions

A KTEC Center of Excellence

18

Determine/Prove Convexity

• Can use definition (prove holds) to prove

• If function restricted to any line is convex, function is convex

• If 2X differentiable, show hessian >= 0

• Often easier to:

• Convert to a known convex OP

– E.g. QP, LP, SOCP, SDP, often of a more general form

• Combine known convex functions (building blocks) using

operations that preserve convexity

– Similar idea to building kernels

A KTEC Center of Excellence

19

Some common convex OPs

• Of particular interest for this book and

chapter:

• linear programming (LP) and quadratic programming (QP)

• LP: affine objective function, affine constraints

-e.g. LP SVM, portfolio management

A KTEC Center of Excellence

20

LP Visualization

Note:

constraints

form

feasible set

-for LP,

polyhedra

A KTEC Center of Excellence

21

Quadratic Program

• QP: Quadratic objective, affine constraints

• LP is special case

• Many SVM problems result in QP, regression

• If constraint functions quadratic, then Quadratically

Constrained Quadratic Program (QCQP)

A KTEC Center of Excellence

22

QP Visualization

A KTEC Center of Excellence

23

Second Order Cone Program

• Ai = 0 - results in LP

• ci = 0 - results in QCQP

• Constraint requires the affine functions

to lie in 2nd order cone

A KTEC Center of Excellence

24

Second Order Cone (Boundary) in R3

A KTEC Center of Excellence

25

Semidefinite Programming

• Linear matrix inequality (LMI) constraints

• Many problems can be expressed using

LMIs

• LP and SOCP

A KTEC Center of Excellence

26

Semidefinite Programming

A KTEC Center of Excellence

27

Building Convex Functions

• From simple convex functions to complex:

some operations that preserve complexity

• Nonnegative weighted sum

• Composition with affine function

• Pointwise maximum and supremum

• Composition

• Minimization

• Perspective ( g(x,t) = tf(x/t) )

A KTEC Center of Excellence

28

Verifying Convexity Remarks

• For more detail and expansion, consult the

referenced text, Convex Optimization

• Geometric Programs also convex, can be

handled with a series of SDPs (skipped details

here)

• CVX converts the problem either to SOCP or

SDM (or a series of) and uses efficient solver

A KTEC Center of Excellence

29

Lagrangian

• Standard form:

• Lagrangian L:

• Lambda, nu, Lagrange multipliers (dual variables)

A KTEC Center of Excellence

30

Lagrange Dual Function

• Lagrange Dual found by minimizing L

with respect to primal variables

• Often can take gradient of L w.r.t. primal var.’s and set = 0

(SVM)

A KTEC Center of Excellence

31

Lagrange Dual Function

• Note: Lagrange dual function is the pointwise infimum of family of affine functions

of (lambda, nu)

• Thus, g is concave even if problem is not

convex

A KTEC Center of Excellence

32

Lagrange Dual Function

• Lagrange Dual provides lower bound on

objective value at solution

A KTEC Center of Excellence

33

Lagrangian as Linear Approximation, Lower Bound

• Simple interpretation of Lagrangian

• Can incorporate the constraints into objective as

indicator functions

• Infinity if violated, 0 otherwise:

0

• In Lagrangian we use a “soft” linear approximation to the

indicator functions; under-estimator since

A KTEC Center of Excellence

34

Lagrange Dual Problem

• Why not make the lower bound best possible?

• Dual problem:

• Always convex opt. problem (even when primal

is non-convex)

• Weak Duality: d* <= p* (have already seen

this)

A KTEC Center of Excellence

35

Strong Duality

• If d* = p*, strong duality holds

• Does not hold in general

• Slater’s Theorem: If convex problem, and

strictly feasible point exists, then strong

duality holds! (proof too involved, refer to text)

• => For convex problems, can use dual problem to find solution

A KTEC Center of Excellence

36

Complementary Slackness

• When strong duality holds

(definition)

(since constraints

satisfied at x*)

• Sandwiched between f0(x), last 2 inequalities are

equalities, simple!

A KTEC Center of Excellence

37

Complementary Slackness

• Which means:

• Since each term is non-positive, we have

complementary slackness:

• Whenever constraint is non-active,

corresponding multiplier is zero

A KTEC Center of Excellence

38

Complementary Slackness

• This can also be described by

• Since usually only a few active constraints at

solution (see geometry), the dual variable

lambda is often sparse

• Note: In general no guarantee

A KTEC Center of Excellence

39

Complementary Slackness

• As we will see, this is why support vector

machines result in solution with only key

support vectors

• These come from the dual problem, constraints correspond to points, and

complementary slackness ensures only the “active” points are kept

A KTEC Center of Excellence

40

Complementary Slackness

• However, avoid common misconceptions when

it comes to SVM and complementary slackness!

• E.g. if Lagrange multiplier is 0, constraint could

still be active! (not bijection!)

• This means:

A KTEC Center of Excellence

41

KKT Conditions

• The KKT conditions are then just what we

call that set of conditions required at the

solution (basically list what we know)

• KKT conditions play important role

• Can sometimes be used to find solution analytically

• Otherwise can think of many methods as ways of solving KKT

conditions

A KTEC Center of Excellence

42

KKT Conditions

• Again given strong duality and assuming

differentiable, since

gradient must be 0 at x*

• Thus, putting it all together, for non-convex

problems we have

A KTEC Center of Excellence

43

KKT Conditions – non-convex

• Necessary conditions

A KTEC Center of Excellence

44

KKT Conditions – convex

• Also sufficient

conditions:

• 1+2 -> xt is feasible.

• 3 -> L(x,lt,nt) is convex

• 5 -> xt minimizes L(x,lt,nt)

so g(lt,nt) = L(xt,lt,nt)

A KTEC Center of Excellence

45

Brief description of interior point method

• Solve a series of equality constrained problems

with Newton’s method

• Approximate constraints with log-barrier

(approx. of indicator)

A KTEC Center of Excellence

46

Brief description of interior point method

• As t gets larger, approximation becomes better

A KTEC Center of Excellence

47

Central Path Idea

A KTEC Center of Excellence

48

CVX: Convex Optimization Made Easy

• CVX is a Matlab toolbox

• Allows you to flexibly express convex optimization problems

• Translates these to a general form and uses efficient solver

(SOCP, SDP, or a series of these)

• http://www.stanford.edu/~boyd/cvx/

• All you have to do is design the convex

optimization problem

• Plug into CVX, a first version of algorithm implemented

• More specialized solver may be necessary for some applications

A KTEC Center of Excellence

49

CVX - Examples

• Quadratic program: given H, f, A, and b

• cvx_begin

variable x(n)

minimize (x’*H*x + f’*x)

subject to

A*x >= b

cvx_end

A KTEC Center of Excellence

50

CVX - Examples

• SVM-type formulation with L1 norm

• cvx_begin

variable w(p)

variable b(1)

variable e(n)

expression by(n)

by = train_label.*b;

minimize( w'*(L + I)*w + C*sum(e) + l1_lambda*norm(w,1) )

subject to

X*w + by >= a - e;

e >= ec;

cvx_end

A KTEC Center of Excellence

51

CVX - Examples

• More complicated terms built with expressions

• cvx_begin

variable w(p+1+n);

expression q(ec);

for i =1:p

for j =i:p

if(A(i,j) == 1)

q(ct) = max(abs(w(i))/d(i),abs(w(j))/d(j));

ct=ct+1;

end

end

end

minimize( f'*w + lambda*sum(q) )

subject to

X*w >= a;

cvx_end

A KTEC Center of Excellence

52

Questions

• Questions, Comments?

A KTEC Center of Excellence

53

Extra proof

A KTEC Center of Excellence

54