Title Layout

advertisement

Shared Computing Cluster

Advanced Usage

Research Computing Services

Outline

›

SCC design overview

›

SCC Login Nodes

›

Interactive Jobs

›

Batch Jobs

›

Multithreaded Jobs

›

MPI Jobs

›

Job Arrays

›

Job dependence

›

Interactive Graphics jobs

›

Jobs monitoring

›

Jobs analysis

›

Code Optimization

›

qacct, acctool and other useful commands

Rear View

Ethernet

Infiniband

switch

Compute

nodes

Server Cabinets

SCC Compute node

There are hundreds of nodes.

Each has its own properties and designation.

• Processor

• Number of Cores

• Memory

• Network connection

• CPU Architecture

SCC on the web: http://www.bu.edu/tech/support/research/computing-resources/tech-summary/

<- Chassis ->

<- Cabinets ->

<- Cabinets ->

<- Chassis ->

MPI jobs only

16 cores; 128 GB per node

1 Gbps Ethernet & FDR Infiniband

Shared nodes with

16 cores & 128 GB per node

1p jobs and omp jobs

1 Gbps Ethernet

Shared nodes with

16 cores & 256 GB per node

1p jobs and omp jobs

10 Gbps Ethernet

To get information about each node execute qhost

scc2 ~>

local

HOSTNAME

qhost

qhost

Show status of each host

ARCH

NCPU

LOAD

MEMTOT

MEMUSE

SWAPTO

SWAPUS

------------------------------------------------------------------------------global

-

-

-

-

-

-

-

geo

linux-x64

12

0.34

94.4G

16.1G

8.0G

2.2G

scc-aa1

linux-x64

16

9.78

126.0G

2.6G

8.0G

19.3M

scc-aa2

linux-x64

16 16.11

126.0G

5.1G

8.0G

0.0

scc-aa3

linux-x64

16 16.13

126.0G

5.1G

8.0G

0.0

scc-aa4

linux-x64

16 16.03

126.0G

5.3G

8.0G

2.7M

scc-aa5

linux-x64

16 16.01

126.0G

2.1G

8.0G

18.7M

scc-aa6

linux-x64

16 16.00

126.0G

4.9G

8.0G

2.4M

scc-aa7

linux-x64

16 16.01

126.0G

5.0G

8.0G

16.9M

scc-aa8

linux-x64

16 16.03

126.0G

5.7G

8.0G

18.5M

scc-ab1

linux-x64

16 16.00

126.0G

58.2G

8.0G

89.7M

qhost

scc2 ~>

qhost -F

local

HOSTNAME

ARCH

Detailed information about each node

NCPU

LOAD

MEMTOT

MEMUSE

SWAPTO

SWAPUS

------------------------------------------------------------------------------scc-aa1

linux-x64

...

hl:arch=linux-x64

hl:num_proc=16.000000

hl:mem_total=125.997G

hl:swap_total=8.000G

hl:virtual_total=133.997G

hl:scratch_free=840.000G

...

hf:cpu_arch=sandybridge

hf:cpu_type=E5-2670

hf:eth_speed=1.000000

hf:ib_speed=56.000000

16

0.00

126.0G

1.6G

8.0G

19.3M

qhost

scc2 ~>

qhost -j

local

HOSTNAME

ARCH

Print all the jobs running on each host

NCPU

LOAD

MEMTOT

MEMUSE

SWAPTO

SWAPUS

------------------------------------------------------------------------------scc-aa2

job-ID

linux-x64

prior

name

user

16 16.05

126.0G

5.2G

state submit/start at

8.0G

queue

0.0

master ja-task-ID

---------------------------------------------------------------------------------------------5299960 0.30000 cu_pt

bmatt

r

01/17/2015 18:25:53 a128@scc-a MASTER

a128@scc-a SLAVE

a128@scc-a SLAVE

a128@scc-a SLAVE

a128@scc-a SLAVE

a128@scc-a SLAVE

a128@scc-a SLAVE

a128@scc-a SLAVE

qhost

scc2 ~>

local

HOSTNAME

qhost -q

Show information about queues for each host

ARCH

NCPU

LOAD

MEMTOT

MEMUSE

SWAPTO

SWAPUS

------------------------------------------------------------------------------global

-

-

-

-

-

-

-

geo

linux-x64

12

0.30

94.4G

16.1G

8.0G

2.2G

scc-aa1

linux-x64

16 15.15

126.0G

2.6G

8.0G

19.3M

16 16.15

126.0G

5.1G

8.0G

0.0

a

BP

0/16/16

as

BP

0/0/16

a128

BP

0/0/16

scc-aa2

linux-x64

a

BP

0/0/16

as

BP

0/0/16

a128

BP

0/16/16

Service Models – Shared and Buy-In

~ 55%

~ 45%

Buy-In: purchased by individual

Shared: paid for by BU and

faculty or research groups

through the Buy-In program with

priority access for the purchaser.

university-wide grants and are free

to the entire BU Research

Computing community.

Shared

Buy-In

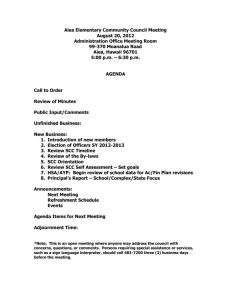

SCC basic organization

Public Network

SCC1

SCC2

SCC3

SCC4

VPN only

File Storage

Login Nodes

Private Network

Compute Nodes

More than 350 nodes

with

~ 6300 CPUs and

232 GPUs

SCC Login nodes rules

Login nodes are designed for light work:

› Text editing

› Light debugging

› Program compilation

› File transfer

There are 4 login nodes with 12 cores each and more than 1400 SCC users

SCC Login nodes rules

To ensure effective and smooth experience for everyone,

the users should NOT:

› Execute a program on a login node that runs longer than

10-15 minutes

› Execute parallel programs on a login node

3 types of jobs

› Batch job – execution of the program without manual

intervention

› Interactive job – running interactive shell: run GUI

applications, code debugging, benchmarking of serial and

parallel code performance…

› Interactive Graphics job (new)

Interactive Jobs

qsh

qlogin

qrsh

qsh

qlogin /

qrsh

X-forwarding is required

✓

—

Session is opened in a separate

window

✓

—

Allows for a graphics window to be

opened by a program

✓

✓

Current environment variables can be

passed to the session

✓

—

Batch-system environment variables

($NSLOTS, etc.) are set

✓

—

Request interactive job

scc2 ~>

qsh

pwd

/projectnb/krcs

local

scc2 ~>

qsh

Your job 5300277 ("INTERACTIVE") has been submitted

waiting for interactive job to be scheduled ...

Your interactive job 5300277 has been successfully scheduled.

scc2 ~ >

scc2 ~> pwd

/projectnb/krcs

Request interactive job with additional options

scc2 ~>

module load R

local ~>

scc2

qsh –pe omp 4 –l mem_total 252G -V

Your job 5300277 ("INTERACTIVE") has been submitted

waiting for interactive job to be scheduled ...

Your interactive job 5300277 has been successfully scheduled.

scc2 ~ >

scc2 ~> echo $NSLOTS

4

scc2 ~> module list

Currently Loaded Modulefiles:

1) pgi/13.5

2) R/R-3.1.1

qsh

scc2 ~ > qsh -P krcs -pe omp 16

qsh –now n

Your

job 5300273 ("INTERACTIVE") has been submitted

local

waiting for interactive job to be scheduled ....

Your "qsh" request could not be scheduled, try again later.

scc2 ~ > qsh -P krcs -pe omp 16 –now n

Your job 5300277 ("INTERACTIVE") has been submitted

waiting for interactive job to be scheduled ...

Your interactive job 5300277 has been successfully scheduled.

scc2 ~ >

When the cluster is busy, or when a number of additional options are added to

interactive job request the schedule cannot satisfy the request immediately.

Add “-now n” option to your interactive job request to add this job into pending

queue.

qrsh

scc2 ~ > qrsh –pe omp 4 -V

RSA host key for IP address '192.168.18.180' not in list of known hosts.

local

Last login: Fri Jan 16 16:50:34 2015 from scc4p.scc.bu.edu

scc-pi4 ~ > pwd

/usr1/scv/koleinik

Jobs started with qrsh command do not require

X-forwarding;

scc-pi4 ~ > echo $NSLOTS

scc-pi4 ~ > module list

Currently Loaded Modulefiles:

1) pgi/13.5

They will start in the same window;

Current directory will be set to home;

Environment variables cannot be passed;

Submitting Batch Jobs

# local

Submit a (binary) program

scc2 ~ > qsub –b y printenv

Your job 5300301 ("printenv") has been submitted

# Submit a program using script

scc-pi4 ~ > qsub myScript.sh

Your job 5300302 (“myScript") has been submitted

qsub

Check the job

qstat

local ~ > qstat –u koleinik

scc2

job-ID

prior

name user

state submit/start at

queue

slots ja-task-ID

----------------------------------------------------------------------------------------------------5260168 0.11732 a1

koleinik r

01/22/2015 14:59:22 budge@scc-jc1.scc.bu.edu

# Check only running jobs

scc-pi4 ~ > qstat –u koleinik –s r

# Check resources requested for each job

scc-pi4 ~ > qstat –u koleinik –r

12

4

Parallelization on the SCC

2 types of parallelization:

- multithreaded/OpenMP (uses some or all cores on one node)

- mpi (uses multiple cores possibly across a number of nodes)

Multithreaded parallelization on the SCC

C, C++, FORTRAN, R, Python, etc. allow for multithreaded

type of parallelization. This normally requires to add some

special directives within the code. There are a number of

applications which will also parallelize if appropriate option

is given on the command line

Multithreaded parallelization on the SCC

OMP parallelization, using C:

#pragma omp parallel

{

threads = omp_get_num_threads();

id = omp_get_thread_num();

printf(" hello from thread %d out of %d threads!\n", id, threads);

}

Multithreaded parallelization on the SCC

Multithreaded parallelization, using R:

library(parallel)

registerDoMC(nCores)

# Execute sampling and analysis in parallel

matrix <- foreach(i=1:nSim, .combine=rbind) %dopar% {

perm <- sample(D, replace=FALSE)

mdl <- lm(perm ~ M)

c(i, coef(mdl))

}

Multithreaded parallelization on the SCC

Batch script options to submit a multi-threaded program

# Request 8 cores. This number can be up to 16

#$ -pe omp 8

#

# For OMP C or FORTRAN code you need to set enviroment variable:

export OMP_NUM_THREADS=$NSLOTS

./program arg1 arg2

MPI parallelization on the SCC

Batch script options to submit MPI program

# Request 32 cores. This number should be multiple of 16

#$ -pe mpi_16_tasks_per_node 32

#

mpirun –np 32 ./program arg1 arg2

MPI parallelization on the SCC

Check which nodes the program runs on (expanded view for MPI jobs)

qstat

scc2 ~ > qstat –u <user_name> –g t

local

job-ID

prior

name

user

state submit/start at

queue

master ja-task-ID

-----------------------------------------------------------------------------------------------------------------5348232 0.24921 program

user

r

01/23/2015 06:52:00 straub-mpi@scc-nb3.scc.bu.edu

MASTER

straub-mpi@scc-nb3.scc.bu.edu

SLAVE

straub-mpi@scc-nb3.scc.bu.edu

SLAVE

straub-mpi@scc-nb3.scc.bu.edu

SLAVE

straub-mpi@scc-nb3.scc.bu.edu

SLAVE

01/23/2015 06:52:00 straub-mpi@scc-nb7.scc.bu.edu

SLAVE

straub-mpi@scc-nb7.scc.bu.edu

SLAVE

. . .

5348232 0.24921 program

. . .

user

r

MPI parallelization on the SCC

Possible choices for number of processors on the SCC:

4 tasks per node: 4, 8, 12, …

8 tasks per node: 16, 24, 32, …

12 tasks per node: 12, 24, 36, …

16 tasks per node: 16, 32, 48, …

Bad choice:

Better:

# should be used for very small number of tasks

# should be used for very small number of tasks

mpi_4_tasks_per_node 12

mpi_12_tasks_per_node 12

Array Jobs

An array job executes independent copy of the same job script. The number of tasks to be executed is

set using-t option to the qsub command, .i.e:

scc % qsub -t 1-10 myscript.sh

The above command will submit an array job consisting of 10 tasks, numbered from 1 to 10. The batch

system sets upSGE_TASK_ID environment variable which can be used inside the script to pass the task

ID to the program:

#!/bin/bash/

#$ -N myjob

#$ -j y

Rscript myRfile.R $SGE_TASK_ID

Where my job will execute? How long will it wait in the queue?…

› Type of the application

› Additional resources requested

› What other users do

Where my job will execute? How long will it wait in the queue?…

There are a number of queues defined on the SCC.

Various types of jobs are assigned to the different queues.

Jobs in a particular queue can execute only on designated

nodes.

Check status of the queues

qstat

scc2

local~ > qstat –g c

CLUSTER QUEUE

CQLOAD

USED

RES

AVAIL

TOTAL aoACDS

cdsuE

-------------------------------------------------------------------------------a

0.89

128

0

448

576

0

0

a128

0.89

384

0

192

576

0

0

as

0.89

0

0

576

576

0

0

b

0.96

407

0

9

416

0

0

bioinfo

0.00

0

0

48

48

0

0

bioinfo-pub

0.00

0

0

48

48

0

0

Queues on the SCC

› a* queues - for MPI jobs

› b* 1p and omp jobs

› c* large memory jobs

Get information about the queue

local ~ > qconf –sq a

scc2

hostlist

@aa @ab @ac @ad @ae

qtype

BATCH

pe_list

mpi_16_tasks_per_node_a

h_rt

120:00:00

qconf

Get information about various options for qsub command

local ~ > qconf –sc

scc2

#name

shortcut

type

#-----------------------------------------------------------cpu_arch

cpu_a

RESTRING

cpu_type

cpu_t

RESTRING

eth_speed

eth_sp

INT

scratch

MEMORY

…

scratch_free

qconf

Why my job failed… WHY ?

Batch Script Syntax

I submitted a job and it's hung in the queue…

Possible Cause: Check if the script has CR symbols at the end of

the lines:

cat -A script_file

You should NOT see ^M characters there

dos2unix script_file

I submitted a job and it failed … Why?

Add the option “-m ae” to the batch script (or qsub command):

an email will be sent at the end of the job and if the job is

aborted.

Job 5300308 (printenv) Complete

User

= koleinik

Queue

= linga@scc-kb5.scc.bu.edu

Host

= scc-kb5.scc.bu.edu

Start Time

= 01/17/2015 23:31:44

End Time

= 01/17/2015 23:31:44

User Time

= 00:00:00

System Time

= 00:00:00

Wallclock Time = 00:00:00

CPU

= 00:00:00

Max vmem

= NA

Exit Status

=0

Time Limit

Job 9022506 (myJob) Aborted

Exit Status = 137

Signal = KILL

User = koleinik

Queue = b@scc-bc3.scc.bu.edu

Host = scc-bc3.scc.bu.edu

Start Time = 08/18/2014 15:58:55

End Time = 08/19/2014 03:58:56

CPU = 11:58:33

Max vmem = 4.324G

failed assumedly after job because:

job 9022506.1 died through signal KILL (9)

The default time for interactive and

non-interactive jobs on the SCC is 12

hours.

Make sure you request enough time

for your application to complete:

#$ -l h_rt 48:00:00

Dear Admins:

I submitted a job and it takes longer than I expected.

Is it possible to extend the time limit?

Unfortunately, no…

SCC batch system does not allow to alter the time limit

even to the Systems Administrators.

Memory

Job 1864070 (myBigJob) Complete

User = koleinik

Queue = linga@scc-kb8.scc.bu.edu

Host = scc-kb8.scc.bu.edu

Start Time = 10/19/2014 15:17:22

End Time = 10/19/2014 15:46:14

User Time = 00:14:51

System Time = 00:06:59

Wallclock Time = 00:28:52

CPU = 00:27:43

Max vmem

= 207.393G

Exit Status

= 137

There is a number of

nodes that have only 3GB

of memory per slot, so by

default 1p-job should not

use more than 3-4GB of

memory.

If the program needs more

memory it should request

additional resources.

scc2 ~ > qhost –h scc-kb8

HOSTNAME

ARCH

NCPU LOAD MEMTOT MEMUSE SWAPTO SWAPUS

------------------------------------------------------------------------------global

scc-kb8

linux-x64

64 4.03 252.2G

8.6G

8.0G

36.8M

Memory

Currently, on the SCC there are nodes with

16 cores & 128GB

=

8GB/per slot

16 cores & 256GB

= 16GB/per slot

12 cores & 48GB

=

4GB/per slot

8 cores & 24GB

=

3GB/per slot

8 cores & 96GB

= 12GB/per slot

64 cores & 256GB = 4GB/per slot

64 cores & 512GB = 8GB/per slot

Available only to Med. Campus users

Memory

Example:

Single processor job needs 10GB of memory.

-------------------------

# Request a node with at least 12 GB per slot

#$ -l mem_total=94G

Memory

Example:

Single processor job needs 50GB of memory.

------------------------# Request a large memory node (16GB of memory per slot)

#$ -l mem_total=252G

# Request a few slots

#$ -pe omp 3

* Projects that can run on LinGA nodes might need some

additional options

Memory

Valgrind memory mismangement detector:

scc2 val > valgrind --tool=memcheck --leak-check=yes ./mytest

==63349== Memcheck, a memory error detector

==63349== Copyright (C) 2002-2012, and GNU GPL'd, by Julian Seward et al.

==63349== Using Valgrind-3.8.1 and LibVEX; rerun with -h for copyright info

==63349== Command: ./mytest

==63349==

String = tutorial, Address = 85733440

String = tutorial from SCC, Address = 85733536

==63349==

==63349== HEAP SUMMARY:

==63349==

in use at exit: 0 bytes in 0 blocks

==63349==

total heap usage: 2 allocs, 2 frees, 271 bytes allocated

==63349==

==63349== All heap blocks were freed -- no leaks are possible

==63349==

==63349== For counts of detected and suppressed errors, rerun with: -v

==63349== ERROR SUMMARY: 0 errors from 0 contexts (suppressed: 6 from 6)

Jobs using more than 1 CPU

Job 1864070 (myParJob) Complete

User = koleinik

Queue = budge@scc-hb2.scc.bu.edu

Host = scc-hb2.scc.bu.edu

Start Time = 11/29/2014 00:48:27

End Time = 11/29/2014 01:33:35

User Time = 02:24:13

System Time = 00:09:07

Wallclock Time = 00:45:08

CPU = 02:38:59

Max vmem = 78.527G

Exit Status = 137

Some applications try to

detect the number of cores and

parallelize if possible.

One common example is MATLAB.

Always read documentation and

available options to

applications. And either

disable parallelization or

request additional cores.

If the program does not allow

to control the number of cores

used – request the whole node.

Jobs using more than 1 CPU

Example:

MATLAB by default will use up to 12 CPUs.

-------------------------

# Start MATLAB using a single thread option:

matlab -nodisplay -singleCompThread -r "n=4, rand(n), exit"

Jobs using more than 1 CPU

Example:

Running MATLAB Parallel Computing Toolbox.

-------------------------

# Request 4 cores:

#$ -pe omp 4

matlab -nodisplay -r "matlabpool open 4, s=0; parfor i=1:n, s=s+i; end,

matlabpool close, s, exit"

My Job used to run fine and now it fails… Why?

Check your disc usage!

- To check the disc usage in your home directory use quota

- To check the disc usage by the project use pquota –u project_name

I submitted a job and it failed … Why?

We are always happy to help!

Please email us at help@scc.bu.edu

Please include:

1. Job ID

2. Your working directory

3. Brief description of the problem

How I can retrieve the information about the job I recently ran?

scc2 ~ > qacct –d 7 –o koleinik -j

qacct - report and account for SCC usage:

-b

-d

-e

-h

-j

-o

-q

-t

-P

Begin Time MMDDhhmm

Days

EndTime MMDDhhmm

HostName

Job ID

Owner

Queue

Task ID Range

Project

qacct output

qname

hostname

group

owner

project

department

jobname

jobnumber

taskid

b

scc-bd3.scc.bu.edu

scv

koleinik

krcs

defaultdepartment

ibd_check

5060718

4

qacct output

granted_pe

slots

failed

exit_status

ru_wallclock

ru_utime

ru_stime

ru_maxrss

...

ru_inblock

ru_oublock

...

maxvmem

NONE

1

0

0

231

171.622

16.445

14613128

# Indicates a problem

# Exit status of the job script

# Time (in seconds)

# Maximum resident set size (in bytes)

4427096

308408

# Block input operations

# Block output operations

14.003G

# Maximum virtual memory usage

Job Analysis

I submitted my job. And the program did not run .

My job is now in Eqw status…

scc2 advanced > cat -v myScript.sh

#!/bin/bash^M

^M

#Give my job a name^M

#$ -N myProgram^M

#^M

./myProgram^M

scc2 advanced > dos2unix myScript.sh

If a text file was created or

edited outside of the SCC –

make sure it is converted to the

proper format!

I submitted my job? How can I monitor it?

# Get the host name

scc2 ~ > qstat –u <userID>

job-ID prior

name

user

state submit/start at

queue

slots

------------------------------------------------------------------------------------------------------5288392 0.11772 myScript

koleinik

r

01/17/2015 08:48:15 linga@scc-ka6.scc.bu.edu

1

# Login to the host

scc2 ~ > ssh scc-ka6

top

scc2 ~ > top –u <userID>

PID USER

24793 koleinik

PR

20

NI VIRT RES SHR S %CPU %MEM

0 2556m 1.2g 5656 R 533.3 0.9

TIME+ COMMAND

0:06.87 python

top

scc2 ~ > top –u <userID>

PID USER

24793 koleinik

PID

PR

--

PR

20

NI VIRT RES SHR S %CPU %MEM

0 2556m 1.2g 5656 R 533.3 0.9

TIME+ COMMAND

0:06.87 python

Process Id

-- Priority of the task

VIRT – Total amount of virtual memory used

RES – Non-swapped physical memory a task has used

(RES = CODE+DATA)

SHR – Shared memory used by the task (memory that could be potentially

shared with other tasks)

top

scc2 ~ > top –u <userID>

PID USER

24793 koleinik

PR

20

NI VIRT RES SHR S %CPU %MEM

0 2556m 1.2g 5656 R 533.3 0.9

TIME+ COMMAND

0:06.87 python

S – Process Status:

‘D’ = uninterruptable sleep

‘R’ = running

‘S’ = sleeping

‘T’ = traced or stopped

‘Z’ = zombie

%CPU – CPU usage

%MEM – Currently used share of available physical memory

TIME+ -- CPU time

COMMAND – Command/program used to start the task

top

scc2 ~ > top –u <userID>

PID USER

24793 koleinik

PR

20

NI VIRT RES SHR S %CPU %MEM

0 2556m 1.2g 5656 R 533.3 0.9

TIME+ COMMAND

0:06.87 python

The job was submitted requesting only 1 slot, but it is using more

than 5 CPUs. This jobs will be aborted by the process reaper.

top

scc2 ~ > top –u <userID>

PID

46746

46748

46749

46750

46747

46703

46727

USER

koleinik

koleinik

koleinik

koleinik

koleinik

koleinik

koleinik

PR

20

20

20

20

20

20

20

NI VIRT RES SHR S

0 975m 911m 2396 R

0 853m 789m 2412 R

0 1000m 936m 2396 R

0 1199m 1.1g 2396 R

0 857m 793m 2412 R

0 9196 1424 1180 S

0 410m 301m 3864 S

%CPU %MEM

100.0 0.7

100.0 0.6

100.0 0.7

100.0 0.9

99.7 0.6

0.0 0.0

0.0 0.2

TIME+ COMMAND

238:08.88 R

238:07.88 R

238:07.84 R

238:07.36 R

238:07.20 R

0:00.01 5300788

0:05.11 R

The job was submitted requesting only 1 slot, but it is using 4CPUs.

This jobs will be aborted by the process reaper.

top

scc2 ~ > top –u <userID>

PID USER

8012 koleinik

PR

20

NI VIRT

0 24.3g

RES

23g

SHR S %CPU %MEM

16g R 99.8 25.8

TIME+ COMMAND

2:48.89 R

The job was submitted requesting only 1 slot, but it is using 25% of

all available memory on the machine. This jobs might fail due to the

memory problem (especially if other jobs on this machine are also

using a lot of memory).

qstat

qstat command has many options!

qstat –u <userID>

# list all users’ the jobs in the queue

qstat –u <userID> -r

qstat –u <userID> -g t

# check resources requested for each job

# display each task on a separate line

qstat

qstat –j <jobID>

# Display full information about the job

job_number:

. . .

5270164

sge_o_host:

scc1

. . .

hard resource_list:

. . .

h_rt=2592000

usage

. . .

1:

# time in seconds

cpu=9:04:39:31, mem=163439.96226 GBs, io=0.21693,

vmem=45.272G, maxvmem=46.359G

Program optimization

My program runs too slow… Why?

Before you look into parallelization of your code, optimize it. There

are a number of well know techniques in every language. There are also

some specifics in running the code on the cluster!

My program runs too slow… Why?

1. Input/Output

› Reduce the number of I/O to the home directory/project space (if possible);

› Group smaller I/O statements into larger where possible

› Utilize local /scratch space

› Optimize the seek pattern to reduce the amount of time waiting for disk seeks.

› If possible read and write numerical data in a binary format

My program runs too slow… Why?

2. Other tips

› Many languages allow operations on vectors/matrices;

› Pre-allocate arrays before accessing them within loops;

› Reuse variables when possible and delete those that are not needed anymore;

› Access elements within your code according to the storage pattern in this

language (FORTRAN, MATLAB, R – in columns; C, C++ - rows)

My program runs too slow… Why?

3. Email SCC

The members of out group will be happy to assist you with the tips how to improve

the performance of your code for the specific language.

How many SUs I used ?

› acctool

#My project(s) total usage on all hosts yesterday (short form):

% acctool y

#My project(s) total usage on shared nodes for the past moth

% acctool –host shared –b 1/01/15

y

#My balance for the project scv

% acctool -p scv -balance -b 1/01/15 y