Lecture 10 Instruction Set Architecture


Lecture 11 Sequential Logic, ISA, ALU

Prof. Sin-Min Lee

Department of Computer Science

Registers

• Two independent flip-flops with clear and preset

Registers

• Missing Q̄ and preset; clocks ganged (tied together)

• Inversion bubbles cancel, so the register loads on the rising clock edge

• Can make an 8-bit register with this

Review: Bus Concept

Review: CPU Building Blocks

Registers (IR, PC, ACC)

Control Unit (CU)

Arithmetic Logic Unit (ALU)

The Simplest Computer Building Blocks

[Figure: a CPU containing the Instruction Register (IR), Program Counter (PC), Control Unit (CU), ALU, Status Register (FLAG), and Accumulator (ACC), connected to a RAM whose locations are numbered 0, 1, 2, 3, 4, 5, ...]

John von Neumann with his computer at Princeton

What’s an ALU?

1. ALU stands for Arithmetic Logic Unit.

2. An ALU is a digital circuit that performs arithmetic (add, subtract, ...) and logical (AND, OR, NOT) operations.

3. John von Neumann proposed the ALU in 1945 while working on the EDVAC.

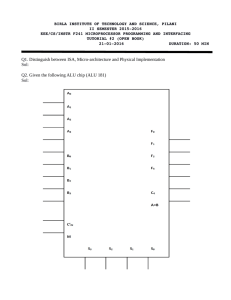

Typical Schematic Symbol of an ALU

A and B: the inputs to the ALU (aka operands)

R: output, or result

F: code or instruction from the Control Unit (aka op-code)

D: output status; it indicates cases such as:

• carry-in

• carry-out

• overflow

• division-by-zero

• and so on

What is Computer Architecture?

• Computer Architecture is the design of the computer at the hardware/software interface.

• Computer Architecture = Instruction Set Architecture + Machine Organization

– Instruction Set Design at the above interface (Compiler/System View)

– Machine Organization of Hardware Components (Logic Designer’s View)

Instruction Set Architecture

• Instruction set architecture comprises the attributes of a computing system as seen by the assembly language programmer or compiler. This includes

– Instruction Set (what operations can be performed?)

– Instruction Format (how are instructions specified?)

– Data storage (where is data located?)

– Addressing Modes (how is data accessed?)

– Exceptional Conditions (what happens if something goes wrong?)

• A good understanding of computer architecture is important for compiler writers, operating system designers, and general computer programmers.

Instruction Set Architecture

• An abstract interface between the hardware and the lowest-level software of a machine that encompasses all the information necessary to write a machine language program that will run correctly, including instructions, registers, memory access, I/O, and so on.

Key considerations in “Computer Architecture”

[Figure: levels of abstraction. Software: Application, Operating System, Compiler, Firmware. Instruction Set Architecture (Instr. Set Proc., I/O system) at the boundary. Hardware: Datapath & Control, Digital Design, Circuit Design, Layout.]

• Coordination of many levels of abstraction

• Under a rapidly changing set of forces

• Design, Measurement, and Evaluation

Instruction Set Architecture: An Abstraction

• A very important abstraction

– interface between hardware and low-level software

– standardizes instructions, machine language bit patterns, etc.

– advantage: different implementations of the same architecture

– disadvantage: sometimes prevents using new innovations

IBM 360 architecture

• The first ISA used for multiple models

– IBM invested $5 billion

– 6 models introduced in 1964

• Performance varied by a factor of 50

– 24-bit addresses (huge for 1964)

• largest model only had 512 KB memory

– Huge success!

– Architecture still in use today

• Evolved to 370 (added virtual addressing) and 390 (31-bit addresses).

“Let’s learn from our successes” ...

• Early ’70s: IBM took another big gamble

• “FS” (Future Systems): a new layer between the ISA and high-level languages

– Put a lot of the OS function into hardware

• Huge failure

Moral: Getting the right abstraction is hard!

How to Program a Computer?

• The most natural way is to encode whatever you want the computer to do as electrical signals (on and off)

– since this is the only thing it understands

• Of course, we need something simpler to work with

• Machine Code

• Assembly language

• High-level languages

– C/C++, Fortran, Java, C#


Key ISA decisions

• instruction length

– are all instructions the same length?

• how many registers?

• where do operands reside?

– e.g., can you add contents of memory to a register?

• instruction format

– which bits designate what?

• operands

– how many? how big?

– how are memory addresses computed?

• operations

– what operations are provided?

Running examples

We’ll look at four example ISAs:

– Digital’s VAX (1977): elegant

– Intel’s x86 (1978): ugly, but successful (IBM PC)

– MIPS: focus of text, used in assorted machines

– PowerPC: used in Macs, IBM supercomputers, ...

• VAX and x86 are CISC (“Complex Instruction Set Computers”)

• MIPS and PowerPC are RISC (“Reduced Instruction Set Computers”)

– almost all new ISAs of the ’80s and ’90s are RISC

• including VAX’s successor, the DEC Alpha

Instruction Length

Variable:

x86: instructions vary from 1 to 15 bytes long

VAX: from 1 to 54 bytes

Fixed:

MIPS, PowerPC, and most other RISCs: all instructions are 4 bytes long

Instruction Length

• Variable-length instructions (x86, VAX):

- require multi-step fetch and decode.

+ allow for a more flexible and compact instruction set.

• Fixed-length instructions (RISC’s)

+ allow easy fetch and decode.

+ simplify pipelining and parallelism.

- instruction bits are scarce.

What’s going on??

• How is it possible that ISAs of the ’70s were much more complex than those of the ’90s?

– Doesn’t everything get more complex?

– Today, transistors are much smaller and cheaper, and design tools are better, so building a complex computer should be easier.

• How could IBM make two models of the 370 ISA in the same year that differed by 50x in performance?

Microcode

• Another layer, between the ISA and hardware

– 1 instruction → sequence of microinstructions

– µ-instruction specifies values of individual wires

– Each model can have a different micro-language

• low-end (cheapest) model uses simple HW, long microprograms.

• We’ll look at the rise and fall of microcode later

• Meanwhile, back to ISAs ...

How many registers?

• All computers have a small set of registers

– Memory to hold values that will be used soon

– Typical instruction will use 2 or 3 register values

• Advantages of a small number of registers:

– It requires fewer bits to specify which one.

– Less hardware

– Faster access (shorter wires, fewer gates)

– Faster context switch (when all registers need saving)

• Advantages of a larger number:

– Fewer loads and stores needed

– Easier to do several operations at once

In 141, “load” means moving data from memory to register; “store” is the reverse.

How many registers?

VAX – 16 registers

R15 is program counter (PC)

Elegant! Loading R15 is a jump instruction

x86 – 8 general-purpose regs (fine print: some restrictions apply)

Plus floating-point and special-purpose registers

Most RISCs have 32 int and 32 floating-point regs

Plus some special-purpose ones

• PowerPC has 8 four-bit “condition registers”, a “count register” (to hold loop index), and others.

Itanium has 128 fixed, 128 float, and 64 “predicate” registers

Where do operands reside?

Stack machine:

“Push” loads memory into 1st register (“top of stack”), moves other regs down

“Pop” does the reverse.

“Add” combines contents of first two registers, moves rest up.

Accumulator machine:

Only 1 register (called the “accumulator”)

Instructions include “store” and “acc ← acc + mem”

Register-Memory machine:

Arithmetic instructions can use data in registers and/or memory

Load-Store Machine (aka Register-Register Machine):

Arithmetic instructions can only use data in registers.

Load-store architectures

can do:

add r1=r2+r3

load r3, M(address)

store r1, M(address)

can’t do:

add r1=r2+M(address)

forces heavy dependence on registers, which is exactly what you want in today’s CPUs

- more instructions

+ fast implementation (e.g., easy pipelining)

Where do operands reside?

VAX: register-memory

Very general. 0, 1, 2, or 3 operands can be in registers

x86: register-memory ...

But floating-point registers are a stack.

Not as general as VAX instructions

RISC machines:

Always load-store machines

I’m not aware of any accumulator machines in the last 20 years, but they may be used by embedded processors and might conceivably be appropriate for the 141L project.

Comparing the Number of Instructions

Code sequence for C = A + B

Stack: Push A; Push B; Add; Pop C

Accumulator: Load A; Add B; Store C

Register-Memory: Add C, A, B

Load-Store: Load R1,A; Load R2,B; Add R3,R1,R2; Store C,R3

Alternate ISAs

A = X*Y + X*Z

Stack

Accumulator

Reg-Mem

Load-store

Processing a C Program

High-level language program (in C)

void swap(int v[], int k) {
    int temp;
    temp = v[k];
    v[k] = v[k+1];
    v[k+1] = temp;
}

↓ C compiler

Assembly language program for MIPS

swap: muli $2, $5, 4
      add  $2, $4, $2
      lw   $15, 0($2)
      lw   $16, 4($2)
      sw   $16, 0($2)
      sw   $15, 4($2)
      jr   $31

↓ Assembler

Binary machine language program for MIPS

00000000101000010000000000011000
00000000100011100001100000100001
10001100011000100000000000000000
10001100111100100000000000000100
10101100111100100000000000000000
10101100011000100000000000000100
00000011111000000000000000001000


Functions of a Computer

• Data processing

• Data storage

• Data movement

• Control


Functions of a Computer

[Figure: source & destination of data; data movement apparatus; control mechanism; data storage facility; data processing facility]


Five Classic Components

[Figure: Computer = Processor (Control + Datapath), Memory, Input, Output]

Erkay Savas, 2016/3/16

System Interconnection


[Figure: motherboard with labeled PS/2 connectors, USB 2.0, SIMM sockets, sound, PCI card slots, parallel/serial ports, processor, and IDE connectors]


Inside the Processor Chip

[Figure: processor die with labeled instruction cache, data cache, control, bus, branch prediction, integer datapath, and floating-point datapath]


[Figure: a computer (CPU, memory, I/O, and system interconnection) connected to peripherals and a network]


[Figure: CPU components: ALU, registers, control unit, internal CPU interconnection, and cache memory]


Memory

• Nonvolatile:

– ROM

– Hard disk, floppy disk, magnetic tape, CD-ROM, USB memory

• Volatile:

– DRAM, usually used for main memory

– SRAM, used mainly for on-chip memory such as registers and cache

– DRAM is much less expensive than SRAM

– SRAM is much faster than DRAM


Application of Abstraction: A Hierarchical Layer of Computer Languages

High Level Language Program

↓ Compiler

Assembly Language Program

lw $15, 0($2)
lw $16, 4($2)
sw $16, 0($2)
sw $15, 4($2)

↓ Assembler

Machine Language Program

0000 1001 1100 0110 1010 1111 0101 1000
1010 1111 0101 1000 0000 1001 1100 0110
1100 0110 1010 1111 0101 1000 0000 1001
0101 1000 0000 1001 1100 0110 1010 1111

↓ Machine Interpretation

Control Signal Specification

⋮

ALUOP[0:3] <= InstReg[9:11] & MASK

The Organization of a Computer

• Since 1946 all computers have had 5 main components

[Figure: Processor (Control, Datapath), Memory, Input, Output]