slides here - tim bates


Experimental design and rationale

All slides here

http://timbates.wikidot.com/methodology-2

Part Two: Quantitative Methods

Experimental design and rationale (Tim)

Scale construction (Tim)

Designing studies with children (Morag)

Cognitive Neuroimaging (Mante)

Single-case studies (Thomas Bak)

Great topics…

Raising Human Capital & Well-being

Educational outcomes

Innovation/Entrepreneurship/Wealth

Mental Illness

What is schizophrenia? Why do some people become depressed after

childbirth?

Why do some people lack self-control? What is addiction? How can we

cure it?

How can we increase life-span cognitive function?

Understanding the mind

Status, trust, religion, forgiveness, reading, comprehension, memory,

dementia…

Human Nature, Human potentials

The problem we have…

“A series of novel and amusing figures

formed by the hand”

A world without Science

Bad science is dangerous

Should firefighters be literate?

Will increasing tax decrease the well-being of people on

£40,000/yr? Or make them less likely to grow their business?

Do children differ only in their learning styles? Acting on this belief is almost certainly harming children

Rohrer, D., & Pashler, H. (2012). Learning styles: where's the evidence? Med Educ, 46(7), 634-635. doi: 10.1111/j.1365-2923.2012.04273.x

Should we teach reading via whole language?

Overview

The research topic

The research question

Theory generation; Hypothesis formulation

Validity: Reverse causation, confounding, causality

Problems of poorly designed studies

Foundations

We wish to build correct theories of mental

mechanisms underlying behavior

If we correctly understand causal mechanisms then

intervening will necessarily change behavior.

How can we best understand mechanisms?

If there’s one thing I’d like you to “take away” it is this:

Ask: “What mechanism makes this happen?”

Theory

Theory necessarily goes beyond observation, or even a

catalogue of observation.

It makes testable predictions about observations yet to be made. Therefore, it can be informative, and can be wrong.

Contrast with:

1929 Vienna Positivism: The idea that knowledge can

only be what we observe and is provable.

Social constructionism and Post-modernism: The idea

that we have only narratives, largely socially constructed.

Why theory can be wrong

As a consequence of going beyond observation,

theories can be wrong.

Theories posit non-observable entities (latent constructs, like “episodic memory”, “phoneme buffer”, or “agreeableness”) and relations among these (mechanism and process) to explain observations:

That’s why they can be wrong

We can only subject theory to the risk of falsification

by testing predictions, so theory must make

predictions.

Progress toward truth

We cannot know when we have an eternally true theory.

We can know which of two competing theories is closer to the

truth

Rather than showing that one theory passes a test or fails a test,

we see which of two competing theories passes all/more tests

Experiments are imperfect tests of theory.

The art in research is designing experiments that honestly discriminate between competing theories.

This makes deriving hypotheses very important for supporting a theory

Most people are not great at formulating ideas in terms of

hypotheses: it seems not to be a natural human faculty

Takes practice.

If we can be wrong, how can

we choose between theories?

We can know the least wrong theory

Model fitting & model fit

Interestingly, then, though we cannot know what the

true theory is (even if we have it), we can objectively

improve our theories

Successive comparisons leave us closer to the true model

Lee, James J. (2012). Common Factors and Causal

Networks. European Journal of Personality, 26(4), 441-442.

doi: 10.1002/per.1873
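A minimal sketch of "knowing the least wrong theory": two competing theories predict the same observations, and we keep the one with the smaller prediction error. The data and both theories here are hypothetical, invented only to illustrate the comparison; real model fitting would also penalise model complexity.

```python
# Toy comparison of two competing theories by how well they predict the same data.
# The observations and both "theories" are hypothetical, chosen only for illustration.

def sum_squared_error(predict, xs, ys):
    """Total squared prediction error of one theory over the observed data."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys))


def least_wrong(theories, xs, ys):
    """Return the name of the best-fitting theory and the error of every theory."""
    errors = {name: sum_squared_error(f, xs, ys) for name, f in theories.items()}
    best = min(errors, key=errors.get)
    return best, errors


# Hypothetical observations.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

# Two hypothetical theories that make distinct predictions.
theories = {
    "A": lambda x: 2 * x,   # theory A predicts y = 2x
    "B": lambda x: x + 3,   # theory B predicts y = x + 3
}

best, errors = least_wrong(theories, xs, ys)
print(best, errors)
```

Neither theory need be true: the comparison only says which of the two is closer to the data we have.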

So far…

Test the best theory against its strongest competitor

Requires that the two theories make distinct

predictions: You test which gets these right

Next: What and how should I study using this

awesome new science culture we have learned?

Being a Scientist

Read biographies of Nobel winners:

“Avoid Boring People” by James Watson

“Dancing Naked in the Mind Field” by Kary Mullis

“What Do You Care What Other People Think?” by Richard Feynman

All about £0.01 + shipping on Amazon

Generating ideas

Learn what exists already

Humans uniquely have a generative culture

Attend talks and conferences, ask staff about their interests / projects, read journal articles

Observe, people watch, read classic literature: This is

what Shakespeare did

Keep a notebook: Make concrete suggestions to yourself

about possible projects

Do something: Run an experiment! That’s an awesome

place to get ideas because you are confronted by reality

Understand your Topic!

You will need to understand something similar to what

a paper gives you in its introduction:

What is the core question?

Why does this matter?

What are the core concepts and theories?

Key texts and authors on the general area

History of the topic

What current argument will you resolve?

Lesson 1: Practice

Your experiment can

Replicate a paper: {Doyen, 2012}

Test a prediction: {Martin, 2011, 55-62}

Contrast two theories: {Lewis, 2010, 1623-1628}

Make a new theory

“those who refuse to go beyond the facts, rarely get as far.”

Thomas Henry Huxley (1825-95) English biologist

Kurt Gödel proved that this will require creativity on your

part: There can never be an automatic system for

expanding the system

From Topic to Research

Question

Formulate the research question

Have you posed a question? An hypothesis is a

conjecture – a testable proposition about relations

between two or more phenomena or variables

(Kerlinger & Lee, 2000, p.15)

It should derive from (be a prediction of) some larger

theory or proto-theory

Good hypotheses have clear implications for testing the

stated relations between variables.

e.g. “If attention is driven by interest, then positive emotions

should narrow attention.”

Practical issues about where to

invest your time

Specify relationship(s) to be examined

For practicality’s sake: Are the relationship(s) empirically

testable?

Consider participant availability

If you research in this area, will it make any difference to

human well-being or knowledge?

Is this the place that you can best exert your labour? Is it

ready to move? Will this be a significant result?

Hypothesis checklist

1. Does your hypothesis:

    1. Name two or more variables?

    2. State a relationship between these variables?

    3. State the nature of the relationship?

2. Is it stated in a testable tense?

    1. Does it imply the research design to be used?

    2. Does it define the population to be studied?

3. Will it generalise appropriately?

    1. Does it stipulate relationships among variables rather than names of statistical tests?

    2. Is it free of unnecessary methodological detail?

4. Does it distinguish predictions from competing theories?

Cone and Foster (2006), p.73

Hypotheses - examples

Close: The gene KIBRA mediates forgetting (but not learning)

Better: Controlling for initial learning, delayed recall on

paired associate learning will be associated with rs3987823

Close: Teachers’ understanding of their material improves their pupils’ learning and retention of information.

Better: “When teachers are randomly assigned to students, teachers’ knowledge (final grade) will be positively associated (r > .3) with year-5 pupil final grades”.

Personality (NEO PI-R) accounts for the heritable component

of happiness (SWBS) scores

“There is an innate language mechanism”

What, exactly, is it predicting? Need to define innate

Part 2: Testing causes in social

science

Causality

Fundamental to psychology

We are trying to learn what causes behavior

Causal Designs

Subjects: Random/representative

Measured causes (Independent Variables)

Measured consequences (Dependent Variables)

“Minimize” Confounds

Confound is an unmeasured IV

We don’t know what some confounds are: they are the IVs

of a better theory (which we don’t know about!)
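A minimal simulation of what an unmeasured IV does, assuming a toy model in which a confounder z drives both x and y and x has no effect on y at all. The variable names, effect sizes and sample size are illustrative assumptions, not taken from any study cited here.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(xs, ys, zs):
    """Correlation of x and y after statistically controlling for z."""
    rxy, rxz, ryz = pearson(xs, ys), pearson(xs, zs), pearson(ys, zs)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


def simulate_confound(n=5000, seed=1):
    """x and y are both caused by z; x has NO causal effect on y."""
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]
    x = [zi + rng.gauss(0, 1) for zi in z]
    y = [zi + rng.gauss(0, 1) for zi in z]
    return pearson(x, y), partial_corr(x, y, z)


raw_r, controlled_r = simulate_confound()
print("x-y correlation ignoring z:", round(raw_r, 2))
print("x-y correlation controlling for z:", round(controlled_r, 2))
```

If z is never measured, only the first number is available, and it looks like evidence that x causes y.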

Example Causal Model:

What causes wealth?

Ritchie, S. J., & Bates, T. C. (2013).

Enduring links from childhood

mathematics and reading achievement to

adult socioeconomic status. Psychological

Science, 24(7), 1301-1308. doi:

10.1177/0956797612466268

What’s wrong with measuring just

my cause and my effect?

“SES causes IQ scores”

“Exercise relieves Depression”

“Media causes anorexia”

They’re “obviously” the causes and cures, right?

If you find yourself saying “it makes sense”, then, like 99% of people, you are using just System 1 (Kahneman). You are saying we don’t need to test.

Confounds

So we get this

“Taking Vitamin C reduces car

accidents by 40% …”

Almost every claim in epidemiology is wrong

True even after controlling for all major covariates

99% of these are false positives riding on health

gradients linked to ability and capability

Davey Smith et al. (2009)

Why do we see this over and over in the press and in

scientific articles?

Social and structural factors in the (bad) business of

doing science (Ioannidis, 2010)

Case in point: Breastfeeding

Smart Mums: IQ & FADS2

Caspi et al. 2007

rs174575

Promoter region of fatty acid desaturase 2 (FADS2).

IQ advantage of 6.4 to 7 IQ points for

breast-fed versus non-breast-fed infants

That’s a massive effect!

More than wipes out the effects of status on ability

No main effect: Only among

children carrying one or more

C alleles of the rs174575 SNP,

but that was 90% of kids

(No) interaction effect of rs174575

status with breastfeeding

Bates et al 2010;

Martin et al 2011

p=0.67

No effect

N= 3,067

Steer et al. (2010)

N=7,000+

Reverse effect

do(mud)

Judea Pearl and Causality

“Look: the Mud made it rain last night!”

“No, the rain caused the mud!”

A correlation is an unresolved causal nexus

What we need is P(rain|do(mud))

Do(mud) Get(rain)
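A minimal sketch of the difference between observing mud and making mud, assuming a toy world in which rain causes mud and mud never causes rain. The probabilities (30% chance of rain, 5% chance of mud from some other source) are invented for illustration.

```python
import random


def p_rain_given_mud(n=20000, intervene=False, seed=2):
    """Estimate P(rain | mud) by observation, or P(rain | do(mud)) by intervention.

    Toy causal model: rain -> mud. With intervene=True we force mud to be
    present on every night, regardless of the weather.
    """
    rng = random.Random(seed)
    mud_nights = 0
    rainy_mud_nights = 0
    for _ in range(n):
        rain = rng.random() < 0.3          # assumed base rate of rain
        other_cause = rng.random() < 0.05  # assumed rate of mud without rain
        mud = True if intervene else (rain or other_cause)
        if mud:
            mud_nights += 1
            rainy_mud_nights += rain
    return rainy_mud_nights / mud_nights


print("P(rain | mud)     ~", round(p_rain_given_mud(intervene=False), 2))
print("P(rain | do(mud)) ~", round(p_rain_given_mud(intervene=True), 2))
```

Seeing mud makes rain very likely; making mud leaves the chance of rain at its base rate. Only the second quantity tells us whether mud causes rain.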

Randomized Control Trial

Read this paper (and the commentaries too)

Lee, J. J. (2012). Common Factors and Causal Networks.

European Journal of Personality, 26(4), 441-442. doi:

10.1002/per.1873

Fundamentally,

“To find out what happens to a system when

you interfere with it you have to interfere with

it (not just passively observe it)”

(George Box, 1966)

Twins and other models give us a way to use randomly

varying genes and environments to look for causal

mechanisms

Randomized Control Trial (RCT)

In an experiment, the experimenter can subject random

people to random treatments and measure the results:

We can very seldom do this in psychology

Partly we don’t know how

For instance, to change someone’s extraversion or

language acquisition skill

Partly, though we know how, we can’t in practice

For instance allocating babies to breast-feeding or not

Adding educational exposure

This seldom happens at random
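Where random allocation is possible, it can be as simple as shuffling the participant list and splitting it. A minimal sketch; the function name, group labels and the two-arm design are assumptions for illustration.

```python
import random


def randomize(participants, seed=None):
    """Randomly allocate participants to two equal-sized arms.

    A seed makes the allocation reproducible, so it can be audited later.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}


groups = randomize(range(20), seed=3)
print(groups)
```

Because the shuffle ignores everything about the participants, measured and unmeasured confounds are balanced across arms on average.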

Testing environmental effects:

Discordant MZ twin Design

Genes can both

Cause behavior

So need to be measured when testing causal social theory

Influence the dose of the environment (G x E

covariance)

MZ differences:

Does exercise reduce depression?

Extremely powerful, and underused design

[Figure: twin path diagram linking Exercise and Depression through A, C and E components; one path is labelled .3]

Can test directly in twins

De Moor et al., Arch Gen Psychiatry (2008)

Results

Genetic correlations between .2 and .4

But…

Environmental causal pathway

from exercise to affect = 0
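The logic of the MZ-difference design can be sketched with a toy simulation. It assumes that one shared genetic factor raises exercise and lowers depression, and that exercise has no causal effect on depression. This is an illustrative model only, not the analysis reported by De Moor et al.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate_mz_pairs(n_pairs=5000, seed=4):
    """Return (population correlation, within-pair difference correlation)."""
    rng = random.Random(seed)
    exercise, depression = [], []
    diff_exercise, diff_depression = [], []
    for _ in range(n_pairs):
        g = rng.gauss(0, 1)  # genetic factor, identical in both MZ twins
        ex1, ex2 = g + rng.gauss(0, 1), g + rng.gauss(0, 1)
        dep1, dep2 = -g + rng.gauss(0, 1), -g + rng.gauss(0, 1)
        exercise += [ex1, ex2]
        depression += [dep1, dep2]
        # Differencing within a pair removes everything the twins share.
        diff_exercise.append(ex1 - ex2)
        diff_depression.append(dep1 - dep2)
    return pearson(exercise, depression), pearson(diff_exercise, diff_depression)


population_r, within_pair_r = simulate_mz_pairs()
print("exercise-depression correlation across people:", round(population_r, 2))
print("correlation of within-pair differences:", round(within_pair_r, 2))
```

If exercise really reduced depression, the twin who exercises more should be the less depressed one, and the second correlation would be negative rather than near zero.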

Part III:

False Positive Psychology

• We often run small experiments

• If the theory is right, what is the chance p will be < .05?

• If the theory is wrong, what is the chance p will be < .05?

• What is the chance of 1 experiment “working” by chance if you conduct 20 experiments?

[Plot of 1 - 0.95^n (y-axis, 0 to 1.0) against n (x-axis, 0 to 100)]
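The question about 20 experiments can be worked through directly. This sketch assumes the experiments are independent, that the theory is wrong in every one of them, and that each is tested at alpha = .05; the function name is ours.

```python
def p_at_least_one_false_positive(n, alpha=0.05):
    """Chance that at least one of n independent null experiments gives p < alpha."""
    return 1 - (1 - alpha) ** n


for n in (1, 5, 20, 60, 100):
    print(n, round(p_at_least_one_false_positive(n), 3))
```

With 20 experiments the chance of at least one "working" is about 0.64, so a single significant result out of many attempts is weak evidence.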

Bad Science

p-hacking (Simmons et al., 2011)

Non-double-blinded scientists influence their data

Bargh (1996) example

Funder-priorities & publicity bias what questions get asked and what people hear

White hat bias (look it up on Wikipedia)

Scientists cheat

Fame: Stapel & Hauser examples

Scientists’ values influence their teaching, their reviewing of papers and grants, and their hiring policy for new colleagues

Psych Science special issue on improbable numbers of liberals in Psychology

Scientists make mistakes in logic

Eliminating the race-gap on SATs by giving only items that have no bias

p-hacking: Just don’t do it!

Because researchers control their experiment, they can cause

anything they want to be significant

How many subjects

When to stop collecting

What variables to measure and which to exclude

How to analyse the results. etc.

Simmons et al (2011)
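One of the choices listed above, when to stop collecting, is easy to simulate. This sketch assumes there is no true effect, two groups with known standard deviation 1 (so a z-test applies), and a researcher who tests at 20, 30, 40 and 50 subjects per group and stops at the first p < .05. These settings are illustrative assumptions, not those used by Simmons et al.

```python
import math
import random


def two_sided_p(mean_diff, n_per_group, sd=1.0):
    """Two-sided p-value of a z-test for a difference between two group means."""
    z = mean_diff / (sd * math.sqrt(2.0 / n_per_group))
    return math.erfc(abs(z) / math.sqrt(2.0))


def false_positive_rates(n_sims=2000, looks=(20, 30, 40, 50), alpha=0.05, seed=5):
    """Return (rate with one planned test, rate when peeking at every look)."""
    rng = random.Random(seed)
    fixed_hits = 0
    peeking_hits = 0
    for _ in range(n_sims):
        a, b = [], []
        significant_at = []
        for n in looks:
            # Collect more subjects in each group until both reach size n.
            while len(a) < n:
                a.append(rng.gauss(0, 1))  # both groups come from the same
                b.append(rng.gauss(0, 1))  # population: the null is true
            p = two_sided_p(sum(a) / n - sum(b) / n, n)
            significant_at.append(p < alpha)
        fixed_hits += significant_at[0]       # honest: test once, at the first look
        peeking_hits += any(significant_at)   # p-hacked: stop whenever p < alpha
    return fixed_hits / n_sims, peeking_hits / n_sims


fixed_rate, peeking_rate = false_positive_rates()
print("false-positive rate, single planned test:", fixed_rate)
print("false-positive rate, stop when significant:", peeking_rate)
```

The single planned test stays near the nominal 5%; stopping whenever the result is significant pushes the rate well above it, and combining several such choices pushes it higher still.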

Almost all published studies are significant (Sterling, 1959)

Most studies have moderate power at best (Cohen, 1962).

Failed studies must, therefore, be going unpublished.

“Ask to see the Meta-analysis”

Stereotype Threat case study

Flore, P., & Wicherts, J. M. (2015). Does stereotype threat influence performance of girls in stereotyped domains? A meta-analysis. J Sch Psychol, 53(1), 25-44. doi: 10.1016/j.jsp.2014.10.002

What to do?

Do bigger experiments, and aggregate replications

http://psychfiledrawer.org/view_article_list.php

Don’t be afraid to try and replicate

It is very valuable

You need the practice

A major theory might well be false, and you’ll be

famous!

More multi-experiment studies replicate than expected by chance

“High quality” journals require multiple experiments

But… most experiments are under-powered.

One or more should, therefore, fail.

But they don’t {Francis, 2012}

Most Psychology results published in Science are false;

Possibly most findings are false

Ioannidis, J. P. (2005). Why most published research findings

are false. PLoS Medicine, 2(8), e124. doi:

10.1371/journal.pmed.0020124

LESSON: Don’t be afraid of a null result
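The "too many successes" argument on this slide is simple arithmetic. The sketch assumes independent experiments with equal power; the power of 0.5 and the count of six experiments are illustrative values, not estimates from any cited paper.

```python
def prob_all_significant(power, n_studies):
    """Chance that every one of n independent studies reaches significance."""
    return power ** n_studies


def expected_failures(power, n_studies):
    """Number of non-significant studies we should expect to see."""
    return n_studies * (1 - power)


power, n_studies = 0.5, 6  # illustrative assumptions
print("P(all significant):", prob_all_significant(power, n_studies))
print("expected failures:", expected_failures(power, n_studies))
```

Even when the effect is real, six under-powered experiments should rarely all succeed, so an unbroken run of successes points to unreported failures or flexible analysis.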

6 studies “show” people would be

less greedy if we cut their pay

Piff, P. K., Stancato, D. M., Cote, S., Mendoza-Denton,

R., & Keltner, D. (2012). Higher social class predicts

increased unethical behavior.

Proceedings of the National Academy of Sciences USA,

109(11), 4086-4091. doi: 10.1073/pnas.1118373109

An extremely unlikely set of underpowered positives suggests publication bias {Francis, 2012, E1587}

Values: Your Professors are

Biased

Inbar and Lammers (2012)

“in choosing between two equally qualified job candidates for one

job opening, would you be inclined to vote for the more liberal

candidate (i.e., over the conservative).”

Over 1/3 said they would discriminate against the

conservative candidate!

Far more said their colleagues would

Similar effects for reviewing papers and grants

“Usually you have to be pretty tricky to get people to say they’d

discriminate against minorities.”

Unreplicated but sexy claims

“Exposure to money makes you greedy”

The psychological consequences of money. Science

{Vohs, 2006,1154-1156}

Failure to replicate http://bit.ly/NGQf1L

Unconscious thought theory

Unconscious thought (UT) is better at solving complex

tasks, where many variables are considered, than

conscious thought

Unreplicated but sexy claims

Large-scale replication yields no evidence for Unconscious Thought Theory

A meta-analysis showed that previous reports of the unconscious thought advantage (UTA) were confined to underpowered studies that used relatively small sample sizes

Nieuwenstein et al. (2015). On making the right choice: A meta-analysis and large-scale replication attempt of the unconscious thought advantage. Judgment and Decision Making, 10(1), 1-17

It’s not a straight world out

there…

Fraud

Diederik Stapel

http://en.wikipedia.org/wiki/Diederik_Stapel

Marc Hauser

May even be normative

{John, 2012, 524-532}

Detecting Fraud: {Simonsohn, 2011, 2013}

Smeesters fraud

Fraud: 20 +

http://goo.gl/lgvGv

Fraud: Hauser

http://goo.gl/0Qkj8

Experimenter Expectation

Why do we need things to be double blinded, really?

Surely only liars need that to stop them cheating?

Experimenter Expectation

Stereotype priming: Bargh et al. (1996)

Cited hundreds of times

Participants primed non-consciously

Exposed to ageing-related words in a scrambled sentence

task

DV: Walking speed

Subjects primed by elderly stereotypes walked away from

a psychology lab more slowly

Experimenter Expectation

Doyen et al (2012) doubled the number of participants:

More power

Timed speed with infra-red beams

(Bargh had an RA use a stop-watch).

Null results

Expt 2

Experimenters knew the expected result and which condition participants had been allocated to

Slowing effect was observed.

Expt 3

Experimenters told to expect participants to walk away faster

Now data supporting this reverse-effect were recorded (if they used a stop-watch)

Double-blind: Bargh’s

response

Authors are ‘incompetent and ill-informed’

PLoS One allows researchers to 'self-publish' their studies

without appropriate peer review for money

“Experimenter expectancies could not have interfered with the

results”

post is now deleted, but linked here

http://hardsci.wordpress.com/2012/03/12/some-reflections-on-the-bargh-doyen-elderly-walking-priming-brouhaha/

Double-blind: Bargh’s

response

'gross' methodological changes invalidate the Doyen

replication

Participants told to ‘go straight down the hall when leaving’

While he let participants 'leave in the most natural way’.

In fact

Doyen: ‘participants were clearly directed to the end of the

corridor’

Bargh told participants that ‘the elevator was down the hall’.

Double-blind: Bargh’s

response

Replicated 'dozens if not hundreds' of times

In fact no actual replications

Hull et al. 2002 “showed the effect mainly for individuals

high in self consciousness”

Cesario et al. 2006 showed the effect “mainly” for

individuals who like (versus dislike) the elderly.

Solidly embedded in theories across multiple

disciplines

But that’s bad if this is wrong?

http://goo.gl/P9IOM

Experimenter Expectation:

Harold Pashler’s response

Attempted another replication

Also failed

http://psychfiledrawer.org/chart.php?target_article=1&

type=failure

Is this a one off?

Williams and Bargh (2008)

Participants plot a single pair of points on a piece of graph

paper

Given coordinates which are “close”, “intermediate”, or “far”

apart in the space on graph paper

DV: How close are you to members of your family?

Participants who graphed a distant pair reported themselves

as being significantly less close to members of their own

family

Expt 2: Same spatial distance prime

DV = Caloric content of foods

Significant prime

Pashler et al. (2012)

Direct replications of both results attempted

Ensured the experimenter did not know what

condition the participant was assigned to.

“No hint of the priming effects reported by Williams and

Bargh (2008).”

[Pashler, 2012, e42510]

Figure 1

Summary

Focus on mechanisms and causation

Correlation is an unidentified causal nexus: Identify it

Work with the most famous person you can

Skill pays off:

Invest in learning concepts and facts: Use these in daily conversation

Practice collecting, analyzing, and writing

You can’t have a theory without creativity and knowledge

Come to the journal clubs, talk with staff and PhD students: Get

involved early

You can improve by iterating:

1. Try 2. Learn 3. Adapt ideas 4. Try again

Summary Tips

Systematic Time on task pays off: Get started early; Work hard

You can’t make a breakthrough in an arm chair

Get data: That’s what Aristotle did (see Aristotle’s Lagoon), and

it is still necessary

Ideas come from analysed data: The more times you go through the cycle,

the better your product will be.

No experiment is a failure: The person with “failed” data can find why;

The person with no data can’t know anything

“Fortune favors the brave” and “God is on the side of the big battalions”

and “the meek shall inherit the earth”

If you believe in iteration, you won’t be afraid to fail

Aim for a good publication out of your Y4!

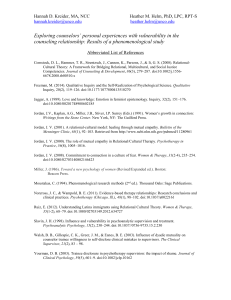

References

Bargh, J. A., Chen, M., & Burrows, L. (1996). Automaticity of social behavior: direct effects of trait construct and stereotype-activation on action. J Pers Soc Psychol, 71(2), 230-244.

Bates, T. C., & Lewis, G. J. (2012). Towards a genetically informed approach in the social sciences: Strengths and an opportunity. Personality and Individual Differences, 53(4), 374-380. doi: 10.1016/j.paid.2012.03.002

Martin, N. W., Benyamin, B., Hansell, N. K., Montgomery, G. W., Martin, N. G., Wright, M. J., & Bates, T. C. (2011). Cognitive function in adolescence: testing for interactions between breast-feeding and FADS2 polymorphisms. Journal of the American Academy of Child and Adolescent Psychiatry, 50(1), 55-62 e54. doi: 10.1016/j.jaac.2010.10.010

Bones, A. K. (2012). We Knew the Future All Along: Scientific Hypothesizing is Much More Accurate Than Other Forms of Precognition--A Satire in One Part. Perspectives on Psychological Science, 7(3), 307-309. doi: 10.1177/1745691612441216

Caspi, A., Williams, B., Kim-Cohen, J., Craig, I. W., Milne, B. J., Poulton, R., . . . Moffitt, T. E. (2007). Moderation of breastfeeding effects on the IQ by genetic variation in fatty acid metabolism. Proc Natl Acad Sci U S A, 104(47), 18860-18865.

Pashler, H., Coburn, N., & Harris, C. R. (2012). Priming of social distance? Failure to replicate effects on social and food judgments. PLoS One, 7(8), e42510. doi: 10.1371/journal.pone.0042510

Piff, P. K., Stancato, D. M., Côté, S., Mendoza-Denton, R., & Keltner, D. (2012). Higher social class predicts increased unethical behavior. Proceedings of the National Academy of Sciences USA, 109(11), 4086-4091. doi: 10.1073/pnas.1118373109

Cesario, J., Plaks, J. E., & Higgins, E. T. (2006). Automatic social behavior as motivated preparation to interact. J Pers Soc Psychol, 90(6), 893-910. doi: 10.1037/0022-3514.90.6.893

Roediger, H. L., 3rd, & Butler, A. C. (2011). The critical role of retrieval practice in long-term retention. Trends Cogn Sci, 15(1), 20-27. doi: 10.1016/j.tics.2010.09.003

Doyen, S., Klein, O., Pichon, C. L., & Cleeremans, A. (2012). Behavioral priming: it's all in the mind, but whose mind? PLoS One, 7(1), e29081. doi: 10.1371/journal.pone.0029081

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22(11), 1359-1366. doi: 10.1177/0956797611417632

Francis, G. (2012a). Evidence that publication bias contaminated studies relating social class and unethical behavior. Proceedings of the National Academy of Sciences USA, 109(25), E1587; author reply E1588. doi: 10.1073/pnas.1203591109

Francis, G. (2012b). The Same Old New Look: Publication Bias in a Study of Wishful Seeing. i-Perception, 3(3), 176-178.

Simonsohn, U. (2012). It Does Not Follow: Evaluating the One-Off Publication Bias Critiques by Francis (2012a,b,c,d,e,f), from http://opim.wharton.upenn.edu/%7Euws/papers/it_does_not_follow.pdf

Steer, C. D., Davey Smith, G., Emmett, P. M., Hibbeln, J. R., & Golding, J. (2010). FADS2 polymorphisms modify the effect of breastfeeding on child IQ. PLoS One, 5(7), e11570. doi: 10.1371/journal.pone.0011570

Hull, J. G., Slone, L. B., Meteyer, K. B., & Matthews, A. R. (2002). The nonconsciousness of self-consciousness. J Pers Soc Psychol, 83(2), 406-424.

Vohs, K. D., Mead, N. L., & Goode, M. R. (2006). The psychological consequences of money. Science, 314(5802), 1154-1156. doi: 10.1126/science.1132491

John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth telling. Psychol Sci, 23(5), 524-532. doi: 10.1177/0956797611430953

Lewis, G. J., & Bates, T. C. (2010). Genetic Evidence for Multiple Biological Mechanisms Underlying Ingroup Favoritism. Psychological Science, 21(11), 1623-1628. doi: 10.1177/0956797610387439

Yong, E. (2012). Replication studies: Bad copy. In the wake of high-profile controversies, psychologists are facing up to problems with replication. Nature, from http://www.nature.com/polopoly_fs/1.10634!/menu/main/topColumns/topLeftColumn/pdf/485298a.pdf

Useful references

Breakwell, Hammond and Fife-Schaw (Eds.) Research Methods in Psychology. 2nd Ed., Sage Publications.

Cone and Foster (2006), Dissertations and Theses from Start to Finish. 2nd Ed., Washington, DC: APA.

Kerlinger and Lee (2000) Foundations of Behavioral Research. 4th Ed. Harcourt Inc. Fort Worth.

Tabachnick and Fidell (2007). Using Multivariate Statistics, 5th ed., Boston: Allyn and Bacon.

http://www.socialresearchmethods.net/kb/constval.php

Practical advice from successful Scientists

James Watson “Avoid Boring People”

Kary Mullis: “Dancing Naked in the Mind Field”

H.J. Eysenck “Rebel with a Cause”