

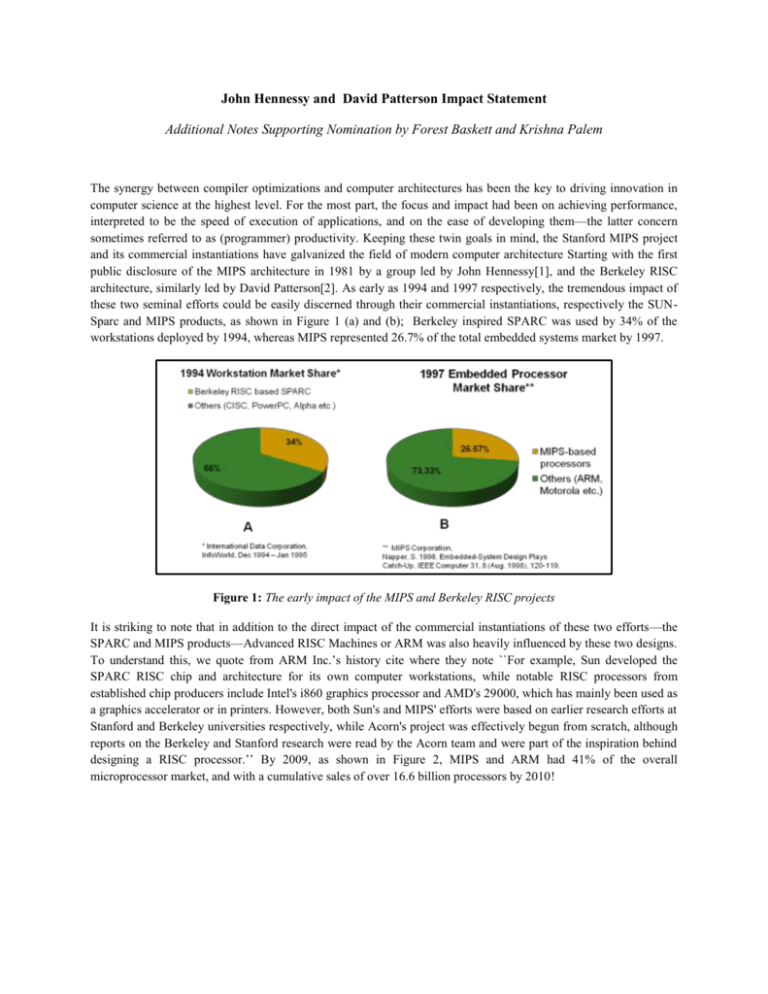

John Hennessy and David Patterson Impact Statement

Additional Notes Supporting Nomination by Forest Baskett and Krishna Palem

The synergy between compiler optimizations and computer architectures has been key to driving innovation in computer science at the highest level. For the most part, the focus and impact have been on achieving performance, interpreted as the speed of execution of applications, and on the ease of developing them, the latter concern sometimes referred to as (programmer) productivity. With these twin goals in mind, the Stanford MIPS and Berkeley RISC projects and their commercial instantiations have galvanized the field of modern computer architecture, starting with the first public disclosure of the MIPS architecture in 1981 by a group led by John Hennessy [1] and of the Berkeley RISC architecture, similarly led by David Patterson [2]. As early as 1994 and 1997, respectively, the tremendous impact of these two seminal efforts could easily be discerned through their commercial instantiations, the Sun SPARC and MIPS products, as shown in Figure 1 (a) and (b): the Berkeley-inspired SPARC was used in 34% of the workstations deployed by 1994, whereas MIPS represented 26.7% of the total embedded systems market by 1997.

Figure 1: The early impact of the MIPS and Berkeley RISC projects

It is striking that, in addition to the direct impact of the commercial instantiations of these two efforts (the SPARC and MIPS products), Advanced RISC Machines, or ARM, was also heavily influenced by these two designs. To understand this, we quote from ARM's history site, which notes: “For example, Sun developed the SPARC RISC chip and architecture for its own computer workstations, while notable RISC processors from established chip producers include Intel's i860 graphics processor and AMD's 29000, which has mainly been used as a graphics accelerator or in printers. However, both Sun's and MIPS' efforts were based on earlier research efforts at Stanford and Berkeley universities respectively, while Acorn's project was effectively begun from scratch, although reports on the Berkeley and Stanford research were read by the Acorn team and were part of the inspiration behind designing a RISC processor.” By 2009, as shown in Figure 2, MIPS and ARM held 41% of the overall microprocessor market, and their cumulative sales exceeded 16.6 billion processors by 2010.

Figure 2: The impact of the MIPS and ARM processors

1. In the Beginning

By the late 1970s, ambitious processor architectures were being developed at significant cost, yet it was proving rather hard to truly exploit their complexity while retaining a reasonable level of productivity. This motivated John Cocke and a team of researchers at IBM to take a deeper look at the value of implementing processor architectures of ever-increasing complexity. Many credit the resulting 801 project [3] with having established the importance of a simple instruction set and the utility of a compiler able to exploit it. The projects at Berkeley and Stanford (Figure 3) succeeded in indelibly establishing the influence of this principle on modern computer architecture as we know it. We have already seen that the commercial derivatives of these projects had a significant impact, in sheer numbers, on modern microprocessors. However, a subtler event, which turned out to be epochal in promulgating this philosophy, was the textbook authored by the protagonists of these projects, Hennessy and Patterson [8]. This book added significant momentum to a technical avalanche that was already under way in industry (various RISC processors were designed at that time, including the DEC Alpha and the Sun SPARC architectures) by creating a pedagogically well-founded, cohesive, and quantitative framework through which architectures could be viewed, rigorously evaluated, and ultimately innovated.
A central concept that enabled this was the elevation of the instruction set architecture (ISA) to an abstraction worthy of independent specification, analysis, and evaluation in its own right, decoupled from the particular manner in which it might (or might not) be implemented; the latter became an issue localized to the more detailed world of microarchitecture. In our view, the book succeeded in giving computer architecture a lucid and elegant framework that was methodologically reductionist, a quality one does not usually associate with, nor can always achieve in, an area where detailed engineering considerations are intimately intertwined with conceptual ones.

Figure 3. The major events and the impact of Hennessy and Patterson in the microprocessor arena (sources: MIPS Corporation; Netrino, The Embedded Systems Expert; Arm Holdings plc)

Thus, while the IBM 801 project started shifting the “balance of power” from hardware-based microarchitecture to software-based, compiler-centric techniques, this shift was motivated by the traditional concern of achieving high performance.
The efforts led by Hennessy and Patterson provided several crucial pivots beyond the goal of the IBM 801 project, which was to demonstrate the importance of a compiler-centric approach to achieving high performance; their efforts were also motivated by concerns that proved significant to the eventual impact of processors with simple instruction sets. First, they wanted to overcome the burdens and overheads of extra layers of microprogramming, which made the interpretation of instructions by hardware engines both cumbersome and inefficient. The result was a focus on simplicity and efficiency. This in turn allowed the then-emerging VLSI technology to be used and exploited in a variety of ways. The incorporation of instruction-level parallelism and an emphasis on using the increasingly available silicon for on-chip caches are two examples of this trend; in contrast, the IBM 801 was a wire-wrapped board composed of Motorola 10K parts built from emitter-coupled logic. Of course, the efficiency factor has turned out to be very important in the embedded market, as evidenced by the dominance of the MIPS and ARM architectures.

2. Beyond Microprocessors

Notably, in the 1980s, industry leaders Joseph (Josh) Fisher [4] and B. Ramakrishna (Bob) Rau [5] applied the principle that RISC enabled (a compiler managing hardware resources through architectural features, together with the concept of a simple ISA) very successfully in the context of VLIW systems, which are at the heart of most, if not all, modern DSP processing. To appreciate the significance of DSP processing, note (Figure 4) that the total revenue of the DSP market was close to Intel's entire microprocessor revenue.

Figure 4. Comparing the revenue associated with DSP processors with that of Intel from microprocessors.

On a personal note, this lesson also crucially influenced the work of one of the co-nominators.
To elaborate, by 1999 it was apparent that for customized embedded platforms to proliferate, it was essential to bring malleable or reconfigurable hardware into the mix, thereby adding a new dimension along which non-recurring engineering (NRE) cost and time-to-market had to be combated. To tackle this challenge, Palem adopted the conceptual view and philosophy underlying the original RISC lessons in a commercial effort pursued through Proceler Inc. [6]: using an optimizing compiler to “assemble” customized processors on reconfigurable hardware substrates quickly and at low cost. This resulted in the widely used technology of Dynamically Variable Instruction Set Architectures (DVAITA), the basis for product offerings from major manufacturers of reconfigurable systems and their tools, including Xilinx, Altera, and Synplicity. Max Baron, editor-in-chief of the industry bellwether Microprocessor Report, highlighted it as a nominee for one of the four outstanding technologies of 2002 [7]. In this connection, Max Baron noted that “It's no longer possible to assume you can design a processor without having a good idea of the system architecture in which it will be used and the workload it will execute....some of TI and ADI's DSP chips proves that processors are designed with specific applications in mind....Proceler takes a different route: it can call 'soft' hardware into existence to accelerate parts of a workload that require high performance. Altera, Xilinx, Triscend, and others provide microprocessor cores and raw hardware that can be programmed at boot time, or, if time permits, at run time.”

References

[1] J. L. Hennessy, N. Jouppi, F. Baskett, and J. Gill. MIPS: A VLSI processor architecture. Technical Report CSL-TR-81-223, Stanford, CA, USA, 1981.

[2] D. A. Patterson and C. H. Sequin. RISC I: A reduced instruction set VLSI computer. In Proceedings of the International Symposium on Computer Architecture, pages 443-458, 1981.

[3] G. Radin. The 801 minicomputer. IBM Journal of Research and Development, 27(3):237-246, 1983.

[4] J. A. Fisher. Trace scheduling: A technique for global microcode compaction. IEEE Transactions on Computers, 30(7):478-490, 1981.

[5] B. R. Rau, C. D. Glaeser, and E. M. Greenawalt. Architectural support for the efficient generation of code for horizontal architectures. In Proceedings of the Symposium on Architectural Support for Programming Languages and Operating Systems, pages 96-99, 1982.

[6] K. Palem, H. Patel, and S. Yalamanchili. An instruction set architecture to aid code generation for hardware platforms having multiple heterogeneous functional units. US Patent, Publication No. WO/2002/041104, 2000.

[7] M. Baron. Technology 2001: On a clear day you can see forever. Microprocessor Report, February 2002.

[8] J. L. Hennessy and D. A. Patterson. Computer Architecture: A Quantitative Approach. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, 1990.