Closing the Loop - St. Norbert College

advertisement

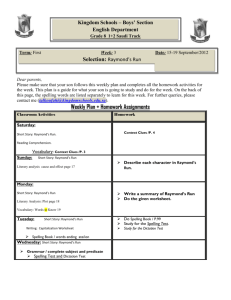

Closing the Loop: What to Do When Your Assessment Data Are In
September 21, 2005
Raymond M. Zurawski, Ph.D.

Cycle of Assessment
Step 1: Identify Program Goals
Step 2: Specify Intended Learning Outcomes (Objectives)
Step 3: Select Assessment Methods
Step 4: Implement: Data Collection
Step 5: Analyze and Interpret the Data (Make Sense of It All)
Step 6: Report Findings and Conclusions
Step 7: Close the Loop (Use the Results)
Step 8: Revise the Assessment Plan and Continue the Loop

Assessment Methods Used at SNC
- Examination of student work
  - Major field or licensure tests
  - Capstone projects
  - Essays, papers, oral presentations
  - Scholarly presentations or publications
  - Portfolios
  - Locally developed examinations
- Measures of professional activity
  - Performance at internship and placement sites
  - Supervisor evaluations
- Miscellaneous indirect measures
  - Satisfaction/evaluation questionnaires
  - Placement analysis (graduate or professional school, employment)

Other Methods Used
- Faculty review of the curriculum
- Curriculum audit
- Analysis of existing program requirements
- External review of curriculum
- Analysis of course/program enrollment and dropout rates

What to Know About Methods
- Different assessment methods may yield different estimates of program success.
- Measures of students’ self-reported abilities and satisfaction may yield different estimates of program success than measures of student knowledge or performance.
- What are your experiences here at SNC?
- Good assessment practice involves the use of multiple methods; multiple methods provide greater opportunities to use findings to improve learning.

What to Know About Methods (continued)
Even if the question is simply… are students performing…
…way better than good enough?
…good enough?
…NOT good enough?
The answer may depend on the assessment method used to answer that question.

Implementation: Common Problems
- Methodological problems
- Human or administrative error
- Response/participation rate problems
- Instrument in development; method misaligned with program goals
- Insufficient numbers (few majors; reliance on volunteers or convenience samples; poor response rate); insufficient incentives or motivation
- High “costs” of administration
- “Other” (no assessment, no rationale)
NOTE: Document the problems; doing so provides one set of directions for ‘closing the loop’.

Document Your Work!
“If you didn’t document it, it never happened…” (the clinician’s mantra)

Analyzing and Interpreting Data: General Issues
- Think about how information will be examined, and what comparisons will be made, even before the data are collected.
- Provide descriptive information:
  - Percentages (‘strongly improved’, ‘very satisfied’)
  - Means and medians on examinations
  - Summaries of scores on products and performances
- Provide comparative information:
  - External norms, local norms, comparisons to previous findings
  - Comparisons to Division and College norms
  - Subgroup data (students in various concentrations within the program; year in program)

Interpretations
- Identify patterns of strength.
- Identify patterns of weakness.
- Seek agreement about innovations and changes in educational practice, curricular sequencing, advising, etc. that program staff believe will improve learning.

Ways to Develop Targeted Interpretations
- What questions are most important to you? What’s the story you want to tell? Answering these questions helps you decide how you want results analyzed.
- Seek results reported against your criteria and standards of judgment so you can discern patterns of achievement.

Interpreting Results in Relation to Standards
Some programs establish target criteria. Examples:
- If the program is effective, then 70% of portfolios evaluated will be judged “Good” or “Very good” in design.
- The average alumni rating of the program’s overall effectiveness will be at least 4.5 on a 5.0-point scale.

Standards and Results: Four Basic Relationships
Four broad relationships are possible:
- A standard was established that students met.
- A standard was established that students did not meet.
- No standard was established.
- The planned assessment was not conducted or not possible.
Some drawbacks to establishing target criteria:
- It is difficult to pick the target number.
- Results exceeding the standard do not justify inaction.
- Results not meeting the standard do not represent failure.

Reporting Results

Reporting Assessment Findings
Resources:
- An Assessment Workbook
- Another Assessment Handbook
- Ball State University
- Skidmore College
An important general consideration: Who is your audience?

Sample Report Formats
- Skidmore College
- Old Dominion University
- Ohio University
- George Mason University
- Montana State University (History)
- Other programs
- Institutional Effectiveness Associates, Inc.

Local Examples of Assessment Reports
- Academic Affairs Divisions
  - Division of Humanities and Fine Arts
  - Division of Natural Sciences
  - Division of Social Sciences
- Student Life
- Mission and Heritage

OK, but just HOW do I report…
Q: How do I report survey findings, Major Field Test data, performance on scoring rubrics, etc.?
A: Don’t reinvent the wheel. Consult local Assessment and Program Review reports for examples.

Closing the Loop: Using Assessment Results

Closing the Loop: The Key Step
- To be meaningful, assessment results must be studied, interpreted, and used.
- Using the results is called “closing the loop.”
- We conduct outcomes assessment because the findings can be used to improve our programs.

Closing the Loop: Where Assessment and Evaluation Come Together…
- Assessment: gathering, analyzing, and interpreting information about student learning
- Evaluation: using assessment findings to improve institutions, divisions, and departments
(Upcraft and Schuh)

Why Close the Loop?
- To inform program review
- To inform planning and budgeting
- To improve teaching and learning
- To promote continuous improvement (rather than ‘inspection at the end’)

Steps in Closing the Assessment Loop
- Briefly report the methodology for each outcome.
- Document where students are meeting the intended outcome.
- Document where they are not meeting the outcome.
- Document decisions made to improve the program and the assessment plan.
- Refine the assessment method and repeat the process after allowing proper time for implementation.

Ways to Close the Loop
- Curricular design and sequencing
- Restriction on navigation of the curriculum
- Weaving more of “x” across the curriculum
- Increasing opportunities to learn “x”

Additional Ways to Close the Loop
- Strengthening advising
- Co-designing curriculum and co-curriculum
- Developing a new model of teaching and learning based on research or others’ practice
- Developing learning modules or self-paced learning to address typical learning obstacles

And don’t forget…
A commonly reported use of results is to refine the assessment process itself:
- New or refined instruments
- Improved methods of data collection (instructions, incentives, timing, setting, etc.)
- Changes in the participant sample
Re-assess to determine how effective these changes are in enhancing student learning.

A Cautionary Tale
Beware the Lake Wobegon Effect …where all the children are above average…

A Cautionary Tale (continued)
When concluding that “no changes are necessary at this time”…
- Standards may have been met, but there may nonetheless be many students failing to meet expectations. How might they be helped to perform better?
- There may nonetheless be ways to improve the program.

Facilitating Use of Findings
Laying appropriate groundwork:
- Assessment infrastructure
- Conducive policies
- Linking assessment to other internal processes (e.g., planning, budgeting, program review)
- Establishing an annual assessment calendar

Factors that Discourage Use of Findings
- Failure to inform relevant individuals about the purposes and scope of assessment projects
- Raising concerns and obstacles over unimportant issues
- Competing agendas and lack of sufficient resources

What You Can Do (Fulks, 2004)
- Schedule time to record data directly after completing the assessment.
- Prepare a simple table or chart to record results.
- Think about the meaning of these data and write down your conclusions.
- Take the opportunity to share your findings with other faculty in your area as well as with those in other areas.
- Share the findings with students, if appropriate.
- Report on the data and what you have learned at discipline and institutional meetings.
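The target criteria from “Interpreting Results in Relation to Standards,” the four standard/result relationships, and the cautionary tale can be tied together in a few lines of code. What follows is a minimal Python sketch, not an SNC procedure, and it rests on several assumptions. The four-level portfolio rubric is hypothetical; only “Good” and “Very good” come from the slides, and “Poor” and “Fair” are invented. Alumni ratings are assumed to be on a 5-point scale. The names `summarize`, `relationship` and `print_table`, and the sample data, are illustrative only. The sketch prints a simple results table and counts the students who fall short even when a target is met.

```python
from collections import Counter
from statistics import mean, median

# Hypothetical four-level portfolio rubric, ordered lowest to highest.
# Only "Good" and "Very good" come from the slides; the other labels are assumed.
RUBRIC = ["Poor", "Fair", "Good", "Very good"]


def relationship(observed, target):
    """Classify a result into one of the four standard/result relationships."""
    if observed is None:
        return "assessment not conducted or not possible"
    if target is None:
        return "no standard established"
    return "standard met" if observed >= target else "standard not met"


def summarize(portfolio_ratings, alumni_scores,
              portfolio_target=0.70, alumni_target=4.5):
    """Descriptive summary of rubric ratings and alumni survey scores."""
    if not portfolio_ratings or not alumni_scores:
        raise ValueError("need at least one portfolio rating and one alumni score")
    cutoff = RUBRIC.index("Good")
    # RUBRIC.index raises ValueError for any label not on the rubric.
    at_or_above = sum(1 for r in portfolio_ratings if RUBRIC.index(r) >= cutoff)
    share_good = at_or_above / len(portfolio_ratings)
    counts = Counter(portfolio_ratings)
    alumni_mean = mean(alumni_scores)
    return {
        "counts": {level: counts[level] for level in RUBRIC},
        "share_good_or_better": share_good,
        # Students who fall short even when the overall target is met.
        "below_good": len(portfolio_ratings) - at_or_above,
        "portfolio_result": relationship(share_good, portfolio_target),
        "alumni_mean": alumni_mean,
        "alumni_median": median(alumni_scores),
        "alumni_result": relationship(alumni_mean, alumni_target),
    }


def print_table(summary):
    """Print a simple results table of rubric counts and target checks."""
    print(f"{'Rating':<10} {'Count':>5}")
    for level, n in summary["counts"].items():
        print(f"{level:<10} {n:>5}")
    print(f"Good or better: {summary['share_good_or_better']:.0%} "
          f"({summary['portfolio_result']})")
    print(f"Rated below Good: {summary['below_good']}")
    print(f"Alumni rating: mean {summary['alumni_mean']:.2f}, "
          f"median {summary['alumni_median']:.1f} ({summary['alumni_result']})")


if __name__ == "__main__":
    # Made-up illustrative data, not actual SNC results.
    portfolios = ["Very good", "Good", "Good", "Fair", "Very good",
                  "Good", "Poor", "Good", "Very good", "Fair"]
    alumni = [5, 4, 5, 5, 4, 5]
    print_table(summarize(portfolios, alumni))
```

With the made-up data above, 70% of portfolios are rated Good or better, so the portfolio standard is met. Yet 3 of 10 students are still rated below Good, which is the situation the cautionary tale warns about. Passing `None` as a target or an observed value models the last two of the four relationships.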
Group Practices that Enhance Use of Findings
- Disciplinary groups’ interpretation of results
- Cross-disciplinary groups’ interpretation of results (library and information resource professionals, student affairs professionals)
- Integration of students, TAs, internship advisors, or others who contribute to students’ learning

External Examples of Closing the Loop
- University of Washington
- Virginia Polytechnic University
- St. Cloud State University
- Montana State University (Chemistry)

Closing the Loop: Good News!
Many programs at SNC have used their results to make program improvements or to refine their assessment procedures.

Local Examples of Closing the Loop
- See the HLC Focused Visit Progress Report Narrative on the OIE Website.
- See the Program Assessment Reports and Program Review Reports on the OIE Website.

Ask your colleagues in … about their efforts to close the loop
- Music
- Religious Studies
- Chemistry
- Geology
- Business Administration
- Economics
- Teacher Education
- Student Life
- Mission and Heritage
- Etc.

One Example of Closing the Loop: Psychology
- Added a capstone in light of the curriculum audit
- Piloting changes to course pedagogy to improve performance on the General Education assessment
- Established PsycNews in response to student concerns about career/graduate study preparation
- Replaced the pre-test Major Field Test administration with a lower-cost, reliable and valid externally developed test

Conclusions
- Programs are relatively free to choose which aspects of student learning they wish to assess.
- Assessing and reporting matter, but… taking action on the basis of good information about real questions is the best reason for doing assessment.

The main thing… …is to keep the main thing… …the main thing! (Douglas Eder, SIU-E)

- It may be premature to discourage the use of any method.
- It may be premature to establish specific target criteria.
- It may be premature to require strict adherence to a particular reporting format.
- Remember that the sample reports discussed here are examples, not necessarily models.

Oh, and by the way… Document Your Work!

Additional Resources
- Internet Resources for Higher Education Outcomes Assessment (at NC State)

Concluding Q & A: A One-Minute Paper
What remains most unclear or confusing to you about closing the loop at this point?