The Future of Software Engineering

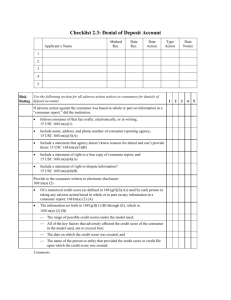


USC-CSE: University of Southern California Center for Software Engineering

The Future of Software Engineering
Barry Boehm, USC
Boeing Presentation, May 10, 2002
5/10/2002 ©USC-CSE

Acknowledgments: USC-CSE Affiliates
• Commercial Industry (15)
  – Daimler Chrysler, Freshwater Partners, Galorath, Group Systems.Com, Hughes, IBM, Cost Xpert Group, Microsoft, Motorola, Price Systems, Rational, Reuters Consulting, Sun, Telcordia, Xerox
• Aerospace Industry (6)
  – Boeing, Lockheed Martin, Northrop Grumman, Raytheon, SAIC, TRW
• Government (8)
  – DARPA, DISA, FAA, NASA-Ames, NSF, OSD/ARA/SIS, US Army Research Labs, US Army TACOM
• FFRDCs and Consortia (4)
  – Aerospace, JPL, SEI, SPC
• International (1)
  – Chung-Ang U. (Korea)

Software Engineering Trends

| Traditional | Future |
|---|---|
| Standalone systems | Everything connected (maybe) |
| Mostly source code | Mostly COTS components |
| Requirements-driven | Requirements are emergent |
| Control over evolution | No control over COTS evolution |
| Focus on software | Focus on systems and software |
| Stable requirements | Rapid change |
| Premium on cost | Premium on value, speed, quality |
| Staffing workable | Scarcity of critical talent |

Large-System Example: Future Combat Systems
[Figure: "From This..." / "To This...": a network-centric force of distributed platforms, including small-unit UAVs, other layered sensors, robotic sensors, robotic direct fire, robotic NLOS fire, distributed fire mechanisms, and manned C2/infantry squads. Caption: "Exploit battlefield non-linearities using technology to reduce the size of platforms and the force."]

Total Collaborative Effort to Support FCS
[Figure: FY00-FY06 roadmap of efforts supporting FCS, including a robotic unmanned ground vehicle, networked fires, the A160, and an all-weather BLOS surveillance and targeting sensor. The efforts move from design competitions through breadboard, brassboard, redesign/test, preliminary and detailed design, build, and T&E, with SOG reviews and government-run experiments along the way.]

FCS Product/Process Interoperability Challenge
[Figure]

Small-Systems Example: eCommerce
• 16-24 week fixed schedule
[Figure: iterations with a scope, listening, and delivery focus pass through Define, Design, Develop, and Deploy phases. "Lines of readiness" between phases ask "Are we ready for the next step?" Source: LA SPIN; Copyright © 2001 C-bridge.]

Future Software/Systems Challenges
• Distribution, mobility, autonomy
• Systems of systems, networks of networks, agents of agents
• Group-oriented user interfaces
• Nonstop adaptation and upgrades
• Synchronizing many independent evolving systems
• Hybrid, dynamic, evolving architectures
• Complex adaptive systems behavior

Complex Adaptive Systems Characteristics
• Order is not imposed; it emerges (Kauffman)
• Viability determined by adaptation rules
  – Too inflexible: gridlock
  – Too flexible: chaos
• Equilibrium determined by “fitness landscape”
  – A function assigning values to system states
• Adaptation yields better outcomes than optimization
  – Software approaches: Agile Methods

Getting the Best from Agile Methods
• Representative agile methods
• The Agile Manifesto
• The planning spectrum
  – Relations to Software CMM and CMMI
• Agile and plan-driven home grounds
• How much planning is enough?
  – Risk-driven hybrid approaches

Leading Agile Methods
• Adaptive Software Development (ASD)
• Agile Modeling
• Crystal methods
• Dynamic System Development Methodology (DSDM)
• eXtreme Programming (XP) *
• Feature Driven Development
• Lean Development
• Scrum

XP: The 12 Practices
• The Planning Game
• Small Releases
• Metaphor
• Simple Design
• Testing
• Refactoring
• Pair Programming
• Collective Ownership
• Continuous Integration
• 40-hour Week
• On-site Customer
• Coding Standards
Used generatively, not imperatively

The Agile Manifesto
We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:
• Individuals and interactions over processes and tools
• Working software over comprehensive documentation
• Customer collaboration over contract negotiation
• Responding to change over following a plan
That is, while there is value in the items on the right, we value the items on the left more.

Counterpoint: A Skeptical View
Letter to Computer, Steven Rakitin, Dec. 2000
“customer collaboration over contract negotiation”
Translation: Let's not spend time haggling over the details, it only interferes with our ability to spend all our time coding. We’ll work out the kinks once we deliver something...
“responding to change over following a plan”
Translation: Following a plan implies we would have to spend time thinking about the problem and how we might actually solve it. Why would we want to do that when we could be coding?

The Planning Spectrum
[Figure: a spectrum running from hackers, XP, and adaptive software development, through milestone risk-driven and milestone plan-driven models, to inch-pebble micro-milestones and ironbound contracts. The ranges covered by agile methods, the Software CMM, and CMMI are marked along it.]

Agile and Plan-Driven Home Grounds

| Agile Home Ground | Plan-Driven Home Ground |
|---|---|
| Agile, knowledgeable, collocated, collaborative developers | Plan-oriented developers; mix of skills |
| Above plus representative, empowered customers | Mix of customer capability levels |
| Reliance on tacit interpersonal knowledge | Reliance on explicit documented knowledge |
| Largely emergent requirements, rapid change | Requirements knowable early; largely stable |
| Architected for current requirements | Architected for current and foreseeable requirements |
| Refactoring inexpensive | Refactoring expensive |
| Smaller teams, products | Larger teams, products |
| Premium on rapid value | Premium on high assurance |

How Much Planning Is Enough?
A risk analysis approach
• Risk Exposure: $RE = \text{Prob(Loss)} \cdot \text{Size(Loss)}$
  – “Loss”: financial; reputation; future prospects, …
• For multiple sources of loss: $RE = \sum_{\text{sources}} \left[\text{Prob(Loss)} \cdot \text{Size(Loss)}\right]_{\text{source}}$

Example RE Profile: Planning Detail
Loss due to inadequate plans
[Figure: RE = P(L) · S(L) plotted against time and effort invested in plans. Little planning: high P(L) (inadequate plans) and high S(L) (major problems: oversights, delays, rework). Thorough planning: low P(L) and low S(L) (minor problems).]

Example RE Profile: Planning Detail
Loss due to inadequate plans; loss due to market share erosion
[Figure: a second RE curve is added. Little planning: low P(L) (few plan delays) and low S(L) (early value capture). Heavy planning: high P(L) (plan breakage, delay) and high S(L) (value capture delays).]

Example RE Profile: Planning Detail
Sum of Risk Exposures
[Figure: the sum of the two RE curves reaches its minimum, the “sweet spot”, at an intermediate level of time and effort invested in plans.]

Comparative RE Profile: Plan-Driven Home Ground
[Figure: higher S(L) from large-system rework moves the plan-driven sweet spot toward more planning than the mainstream sweet spot.]

Comparative RE Profile: Agile Home Ground
[Figure: lower S(L) from easy rework moves the agile sweet spot toward less planning than the mainstream sweet spot. The x-axis is time and effort invested in plans.]
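The risk-exposure reasoning above can be made concrete with a small numeric sketch. It sums RE = P(L) · S(L) over two loss sources and grid-searches for the minimum, which is the "sweet spot". The curve shapes are made up and are not from the slides: exponential decay for inadequate-plan losses and quadratic growth for market-erosion losses, with the planning level `t` normalized to [0, 1]. The hypothetical `rework_size` parameter scales S(L) for inadequate plans. This lets it mimic the agile (easy rework) and plan-driven (large-system rework) home grounds.

```python
import math

# All curve shapes and constants are hypothetical; only the qualitative
# trends (which way P(L) and S(L) move with planning) follow the slides.


def re_inadequate_plans(t: float, rework_size: float = 1.0) -> float:
    """RE = P(L) * S(L) for losses from inadequate plans.

    Both P(L) and S(L) fall as planning effort t (0..1) increases.
    rework_size scales S(L): small for easy rework, large for large-system rework.
    """
    p_loss = math.exp(-3.0 * t)
    s_loss = rework_size * math.exp(-1.0 * t)
    return p_loss * s_loss


def re_market_erosion(t: float) -> float:
    """RE = P(L) * S(L) for losses from market-share erosion.

    Both P(L) (plan breakage, delay) and S(L) (value-capture delay) rise with t.
    """
    p_loss = t
    s_loss = 0.5 * t
    return p_loss * s_loss


def total_re(t: float, rework_size: float = 1.0) -> float:
    """Sum of risk exposures over both loss sources."""
    return re_inadequate_plans(t, rework_size) + re_market_erosion(t)


def sweet_spot(rework_size: float = 1.0, steps: int = 1000) -> float:
    """Grid-search the planning level t in [0, 1] that minimizes total RE."""
    grid = [i / steps for i in range(steps + 1)]
    return min(grid, key=lambda t: total_re(t, rework_size))


if __name__ == "__main__":
    for label, size in [("agile home ground (easy rework)", 0.3),
                        ("mainstream", 1.0),
                        ("plan-driven home ground (large-system rework)", 3.0)]:
        print(f"{label}: sweet spot at t = {sweet_spot(size):.2f}")
```

With these illustrative curves, the minimum moves to larger `t` as `rework_size` grows, which mirrors the two comparative profiles. The specific numbers carry no empirical meaning.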

Conclusions: CMMI and Agile Methods
• Agile and plan-driven methods have best-fit home grounds
  – Increasing pace of change requires more agility
• Risk considerations help balance agility and planning
  – Risk-driven “How much planning is enough?”
• Risk-driven agile/plan-driven hybrid methods available
  – Adaptive Software Development, RUP, MBASE, CeBASE Method
  – Explored at USC-CSE Affiliates’ Executive Workshop, March 12-14
• CMMI provides enabling criteria for hybrid methods
  – Risk Management, Integrated Teaming

Getting the Best from COTS
• COTS Software: A Behavioral Definition
• COTS Empirical Hypotheses: Top-10 List
  – COTS Best Practice Implications
• COTS-Based Systems (CBS) Challenges

COTS Software: A Behavioral Definition
• Commercially sold and supported
  – Avoids expensive development and support
• No access to source code
  – Special case: commercial open-source
• Vendor controls evolution
  – Vendor motivation to add, evolve features
• No vendor guarantees
  – Of dependability, interoperability, continuity, …

COTS Top-10 Empirical Hypotheses-I (Basili & Boehm, Computer, May 2001, pp. 91-93)
1. Over 99% of all executing computer instructions come from COTS
   – Each has passed a market test for value
2. More than half of large-COTS-product features go unused
3. New COTS release frequency averages 8-9 months
   – Average of 3 releases before becoming unsupported
4. CBS life-cycle effort can scale as high as $N^2$
   – N = # of independent COTS products in a system
5. CBS post-deployment costs exceed development costs
   – Except for short-lifetime systems

Usual Hardware-Software Trend Comparison
[Figure: log N versus time for HW (new transistors in service per year) and SW (new source lines of code per year).]
• Different counting rules
• Try counting software as Lines of Code in Service (LOCS) = (#platforms) × (#object LOCS/platform)

Lines of Code in Service: U.S. Dept. of Defense
[Figure: DoD total LOCS and dollars per LOCS, both on log scales, 1950-2000.]

1. DoD LOCS: % COTS, 2000 (M = Million, T = Trillion)

| Platform | # Platforms (M) | LOCS/Platform (M) | LOCS (T) | % COTS | Non-COTS LOCS (T) |
|---|---|---|---|---|---|
| Mainframe | .015 | 200 | 3 | 95 | .15 |
| Mini | .10 | 100 | 10 | 99 | .10 |
| Micro | 2 | 100 | 200 | 99.5 | 1.00 |
| Combat | 2 | 2 | 4 | 80 | .80 |
| Total | | | 217 | | 2.05 (<1%) |

• COTS often an economic necessity

2. Use of COTS Features: Microsoft Word and PowerPoint (K. Torii Lab, Japan: ISERN 2000 Workshop)
• Individuals: 12-16% of features used
• 10-Person Group: 26-29% of features used
• Extra features cause extra work
• Consider build vs. buy for small # features

3. COTS Release Frequency: Survey Data (Ron Kohl/GSAW surveys, 1999-2002; GSAW: Ground System Architecture Workshops, Aerospace Corp.)

| GSAW Survey | Release Frequency (months) |
|---|---|
| 1999 | 6.3 |
| 2000 | 8.5 |
| 2001 | 8.75 |
| 2002 | 9.6 |

• Adaptive maintenance often biggest CBS life cycle cost
• Average of 3 releases before becoming unsupported

4. CBS Effort Scaling As High As $N^2$
[Figure: strong COTS coupling, with three COTS products connected to each other and to custom SW, versus weak COTS coupling, with three COTS products each connected only to custom SW.]
• Strong COTS coupling: $\text{Effort} \sim N + N(N-1)/2 = N(N+1)/2$ (here = 6)
• Weak COTS coupling: $\text{Effort} \sim N$ (here = 3)

4. CBS Effort Scaling - II
[Figure: relative COTS adaptation effort (0-10) versus number of COTS products (1-4) for strong and weak COTS coupling.]
• Reduce # COTS or weaken COTS coupling
  – COTS choices, wrappers, domain architectures, open standards, COTS refresh synchronization

COTS Top-10 Empirical Hypotheses-II
6. Less than half of CBS development effort comes from glue code
   – But glue code costs 3x more per instruction
7. Non-development CBS costs are significant
   – Worth addressing, but not overaddressing
8. COTS assessment and tailoring costs vary by COTS product class
9. Personnel factors are the leading CBS effort drivers
   – Different skill factors are needed for CBS and custom software
10. CBS project failure rates are higher than for custom software
   – But CBS benefit rates are higher also

6. COCOTS Effort Distribution: 20 Projects
[Figure: mean % of total COTS effort by activity (±1 SD), in % person-months, for assessment, tailoring, glue code, and system volatility.]
• Glue code generally less than half of total
• No standard CBS effort distribution profile

9. Personnel Factors Are Leading CBS Effort Drivers: Different Skill Factors Needed

| Custom Development | CBS Development |
|---|---|
| Rqts./implementation assessment | Rqts./COTS assessment |
| Algorithms; data & control structures | COTS mismatches; connector structures |
| Code reading and writing | COTS mismatches; assessment, tailoring, glue code development; coding avoidance |
| Adaptation to rqts. changes | Adaptation to rqts., COTS changes |
| White-box/black-box testing | Black-box testing |

• Rethink recruiting and evaluation criteria

10. CBS Projects Tend to Fail Hard
Major Sources of CBS Project Failure
• CBS skill mismatches
• CBS inexperience
• CBS optimism
• Weak life cycle planning
• CBS product mismatches
• Hasty COTS assessment
• Changing vendor priorities
• New COTS market entries
• These are major topics for COTS risk assessment

CBS Challenges
• Process specifics
  – Milestones; role of “requirements”
  – Multi-COTS refresh frequency and process
• Life cycle management
  – Progress metrics; development/support roles
• Cost-effective COTS assessment and test aids
  – COTS mismatch detection and redressal
• Increasing CBS controllability
  – Technology watch; wrappers; vendor win-win
• Better empirical data and organized experience

Integrating Software and Systems Engineering
• The software “separation of concerns” legacy
  – Erosion of underlying modernist philosophy
  – Resulting project social structure
• Responsibility for system definition
• The CMMI software paradigm

The “Separation of Concerns” Legacy
“The notion of ‘user’ cannot be precisely defined, and therefore has no place in CS or SE.” – Edsger Dijkstra, ICSE 4, 1979
“Analysis and allocation of the system requirements is not the responsibility of the SE group but is a prerequisite for their work.” – Mark Paulk et al., SEI Software CMM v.1.1, 1993

Cosmopolis: The Erosion of Modernist Philosophy
• Dominant since 17th century
• Formal, reductionist
  – Apply laws of cosmos to human polis
• Focus on written vs. oral; universal vs. particular; general vs. local; timeless vs. timely
  – One-size-fits-all solutions
• Strong influence on focus of computer science
• Weak in dealing with human behavior, rapid change

Resulting Project Social Structure
[Figure: project organization with SOFTWARE, AERO., ELEC., MGMT., MFG., COMM, G&C, and PAYLOAD groups. The software group asks, “I wonder when they'll give us our requirements?”]

Responsibility for System Definition
• Every success-critical stakeholder has knowledge of factors that will thwart a project’s success
  – Software developers, users, customers, …
• It is irresponsible to underparticipate in system definition
  – Govt./TRW large system: 1 second response time
• It is irresponsible to overparticipate in system definition
  – Scientific American: focus on part with software solution

The CMMI Software Paradigm
• System and software engineering are integrated
  – Software has a seat at the center table
• Requirements, architecture, and process are developed concurrently
  – Along with prototypes and key capabilities
• Developments done by integrated teams
  – Collaborative vs. adversarial process
  – Based on shared vision, negotiated stakeholder concurrence

Systems Engineering Cost Model (COSYSMO)
Barry Boehm, Donald Reifer and Ricardo Valerdi

Development Approach
• Use the USC seven-step model building process
• Keep things simple
  – Start with the Inception phase, when only large-grain information tends to be available
  – Use EIA 632 to bound tasks that are part of the effort
  – Focus on software-intensive subsystems first (see next chart for explanation), then extend to total systems
• Develop the model in three steps
  – Step 1: limit effort to software-intensive systems
  – Step 2: tackle remainder of phases in life cycle
  – Step 3: extend work to broad range of systems
• Build on previous work

The Form of the Model
[Figure: size drivers (# requirements, # interfaces, # scenarios, # algorithms, plus a volatility factor) and effort multipliers (8 application factors, 8 team factors, and a schedule driver) feed COSYSMO, a COCOMO II-based model that produces effort and duration. The model is calibrated, and its WBS is guided by EIA 632.]

Step 2: Size the Effort Using Function Point Analogy

| Size driver | Low | Average | High |
|---|---|---|---|
| Requirements | × 0.5 | × 1 | × 4 |
| Interfaces | × 1 | × 3 | × 7 |
| Algorithms | × 3 | × 6 | × 15 |
| Scenarios | × 14 | × 29 | × 58 |
| TOTAL | | | |

To compute size, estimate the count for each driver at each rating, multiply by the weight, and then sum the columns.
The weightings reflect relative work, using the average requirement as the basis.

Basic Effort Multipliers - I
• Application Factors (8)
  – Requirements understanding
  – Architecture understanding
  – Level of service requirements, criticality, difficulty
  – Legacy transition complexity
  – COTS assessment complexity
  – Platform difficulty
  – Required business process reengineering
  – Technology maturity
Detailed rating conventions will be developed for these multipliers

Basic Effort Multipliers - II
• Team Factors (8)
  – Number and diversity of stakeholder communities
  – Stakeholder team cohesion
  – Personnel capability
  – Personnel experience/continuity
  – Process maturity
  – Multi-site coordination
  – Formality of deliverables
  – Tool support
Detailed rating conventions will be developed for these multipliers

Next Steps
• Data collection: gather actual effort and duration data from actual systems engineering efforts
• Model calibration: calibrate the model first using expert opinion, then refine it as actual data are collected
• Model validation: validate that the model generates answers that reflect a mix of expert opinion and real-world data (moving toward the actual data as they become available)
• Model publication: publish the model periodically as new versions become available (at least bi-annually)
• Model extension and refinement: extend the model to increase its scope towards the right; refine the model to increase its predictive accuracy
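The size step of the model is straightforward arithmetic: multiply each driver count by its Low/Average/High weight and sum the results. The sketch below shows this in Python and makes the following assumptions:
- The weights are those in the Step 2 table: requirements 0.5/1/4, interfaces 1/3/7, algorithms 3/6/15, scenarios 14/29/58.
- The driver counts are invented for the example.
- The names `WEIGHTS`, `cosysmo_size`, and `EXAMPLE_COUNTS` are illustrative only.
- The volatility factor, effort multipliers, and calibration are deliberately left out.

```python
from typing import Dict

# Weights from the "Size the Effort Using Function Point Analogy" table
# (average requirement = 1 is the basis).
WEIGHTS: Dict[str, Dict[str, float]] = {
    "requirements": {"low": 0.5, "average": 1.0, "high": 4.0},
    "interfaces":   {"low": 1.0, "average": 3.0, "high": 7.0},
    "algorithms":   {"low": 3.0, "average": 6.0, "high": 15.0},
    "scenarios":    {"low": 14.0, "average": 29.0, "high": 58.0},
}


def cosysmo_size(counts: Dict[str, Dict[str, int]]) -> float:
    """Weighted sum of driver counts; unknown drivers or ratings raise KeyError."""
    total = 0.0
    for driver, by_rating in counts.items():
        for rating, n in by_rating.items():
            total += n * WEIGHTS[driver][rating]
    return total


# Hypothetical driver counts for an illustrative system.
EXAMPLE_COUNTS = {
    "requirements": {"low": 10, "average": 20, "high": 5},
    "interfaces": {"average": 4},
    "algorithms": {"high": 1},
    "scenarios": {"average": 2},
}

if __name__ == "__main__":
    print(cosysmo_size(EXAMPLE_COUNTS))  # 5 + 20 + 20 + 12 + 15 + 58 = 130.0
```

Keeping the weights in a lookup table makes recalibration easy: as expert-opinion and real project data refine the weights, only `WEIGHTS` changes.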

Value-Based Software Engineering (VBSE)
• Essential to integration of software and systems engineering
  – Software increasingly determines system content
  – Software architecture determines system adaptability
• 7 key elements of VBSE
• Implementing VBSE
  – CeBASE Method and method patterns
  – Experience factory, GQM, and MBASE elements
  – Means of achieving CMMI Level 5 benefits

7 Key Elements of VBSE
1. Benefits Realization Analysis
2. Stakeholders’ Value Proposition Elicitation and Reconciliation
3. Business Case Analysis
4. Continuous Risk and Opportunity Management
5. Concurrent System and Software Engineering
6. Value-Based Monitoring and Control
7. Change as Opportunity

The Information Paradox (Thorp)
• No correlation between companies’ IT investments and their market performance
• Field of Dreams
  – Build the (field; software)
  – and the great (players; profits) will come
• Need to integrate software and systems initiatives

DMR/BRA Results Chain (BRA: DMR Consulting Group’s Benefits Realization Approach)
[Figure: the initiative “Implement a new order entry system” contributes to the intermediate outcome “Reduced order processing cycle”, which contributes to the outcome “Increased sales”. This rests on the assumption “Order to delivery time is an important buying criterion”. Related contributions: “Reduce time to process order” and “Reduce time to deliver product”.]

The Model-Clash Spider Web: Master Net
[Figure]

EasyWinWin OnLine Negotiation Steps
[Figure]
Center for Software Engineering Red cells indicate lack of consensus. Oral discussion of cell graph reveals unshared information, unnoticed assumptions, hidden issues, constraints, etc. 5/10/2002 ©USC-CSE 61 USC C S E University of Southern California Center for Software Engineering Example of Business Case Analysis ROI= (Benefits-Costs)/Costs Option BRapid 3 Return on Investment Option B 2 Option A 1 Time -1 5/10/2002 ©USC-CSE 62 USC C S E University of Southern California Center for Software Engineering Architecture Choices Rigid Hyper-flexible … Intelligent Router Flexible F12 5/10/2002 F1, F2, F3 F4, F5 F6, F7 F8, F9, F10, F11 ©USC-CSE 63 USC University of Southern California C S E Center for Software Engineering Architecture Flexibility Determination Effort 60 (person-weeks) 50 40 Hyper-flexible m=1 30 Rigid m = 12 20 10 0 5/10/2002 Flexible m=2.74 Fraction of features modified 0.2 0.4 ©USC-CSE 0.6 0.8 1 64 USC C S E University of Southern California Center for Software Engineering Value-Based Software Engineering (VBSE) • Essential to integration of software and systems engineering – Software increasingly determines system content – Software architecture determines system adaptability • 7 key elements of VBSE • Implementing VBSE – CeBASE Method and method patterns – Experience factory, GQM, and MBASE elements – Means of achieving CMMI Level 5 benefits 5/10/2002 ©USC-CSE 65 USC C S E University of Southern California Center for Software Engineering Experience Factory Framework - III Progress/Plan/ Goal Mismatches Org. Shared Vision & Improvement Strategy • – • Planning context Org. Improvement Goals Initiative Plans Goal-related questions, metrics • Goal achievement models Org. 
Goals Initiative-related questions, metrics Initiative Monitoring and Control – Experience-Base Analysis Analyzed experience, Updated models Achievables, Opportunities Project experience Experience Base Models and data Models and data Project Shared Vision and Strategy 5/10/2002 • – Org. Improvement Strategies – Org. Improvement Initiative Planning & Control Planning Context ©USC-CSE Project Planning and Control 66 USC C S E University of Southern California Center for Software Engineering VBSE Experience Factory Example • Shared vision: Increased market share, profit via rapid development • Improvement goal: Reduce system development time 50% • Improvement strategy: Reduce all task durations 50% • Pilot project: Rqts, design, code reduced 50%; test delayed – Analysis: Test preparation insufficient, not on critical-path • Experience base: OK to increase effort on noncritical-path activities, if it decreases time on critical-path activities 5/10/2002 ©USC-CSE 67 USC University of Southern California C S E Center for Software Engineering CeBASE Method Strategic Framework -Applies to organization’s and projects’ people, processes, and products Progress/Plan/Goal mismatches -shortfalls, opportunities, risks Plan/Goal mismatches Org-Portfolio Shared Vision •Org. Value Propositions (VP’s) -Stakeholder values •Current situation w.r.t. VP’s •Improvement Goals, Priorities •Global Scope, Results Chain •Value/business case models Organization/ Portfolio: Experience Factory, GQM Shortfalls, opportunities, risks Initiatives •Project Value Propositions -Stakeholder values •Current situation w.r.t. VP’s •Improvement Goals, Priorities •Project Scope, Results Chain •Value/business case models Org. 
Strategic Plans
• Strategy elements
• Evaluation criteria/questions
• Improvement plans
  – Progress metrics
  – Experience base

Project Shared Vision (MBASE)

Project Plans
• LCO/LCA Package
  – Ops concept, prototypes, rqts, architecture, LC plan, rationale
• IOC/Transition/Support Package
  – Design, increment plans, quality plans, T/S plans
• Evaluation criteria/questions
• Progress metrics

Org. Monitoring & Control
• Monitor environment
  – Update models
• Implement plans
• Evaluate progress
  – w.r.t. goals, models
• Determine, apply corrective actions
• Update experience base

Proj. Monitoring & Control
• Monitor environment
  – Update models
• Implement plans
• Evaluate progress
  – w.r.t. goals, models, plans
• Determine, apply corrective actions
• Update experience base

The diagram groups these boxes into scoping, planning, and monitoring & control contexts. Information passed between them: project vision, goals; shortfalls, opportunities, risks; plan/goal mismatches; progress/plan/goal mismatches; project experience, progress w.r.t. plans, goals.

LCO: Life Cycle Objectives; LCA: Life Cycle Architecture; IOC: Initial Operational Capability; GMQM: Goal-Model-Question-Metric Paradigm; MBASE: Model-Based (System) Architecting and Software Engineering

CeBASE Method Coverage of CMMI - I
• Process Management
  – Organizational Process Focus: 100+
  – Organizational Process Definition: 100+
  – Organizational Training: 100-
  – Organizational Process Performance: 100-
  – Organizational Innovation and Deployment: 100+
• Project Management
  – Project Planning: 100
  – Project Monitoring and Control: 100+
  – Supplier Agreement Management: 50-
  – Integrated Project Management: 100-
  – Risk Management: 100
  – Integrated Teaming: 100
  – Quantitative Project Management: 70-

CeBASE Method Coverage of CMMI - II
• Engineering
  – Requirements Management: 100
  – Requirements Development: 100
  – Technical Solution: 60+
  – Product Integration: 70+
  – Verification: 70-
  – Validation: 80+
• Support
  – Configuration Management: 70-
  – Process and Product Quality Assurance: 70-
  – Measurement and Analysis: 100-
  – Decision Analysis and Resolution: 100-
  – Organizational Environment for Integration: 80-
  – Causal Analysis and Resolution: 100

CeBASE Method Simplified via Method Patterns
• Schedule as Independent Variable (SAIV)
  – Also applies for cost, cost/schedule/quality
• Opportunity Trees
  – Cost, schedule, defect reduction
• Agile COCOMO II
  – Analogy-based estimate adjuster
• COTS integration method patterns
• Process model decision table

The SAIV Process Model
1. Shared vision and expectations management
2. Feature prioritization
3. Schedule range estimation
4. Architecture and core capabilities determination
5. Incremental development
6. Change and progress monitoring and control

Shared Vision, Expectations Management, and Feature Prioritization
• Use stakeholder win-win approach
• Developer win condition: Don’t overrun fixed 24-week schedule
• Clients’ win conditions: 56 weeks’ worth of features
• Win-Win negotiation
  – Which features are most critical?
  – COCOMO II: How many features can be built within a 24-week schedule?

COCOMO II Estimate Ranges
[Figure: relative cost range of COCOMO II estimates (axis from 0.25x to 4x), showing pessimistic and optimistic 90% confidence limits narrowing across the phases Feasibility, Plans and Rqts., Product Design, Detail Design, and Devel. and Test (milestones: Concept of Operation, Rqts. Spec., Product Design Spec., Detail Design Spec., Accepted Software). Model stages: Applications Composition (3 parameters), Early Design (13 parameters), Post-Architecture (23 parameters).]

Core Capability Incremental Development, and Coping with Rapid Change
• Core capability not just top-priority features
  – Useful end-to-end capability
  – Architected for ease of adding, dropping marginal features
• Worst case: Deliver core capability in 24 weeks, with some extra effort
• Most likely case: Finish core capability in 16-20 weeks
  – Add next-priority features
• Cope with change by monitoring progress
  – Renegotiate plans as appropriate

SAIV Experience I: USC Digital Library Projects
• Life Cycle Architecture package in fixed 12 weeks
  – Compatible operational concept, prototypes, requirements, architecture, plans, feasibility rationale
• Initial Operational Capability in 12 weeks
  – Including 2-week cold-turkey transition
• Successful on 24 of 26 projects
  – Failure 1: too-ambitious core capability (cover 3 image repositories at once)
  – Failure 2: team disbanded (graduation, summer job pressures)

RAD Opportunity Tree
• Eliminating Tasks
  – Business process reengineering
  – Reusing assets
  – Applications generation
  – Schedule as independent variable (SAIV)
• Reducing Time Per Task
  – Tools and automation
  – Work streamlining (80-20)
  – Increasing parallelism
• Reducing Risks of Single-Point Failures
  – Reducing failures
  – Reducing their effects
• Reducing Backtracking
  – Early error elimination
  – Process anchor points
  – Improving process maturity
  – Collaboration technology
• Activity Network Streamlining
  – Minimizing task dependencies
  – Avoiding high fan-in, fan-out
  – Reducing task variance
  – Removing tasks from critical path
• Increasing Effective Workweek
  – 24x7 development
  – Nightly builds, testing
  – Weekend warriors
• Better People and Incentives
• Transition to Learning Organization

Critical Success Factors for Software Careers
• Understanding, dealing with sources of software value
  – And associated stakeholders, applications domains
• Software/systems architecting and engineering
  – Creating and/or integrating software components
  – Using risk to balance disciplined and agile processes
• Ability to continuously learn and adapt
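The COCOMO II Estimate Ranges chart earlier in this section can be turned into a small helper that brackets a nominal estimate. The multipliers below are an assumed reading of the chart's labeled ticks (4x/0.25x at Feasibility, 2x/0.5x at Concept of Operation, 1.5x/0.67x at Rqts. Spec., 1.25x/0.8x at Product Design Spec., 1x at Accepted Software). The `CONE_FACTORS` table and the `estimate_range` function are hypothetical illustrations, not part of any COCOMO II tool.

```python
from typing import Dict, Tuple

# Assumed (optimistic, pessimistic) multipliers on a nominal estimate, read from
# the labeled ticks of the "COCOMO II Estimate Ranges" chart (90% confidence limits).
# Illustrative values only; they are not computed by the COCOMO II model itself.
CONE_FACTORS: Dict[str, Tuple[float, float]] = {
    "Feasibility": (0.25, 4.0),
    "Concept of Operation": (0.5, 2.0),
    "Rqts. Spec.": (0.67, 1.5),
    "Product Design Spec.": (0.8, 1.25),
    "Accepted Software": (1.0, 1.0),
}


def estimate_range(nominal: float, milestone: str) -> Tuple[float, float]:
    """Return (optimistic, pessimistic) bounds around a nominal estimate."""
    low, high = CONE_FACTORS[milestone]
    return nominal * low, nominal * high


if __name__ == "__main__":
    nominal_pm = 100.0  # hypothetical nominal effort in person-months
    for milestone in CONE_FACTORS:
        low, high = estimate_range(nominal_pm, milestone)
        print(f"{milestone:<22} {low:7.1f} to {high:7.1f} person-months")
```

With a hypothetical nominal estimate of 100 person-months, the bracket shrinks from 25 to 400 at Feasibility to 80 to 125 at Product Design Spec., which is why early-stage commitments carry far more estimation risk than later ones.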
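The SAIV feature-prioritization and core-capability slides earlier in this section can be illustrated with a minimal sketch. It assumes each feature already has a priority rank and an estimated duration in schedule weeks (for example from a COCOMO II estimate), and it treats features as consuming the schedule one after another, a deliberate simplification. The schedule is the fixed input and scope is what varies. The `Feature` class, the `plan_within_schedule` function, and the feature names are hypothetical; this is not the CeBASE tooling.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Feature:
    name: str
    priority: int       # 1 = most critical (hypothetical ranking scale)
    weeks: float        # estimated duration in schedule weeks (assumed input)
    core: bool = False  # True if part of the end-to-end core capability


def plan_within_schedule(
    features: List[Feature], schedule_weeks: float
) -> Tuple[List[Feature], List[Feature]]:
    """Sketch of SAIV scoping: the schedule is fixed and the feature set varies.

    Core features are committed first; remaining features are added in
    priority order whenever they still fit. Returns (planned, deferred).
    """
    core = [f for f in features if f.core]
    used = sum(f.weeks for f in core)
    if used > schedule_weeks:
        # The core capability itself must fit the fixed schedule;
        # otherwise scope has to be renegotiated with the stakeholders.
        raise ValueError(
            f"core capability needs {used} weeks, schedule allows {schedule_weeks}"
        )

    planned = list(core)
    deferred: List[Feature] = []
    # Lower priority number = more critical, so sort ascending.
    optional = sorted((f for f in features if not f.core), key=lambda f: f.priority)
    for feature in optional:
        if used + feature.weeks <= schedule_weeks:
            planned.append(feature)
            used += feature.weeks
        else:
            deferred.append(feature)  # candidate for a later increment
    return planned, deferred


if __name__ == "__main__":
    # Hypothetical wish list totaling 56 weeks against a 24-week schedule.
    wish_list = [
        Feature("search", 1, 6, core=True),
        Feature("catalog browse", 1, 6, core=True),
        Feature("user accounts", 2, 4, core=True),
        Feature("bulk upload", 3, 8),
        Feature("reports", 4, 10),
        Feature("annotations", 5, 3),
        Feature("image zoom", 6, 19),
    ]
    planned, deferred = plan_within_schedule(wish_list, 24)
    print("planned: ", [f.name for f in planned])
    print("deferred:", [f.name for f in deferred])
```

With this hypothetical wish list (16 weeks of core features plus 40 weeks of optional ones, 56 weeks in total), a 24-week schedule admits the core plus the 8-week priority-3 feature and defers the rest. The loop keeps scanning after a feature fails to fit, so a smaller lower-priority feature can still be admitted when room remains; stopping at the first misfit would follow the priority order more strictly.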
Critical Success Factors for Software Careers - USC Software Engineering Certificate Courses
• Understanding, dealing with sources of software value
  – And associated stakeholders, applications domains
  – CS 510: Software Management and Economics
• Software/systems architecting and engineering
  – Creating and/or integrating software components
  – Using risk to balance disciplined and agile processes
  – CS 577ab: Software Engineering Principles and Practice
  – CS 578: Software Architecture
• Ability to continuously learn and adapt
  – CS 592: Emerging Best Practices in Software Engineering

USC SWE Certificate Program and MS Programs Provide Key Software Talent Strategy Enablers
• Infusion of latest SWE knowledge and trends
• Tailorable framework of best practices
• Continuing education for existing staff
  – Including education on doing their own lifelong learning
• Package of career-enhancing perks for new recruits
  – Career reward for earning Certificate
  – Option to continue for MS degree
  – Many CS BA grads want both income and advanced degree
• Available via USC Distance Education Network

Conclusions
• World of future: Complex adaptive systems
  – Distribution; mobility; rapid change
• Need adaptive vs. optimized processes
  – Don’t be a process dinosaur
  – Or a change-resistant change agent
• Marketplace trends favor value/risk-based processes
  – Software a/the major source of product value
  – Software the primary enabler of system adaptability
• Individuals and organizations need pro-active software talent strategies
  – Consider USC SW Engr. Certificate and MS programs

References
D. Ahern, A. Clouse, & R. Turner, CMMI Distilled, Addison Wesley, 2001.
C. Baldwin & K. Clark, Design Rules: The Power of Modularity, MIT Press, 1999.
B. Boehm, D. Port, A. Jain, & V. Basili, “Achieving CMMI Level 5 with MBASE and the CeBASE Method,” CrossTalk, May 2002.
B. Boehm & K. Sullivan, “Software Economics: A Roadmap,” The Future of Software Engineering, A. Finkelstein (ed.), ACM Press, 2000.
R. Kaplan & D. Norton, The Balanced Scorecard: Translating Strategy into Action, Harvard Business School Press, 1996.
D. Reifer, Making the Software Business Case, Addison Wesley, 2002.
K. Sullivan, Y. Cai, B. Hallen, & W. Griswold, “The Structure and Value of Modularity in Software Design,” Proceedings, ESEC/FSE 2001, ACM Press, pp. 99-108.
J. Thorp & DMR, The Information Paradox, McGraw Hill, 1998.
Software Engineering Certificate website: sunset.usc.edu/SWE-Cert
CeBASE website: www.cebase.org
CMMI website: www.sei.cmu.edu/cmmi
MBASE website: sunset.usc.edu/research/MBASE