Mining Non-Redundant High Order Correlations in Binary Data

advertisement

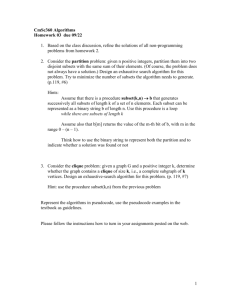

Mining Non-Redundant High Order

Correlations in Binary Data

Xiang Zhang, Feng Pan, Wei Wang, and Andrew Nobel

VLDB 2008

Outline

Motivation

Properties related to NIFSs

Pruning candidates by mutual information

The algorithm

Bounds based on pair-wise correlations

Bounds based on Hamming distances

Discussion

Motivation

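Example:

Suppose X, Y and Z are binary features, where X and Y are disease SNPs and Z = X XOR Y is the complex disease trait.

{X, Y, Z} are strongly correlated.

But there is no correlation within {X, Z}, {Y, Z} or {X, Y} (assuming X and Y are independent and each takes the values 0 and 1 with equal probability).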
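The XOR example can be checked numerically. This is a minimal sketch, assuming the four (X, Y) configurations are equally likely and measuring correlation with the multi-information $C(X_1, \dots, X_n) = \sum_i H(X_i) - H(X_1, \dots, X_n)$ computed from empirical frequencies. The helper names `entropy` and `multi_information` are hypothetical.

```python
from collections import Counter
from itertools import product
from math import log2

def entropy(samples):
    """Empirical Shannon entropy (bits) of a list of hashable outcomes."""
    n = len(samples)
    return -sum(c / n * log2(c / n) for c in Counter(samples).values())

def multi_information(columns):
    """C(X1,...,Xk) = sum_i H(Xi) - H(X1,...,Xk) for equal-length columns."""
    joint = list(zip(*columns))
    return sum(entropy(col) for col in columns) - entropy(joint)

# All four equally likely (X, Y) configurations, with Z = X XOR Y.
rows = list(product([0, 1], repeat=2))
X = [x for x, _ in rows]
Y = [y for _, y in rows]
Z = [x ^ y for x, y in rows]

for name, pair in [("{X,Y}", (X, Y)), ("{X,Z}", (X, Z)), ("{Y,Z}", (Y, Z))]:
    print(name, multi_information(pair))        # 0.0 for every pair
print("{X,Y,Z}", multi_information((X, Y, Z)))  # 1.0 bit
```

Every pair carries zero bits of shared information, while the three features together share one full bit.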

Summary

We can see that the high order correlation pattern cannot be identified by examining only the pair-wise correlations.

Two aspects of the desired correlation patterns:

The correlation involves more than two features.

The correlation is non-redundant, i.e., removing any feature will greatly reduce the correlation.

(Cont.)

Let

$$\bar{I}(Y; X) = \frac{H(Y) - H(Y \mid X)}{H(Y)}$$

be the relative entropy reduction of Y based on X.

Consider three features, $X_1$, $X_2$ and $X_3$:

$\bar{I}(X_3; X_1) = 21.97\%$ and $\bar{I}(X_3; X_2) = 8.62\%$, i.e., the relative entropy reduction of $X_3$ given $X_1$ or $X_2$ alone is small.

$\bar{I}(X_3; X_1, X_2) = 81.59\%$, i.e., $X_1$ and $X_2$ jointly reduce the uncertainty of $X_3$ much more than they do separately.

This strong correlation exists only when these three features are considered together.

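For example, with 20 samples in which $X_3$ takes its two values 6 and 14 times, and the two values of $X_1$ cover 9 samples (where $X_3$ splits 5/4) and 11 samples (where $X_3$ splits 1/10):

$$H(X_3) = -\left(\frac{6}{20}\log_2\frac{6}{20} + \frac{14}{20}\log_2\frac{14}{20}\right) \approx 0.881$$

$$H(X_3 \mid X_1) = -\left[\frac{9}{20}\left(\frac{5}{9}\log_2\frac{5}{9} + \frac{4}{9}\log_2\frac{4}{9}\right) + \frac{11}{20}\left(\frac{10}{11}\log_2\frac{10}{11} + \frac{1}{11}\log_2\frac{1}{11}\right)\right] \approx 0.688$$

$$\bar{I}(X_3; X_1) = \frac{H(X_3) - H(X_3 \mid X_1)}{H(X_3)} \approx \frac{0.881 - 0.688}{0.881} \approx 21.97\% \ \text{(using unrounded entropies)}$$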
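The arithmetic above can be reproduced from the counts alone. This is a small sketch, assuming the counts read off the slide ($X_3$ split 6/14 overall; 5/4 and 1/10 within the two values of $X_1$). The function names are hypothetical.

```python
from math import log2

def entropy_from_counts(counts):
    """Shannon entropy in bits of the distribution given by raw counts."""
    total = sum(counts)
    return -sum(c / total * log2(c / total) for c in counts if c > 0)

def conditional_entropy(groups):
    """H(Y | X), where groups[i] holds the counts of Y within the i-th value of X."""
    total = sum(sum(g) for g in groups)
    return sum(sum(g) / total * entropy_from_counts(g) for g in groups)

def relative_entropy_reduction(y_counts, groups):
    """(H(Y) - H(Y | X)) / H(Y)."""
    h_y = entropy_from_counts(y_counts)
    return (h_y - conditional_entropy(groups)) / h_y

# X3 splits 6/14; within the 9 and 11 samples of the two X1 values it splits 5/4 and 1/10.
h3 = entropy_from_counts([6, 14])
h3_given_1 = conditional_entropy([[5, 4], [1, 10]])
print(round(h3, 3), round(h3_given_1, 3))  # 0.881 0.688
print(f"{relative_entropy_reduction([6, 14], [[5, 4], [1, 10]]):.2%}")  # 21.97%
```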

(Cont.)

In this paper, the authors study the problem of finding non-redundant high order correlations in binary data.

NIFSs (Non-redundant Interacting Feature Subsets):

The features in an NIFS together have high multi-information.

All proper subsets of an NIFS have low multi-information.

The computational challenge of finding NIFSs:

Feature combinations must be enumerated to find the feature subsets that have high correlation.

For each such subset, all of its subsets must be checked to make sure there is no redundancy.

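Definition of NIFS

Given thresholds $\alpha$ and $\beta$, a feature subset $\mathcal{X}$ is an SFS (strongly correlated feature subset) if $C(\mathcal{X}) \ge \alpha$, and a WFS (weakly correlated feature subset) if $C(\mathcal{X}) < \beta$, where $C(X_1, \dots, X_n) = \sum_i H(X_i) - H(X_1, \dots, X_n)$ is the multi-information.

A subset of features $\{X_1, X_2, \dots, X_n\}$ is an NIFS if the following two criteria are satisfied:

$\{X_1, X_2, \dots, X_n\}$ is an SFS.

Every proper subset $\mathcal{X} \subsetneq \{X_1, X_2, \dots, X_n\}$ is a WFS.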

Ex. $\{X_1, X_2, X_3\}$ is an NIFS:

$\{X_1, X_2, X_3\}$ is an SFS.

$\{X_1, X_2\}$, $\{X_1, X_3\}$ and $\{X_2, X_3\}$ are WFSs, where $C(X_1, X_2) = 0.03$, $C(X_1, X_3) = 0.22$ and $C(X_2, X_3) = 0.22$.
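The definition can be checked directly on the example values. This sketch assumes $\alpha = 0.8$ and $\beta = 0.25$ (the thresholds used in the slides' examples), reads SFS as $C \ge \alpha$ and WFS as $C < \beta$, treats singletons as WFSs (their multi-information is 0), and takes $C(X_1, X_2, X_3) = 0.82$ from the example later in the slides. Relying on the downward closure property, it only examines subsets of size $n - 1$. `is_nifs` and the lookup table `C` are hypothetical.

```python
from itertools import combinations

ALPHA, BETA = 0.8, 0.25  # thresholds taken from the slides' examples

def is_nifs(features, C, alpha=ALPHA, beta=BETA):
    """features: tuple of names; C: maps frozenset of names -> multi-information."""
    if C[frozenset(features)] < alpha:  # must be an SFS
        return False
    if len(features) <= 2:  # proper subsets are singletons, C = 0 < beta
        return True
    # Downward closure: (n-1)-subsets being WFSs implies all proper subsets are.
    return all(C[frozenset(sub)] < beta
               for sub in combinations(features, len(features) - 1))

C = {
    frozenset({"X1", "X2", "X3"}): 0.82,
    frozenset({"X1", "X2"}): 0.03,
    frozenset({"X1", "X3"}): 0.22,
    frozenset({"X2", "X3"}): 0.22,
}
print(is_nifs(("X1", "X2", "X3"), C))  # True
```

If any pair exceeded $\beta$ (say $C(X_1, X_3) = 0.3$), the triple would no longer qualify even though it stays an SFS.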

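Properties related to NIFSs

(Downward closure property of WFSs): If a feature subset $\{X_1, X_2, \dots, X_n\}$ is a WFS, then all its subsets are WFSs.

Advantage: this greatly reduces the complexity of the problem, because checking the subsets of size $n - 1$ is enough to know that all proper subsets are WFSs, and a subset that is not a WFS can never be part of an NIFS.

Let $\mathcal{X} = \{X_1, X_2, \dots, X_n\}$ be an NIFS. Then any $\mathcal{Y} \supsetneq \mathcal{X}$ is not an NIFS, because its proper subset $\mathcal{X}$ is an SFS and therefore not a WFS (as $\beta < \alpha$).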
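Downward closure follows from the fact that multi-information never decreases when a feature is added: $C(\mathcal{X} \cup \{Y\}) = C(\mathcal{X}) + I(Y; \mathcal{X}) \ge C(\mathcal{X})$. The sketch below is an empirical sanity check of that monotonicity on random binary data (the seed, sizes and helper names are arbitrary and hypothetical); it is an illustration, not a proof.

```python
import random
from collections import Counter
from itertools import combinations
from math import log2

def entropy(samples):
    """Empirical Shannon entropy (bits) of a list of hashable outcomes."""
    n = len(samples)
    return -sum(c / n * log2(c / n) for c in Counter(samples).values())

def multi_information(data, idx):
    """C over the feature indices idx; data is a list of binary rows."""
    singles = sum(entropy([row[i] for row in data]) for i in idx)
    joint = entropy([tuple(row[i] for i in idx) for row in data])
    return singles - joint

random.seed(0)  # arbitrary seed; any binary data would do
n_features, n_rows = 5, 50
data = [[random.randint(0, 1) for _ in range(n_features)] for _ in range(n_rows)]

# Adding any feature to any subset never lowers C (up to float rounding),
# so every subset of a WFS (C < beta) is again a WFS.
features = range(n_features)
for k in range(1, n_features):
    for sub in combinations(features, k):
        for extra in set(features) - set(sub):
            sup = tuple(sorted(sub + (extra,)))
            assert multi_information(data, sub) <= multi_information(data, sup) + 1e-9
print("C is monotone on every nested pair of subsets")
```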

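Pruning candidates by mutual information

If $\{X_i, X_j\}$ is not a WFS, i.e., $C(X_i, X_j) = I(X_i; X_j) \ge \beta$, then all proper supersets of $\{X_i, X_j\}$ can be safely pruned: each of them contains the non-WFS $\{X_i, X_j\}$ as a proper subset and therefore cannot be an NIFS.

Ex. Let $\beta = 0.25$, $\alpha = 0.8$.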
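Pair-level pruning only needs the pairwise mutual information. This sketch computes it from empirical frequencies, collects the pairs with $I(X_i; X_j) \ge \beta$, and rejects any candidate that contains one of them as a proper subset. The toy data (X, Y uniform, Z = X XOR Y, W a copy of X) and all function names are hypothetical.

```python
from collections import Counter
from itertools import combinations
from math import log2

def entropy(samples):
    """Empirical Shannon entropy (bits) of a list of hashable outcomes."""
    n = len(samples)
    return -sum(c / n * log2(c / n) for c in Counter(samples).values())

def mutual_information(a, b):
    """I(A;B) = H(A) + H(B) - H(A,B), which equals C(A, B) for a pair."""
    return entropy(a) + entropy(b) - entropy(list(zip(a, b)))

def non_wfs_pairs(columns, beta):
    """Index pairs {i, j} with C(Xi, Xj) >= beta, i.e. pairs that are not WFSs."""
    return {
        frozenset((i, j))
        for i, j in combinations(range(len(columns)), 2)
        if mutual_information(columns[i], columns[j]) >= beta
    }

def survives(candidate, blocked):
    """False if the candidate has a non-WFS pair as a proper subset."""
    return not any(pair < candidate for pair in blocked)

X = [0, 0, 1, 1]
Y = [0, 1, 0, 1]
Z = [x ^ y for x, y in zip(X, Y)]
W = list(X)  # exact copy of X, so I(X; W) = 1 bit
columns = [X, Y, Z, W]

blocked = non_wfs_pairs(columns, beta=0.25)
print(blocked)                                  # {frozenset({0, 3})}
print(survives(frozenset({0, 1, 2}), blocked))  # True: {X, Y, Z} is kept
print(survives(frozenset({0, 1, 3}), blocked))  # False: contains {X, W}
```

The proper-subset test (`<`) keeps the pair $\{X, W\}$ itself as a candidate, since the rule only prunes its supersets.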

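Algorithm

Upper and lower bounds based on pairwise correlations

$$h_k^{(n)} = \frac{1}{\binom{n}{k}} \sum_{S \subseteq \{1, \dots, n\},\, |S| = k} \frac{H(X_S)}{k}$$

is the average entropy in bits per symbol of a randomly drawn k-element subset of $\{X_1, X_2, \dots, X_n\}$. By Han's inequality, $h_1^{(n)} \ge h_2^{(n)} \ge \dots \ge h_n^{(n)}$, so averages over pairs ($k = 2$) bound the joint entropy $H(X_1, \dots, X_n) = n\,h_n^{(n)}$ and hence the multi-information.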
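Han's inequality turns pairwise quantities into bounds on $C$. The sketch below computes $h_k^{(n)}$ from empirical frequencies, together with two bounds that follow from $h_n^{(n)} \le h_2^{(n)}$ and from $H(X_1, \dots, X_n) \ge H(X_i, X_j)$: $C \ge \frac{1}{n-1}\sum_{i<j} I(X_i; X_j)$ and $C \le \sum_i H(X_i) - \max_{i<j} H(X_i, X_j)$. These bounds are derived here for illustration and are not necessarily the exact $lb$/$ub$ used in the paper; the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations
from math import comb, log2

def entropy(samples):
    """Empirical Shannon entropy (bits) of a list of hashable outcomes."""
    n = len(samples)
    return -sum(c / n * log2(c / n) for c in Counter(samples).values())

def joint_entropy(columns, idx):
    """H(X_S) for the features whose indices are in idx."""
    return entropy(list(zip(*(columns[i] for i in idx))))

def h_k(columns, k):
    """h_k^(n): average over all k-subsets S of H(X_S) / k."""
    n = len(columns)
    total = sum(joint_entropy(columns, s) / k for s in combinations(range(n), k))
    return total / comb(n, k)

def pairwise_bounds(columns):
    """Bounds on C(X1..Xn), n >= 2, from single and pairwise entropies only.

    lower: h_n <= h_2 gives C >= sum_{i<j} I(Xi;Xj) / (n - 1)
    upper: H(X1..Xn) >= H(Xi,Xj) gives C <= sum_i H(Xi) - max_{i<j} H(Xi,Xj)
    """
    n = len(columns)
    singles = [entropy(c) for c in columns]
    pairs = {(i, j): joint_entropy(columns, (i, j)) for i, j in combinations(range(n), 2)}
    mi_sum = sum(singles[i] + singles[j] - h for (i, j), h in pairs.items())
    return mi_sum / (n - 1), sum(singles) - max(pairs.values())

# XOR example: every pair is independent, yet C(X, Y, Z) = 1 bit.
X, Y = [0, 0, 1, 1], [0, 1, 0, 1]
cols = [X, Y, [x ^ y for x, y in zip(X, Y)]]
print([round(h_k(cols, k), 3) for k in (1, 2, 3)])  # [1.0, 1.0, 0.667]
print(pairwise_bounds(cols))                         # (0.0, 1.0)
```

On the XOR example the lower bound is 0 because all pairs are independent, while the upper bound of 1 bit equals the true $C(X, Y, Z)$.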

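Algorithm (Cont.)

Suppose that the current candidate feature subset is $V = \{X_a, X_{a+1}, \dots, X_b\}$.

If $lb(V) \ge \alpha$ (case 1), then $C(V) \ge \alpha$, and it only remains to check whether all subsets of $V$ of size $|V| - 1 = b - a$ are WFSs.

If $lb(V) \ge \beta$ (cases 1 and 2), $V$ is not a WFS, so the subtree of $V$ can be pruned. In case 2 ($\beta \le lb(V) < \alpha$), $C(V)$ must be calculated and all subsets of $V$ checked.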

(Cont.)

If $ub(V) < \beta$, $V$ is a WFS, so there is no need to calculate $C(V)$; proceed directly to its subtree, using the adding proposition to get upper and lower bounds on the multi-information of each direct child node of $V$.

If $lb(V) < \beta \le ub(V)$, $C(V)$ must be calculated. Then:

if $C(V) < \beta$, $V$ is a WFS and the adding proposition is applied to its children;

if $C(V) \ge \beta$, the subtree of $V$ is pruned;

if $C(V) \ge \alpha$ and all subsets of $V$ are WFSs, $V$ is output as an NIFS.
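The case analysis above can be compared with a much simpler level-wise search. The sketch below is not the paper's algorithm: it drops the set-enumeration tree, the $lb$/$ub$ bounds and the adding proposition, and instead computes $C(V)$ exactly for every candidate whose $(|V| - 1)$-subsets are all WFSs. It assumes $\beta < \alpha$, with SFS meaning $C \ge \alpha$ and WFS meaning $C < \beta$; `find_nifs` and the toy data are hypothetical.

```python
from collections import Counter
from math import log2

def entropy(samples):
    """Empirical Shannon entropy (bits) of a list of hashable outcomes."""
    n = len(samples)
    return -sum(c / n * log2(c / n) for c in Counter(samples).values())

def multi_information(columns, idx):
    """C(X_S) = sum_{i in S} H(Xi) - H(X_S)."""
    singles = sum(entropy(columns[i]) for i in idx)
    return singles - entropy(list(zip(*(columns[i] for i in idx))))

def find_nifs(columns, alpha, beta):
    """Level-wise NIFS search (assumes beta < alpha); returns index tuples."""
    n = len(columns)
    wfs = {frozenset([i]) for i in range(n)}  # singletons: C = 0 < beta
    found = []
    while wfs:
        next_wfs = set()
        # Extend each WFS by one feature with a larger index.
        candidates = {s | {j} for s in wfs for j in range(max(s) + 1, n)}
        for cand in candidates:
            # Downward closure: the (|cand|-1)-subsets cover all proper subsets.
            if not all(cand - {x} in wfs for x in cand):
                continue
            c = multi_information(columns, sorted(cand))
            if c >= alpha:
                found.append(tuple(sorted(cand)))  # SFS with only WFS subsets
            elif c < beta:
                next_wfs.add(cand)  # WFS: its supersets may still hold NIFSs
            # beta <= c < alpha: not a WFS, so no superset can be an NIFS
        wfs = next_wfs
    return sorted(found, key=lambda t: (len(t), t))

X, Y = [0, 0, 1, 1], [0, 1, 0, 1]
Z = [x ^ y for x, y in zip(X, Y)]
cols = [X, Y, Z, list(X)]  # feature 3 (W) is a copy of X
print(find_nifs(cols, alpha=0.8, beta=0.25))  # [(0, 3), (0, 1, 2), (1, 2, 3)]
```

On this toy data the pair {X, W} is reported because W duplicates X, and both XOR triples {X, Y, Z} and {Y, Z, W} are found, while {X, Y, W} is never evaluated because its subset {X, W} is not a WFS.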

Discussion

The paper uses an entropy-based correlation measure (multi-information) to address the problem of finding non-redundant interacting feature subsets.

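(Cont.)

$$C(X_1, X_2) = H(X_1) + H(X_2) - H(X_1, X_2)$$

with $H(X_1) = -\left(\frac{9}{20}\log_2\frac{9}{20} + \frac{11}{20}\log_2\frac{11}{20}\right) \approx 0.992$, $H(X_2) = -\left(\frac{8}{20}\log_2\frac{8}{20} + \frac{12}{20}\log_2\frac{12}{20}\right) \approx 0.971$ and $H(X_1, X_2) \approx 1.933$, so

$$C(X_1, X_2) \approx 0.992 + 0.971 - 1.933 = 0.03 < \beta$$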

Let $\beta = 0.25$ and $\alpha = 0.8$.

$\{X_1, X_2, X_3\}$, $\{X_1, X_2, X_3, X_6\}$ and $\{X_7, X_8, X_9, X_{10}\}$ are SFSs:

$C(X_1, X_2, X_3) = 0.82$, $C(X_1, X_2, X_3, X_6) = 0.97$, $C(X_7, X_8, X_9, X_{10}) = 1.15$

$\{X_1, X_2\}$ and $\{X_7, X_8, X_9\}$ are WFSs:

$C(X_1, X_2) = 0.03$, $C(X_7, X_8, X_9) = 0.15$

WFSs are used to require that every proper subset of an NIFS be weakly correlated.

Adding proposition

Where,

Hamming distance