presentation-2002-06-20-PlanetLab

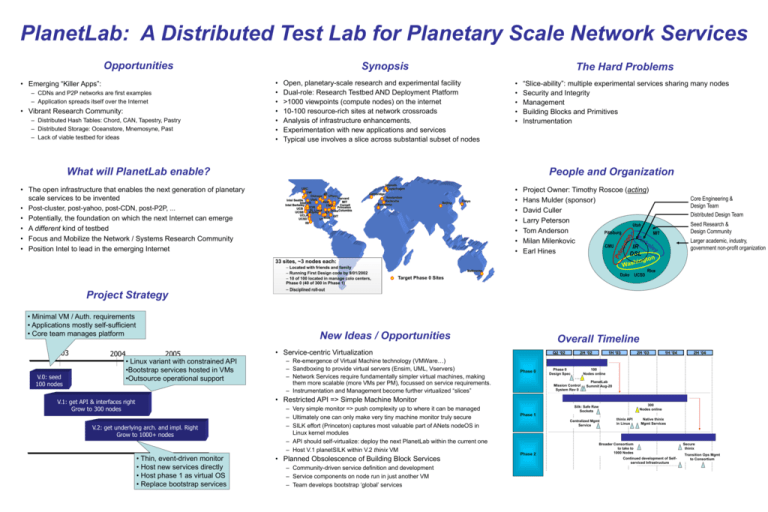

PlanetLab: A Distributed Test Lab for Planetary Scale Network Services

Opportunities
• Emerging “Killer Apps”:
  – CDNs and P2P networks are first examples
  – Application spreads itself over the Internet
• Vibrant Research Community:
  – Distributed Hash Tables: Chord, CAN, Tapestry, Pastry
  – Distributed Storage: OceanStore, Mnemosyne, PAST
  – Lack of viable testbed for ideas

Synopsis
• Open, planetary-scale research and experimental facility
• Dual-role: Research Testbed AND Deployment Platform
• >1000 viewpoints (compute nodes) on the Internet
• 10-100 resource-rich sites at network crossroads
• Analysis of infrastructure enhancements
• Experimentation with new applications and services
• Typical use involves a slice across a substantial subset of nodes

The Hard Problems
• “Slice-ability”: multiple experimental services sharing many nodes
• Security and Integrity
• Management
• Building Blocks and Primitives
• Instrumentation

What will PlanetLab enable?
• The open infrastructure that enables the next generation of planetary scale services to be invented
• Post-cluster, post-yahoo, post-CDN, post-P2P, ...
• Potentially, the foundation on which the next Internet can emerge
• A different kind of testbed
• Focus and Mobilize the Network / Systems Research Community
• Position Intel to lead in the emerging Internet

People and Organization
• Project Owner: Timothy Roscoe (acting)
• Hans Mulder (sponsor)
• David Culler
• Larry Peterson
• Tom Anderson
• Milan Milenkovic
• Earl Hines

[Organization diagram: Core Engineering & Design Team; Distributed Design Team; Seed Research & Design Community; Larger academic, industry, government non-profit organization. Other labels: Utah, Pittsburgh, CMU, IR, DSL, MIT]

Target Phase 0 Sites
33 sites, ~3 nodes each:
  – Located with friends and family
  – Running First Design code by 9/01/2002
  – 10 of 100 located in managed colo centers, Phase 0 (40 of 300 in Phase 1)
  – Disciplined roll-out

[Site map labels: UBC, UW, WI, Chicago, UPenn, Harvard, Utah, Intel Seattle, Intel, MIT, Intel OR, Intel Berkeley, Cornell, CMU, ICIR, Princeton, UCB, St. Louis, Columbia, Duke, UCSB, WashU, KY, UCLA, Rice, GIT, UCSD, UT, ISI, Uppsala, Copenhagen, Cambridge, Amsterdam, Karlsruhe, Barcelona, Beijing, Tokyo, Melbourne]

Project Strategy
[Time axis: 2003, 2004, 2005]

V.0: seed 100 nodes
• Minimal VM / Auth. requirements
• Applications mostly self-sufficient
• Core team manages platform

V.1: get API & interfaces right; grow to 300 nodes
• Linux variant with constrained API
• Bootstrap services hosted in VMs
• Outsource operational support

V.2: get underlying arch. and impl. right; grow to 1000+ nodes
• Thin, event-driven monitor
• Host new services directly
• Host phase 1 as virtual OS
• Replace bootstrap services

New Ideas / Opportunities
• Service-centric Virtualization
  – Re-emergence of Virtual Machine technology (VMWare…)
  – Sandboxing to provide virtual servers (Ensim, UML, Vservers)
  – Network Services require fundamentally simpler virtual machines, making them more scalable (more VMs per PM), focussed on service requirements
  – Instrumentation and Management become further virtualized “slices”
• Restricted API => Simple Machine Monitor
  – Very simple monitor => push complexity up to where it can be managed
  – Ultimately one can only make a very tiny machine monitor truly secure
  – SILK effort (Princeton) captures most valuable part of ANets nodeOS in Linux kernel modules
  – API should self-virtualize: deploy the next PlanetLab within the current one
  – Host V.1 planetSILK within V.2 thinix VM
• Planned Obsolescence of Building Block Services
  – Community-driven service definition and development
  – Service components on node run in just another VM
  – Team develops bootstrap ‘global’ services

Overall Timeline
[Timeline figure. Periods: Q2 '02, 2H '02, 1H '03, 2H '03, 1H '04, 2H '04. Phases: Phase 0, Phase 1, Phase 2.]
Milestones shown:
• Phase 0 Design Spec
• 100 Nodes online
• Mission Control System Rev 0
• PlanetLab Summit Aug-20
• 300 Nodes online
• Silk: Safe Raw Sockets
• Centralized Mgmt Service
• thinix API in Linux
• Native thinix Mgmt Services
• Broader Consortium to take to 1000 Nodes
• Continued development of Self-serviced Infrastructure
• Secure thinix
• Transition Ops Mgmt to Consortium
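
The “slice” idea named in the Synopsis and Hard Problems lists (multiple experimental services sharing many nodes, each service component running in its own VM) can be illustrated with a small Python sketch. This is only a toy model under stated assumptions: the `Node`, `Slice`, and `create_slice` names, the node naming scheme, and the VM labels are all hypothetical and do not come from any PlanetLab interface. The testbed size uses the poster's Phase 0 target of 33 sites with roughly 3 nodes each.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One compute node ("viewpoint"); holds one VM per slice placed on it."""
    name: str
    vms: dict = field(default_factory=dict)  # slice name -> hypothetical VM label


@dataclass
class Slice:
    """A service's share of the testbed: one VM on each node it spans."""
    name: str
    nodes: list = field(default_factory=list)


def create_slice(name, nodes):
    """Place one VM for slice `name` on every node in `nodes` (hypothetical helper)."""
    new_slice = Slice(name)
    for node in nodes:
        if name in node.vms:
            raise ValueError(f"slice {name!r} already present on {node.name}")
        node.vms[name] = f"vm:{name}@{node.name}"
        new_slice.nodes.append(node)
    return new_slice


# Assumed size from the poster's Phase 0 target: 33 sites, ~3 nodes each.
testbed = [Node(f"site{s:02d}-node{n}") for s in range(33) for n in range(3)]

# Two experimental services share part of the same node set via separate slices.
dht = create_slice("dht-experiment", testbed[:60])
cdn = create_slice("cdn-experiment", testbed[30:])

# Nodes that currently host a VM from both slices.
shared = [node.name for node in testbed if len(node.vms) == 2]
print(len(testbed), len(dht.nodes), len(cdn.nodes), len(shared))
```

In this model isolation between services is just separate dictionary entries; the poster's point is that a real node would need a machine monitor simple enough to enforce that separation securely.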