Tokenization


CSE 535

Information Retrieval

Chapter 2: Text Operations

Srihari-CSE535-Spring2008

Logical View

1. Description of content and semantics of document

2. Structure

3. Derivation of index terms:

(a) removal of stopwords

(b) stemming

(c) identification of nouns


Index Terms

Assigned terms

1. Manual indexing
   (a) Professional indexer
   (b) Authors
2. Classification efforts (Library of Congress Classification, ACM)
   (a) Coarseness: large collections?
   (b) Categories are mutually exclusive
3. Subjectivity, inter-indexer consistency (very low!)
4. Ontologies and classifications: limited appeal for retrieval

Extracted terms

1. Extraction from title, abstract or full-text
2. Methodology to extract must distinguish significant from non-significant terms
3. Controlled (limit to pre-defined dictionary) vs. non-controlled vocabulary (all terms as they are)
4. Controlled -> less noise, better performance?


Document Pre-Processing

Lexical analysis

digits, hyphens, punctuation marks

e.g. I’ll --> I will

Stopword elimination

filter out words with low discriminatory value

stopword list

reduces size of index by 40% or more

might miss documents, e.g. “to be or not to be”

Stemming

connecting, connected, etc.

Selection of index terms

e.g. select nouns only

Thesaurus construction

permits query expansion


Lexical Analysis

Converting stream of characters into stream of

words/tokens

more than just detecting spaces between words (see next slide)

Disregard numbers as index terms

too vague

perform date and number normalization

Hyphens

consistent policy on removal e.g. B-52

Punctuation marks

typically removed

sometimes problematic, e.g. variable names myclass.height

Orthographic variations typically disregarded


Tokenization

Input: “Friends, Romans and Countrymen”

Output: Tokens

Friends

Romans

Countrymen

Each such token is now a candidate for an index

entry, after further processing

Described below

But what are valid tokens to emit?
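A minimal sketch of the tokenization step above, assuming that a token is simply a maximal run of letters. The function name `tokenize` and the regular expression are illustrative choices, not something the slides prescribe. Note that this sketch also emits "and", which the slide output omits; presumably it is dropped by a later step such as stopword elimination.

```python
import re

def tokenize(text):
    """Return maximal runs of letters as tokens; punctuation, digits and spaces are dropped."""
    # [^\W\d_] matches any Unicode letter (a word character that is not a digit or underscore)
    return re.findall(r"[^\W\d_]+", text)

print(tokenize("Friends, Romans and Countrymen"))
print(tokenize("Finland's capital"))  # the apostrophe splits the word, one of the issues raised later
```

Each emitted token is only a candidate index entry; which tokens are actually valid is the question the following slides take up.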


Parsing a document

What format is it in?

pdf/word/excel/html?

What language is it in?

What character set is in use?

Each of these is a classification problem.

But there are complications …


Format/language stripping

Documents being indexed can include docs from

many different languages

A single index may have to contain terms of several languages.

Sometimes a document or its components can

contain multiple languages/formats

French email with a Portuguese pdf attachment.

What is a unit document?

An email?

With attachments?

An email with a zip containing documents?


Tokenization

Issues in tokenization:

Finland’s capital -> Finland? Finlands? Finland’s?

Hewlett-Packard -> Hewlett and Packard as two tokens?

San Francisco: one token or two? How do you decide it is one token?


Language issues

Accents: résumé vs. resume.

L'ensemble -> one token or two?

L ? L’ ? Le ?

How are your users likely to write their queries for these words?
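One common way to make résumé and resume match is to fold accents at both indexing and query time. The sketch below assumes that dropping diacritics is acceptable for the collection, which is a policy decision and not always true (in some languages the accent distinguishes words). `fold_accents` is a hypothetical helper name.

```python
import unicodedata

def fold_accents(term):
    """Strip combining diacritical marks, e.g. 'résumé' -> 'resume'."""
    # NFD splits an accented letter into a base letter plus combining marks
    decomposed = unicodedata.normalize("NFD", term)
    # keep only characters that are not combining marks
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

print(fold_accents("résumé"))
```

Whatever policy is chosen, it has to be applied identically to documents and queries, otherwise the two forms will still fail to match.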


Tokenization: language issues

Chinese and Japanese have no spaces

between words:

Not always guaranteed a unique tokenization

Further complicated in Japanese, with multiple alphabets intermingled

Dates/amounts in multiple formats

フォーチュン500社は情報不足のため時間あた$500K(約6,000万円)

(scripts appearing in this example: Katakana, Hiragana, Kanji, “Romaji”)

End-user can express query entirely in (say) Hiragana!


Normalization

“Right-to-left languages” like Hebrew and Arabic: can have “left-to-right” text interspersed.

Need to “normalize” indexed text as well as query terms into the same form

7月30日 vs. 7/30

Character-level alphabet detection and conversion

Tokenization not separable from this.

Sometimes ambiguous:

Morgen will ich in MIT … Is this German “mit”?


Punctuation

Ne’er: use language-specific, handcrafted “locale”

to normalize.

Which language?

Most common: detect/apply language at a pre-determined

granularity: doc/paragraph.

State-of-the-art: break up hyphenated sequence.

Phrase index?

U.S.A. vs. USA - use locale.

a.out


Numbers

3/12/91

Mar. 12, 1991

55 B.C.

B-52

My PGP key is 324a3df234cb23e

100.2.86.144

Generally, don’t index as text.

Will often index “meta-data” separately

Creation date, format, etc.


Case folding

Reduce all letters to lower case

exception: upper case (in mid-sentence?)

e.g., General Motors

Fed vs. fed

SAIL vs. sail


Thesauri and soundex

Handle synonyms and homonyms

Hand-constructed equivalence classes

e.g., car = automobile

your and you’re

Index such equivalences

When the document contains automobile, index it under car as

well (usually, also vice-versa)

Or expand query?

When the query contains automobile, look under car as well
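The two options above (index the equivalences, or expand the query) can both be driven by the same hand-constructed equivalence classes. The following is a sketch only: the class list, `build_lookup` and `expand` are illustrative names, and a real thesaurus would be far larger.

```python
# Hand-constructed equivalence classes (illustrative; only the slide's example is included)
EQUIVALENCE_CLASSES = [{"car", "automobile"}]

def build_lookup(classes):
    """Map every term to the full equivalence class it belongs to."""
    lookup = {}
    for cls in classes:
        for term in cls:
            lookup[term] = cls
    return lookup

LOOKUP = build_lookup(EQUIVALENCE_CLASSES)

def expand(terms):
    """Return the terms plus all members of their equivalence classes."""
    expanded = set()
    for term in terms:
        # terms without a class expand to themselves
        expanded |= LOOKUP.get(term, {term})
    return expanded

print(sorted(expand(["automobile", "repair"])))
```

Calling `expand` on a document's terms before indexing corresponds to the first option; calling it on the query terms at search time corresponds to the second, which keeps the index smaller at the cost of more work per query.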


Asymmetric query expansion


Lemmatization

Reduce inflectional/variant forms to base form

E.g.,

am, are, is -> be

car, cars, car's, cars' -> car

the boy's cars are different colors -> the boy car be different color
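As a toy illustration of the mapping above, lemmatization can be sketched as a table lookup from inflected form to base form. The table below is hypothetical and covers only the slide's examples; a real lemmatizer needs a full lexicon and morphological analysis.

```python
# Hypothetical lookup table covering only the examples on this slide
LEMMAS = {
    "am": "be", "are": "be", "is": "be",
    "cars": "car", "car's": "car", "cars'": "car",
    "boy's": "boy", "colors": "color",
}

def lemmatize(tokens):
    """Replace each token by its base form when the table knows it, else keep it (lowercased)."""
    return [LEMMAS.get(tok.lower(), tok.lower()) for tok in tokens]

print(" ".join(lemmatize("the boy's cars are different colors".split())))
```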


Dictionary entries – first cut

ensemble.french
時間.japanese
MIT.english
mit.german
guaranteed.english
entries.english
sometimes.english
tokenization.english

These may be grouped by language. More on this in ranking/query processing.


Perl program to Split Words

#!/usr/bin/perl
while (<>)
{   $line = $_;
    chomp($line);                      # store line (with trailing newline removed)
    if ($line =~ /[a-z]/)              # only process lines containing a lowercase letter
    {   # split on whitespace
        @words = split /\s+/, $line;   # @words is an ordinary array (list) of words
        # separately print all words in line
        foreach $term (@words)
        {   # lowercase
            $term =~ tr/A-Z/a-z/;
            # remove punctuation
            $term =~ s/\,|\.|\;|\:|\"|\'|\`|\?|\!//g;
            print $term . "\n";
        }
    }
}


Building Lexical Analysers

Finite state machines

recognizer for regular expressions

Lex (http://www.combo.org/lex_yacc_page/lex.html)

popular Unix utility

program generator designed for lexical processing of character input

streams

input: high-level, problem oriented specification for character string

matching

output: program in a general purpose language which recognizes

regular expressions

          +-------+
Source -> |  Lex  | -> yylex
          +-------+

          +-------+
Input ->  | yylex | -> Output
          +-------+


Writing lex source files

Format of a lex source file:

{definitions}

%%

{rules}

%%

{user subroutines}

invoking lex:

lex source
cc lex.yy.c -ll

•minimum lex program is %% (null program copies input to output)

•use rules to do any special transformations

Program to convert British spelling to American

colour printf("color");

mechanise printf("mechanize");

petrol printf("gas");


Sample tokenizer using lex

%{
int lineno = 1;   /* line counter used by line(); declaration assumed, not shown on the slide */
%}
letter      [a-zA-Z]
digit       [0-9]
id          {letter}({letter}|{digit})*
number      {digit}+
%%
    printf("\nTokenizer running -- ^D to exit\n");
^{id}                  {line();printf("<id>");}
{id}                   printf("<id>");
^{number}              {line();printf("<number>");}
{number}               printf("<number>");
^[ \t]+                line();
[ \t]+                 printf(" ");
[\n]                   ECHO;
^[^a-zA-Z0-9 \t\n]+    {line();printf("\\%s\\",yytext);}
[^a-zA-Z0-9 \t\n]+     printf("\\%s\\",yytext);
%%
line()
{
    printf("%4d: ",lineno++);
}

Sample input and output from above program

here are some ids

1: <id> <id> <id> <id>

now some numbers 123 4

2: <id> <id> <id> <number> <number>

ids with numbers x123 78xyz

3: <id> <id> <id> <id> <number><id>

garbage: @#$%^890abc

4: <id>\:\ \@#$%^\<number><id>


Stopword Elimination

1. Stopwords:

(a) Too frequent, P(ki) > 0.8

(b) Irrelevant in linguistic terms

i. Do not correspond to nouns, concepts etc

ii. Could however be vital in natural language processing

2. Removal from collection on two grounds:

(a) Retrieval efficiency

i. High frequency: no distinction between documents

ii. Useless for retrieval

(b) Storage efficiency

i. Zipf’s law

ii. Heaps’ law
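For reference, the two laws named under storage efficiency are commonly stated as below. The symbols are assumptions introduced here (they do not appear on the slide): f(r) is the collection frequency of the r-th most frequent term, V(n) is the vocabulary size after n tokens, and C, K and β are collection-dependent constants.

```latex
% Zipf's law: frequency is roughly inversely proportional to rank
f(r) \approx \frac{C}{r}

% Heaps' law: vocabulary grows sublinearly with collection size
V(n) = K \, n^{\beta}, \qquad 0 < \beta < 1
```

Zipf's law is why a short stoplist removes a large share of the postings: the few top-ranked terms account for a large fraction of all token occurrences.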


OAC Stopword list (~ 275 words)

The Complete OAC-Search Stopword List

a           did         has         nobody      somewhere   usually
about       do          have        noone       soon        very
all         does        having      nor         still       was
almost      doing       here        not         such        way
along       done        how         nothing     than        ways
already     during      however     now         that        we
also        each        i           of          the         well
although    either      if          once        their       went
always      enough      ii          one         theirs      were
am          etc         in          one's       then        what
among       even        including   only        there       when
an          ever        indeed      or          therefore   whenever
and         every       instead     other       these       where
any         everyone    into        others      they        wherever
anyone      everything  is          our         thing       whether
anything    for         it          ours        things      which


Stopword list contd.

anywhere    from        its         out         this        while
are         further     itself      over        those       who
as          gave        just        own         though      whoever
at          get         like        perhaps     through     why
away        getting     likely      rather      thus        will
because     give        may         really      to          with
between     given       maybe       same        together    within
beyond      giving      me          shall       too         without
both        go          might       should      toward      would
but         going       mine        simply      until       yes
by          gone        much        since       upon        yet
can         good        must        so          us          you
cannot      got         neither     some        use         your
come        great       never       someone     used        yours
could       had         no          something   uses        yourself
                                    sometimes   using


Implementing Stoplists

2 ways to filter stoplist words from input token

stream

examine tokenizer output and remove any stopwords

remove stopwords as part of lexical analysis (or tokenization) phase

Approach 1: filtering after tokenization

standard list search problem; every token must be looked up in

stoplist

binary search trees, hashing

hashing is preferred method

insert stoplist into a hash table

each token hashed into table

if location is empty, not a stopword; otherwise string

comparisons to see whether hashed value is really a stopword

Approach 2: removing stopwords during lexical analysis

much more efficient
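Approach 1 can be sketched with a hash-based set, which is what Python's `frozenset` provides: each token is hashed and, on a hit, compared against the stored string. The stoplist below is a small illustrative sample, not the OAC list, and `remove_stopwords` is a hypothetical helper name.

```python
# Small illustrative stoplist (not the full OAC list)
STOPLIST = frozenset(["a", "an", "and", "be", "not", "of", "or", "the", "to"])

def remove_stopwords(tokens, stoplist=STOPLIST):
    """Filter tokenizer output, keeping only tokens that are not in the stoplist."""
    # membership test on a frozenset is a hash lookup followed by a string comparison
    return [tok for tok in tokens if tok.lower() not in stoplist]

print(remove_stopwords(["Friends", "Romans", "and", "Countrymen"]))
print(remove_stopwords("to be or not to be".split()))  # nothing survives
```

The second call shows the risk noted earlier: a query made up entirely of stopwords is reduced to nothing, so documents containing that phrase can no longer be found.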


Stemming Algorithms

Designed to standardize morphological variations

Benefits of stemming

supposedly, to increase recall; could lead to lower precision

controversy surrounding benefits

web search engines do NOT perform stemming

Reduces size of index (clear benefit)

4 types of stemming algorithms

stem is the portion of a word which is left after the removal of its affixes

(prefixes and suffixes)

affix removal: most commonly used

table look-up: considerable storage space, all data not available

successor variety : (uses knowledge from structural linguistics, more

complex)

n-grams: more a term clustering procedure than a stemming algorithm

Porter Stemming algorithm

http://snowball.sourceforge.net/porter/stemmer.html


Stemming - example

Word is “duplicatable”

duplicatable -> duplicat (step 4) -> duplicate (step 1b) -> duplic (step 3)


Stemming

Reduce terms to their “roots” before indexing

language dependent

e.g., automate(s), automatic, automation all reduced to

automat.

Original text: for example compressed and compression are both accepted as equivalent to compress.

Stemmed text: for exampl compres and compres are both accept as equival to compres.

Srihari-CSE535-Spring2008

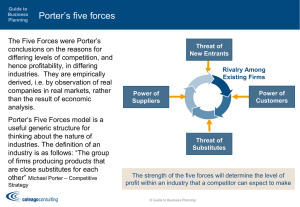

Porter’s algorithm

Commonest algorithm for stemming English

Conventions + 5 phases of reductions

phases applied sequentially

each phase consists of a set of commands

sample convention: Of the rules in a compound command, select the

one that applies to the longest suffix.


Typical rules in Porter

sses -> ss

ies -> i

ational -> ate

tional -> tion


Other stemmers

Other stemmers exist, e.g., Lovins stemmer

http://www.comp.lancs.ac.uk/computing/research/stemming/general/lovins.htm

Single-pass, longest suffix removal (about 250 rules)

Motivated by Linguistics as well as IR

Full morphological analysis - modest benefits for retrieval


Stemming variants


Porter Stemmer : Representing Strings

A consonant in a word is a letter other than A, E, I, O or U, and other than Y preceded by a consonant.

(The fact that the term ‘consonant’ is defined to some extent in terms of itself does not make it ambiguous.)

So in TOY the consonants are T and Y, and in SYZYGY they are S, Z and G.

If a letter is not a consonant it is a vowel.

A consonant will be denoted by c, a vowel by v. A list ccc... of length greater than 0 will be denoted by C,

and a list vvv... of length greater than 0 will be denoted by V. Any word, or part of a word,

therefore has one of the four forms:

CVCV ... C

CVCV ... V

VCVC ... C

VCVC ... V

These may all be represented by the single form

[C]VCVC ... [V]

where the square brackets denote arbitrary presence of their contents. Using (VC)^m to denote VC repeated m times, this may again be written as

[C](VC)^m[V].

m will be called the measure of any word or word part when represented in this form. The case m = 0 covers the null word.

Here are some examples:

m=0    TR, EE, TREE, Y, BY.
m=1    TROUBLE, OATS, TREES, IVY.
m=2    TROUBLES, PRIVATE, OATEN, ORRERY.
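The definitions above translate fairly directly into code. The sketch below follows the consonant definition given on this slide (Y is a consonant unless preceded by a consonant) and counts the VC pairs in the [C](VC)^m[V] form. The function names `is_consonant` and `measure` are illustrative.

```python
def is_consonant(word, i):
    """True if word[i] is a consonant under Porter's definition."""
    ch = word[i].lower()
    if ch in "aeiou":
        return False
    if ch == "y":
        # Y is a vowel when preceded by a consonant, otherwise a consonant
        return i == 0 or not is_consonant(word, i - 1)
    return True

def measure(word):
    """Return m, the number of VC sequences in the form [C](VC)^m[V]."""
    kinds = [is_consonant(word, i) for i in range(len(word))]
    # every vowel -> consonant transition closes one VC block
    return sum(1 for prev, cur in zip(kinds, kinds[1:]) if not prev and cur)

for w in ["TR", "EE", "TREE", "Y", "BY", "TROUBLE", "OATS", "TREES", "IVY",
          "TROUBLES", "PRIVATE", "OATEN", "ORRERY"]:
    print(w, measure(w))
```

Counting transitions works because consecutive vowels collapse into one V and consecutive consonants into one C, so only the boundaries between runs matter.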


Porter Stemmer - Types of Rules

The rules for removing a suffix will be given in the form

(condition) S1 -> S2

This means that if a word ends with the suffix S1, and the stem before S1 satisfies the given condition, S1 is replaced by S2. The condition is usually given in terms of m, e.g.

(m > 1) EMENT ->

Here S1 is ‘EMENT’ and S2 is null. This would map REPLACEMENT to REPLAC, since REPLAC is a word part for which m = 2.

The ‘condition’ part may also contain the following:

*S  - the stem ends with S (and similarly for the other letters).
*v* - the stem contains a vowel.
*d  - the stem ends with a double consonant (e.g. -TT, -SS).
*o  - the stem ends cvc, where the second c is not W, X or Y (e.g. -WIL, -HOP).

And the condition part may also contain expressions with and, or and not, so that

(m>1 and (*S or *T))

tests for a stem with m>1 ending in S or T, while

(*d and not (*L or *S or *Z))

tests for a stem ending with a double consonant other than L, S or Z. Elaborate conditions like this are required only rarely.

In a set of rules written beneath each other, only one is obeyed, and this will be the one with the longest matching S1 for the given word. For example, with

SSES -> SS
IES  -> I
SS   -> SS
S    ->

(here the conditions are all null) CARESSES maps to CARESS since SSES is the longest match for S1.

Equally CARESS maps to CARESS (S1=‘SS’) and CARES to CARE (S1=‘S’).
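The longest-match convention can be sketched as follows for a rule set whose conditions are all null, such as the four rules above. The rule list and the helper `apply_longest_match` are illustrative; rules with conditions on the stem would need an extra check before the replacement is made.

```python
# (suffix S1, replacement S2) pairs; all conditions are null for this rule set
STEP_1A_RULES = [("sses", "ss"), ("ies", "i"), ("ss", "ss"), ("s", "")]

def apply_longest_match(word, rules):
    """Apply only the rule whose S1 is the longest suffix of word; at most one rule fires."""
    for suffix, replacement in sorted(rules, key=lambda r: len(r[0]), reverse=True):
        if word.endswith(suffix):
            return word[: len(word) - len(suffix)] + replacement
    return word  # no rule matched

for w in ["caresses", "caress", "cares", "ponies", "cats"]:
    print(w, "->", apply_longest_match(w, STEP_1A_RULES))
```

The SS -> SS rule looks like a no-op, but it is what stops the shorter S -> rule from firing on words such as caress.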


Porter Stemmer - Step 1a

In the rules below, examples of their application, successful or otherwise, are given

on the right in lower case. Only one rule from each step can fire. The algorithm now follows:

Step 1a

SSES -> SS      caresses -> caress
IES  -> I       ponies   -> poni
                ties     -> ti
SS   -> SS      caress   -> caress
S    ->         cats     -> cat


Porter Stemmer- Step 1b

Step 1b

(m>0) EED -> EE     feed      -> feed
                    agreed    -> agree
(*v*) ED  ->        plastered -> plaster
                    bled      -> bled
(*v*) ING ->        motoring  -> motor
                    sing      -> sing

If the second or third of the rules in Step 1b is successful, the following is done:

AT -> ATE                                       conflat(ed) -> conflate
BL -> BLE                                       troubl(ed)  -> trouble
IZ -> IZE                                       siz(ed)     -> size
(*d and not (*L or *S or *Z)) -> single letter  hopp(ing)   -> hop
                                                tann(ed)    -> tan
                                                fall(ing)   -> fall
                                                hiss(ing)   -> hiss
                                                fizz(ed)    -> fizz
(m=1 and *o) -> E                               fail(ing)   -> fail
                                                fil(ing)    -> file

The rule to map to a single letter causes the removal of one of the double letter pair. The -E is put back on -AT, -BL and -IZ, so that the suffixes -ATE, -BLE and -IZE can be recognised later. This E may be removed in step 4.
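Step 1b, including its follow-up rules, can be sketched as below. This is an illustrative reading of the rules on this slide, not a reference implementation: it assumes lowercase input, and the helper names (`has_vowel`, `ends_double_consonant`, `ends_cvc`, `tidy`, `step_1b`) are made up for the example.

```python
def is_consonant(word, i):
    ch = word[i]
    if ch in "aeiou":
        return False
    if ch == "y":  # Y is a vowel only when preceded by a consonant
        return i == 0 or not is_consonant(word, i - 1)
    return True

def measure(stem):
    kinds = [is_consonant(stem, i) for i in range(len(stem))]
    return sum(1 for a, b in zip(kinds, kinds[1:]) if not a and b)

def has_vowel(stem):               # condition *v*
    return any(not is_consonant(stem, i) for i in range(len(stem)))

def ends_double_consonant(stem):   # condition *d
    return len(stem) >= 2 and stem[-1] == stem[-2] and is_consonant(stem, len(stem) - 1)

def ends_cvc(stem):                # condition *o
    n = len(stem)
    return (n >= 3 and is_consonant(stem, n - 3) and not is_consonant(stem, n - 2)
            and is_consonant(stem, n - 1) and stem[-1] not in "wxy")

def tidy(stem):
    """Follow-up rules applied after ED or ING has been removed."""
    if stem.endswith(("at", "bl", "iz")):
        return stem + "e"
    if ends_double_consonant(stem) and stem[-1] not in "lsz":
        return stem[:-1]           # drop one letter of the double pair
    if measure(stem) == 1 and ends_cvc(stem):
        return stem + "e"
    return stem

def step_1b(word):
    if word.endswith("eed"):       # longest suffix is tested first
        stem = word[:-3]
        return stem + "ee" if measure(stem) > 0 else word
    for suffix in ("ing", "ed"):
        if word.endswith(suffix):
            stem = word[: -len(suffix)]
            return tidy(stem) if has_vowel(stem) else word
    return word

for w in ["feed", "agreed", "plastered", "bled", "motoring", "sing",
          "conflated", "hopping", "falling", "failing", "filing"]:
    print(w, "->", step_1b(w))
```

Testing EED before ED implements the longest-match convention: a word such as feed matches EED, fails the m>0 condition, and is left unchanged instead of falling through to the ED rule.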


Porter Stemmer- Step 1c

Step 1c

(*v*) Y -> I    happy -> happi
                sky   -> sky

Step 1 deals with plurals and past participles. The subsequent steps are much more straightforward.


Porter Stemmer - Step 2

(m>0) ATIONAL -> ATE     relational     -> relate
(m>0) TIONAL  -> TION    conditional    -> condition
                         rational       -> rational
(m>0) ENCI    -> ENCE    valenci        -> valence
(m>0) ANCI    -> ANCE    hesitanci      -> hesitance
(m>0) IZER    -> IZE     digitizer      -> digitize
(m>0) ABLI    -> ABLE    conformabli    -> conformable
(m>0) ALLI    -> AL      radicalli      -> radical
(m>0) ENTLI   -> ENT     differentli    -> different
(m>0) ELI     -> E       vileli         -> vile
(m>0) OUSLI   -> OUS     analogousli    -> analogous
(m>0) IZATION -> IZE     vietnamization -> vietnamize
(m>0) ATION   -> ATE     predication    -> predicate
(m>0) ATOR    -> ATE     operator       -> operate
(m>0) ALISM   -> AL      feudalism      -> feudal
(m>0) IVENESS -> IVE     decisiveness   -> decisive
(m>0) FULNESS -> FUL     hopefulness    -> hopeful
(m>0) OUSNESS -> OUS     callousness    -> callous
(m>0) ALITI   -> AL      formaliti      -> formal
(m>0) IVITI   -> IVE     sensitiviti    -> sensitive
(m>0) BILITI  -> BLE     sensibiliti    -> sensible

The test for the string S1 can be made fast by doing a program switch on the penultimate letter of the word being tested. This gives a fairly even breakdown of the possible values of the string S1. It will be seen in fact that the S1-strings in step 2 are presented here in the alphabetical order of their penultimate letter.


Porter Stemmer - Step 3

(m>0) ICATE -> IC    triplicate  -> triplic
(m>0) ATIVE ->       formative   -> form
(m>0) ALIZE -> AL    formalize   -> formal
(m>0) ICITI -> IC    electriciti -> electric
(m>0) ICAL  -> IC    electrical  -> electric
(m>0) FUL   ->       hopeful     -> hope
(m>0) NESS  ->       goodness    -> good


Porter Stemmer- Step 4

(m>1) AL    ->                  revival     -> reviv
(m>1) ANCE  ->                  allowance   -> allow
(m>1) ENCE  ->                  inference   -> infer
(m>1) ER    ->                  airliner    -> airlin
(m>1) IC    ->                  gyroscopic  -> gyroscop
(m>1) ABLE  ->                  adjustable  -> adjust
(m>1) IBLE  ->                  defensible  -> defens
(m>1) ANT   ->                  irritant    -> irrit
(m>1) EMENT ->                  replacement -> replac
(m>1) MENT  ->                  adjustment  -> adjust
(m>1) ENT   ->                  dependent   -> depend
(m>1 and (*S or *T)) ION ->     adoption    -> adopt
(m>1) OU    ->                  homologou   -> homolog
(m>1) ISM   ->                  communism   -> commun
(m>1) ATE   ->                  activate    -> activ
(m>1) ITI   ->                  angulariti  -> angular
(m>1) OUS   ->                  homologous  -> homolog
(m>1) IVE   ->                  effective   -> effect
(m>1) IZE   ->                  bowdlerize  -> bowdler

The suffixes are now removed. All that remains is a little tidying up.


Porter Stemmer - Step 5

Step 5a

(m>1) E ->                  probate -> probat
                            rate    -> rate
(m=1 and not *o) E ->       cease   -> ceas

Step 5b

(m > 1 and *d and *L) -> single letter
                            controll -> control
                            roll     -> roll


Thesauri

Thesaurus

precompiled list of words in a given domain

for each word above, a list of related words

computer-aided instruction

computer applications

educational computing

Applications of Thesaurus in IR

provides a standard vocabulary for indexing and searching

medical literature querying

proper query formulation

classified hierarchies that allow broadening/narrowing of current

query

e.g. Yahoo

Components of a thesaurus

index terms

relationship among terms

layout design for term relationships
