notes

advertisement

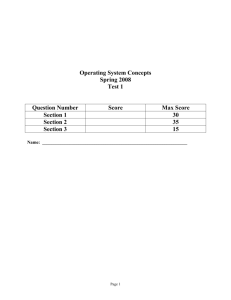

CS 346 – Chapter 1

• Operating system – definition

• Responsibilities

• What we find in computer systems

• Review of

– Instruction execution

– Compile – link – load – execute

• Kernel versus user mode

Questions

• What is the purpose of a computer?

• What if all computers became fried or infected?

• How did Furman function before 1967 (the year we bought our first computer)?

• Why do people not like computers?

Definition

• How do you define something? Possible approaches:

– What it consists of

– What it does (a functional definition) – purpose

– What if we didn’t have it

– What else it’s similar to

• OS = set of software between user and HW

– Provides “environment” for user to work

– Convenience and efficiency

– Manage the HW / resources

– Ensure correct and appropriate operation of machine

• 2 Kinds of software: application and system

– Distinction is blurry; no universal definition for “system”

Some responsibilities

• Can glean from table of contents

– Book compares an OS to a government

– Don’t worry about details for now

• Security: logins

• Manage resources

– Correct and efficient use of CPU

– Disk: “memory management”

– network access

• File management

• I/O, terminal, devices

• Kernel vs. shell

Big picture

• Computer system has: CPU, main memory, disk, I/O

devices

• Turn on computer:

– Bootstrap program already in ROM comes to life

– Tells where to find the OS on disk. Load the OS.

– Transfer control to OS once loaded.

• From time to time, control is “interrupted”

– Examples?

• Memory hierarchy

– Several levels of memory in use from registers to tape

– Closer to CPU: smaller, faster, more expensive

– OS must decide who belongs where

Big picture (2)

• von Neumann program execution

– Fetch, decode, execute, data access, write result

– OS usually not involved unless problem

• Compiling

– 1 source file → 1 object file

– 1 entire program → 1 executable file

– “link” object files to produce executable

– Code may be optimized to please the OS

– When you invoke a program, OS calls a “loader” program that precedes execution

• I/O

– Each device has a controller, a circuit containing registers and a

memory buffer

– Each controller is managed by a device driver (software)

2 modes

• When CPU is executing instructions, nice to know if the instruction is on behalf of the OS

• OS should have the highest privileges → kernel mode

– Some operations only available to OS

– Examples?

• Users should have some restriction → user mode

• A hardware bit can be set if program is running in kernel mode

• Sometimes, the user needs OS to help out, so we perform a system call

Management topics

• What did we ask the OS to do during lab?

• File system

• Program vs. process

– “job” and “task” are synonyms of process

– Starting, destroying processes

– Process communication

– Make sure 2 processes don’t interfere with each other

• Multiprogramming

– CPU should never be idle

– Multitasking: give each job a short quantum of time to take turns

– If a job needs I/O, give CPU to another job

More topics

• Scheduling: deciding the order to do the jobs

– Detect system “load”

– In a real-time system, jobs have deadlines. OS should know

worst-case execution time of jobs

• Memory hierarchy

– Higher levels “bank” the lower levels

– OS manages RAM/disk decision

– Virtual memory: actual size of RAM is invisible to user. Allow

programmer to think memory is huge

– Allocate and deallocate heap objects

– Schedule disk ops and backups of data

CS 346 – Chapter 2

• OS services

– OS user interface

– System calls

– System programs

• How to make an OS

– Implementation

– Structure

– Virtual machines

• Commitment

– For next day, please finish chapter 2.

OS services

2 types

• For the user’s convenience

– Shell

– Running user programs

– Doing I/O

– File system

– Detecting problems

• Internal/support

– Allocating resources

– System security

– Accounting

• Infamous KGB spy ring uncovered due to discrepancy in

billing of computer time at Berkeley lab

User interface

• Command line = shell program

– Parses commands from user

– Supports redirection of I/O (stdin, stdout, stderr)

• GUI

– Pioneered by Xerox PARC, made famous by Mac

– Utilizes additional input devices such as mouse

– Icons or hotspots on screen

• Hybrid approach

– GUI allowing several terminal windows

– Window manager

System calls

• “an interface for accessing an OS service within a

computer program”

• A little lower level than an API, but similar

• Looks like a function call

• Examples

– Performing any I/O request, because these are not defined by

the programming language itself

e.g. read(file_ptr, str_buf_ptr, 80);

– assembly languages typically have “syscall” instruction.

When is it used?

How?

• If many parameters, they may be put on runtime stack

Types of system calls

• Controlling a process

• File management

• Device management

• Information

• Communication between processes

• What are some specific examples you’d expect to find?

System programs

• Also called system utilities

• Distinction between “system call” and “system program”

• Examples

– Shell commands like ls, lp, ps, top

– Text editors, compilers

– Communication: e-mail, talk, ftp

– Miscellaneous: cal, fortune

– What are your favorites?

• Higher level software includes:

– Spreadsheets, text formatters, etc.

– But, boundary between “application” and “utility” software is

blurry. A text formatter is a type of compiler!

OS design ideas

• An OS is a big program, so we should consider

principles of systems analysis and software engineering

• In design phase, need to consider policies and

mechanisms

– Policy = What should we do; should we do X

– Mechanism = how to do X

– Example: a way to schedule jobs (policy)

versus: what input needed to produce schedule, how schedule

decision is specified (mechanism)

Implementation

• Originally in assembly

• Now usually in C (C++ if object-oriented)

• Still, some code needs to be in assembly

– Some specific device driver routines

– Saving/restoring registers

• We’d like to use HLL as much as possible – why?

• Today’s compilers produce very efficient code – what

does this tell us?

• How to improve performance of OS:

– More efficient data structure, algorithm

– Exploit HW and memory hierarchy

– Pay attention to CPU scheduling and memory management

Kernel structure

• Possible to implement minimal OS with a few thousand lines of code → monolithic kernel

– Modularize like any other large program

– After about 10k loc, difficult to prove correctness

• Layered approach to managing the complexity

– Layer 0 is the HW

– Layer n is the user interface

– Each layer makes use of routines and d.s. defined at lower

levels

– # layers difficult to predict: many subtle dependencies

– Many layers → lots of internal system call overhead

Kernel structure (2)

• Microkernel

– Kernel = minimal support for processes and memory management

– (The rest of the OS is at user level)

– Adding OS services doesn’t require changing kernel, so easier to modify OS

– The kernel must manage communication between user program and appropriate OS services (e.g. file system)

– Microsoft gave up on microkernel idea for Windows XP

• OO Module approach

– Components isolated (OO information hiding)

– Used by Linux, Solaris

– Like a layered approach with just 2 layers, a core and everything

else

Virtual machine

• How to make 1 machine behave like many

• Give users the illusion they have access to real HW,

distinct from other users

• Figure 2.17 levels of abstraction:

– Processes / kernels / VM’s / VM implementations / host HW

As opposed to:

– Processes / kernels / different machines

• Why do it?

– To test multiple OS’s on the same HW platform

– Host machine’s real HW protected from virus in a VM bubble

VM implementation

• It’s hard!

– Need to painstakingly replicate every HW detail, to avoid giving

away the illusion

– Need to keep track of what each guest OS is doing (whether it’s

in kernel or user mode)

– Each VM must interpret its assembly code – why? Is this a

problem?

• Very similar concept: simulation

– Often, all we are interested in is changing the HW, not the OS;

for example, adding/eliminating the data cache

– Write a program that simulates every HW feature, providing the

OS with the expected behavior

CS 346 – Chapter 3

• What is a process

• Scheduling and life cycle

• Creation

• Termination

• Interprocess communication: purpose, how to do it

• Client-server: sockets, remote procedure call

• Commitment

– Please read through section 3.4 by Wednesday and

3.6 by Friday.

Process

• Goal: to be able to run > 1 program concurrently

– We don’t have to finish one before starting another

– Concurrent doesn’t mean parallel

– CPU often switches from one job to another

• Process = a program that has started but hasn’t yet

finished

• States:

– New, Ready, Running, Waiting, Terminated

– What transitions exist between these states?

Contents

• A process consists of:

– Code (“text” section)

– Program Counter

– Data section

– Run-time stack

– Heap allocated memory

• A process is represented in kernel by a Process Control Block, containing:

– State

– Program counter

– Register values

– Scheduling info (e.g. priority)

– Memory info (e.g. bounds)

– Accounting (e.g. time)

– I/O info (e.g. which files open)

– What is not stored here?

Scheduling

• Typically many processes are ready, but only 1 can run

at a time.

– Need to choose who’s next from ready queue

– Can’t stay running for too long!

– At some point, process needs to be switched out temporarily

back to the ready queue (Fig. 3.4)

• What happens to a process ? (Fig 3.7)

– New process enters ready queue. At some point it can run.

– After running awhile, a few possibilities:

1. Time quantum expires. Go back to ready queue.

2. Need I/O. Go to I/O queue, do I/O, re-enter ready queue!

3. Interrupted. Handle interrupt, and go to ready queue.

– Context switch overhead

Creation

• Processes can spawn other processes.

– Parent / child relationship

– Tree

– Book shows Solaris example:

In the beginning, there was sched, which spawned init (the

ancestor of all user processes), the memory manager, and the

file manager.

– Process ID’s are unique integers (up to some max e.g. 2^15)

• What should happen when process created?

– OS policy on what resources for baby: system default, or copy

parent’s capabilities, or specify at its creation

– What program does child run? Same as parent, or new one?

– Does parent continue to execute, or does it wait (i.e. block)?

How to create

• Unix procedure is typical…

• Parent calls fork( )

– This creates duplicate process.

– fork( ) returns 0 for child; positive number for parent; negative

number if error. (How could we have error?)

• Next, we call exec( ) to tell child what program to run.

– Do this immediately after fork

– Do inside the if clause that corresponds to case that we are

inside the child!

• Parent can call wait( ) to go to sleep.

– Not executing, not in ready queue

Termination

• Assembly programs end with a system call to exit( ).

– An int value is returned to parent’s wait( ) function. This lets

parent know which child has just finished.

• Or, process can be killed prematurely

– Why?

– Only the parent (or ancestor) can kill another process – why this

restriction?

• When a process dies, 2 possible policies:

– OS can kill all descendants (rare)

– Allow descendants to continue, but set parent of dead process to

init

IPC Examples

• Allowing concurrent access to information

– Producer / consumer is a common paradigm

• Distributing work, as long as spare resources (e.g. CPU)

are around

• A program may need result of another program

– IPC more efficient than running serially and redirecting I/O

– A compiler may need result of timing analysis in order to know

which optimizations to perform

• Note: ease of programming is based on what OS and

programming language allow

2 techniques

• Shared memory

– 2 processes have access to an overlapping area of memory

– Conceptually easier to learn, but be careful!

– OS overhead only at the beginning: get kernel permission to set

up shared region

• Message passing

– Uses system calls, with kernel as middle man – easier to code

correctly

– System call overhead for every message → we’d want amount of data to be small

– Definitely better when processes on different machines

• Often, both approaches are possible on the system

Shared memory

• Usually forbidden to touch another process’ memory

area

• Each program must be written so that the shared

memory request is explicit (via system call)

– An overlapping “buffer” can be set up. Range of addresses. But

there is no need for the buffer to be contiguous in memory with

the existing processes.

– Then, the buffer can be treated like an array (of char)

• Making use of the buffer (p. 122)

– Insert( ) function

– Remove( ) function

– Circular array… does the code make sense to you?
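The circular-array idea behind insert( ) and remove( ) can be sketched in Java as follows. This is not the book's code from p. 122: the class name CircularBuffer, the fixed capacity, and the choice to return false / null when the buffer is full / empty are assumptions made for illustration. It is single-threaded and has no synchronization.

```java
// Hypothetical single-threaded sketch of a circular (ring) buffer of char.
class CircularBuffer {
    private final char[] buffer;
    private int in = 0;     // index of the next free slot
    private int out = 0;    // index of the oldest stored item
    private int count = 0;  // number of items currently stored

    CircularBuffer(int size) {
        buffer = new char[size];
    }

    // Returns false (instead of overwriting data) when the buffer is full.
    boolean insert(char item) {
        if (count == buffer.length) return false;
        buffer[in] = item;
        in = (in + 1) % buffer.length;   // wrap around to slot 0
        count++;
        return true;
    }

    // Returns null when the buffer is empty.
    Character remove() {
        if (count == 0) return null;
        char item = buffer[out];
        out = (out + 1) % buffer.length; // wrap around to slot 0
        count--;
        return item;
    }

    public static void main(String[] args) {
        CircularBuffer b = new CircularBuffer(2);
        b.insert('a');
        b.insert('b');
        System.out.println(b.insert('c')); // buffer is full, so this prints false
        System.out.println(b.remove());    // oldest item comes out first: a
    }
}
```

The modulo step is what makes the array "circular": after the last slot, both indices return to slot 0.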

Shared memory (2)

• What could go wrong?... How to fix?

• Trying to insert into full buffer

• Trying to remove from empty buffer

• Sound familiar?

• Also: both trying to insert. Is this a problem?

Message passing

• Make continual use of system calls:

– Send( )

– Receive( )

• Direct or indirect communication?

– Direct: send (process_num, the_message)

Hard coding the process we’re talking to

– Indirect: send (mailbox_num, the_message)

Assuming we’ve set up a “mailbox” inside the kernel

• Flexibility: can have a communication link with more

than 2 processes. e.g. 2 producers and 1 consumer

• Design issues in case we have multiple consumers

– We could forbid it

– Could be first-come-first-serve

Synchronization

• What should we do when we send/receive a message?

• Block (or “wait”):

– Go to sleep until counterpart acts.

– If you send, sleep until received by process or mailbox.

– If you receive, block until a message available. How do we

know?

• Don’t block

– Just keep executing. If they drop the baton it’s their fault.

– In case of receive( ), return null if there is no message (where do

we look?)

• We may need some queue of messages (set up in

kernel) so we don’t lose messages!

Buffer messages

• The message passing may be direct (to another specific

process) or indirect (to a mailbox – no process explicitly

stated in the call).

• But either way, we don’t want to lose messages.

• Zero capacity: sender blocks until recipient gets

message

• Bounded capacity (common choice): Sender blocks if

the buffer is full.

• Unbounded capacity: Assume buffer is infinite. Never

block when you send.

Socket

• Can be used as an “endpoint of communication”

• Attach to a (software) port on a “host” computer

connected to the Internet

– 156.143.143.132:1625 means port # 1625 on the machine

whose IP number is 156.143.143.132

– Port numbers < 1024 are pre-assigned for “well known” tasks.

For example, port 80 is for a Web server.

• With a pair of sockets, you can communicate between

them.

• Generally used for remote I/O

Implementation

• Syntax depends on language.

• Server

– Create socket object on some local port.

– Wait for client to call. Accept connection.

– Set up output stream for client.

– Write data to client.

– Close client connection.

– Go back to wait

• Client

– Create socket object to connect to server

– Read input analogous to file input or stdin

– Close connection to server
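The server and client steps above might look like this in Java. It is a minimal sketch under stated assumptions: the class name GreetingDemo, the message text, and running both ends in one program over the loopback address are illustrative choices, and the server here handles a single client instead of going back to wait.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

class GreetingDemo {
    // Server side: accept one client, write one line, close the connection.
    // A real server would loop and go back to accept().
    static void serveOnce(ServerSocket server) throws IOException {
        try (Socket client = server.accept();
             PrintWriter out = new PrintWriter(client.getOutputStream(), true)) {
            out.println("hello from server");
        }
    }

    // Client side: connect, then read a line much like file input or stdin.
    static String readGreeting(int port) throws IOException {
        try (Socket sock = new Socket(InetAddress.getLoopbackAddress(), port);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(sock.getInputStream()))) {
            return in.readLine();
        }
    }

    // Runs server and client in one process and returns what the client read.
    static String roundTrip() {
        try (ServerSocket server = new ServerSocket(0)) { // 0 = any free port
            Thread t = new Thread(() -> {
                try {
                    serveOnce(server);
                } catch (IOException e) {
                    e.printStackTrace();
                }
            });
            t.start();
            String line = readGreeting(server.getLocalPort());
            t.join();
            return line;
        } catch (IOException | InterruptedException e) {
            throw new RuntimeException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip());
    }
}
```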

Remote procedure call

• Useful application of inter-process communication (the

message-passing version)

• Systematic way to make procedure call between processes

on the network

– Reduce implementation details for user

• Client wants to call foreign function with some parameters

– Tell kernel server’s IP number and function name

– 1st message: ask server which port corresponds with function

– 2nd message: sending function call with “marshalled” parameters

– Server daemon listens for function call request, and processes

– Client receives return value

• OS should ensure function call successful (once)

CS 346 – Chapter 4

• Threads

– How they differ from processes

– Definition, purpose

Threads of the same process share: code, data, open files

– Types

– Support by kernel and programming language

– Issues such as signals

– User thread implementation: C and Java

• Commitment

– For next day, please read chapter 4

Thread intro

• Also called “lightweight process”

• One process may have multiple threads of execution

• Allows a process to do 2+ things concurrently

– Games

– Simulations

• Even better: if you have 2+ CPU’s, you can execute in

parallel

• Multicore architecture → demand for multithreaded applications for speedup

• More efficient than using several concurrent processes

Threads

• A process contains:

– Code, data, open files, registers, memory usage (stack + heap),

program counter

• Threads of the same process share

– Code, data, open files

• What is unique to each thread?

• Can you think of example of a computational algorithm

where threads would be a great idea?

– Splitting up the code

– Splitting up the data

• Any disadvantages?

2 types of threads

• User threads

– Can be managed / controlled by user

– Need existing programming language API support:

POSIX threads in C

Java threads

• Kernel threads

– Management done by the kernel

• Possible scenarios

– OS doesn’t support threading

– OS supports threads, but only at kernel level – you have no direct control, except possibly by system call

– User can create thread objects and manipulate them. These

objects map to “real” kernel threads.

Multithreading models

• Many-to-one: User can create several thread objects,

but in reality the kernel only gives you one.

Multithreading is an illusion

• One-to-one: Each user thread maps to 1 real kernel

thread. Great but costly to OS. There may be a hard

limit to # of live threads.

• Many-to-many: A happy compromise. We have

multithreading, but the number of true threads may be

less than # of thread objects we created.

– A variant of this model, “two-level”, allows user to designate a thread as being bound to one kernel thread.

Thread issues

• What should OS do if a thread calls fork( )?

– Can duplicate just the calling thread

– Can duplicate all threads in the process

• exec ( ) is designed to replace entire current process

• Cancellation

– kill thread before it’s finished

– “Asynchronous cancellation” = kill now. But it may be in the

middle of an update, or it may have acquired resources.

You may have noticed that Windows sometimes won’t let you

delete a file because it thinks it’s still open.

– “Deferred cancellation”. Thread periodically checks to see if it’s

time to quit. Graceful exit.

Signals

• Reminiscent of exception in Java

• Occurs when OS needs to send message to a process

– Some defined event generates a signal

– OS delivers signal

– Recipient must handle the signal.

Kernel defines a default handler – e.g. kill the process.

Or, user can write specific handler.

• Types of signals

– Synchronous: something in this program caused the event

– Asynchronous: event was external to my program

Signals (2)

• But what if process has multiple threads? Who gets the signal? For a given signal, choose among 4 possibilities:

– Deliver signal to the 1 appropriate thread

– Deliver signal to all threads

– Have the signal indicate which threads to contact

– Designate a thread to receive all signals

• Rules of thumb…

– Synchronous event → just deliver to 1 thread

– User hit ctrl-C → kill all threads

Thread pool

• Like a motor pool

• When process starts, can create a set of threads that sit

around and wait for work

• Motivation

– overhead in creating/destroying

– We can set a bound for total number of threads, and avoid

overloading system later

• How many threads?

– User can specify

– Kernel can base on available resources (memory and # CPU’s)

– Can dynamically change if necessary
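A thread pool can be sketched with Java's standard ExecutorService. This is an illustration, not code from the book: the class name ThreadPoolDemo, the summing task, and the way the range is split into chunks are assumptions. The pool size is the bound on the number of worker threads; the number of tasks can be larger.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ThreadPoolDemo {
    // Sums 1..n by splitting the range into chunks that run on a fixed pool.
    // 'tasks' must be positive; 'poolSize' bounds the number of live workers.
    static long parallelSum(int n, int tasks, int poolSize) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            int chunk = (n + tasks - 1) / tasks;      // ceiling of n / tasks
            for (int start = 1; start <= n; start += chunk) {
                final int lo = start;
                final int hi = Math.min(n, start + chunk - 1);
                // Each submitted task waits in a queue until a worker is free.
                parts.add(pool.submit(() -> {
                    long s = 0;
                    for (int i = lo; i <= hi; i++) s += i;
                    return s;
                }));
            }
            long total = 0;
            for (Future<Long> f : parts) total += f.get(); // wait for each part
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();                          // let the workers exit
        }
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(100, 4, 2));   // 1 + 2 + ... + 100 = 5050
    }
}
```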

POSIX threads

• aka “Pthreads”

• C language

• Commonly seen in UNIX-style environments:

– Mac OS, Linux, Solaris

• POSIX is a set of standards for OS system calls

– Thread support is just one aspect

• POSIX provides an API for thread creation and

synchronization

• API specifies behavior of thread functionality, but not the

low-level implementation

Pthread functions

• pthread_attr_init

– Initialize thread attributes, such as: schedule priority, stack size, state

• pthread_create

– Start new thread inside the process.

– We specify what function to call when thread starts, along with

the necessary parameter

– The thread is due to terminate when its function returns

• pthread_join

– Allows us to wait for a child thread to finish

Example code

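#include <pthread.h>
#include <stdio.h>

int sum;                     /* global: shared by all threads */

void *fun(void *param);      /* function the new thread will run */

int main(int argc, char *argv[]) {
    pthread_t tid;           /* thread identifier */
    pthread_attr_t attr;     /* thread attributes */
    pthread_attr_init(&attr);
    pthread_create(&tid, &attr, fun, argv[1]);
    pthread_join(tid, NULL); /* wait for the thread to finish */
    printf("%d\n", sum);
    return 0;
}

void *fun(void *param)
{
    // compute a sum:
    // store in global
    // variable
    ...
}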

Java threads

• Managed by the Java virtual machine

• Two ways to create threads

1. Create a class that extends the Thread class

– Put code inside public void run( )

2. Implement the Runnable interface

– public void run( )

• Parent thread (e.g. in main() …)

– Create thread object – just binds name of thread

– Call start( ) – creates actual running thread, goes to run( )

See book example

Skeletons

class Worker extends Thread {
    public void run() {
        // do stuff
    }
}

public class Driver {
    public static void main(String[] args) {
        Worker w = new Worker();
        w.start();
        // ... Continue/join
    }
}

class Worker2 implements Runnable {
    public void run() {
        // do stuff
    }
}

public class Driver2 {
    public static void main(String[] args) {
        Runnable w2 = new Worker2();
        Thread t = new Thread(w2);
        t.start();
        // ... Continue/join
    }
}

Java thread states

• This will probably sound familiar!

• New

– From here, go to “runnable” at call to start( )

• Runnable

– Go to “blocked” if need I/O or going to sleep

– Go to “dead” when we exit run( )

– Go to “waiting” if we call join( ) for child thread

• Blocked

– Go to “runnable” when I/O is serviced

• Waiting

• Dead

CS 346 – Sect. 5.1-5.2

• Process synchronization

– What is the problem?

– Criteria for solution

– Producer / consumer example

– General problems difficult because of subtleties

Problem

• It’s often desirable for processes/threads to share data

– Can be a form of communication

– One may need data being produced by the other

• Concurrent access → possible data inconsistency

• Need to “synchronize”…

– HW or SW techniques to ensure orderly execution

• Bartender & drinker

– Bartender takes empty glass and fills it

– Drinker takes full glass and drinks contents

– What if drinker overeager and starts drinking too soon?

– What if drinker not finished when bartender returns?

– Must ensure we don’t spill on counter.

Key concepts

• Critical section = code containing access to shared data

– Looking up a value or modifying it

• Race condition = situation where outcome of code

depends on the order in which processes take turns

– The correctness of the code should not depend on scheduling

• Simple example: producer / consumer code, p. 204

– Producer adds data to buffer and executes ++count;

– Consumer grabs data and executes --count;

– Assume count initially 5.

– Let’s see what could happen…

Machine code

Producer’s ++count becomes:

1   r1 = count
2   r1 = r1 + 1
3   count = r1

Consumer’s --count becomes:

4   r2 = count
5   r2 = r2 - 1
6   count = r2

Does this code work?

Yes, if we execute in order 1,2,3,4,5,6 or 4,5,6,1,2,3 -- see why?

Scheduler may have other ideas!

Alternate schedules

First schedule:

1   r1 = count
2   r1 = r1 + 1
4   r2 = count
5   r2 = r2 - 1
3   count = r1
6   count = r2

Second schedule:

1   r1 = count
2   r1 = r1 + 1
4   r2 = count
5   r2 = r2 - 1
6   count = r2
3   count = r1

• What are the final values of count?

• How could these situations happen?

• If the updating of a single variable is nontrivial, you

can imagine how critical the general problem is!
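To check an interleaving by hand, it can help to replay the six numbered machine steps in a chosen order. The following Java sketch does that deterministically, with no real threads. The class name InterleavingDemo and the run( ) helper are assumptions for illustration; the step numbers and the initial count of 5 come from the slides.

```java
// Replays the producer/consumer machine steps in a given order (no real threads).
class InterleavingDemo {
    // Executes steps 1-6 in the order listed and returns the final count.
    static int run(int[] schedule) {
        int count = 5;   // shared variable, initial value from the slides
        int r1 = 0;      // producer's register
        int r2 = 0;      // consumer's register
        for (int step : schedule) {
            switch (step) {
                case 1: r1 = count;  break;
                case 2: r1 = r1 + 1; break;
                case 3: count = r1;  break;
                case 4: r2 = count;  break;
                case 5: r2 = r2 - 1; break;
                case 6: count = r2;  break;
                default:
                    throw new IllegalArgumentException("unknown step " + step);
            }
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println(run(new int[] {1, 2, 3, 4, 5, 6})); // serial order: 5
        System.out.println(run(new int[] {1, 2, 4, 5, 3, 6})); // consumer writes last: 4
        System.out.println(run(new int[] {1, 2, 4, 5, 6, 3})); // producer writes last: 6
    }
}
```

In both non-serial orders, each side reads count before the other has written it back, so one of the two updates is lost.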

Solution criteria

• How do we know we have solved a synchronization

problem? 3 criteria:

• Mutual exclusion – Only 1 process may be inside its

critical section at any one time.

– Note: For simplicity we’re assuming there is one zone of shared

data, so each process using it has 1 critical section.

• Progress – Don’t hesitate to enter your critical section if

no one else is in theirs.

– Avoid an overly conservative solution

• Bounded waiting – There is a limit on # of times you may

access your critical section if another is still waiting to

enter theirs.

– Avoid starvation

Solution skeleton

while (true)

{

Seek permission to enter critical section

Do critical section

Announce done with critical section

Do non-critical code

}

• BTW, easy solution is to forbid preemption.

– But this power can be abused.

– Identifying critical section can avoid preemption for a shorter

period of time.

CS 346 – Sect. 5.3-5.7

• Process synchronization

– A useful example is “producer-consumer” problem

– Peterson’s solution

– HW support

– Semaphores

– “Dining philosophers”

• Commitment

– Compile and run semaphore code from os-book.com

Peterson’s solution

… to the 2-process producer/consumer problem. (p. 204)

while (true)

{

ready[ me ] = true

turn = other

while (ready[ other ] && turn == other) ;

Do critical section

ready[ me ] = false

Do non-critical code

}

// Don’t memorize but think: Why does this ensure mutual exclusion?

// What assumptions does this solution make?
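The pseudocode above can be tried out in Java, as a sketch under some assumptions. The class name PetersonDemo, the shared counter, and the use of Thread.yield( ) inside the busy-wait loop are illustrative choices. The flags and turn are declared with volatile semantics (AtomicIntegerArray and volatile) because the algorithm assumes that loads and stores are not reordered; plain Java fields would not guarantee that.

```java
import java.util.concurrent.atomic.AtomicIntegerArray;

class PetersonDemo {
    // ready.get(i) == 1 means thread i wants to enter its critical section.
    private static final AtomicIntegerArray ready = new AtomicIntegerArray(2);
    private static volatile int turn = 0;
    private static int counter = 0;   // shared data guarded by the protocol

    static void enter(int me) {
        int other = 1 - me;
        ready.set(me, 1);             // announce interest
        turn = other;                 // politely give the other thread priority
        while (ready.get(other) == 1 && turn == other) {
            Thread.yield();           // busy wait
        }
    }

    static void leave(int me) {
        ready.set(me, 0);
    }

    // Two threads each increment the shared counter 'rounds' times.
    static int run(int rounds) {
        counter = 0;
        Thread[] threads = new Thread[2];
        for (int id = 0; id < 2; id++) {
            final int me = id;
            threads[id] = new Thread(() -> {
                for (int i = 0; i < rounds; i++) {
                    enter(me);
                    counter++;        // critical section
                    leave(me);
                }
            });
        }
        try {
            for (Thread t : threads) t.start();
            for (Thread t : threads) t.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return counter;
    }

    public static void main(String[] args) {
        // With mutual exclusion no increment is lost, so this prints 20000.
        System.out.println(run(10_000));
    }
}
```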

HW support

• As we mentioned before, we can disable interrupts

– No one can preempt me.

– Disadvantages

• The usual way to handle synchronization is by careful

programming (SW)

• We require some atomic HW operations

– A short sequence of assembly instructions guaranteed to be non-interruptible

– This keeps non-preemption duration to absolute minimum

– Access to “lock” variables visible to all threads

– e.g. swapping the values in 2 variables

– e.g. get and set some value (aka “test and set”)

Semaphore

• Dijkstra’s solution to mutual exclusion problem

• Semaphore object

– integer value attribute ( > 0 means resource is available)

– acquire and release methods

• Semaphore variants: binary and counting

– Binary semaphore aka “mutex” or “mutex lock”

acquire()

{

if (value <= 0)

wait/sleep

--value

}

release()

{

++value

// wake sleeper

}
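One possible way to turn the acquire / release pseudocode into working Java is sketched below. It is an assumption-laden illustration, not the book's class: the name SimpleSemaphore, the value( ) accessor and the demo( ) helper are hypothetical, and the sleeping is done with Java's built-in wait( ) and notify( ). Note that the sketch re-checks the value in a while loop, where the pseudocode uses if, because a woken thread may find the value already taken again.

```java
// Hypothetical counting semaphore built on Java's intrinsic lock.
class SimpleSemaphore {
    private int value;

    SimpleSemaphore(int initial) {
        value = initial;
    }

    // Sleeps while no permit is available, then takes one.
    synchronized void acquire() throws InterruptedException {
        while (value <= 0) {
            wait();      // releases the lock and sleeps until notified
        }
        --value;
    }

    // Returns a permit and wakes one sleeper, if any.
    synchronized void release() {
        ++value;
        notify();
    }

    synchronized int value() {
        return value;
    }

    // Uses a semaphore initialized to 1 as a mutex around a shared total.
    static int demo(int rounds) {
        SimpleSemaphore mutex = new SimpleSemaphore(1);
        int[] total = {0};
        Runnable work = () -> {
            try {
                for (int i = 0; i < rounds; i++) {
                    mutex.acquire();
                    total[0]++;          // critical section
                    mutex.release();
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        Thread a = new Thread(work);
        Thread b = new Thread(work);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return total[0];
    }

    public static void main(String[] args) {
        System.out.println(demo(1000));  // two threads x 1000 increments = 2000
    }
}
```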

Deadlock / starvation

• After we solve a mutual exclusion problem, also need to

avoid other problems

– Another way of expressing our synchronization goals

• Deadlock: 2+ processes waiting for an event that can only be performed by one of the waiting processes

– the opposite of progress

• Starvation: being blocked for an indefinite or unbounded

amount of time

– e.g. Potentially stuck on a semaphore wait queue forever

Bounded-buffer problem

• aka “producer-consumer”. See figures 5.9 – 5.10

• Producer class

– run( ) to be executed by a thread

– Periodically call insert( )

• Consumer class

– Also to be run by a thread

– Periodically call remove( )

• BoundedBuffer class

– Creates semaphores (mutex, empty, full): why 3?

Initial values: mutex = 1, empty = SIZE, full = 0

– Implements insert( ) and remove( ).

These methods contain calls to semaphore operations acquire( )

and release( ).

Insert & delete

public void insert(E item)
{
    empty.acquire();
    mutex.acquire();
    // add an item to the
    // buffer...
    mutex.release();
    full.release();
}

public E remove()
{
    full.acquire();
    mutex.acquire();
    // remove item ...
    mutex.release();
    empty.release();
}

• What are we doing with the semaphores?
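A complete version of the three-semaphore bounded buffer can be sketched with java.util.concurrent.Semaphore. This is not the book's Figure 5.9 – 5.10 code: the class name BoundedBufferDemo, the int item type (in place of the generic E), SIZE = 4, and the transfer( ) helper are assumptions for illustration. The initial values mutex = 1, empty = SIZE, full = 0 follow the slides.

```java
import java.util.concurrent.Semaphore;

class BoundedBufferDemo {
    static final int SIZE = 4;
    private final int[] buffer = new int[SIZE];
    private int in = 0;
    private int out = 0;
    private final Semaphore mutex = new Semaphore(1);    // guards buffer, in, out
    private final Semaphore empty = new Semaphore(SIZE); // counts free slots
    private final Semaphore full = new Semaphore(0);     // counts filled slots

    void insert(int item) throws InterruptedException {
        empty.acquire();          // block while the buffer is full
        mutex.acquire();
        buffer[in] = item;
        in = (in + 1) % SIZE;
        mutex.release();
        full.release();           // one more item for the consumer
    }

    int remove() throws InterruptedException {
        full.acquire();           // block while the buffer is empty
        mutex.acquire();
        int item = buffer[out];
        out = (out + 1) % SIZE;
        mutex.release();
        empty.release();          // one more free slot for the producer
        return item;
    }

    // One producer inserts 1..n; one consumer removes n items and sums them.
    static long transfer(int n) {
        BoundedBufferDemo buf = new BoundedBufferDemo();
        long[] sum = {0};
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) buf.insert(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) sum[0] += buf.remove();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return sum[0];
    }

    public static void main(String[] args) {
        System.out.println(transfer(100)); // every item arrives once: 5050
    }
}
```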

Readers/writers problem

• More general than producer-consumer

• We may have multiple readers and writers of shared info

• Mutual exclusion requirement:

Must ensure that writers have exclusive access

• It’s okay to have multiple readers reading

See example solution, Fig. 5.10 – 5.12

• Reader and Writer threads periodically want to execute.

– Operations guarded by semaphore operations

• Database class (analogous to BoundedBuffer earlier)

– readerCount

– 2 semaphores: one to protect database, one to protect the

updating of readerCount

Solution outline

Reader:

mutex.acquire();

++readerCount;

if(readerCount == 1)

db.acquire();

mutex.release();

// READ NOW

mutex.acquire();

--readerCount;

if(readerCount == 0)

db.release();

mutex.release();

Writer:

db.acquire();

// WRITE NOW

db.release();
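The outline above can be filled in as a small Java program. It is a sketch, not the book's Figure 5.10 – 5.12 code: the class name ReadersWritersDemo, the integer standing in for the database, and the run( ) helper are assumptions for illustration. The two semaphores play the roles named in the slides.

```java
import java.util.concurrent.Semaphore;

class ReadersWritersDemo {
    private final Semaphore mutex = new Semaphore(1); // protects readerCount
    private final Semaphore db = new Semaphore(1);    // protects the database
    private int readerCount = 0;
    private int data = 0;                             // stands in for the database

    int read() throws InterruptedException {
        mutex.acquire();
        if (++readerCount == 1) db.acquire();  // first reader locks out writers
        mutex.release();
        int seen = data;                       // READ NOW
        mutex.acquire();
        if (--readerCount == 0) db.release();  // last reader lets writers in
        mutex.release();
        return seen;
    }

    void write() throws InterruptedException {
        db.acquire();
        data = data + 1;                       // WRITE NOW
        db.release();
    }

    // Starts the given numbers of writer and reader threads; each performs
    // 'rounds' operations. Returns the final value of the database.
    static int run(int writers, int readers, int rounds) {
        ReadersWritersDemo shared = new ReadersWritersDemo();
        Thread[] all = new Thread[writers + readers];
        for (int k = 0; k < all.length; k++) {
            final boolean isWriter = k < writers;
            all[k] = new Thread(() -> {
                try {
                    for (int i = 0; i < rounds; i++) {
                        if (isWriter) shared.write(); else shared.read();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            all[k].start();
        }
        try {
            for (Thread t : all) t.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return shared.data;
    }

    public static void main(String[] args) {
        // Writers have exclusive access, so no write is lost: 2 x 500 = 1000.
        System.out.println(run(2, 3, 500));
    }
}
```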

Example output

writer 0 wants to write.
writer 0 is writing.
writer 0 is done writing.
reader 2 wants to read.
writer 1 wants to write.
reader 0 wants to read.
reader 1 wants to read.
Reader 2 is reading. Reader count = 1
Reader 0 is reading. Reader count = 2
Reader 1 is reading. Reader count = 3
writer 0 wants to write.
Reader 1 is done reading. Reader count = 2
Reader 2 is done reading. Reader count = 1
Reader 0 is done reading. Reader count = 0
writer 1 is writing.
reader 0 wants to read.
writer 1 is done writing.

CS 346 – Sect. 5.7-5.8

• Process synchronization

– “Dining philosophers” (Dijkstra, 1965)

– Monitors

Dining philosophers

• Classic OS problem

– Many possible solutions depending on how foolproof you want

solution to be

• Simulates synchronization situation of several resources,

and several potential consumers.

• What is the problem?

• Model chopsticks with semaphores – available or not.

– Initialize each to be 1

• Achieve mutual exclusion:

– acquire left and right chopsticks (numbered i and i+1)

– Eat

– release left and right chopsticks

• What could go wrong?

DP (2)

• What can we say about this solution?

mutex.acquire();

Acquire 2 neighboring forks

Eat

Release the 2 forks

mutex.release();

• Other improvements:

– Ability to see if either neighbor is eating

– May make more sense to associate semaphore with the

philosophers, not the forks. A philosopher should block if cannot

acquire both forks.

– When done eating, wake up either neighbor if necessary.
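The mutex-wrapped version at the top of this slide can be sketched in Java to see how it behaves. The class name DiningDemo, the run( ) helper, and counting meals in an array are assumptions for illustration. Because the single mutex is held for the whole acquire-eat-release sequence, the program cannot deadlock, but only one philosopher eats at a time.

```java
import java.util.concurrent.Semaphore;

class DiningDemo {
    // n philosophers each eat 'meals' times; returns the total meals eaten.
    static int run(int n, int meals) {
        Semaphore mutex = new Semaphore(1);       // one global lock
        Semaphore[] fork = new Semaphore[n];      // one semaphore per fork
        for (int i = 0; i < n; i++) fork[i] = new Semaphore(1);
        int[] eaten = new int[n];
        Thread[] phil = new Thread[n];
        for (int p = 0; p < n; p++) {
            final int i = p;
            phil[p] = new Thread(() -> {
                try {
                    for (int m = 0; m < meals; m++) {
                        mutex.acquire();
                        fork[i].acquire();             // left fork
                        fork[(i + 1) % n].acquire();   // right fork
                        eaten[i]++;                    // eat
                        fork[(i + 1) % n].release();
                        fork[i].release();
                        mutex.release();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            phil[p].start();
        }
        try {
            for (Thread t : phil) t.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        int total = 0;
        for (int count : eaten) total += count;
        return total;
    }

    public static void main(String[] args) {
        System.out.println(run(5, 200)); // all finish without deadlock: 1000
    }
}
```

Removing the two mutex lines gives the plain fork-by-fork version, in which every philosopher can pick up a left fork and then wait forever for the right one.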

Monitor

• Higher level than semaphore

– Semaphore coding can be buggy

• Programming language construct

– Special kind of class / data type

– Hides implementation detail

• Automatically ensures mutual exclusion

– Only 1 thread may be “inside” monitor at any one time

– Attributes of monitor are the shared variables

– Methods in monitor deal with specific synchronization problem.

This is where you access shared variables.

– Constructor can initialize shared variables

• Supported by a number of HLLs

– Concurrent Pascal, Java, C#

Condition variables

• With a monitor, you get mutual exclusion

• If you also want to ensure against deadlock or starvation,

you need condition variables

• Special data type associated with monitors

• Declared with other shared attributes of monitor

• How to use them:

– No attribute value to manipulate. 2 functions only:

– Wait: if you call this, you go to sleep. (Enter a queue)

– Signal: means you release a resource, waking up a thread

waiting for it.

– Each condition variable has its own queue of waiting

threads/processes.

Signal( )

• A subtle issue for signal…

• In a monitor, only 1 thread may be running at a time.

• Suppose P calls x.wait( ). It’s now asleep.

• Later, Q calls x.signal( ) in order to yield resource to P.

• What should happen? 3 design alternatives:

– “blocking signal” – Q immediately goes to sleep so that P can

continue.

– “nonblocking signal” – P does not actually resume until Q has left

the monitor

– Compromise – Q immediately exits the monitor.

• Whoever gets to continue running may have to go to

sleep on another condition variable.

CS 346 – Sect. 5.9

• Process synchronization

– “Dining philosophers” monitor solution

– Java synchronization

– atomic operations

Monitor for DP

• Figure 5.18 on page 228

• Shared variable attributes:

– state for each philosopher

– “self” condition variable for each philosopher

• takeForks( )

– Declare myself hungry

– See if I can get the forks. If not, go to sleep.

• returnForks( )

– Why do we call test( )?

• test( )

– If I’m hungry and my neighbors are not eating, then I will eat and

leave the monitor.
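The takeForks / returnForks / test structure can be sketched in Python as follows. This is an approximation under stated assumptions: Python has no monitor construct, so a single `Lock` shared by all the per-philosopher `Condition` objects imitates one. The names `DiningPhilosophers`, `take_forks`, `return_forks` and `run` are chosen for illustration and are not the book's code.

```python
import threading

THINKING, HUNGRY, EATING = range(3)


class DiningPhilosophers:
    def __init__(self, n=5):
        self.n = n
        self.state = [THINKING] * n                 # state for each philosopher
        self.lock = threading.Lock()                # stands in for the monitor
        self.self_cv = [threading.Condition(self.lock) for _ in range(n)]

    def _test(self, i):
        """If i is hungry and neither neighbor is eating, let i eat."""
        left, right = (i - 1) % self.n, (i + 1) % self.n
        if (self.state[i] == HUNGRY
                and self.state[left] != EATING
                and self.state[right] != EATING):
            self.state[i] = EATING
            self.self_cv[i].notify()                # wake i if it is asleep

    def take_forks(self, i):
        with self.lock:
            self.state[i] = HUNGRY                  # declare myself hungry
            self._test(i)
            while self.state[i] != EATING:          # could not get both forks
                self.self_cv[i].wait()

    def return_forks(self, i):
        with self.lock:
            self.state[i] = THINKING
            # A neighbor may have been blocked only because of me.
            self._test((i - 1) % self.n)
            self._test((i + 1) % self.n)


def run(n=5, meals=3):
    """Each philosopher eats `meals` times; report meal counts and violations."""
    table = DiningPhilosophers(n)
    eaten = [0] * n
    violations = []

    def philosopher(i):
        for _ in range(meals):
            table.take_forks(i)
            # While i is EATING, neither neighbor can become EATING.
            if EATING in (table.state[(i - 1) % n], table.state[(i + 1) % n]):
                violations.append(i)
            eaten[i] += 1
            table.return_forks(i)

    threads = [threading.Thread(target=philosopher, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return eaten, violations
```

The call to `_test` inside `return_forks` is what answers the slide's question: a philosopher who puts down forks must check whether either neighbor can now eat.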

Synch in Java

• “thread safe” = data remain consistent even if we have

concurrently running threads

• If waiting for a (semaphore) value to become positive

– Busy waiting loop

– Better: Java provides Thread.yield( ): “let another thread run” (the caller stays runnable; it is not blocked)

• But even “yielding” ourselves can cause livelock

– Continually attempting an operation that fails

– e.g. You wait for another process to run, but the scheduler

keeps scheduling you instead because you have higher priority

Synchronized

• Java’s answer to synchronization is the keyword

synchronized – qualifier for method

as in public synchronized void funName(params) { …

• When you call a synchronized method belonging to an

object, you obtain a “lock” on that object

e.g. sem.acquire();

• Lock automatically released when you exit method.

• If you try to call a synchronized method, & the object is

already locked by another thread, you are blocked and

sent to the object’s entry set.

– Not quite a queue. JVM may arbitrarily choose who gets in next

Avoid deadlock

• Producer/consumer example

– Suppose buffer is full. Producer now running.

– Producer calls insert( ). Successfully enters method, so it has the lock

on the buffer. Because buffer full, calls Thread.yield( ) so that

consumer can eat some data.

– Consumer wakes up, but cannot enter remove( ) method

because producer still has lock. So we have deadlock.

• Solution is to use wait( ) and notify( ).

– When you wait, you release the lock, go to sleep (blocked), and

enter the object’s wait set. Not to be confused with entry set.

– When you notify, JVM picks a thread T from the wait set and

moves it to entry set. T now eligible to run, and continues from

point after its call to wait().

notifyAll

• Put every waiting thread into the entry set.

– Good idea if you think > 1 thread waiting.

– Now, all these threads compete for next use of synchronized

object.

• Sometimes, just calling notify can lead to deadlock

– Book’s doWork example ***

– Threads are numbered

– doWork has a shared variable turn. You can only do work here if

it’s your turn: if turn == your number.

– Thread 3 is doing work, sets turn to 4, and then leaves.

– But thread 4 is not in the wait set. All other threads will go to

sleep.

More Java support

See: java.util.concurrent

• Built-in ReentrantLock class

– Create an object of this class; call its lock and unlock

methods to access your critical section (p. 282)

– Optional fairness setting favors the longest-waiting thread

• Condition interface (condition variable)

– Meant to be used with a lock. What is the goal?

– await( ) and signal( )

• Semaphore class

– acquire( ) and release( )

Atomic operations

• Behind the scenes, need to make sure instructions are

performed in appropriate order

• “transaction” = 1 single logical function performed by a

thread

– In this case, involving shared memory

– We want it to run atomically

• As we perform individual instructions, things might go

smoothly or not

– If all ok, then commit

– If not, abort and “roll back” to earlier state of computation

• This is easier if we have fewer instructions in a row to do

Keeping the order

Schedule 1                          Schedule 2

Transaction 1    Transaction 2      Transaction 1    Transaction 2
Read (A)                            Read (A)
Write (A)                           Write (A)
Read (B)                                             Read (A)
Write (B)                                            Write (A)
                 Read (A)           Read (B)
                 Write (A)          Write (B)
                 Read (B)                            Read (B)
                 Write (B)                           Write (B)

• Are these two schedules equivalent? Why?

CS 346 – Chapter 6

• CPU scheduling

– Characteristics of jobs

– Scheduling criteria / goals

– Scheduling algorithms

– System load

– Implementation issues

– Real-time scheduling

Schedule issues

• Multi-programming is good: it gives better CPU utilization

• CPU burst concept

– Jobs typically alternate between work and wait

– Fig. 6.2: Distribution has long tail on right.

General questions

• How or when does a job enter the ready queue?

• How much time can a job use the CPU?

• Do we prioritize jobs?

• Do we pre-empt jobs?

• How do we measure overall performance?

Scheduler

• Makes short-term decisions

– When? Whenever a job changes state (becomes ready, needs

to wait, finishes)

– Selects a job on the ready queue

– Dispatcher can then do the “context switch” to give CPU to new

job

• Should we preempt?

– Non-preemptive = Job continues to execute until it has to wait or

finishes

– Preemptive = Job may be removed from CPU while doing work!

– When you preempt: need to leave CPU “gracefully”. May be in

the middle of a system call or modifying shared data. Often we

let that operation complete.

Scheduling criteria

• CPU utilization = what % of time CPU is executing

instructions

• Throughput = # or rate of jobs completed in some time

period

• Turnaround time = (finish time) – (request time)

• Waiting time = how long spent in ready state

– Confusing name!

• Response time = how long after request that a job

begins to produce output

• Usually, we want to optimize the “average” of each

measure. e.g. Reduce average turnaround time.

Some scheduling algorithms

• First-come, first-served

• Round robin

– Like FCFS, but each job has a limited time quantum

• Shortest job next

• We use a Gantt chart to view and evaluate a schedule

– e.g. compute average turnaround time

• Often, key question is – in what order do we execute

jobs?

• Let’s compare FCFS and SJN…

Example 1

Process number    Time of request    Execution time needed
1                 0                  20
2                 5                  30
3                 10                 40
4                 20                 10

• First-come, first-served

– Process 1 can execute from t=0 to t=20

– Process 2 can execute from t=20 to t=50

– Process 3 can execute from t=50 to t=90

– Process 4 can execute from t=90 to t=100

• We can enter this info as extra columns in the table.

• What is the average turnaround time?

• What if we tried Shortest Job Next?
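To compare the two policies on Example 1, here is a small non-preemptive simulator. It is a sketch: the function names (`schedule`, `fcfs`, `sjn`, `average_turnaround`) and the tuple layout `(request_time, exec_time)` are assumptions made for illustration.

```python
def schedule(jobs, pick):
    """Non-preemptive scheduling.

    jobs: list of (request_time, exec_time).
    pick: function choosing one index from the list of ready jobs.
    Returns a list of (start, finish), one entry per job.
    """
    result = [None] * len(jobs)
    pending = set(range(len(jobs)))
    t = 0
    while pending:
        ready = [i for i in sorted(pending) if jobs[i][0] <= t]
        if not ready:
            # CPU is idle until the next request arrives.
            t = min(jobs[i][0] for i in pending)
            continue
        i = pick(ready, jobs)
        result[i] = (t, t + jobs[i][1])
        t += jobs[i][1]
        pending.remove(i)
    return result


def fcfs(ready, jobs):
    """First-come, first-served: earliest request wins."""
    return min(ready, key=lambda i: (jobs[i][0], i))


def sjn(ready, jobs):
    """Shortest job next: smallest execution time wins."""
    return min(ready, key=lambda i: (jobs[i][1], i))


def average_turnaround(jobs, result):
    """Mean of (finish time) - (request time)."""
    total = sum(finish - jobs[i][0] for i, (_, finish) in enumerate(result))
    return total / len(jobs)


EXAMPLE_1 = [(0, 20), (5, 30), (10, 40), (20, 10)]

fcfs_result = schedule(EXAMPLE_1, fcfs)
sjn_result = schedule(EXAMPLE_1, sjn)
print(average_turnaround(EXAMPLE_1, fcfs_result))  # 56.25
print(average_turnaround(EXAMPLE_1, sjn_result))   # 43.75
```

Under SJN, process 4 runs right after process 1 (from t=20 to t=30), which is where the improvement in average turnaround comes from.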

Example 2

Process number    Time of request    Execution time needed
1                 0                  10
2                 30                 30
3                 40                 20
4                 50                 5

Note that it’s possible to have idle time.

System load

• A measure of how “busy” the CPU is

• At an instant: how many tasks are currently running or

ready.

– If load > 1, the system is “overloaded”, and work is backing up.

• Typically reported as an average of the last 1, 5, or 15

minutes.

• Based on the schedule, can calculate average load as

well as maximum (peak) load.

Example 1

Job #    Request    Exec    Start    Finish
1        0          20      0        20
2        5          30      20       50
3        10         40      50       90
4        20         10      90       100

(“Request time” is also known as “arrival time”.)

• FCFS schedule can also be depicted this way:

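(X = executing, R = ready, . = not in the system; each column is 5 time units, from t=0 to t=100)

Job 1:  XXXX................
Job 2:  .RRRXXXXXX..........
Job 3:  ..RRRRRRRRXXXXXXXX..
Job 4:  ....RRRRRRRRRRRRRRXX

• What can we say about the load?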
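Average and peak load can be read off a finished schedule. The sketch below assumes that "load" at an instant means the number of jobs that have been requested but have not yet finished (running or ready). The function name `load_profile` and the `(request, finish)` tuples are illustrative choices.

```python
def load_profile(jobs):
    """jobs: list of (request_time, finish_time).

    Returns (average_load, peak_load) over the span from the first
    request to the last finish.
    """
    events = []
    for request, finish in jobs:
        events.append((request, +1))   # job enters the system
        events.append((finish, -1))    # job leaves the system
    # Tuples sort by time, then by delta, so at equal times a departure (-1)
    # is processed before an arrival (+1).
    events.sort()

    load = peak = 0
    for _, delta in events:
        load += delta
        peak = max(peak, load)

    span = max(f for _, f in jobs) - min(r for r, _ in jobs)
    # Each job contributes 1 to the load for (finish - request) time units.
    average = sum(f - r for r, f in jobs) / span
    return average, peak


# FCFS schedule of Example 1: (request, finish) for jobs 1-4.
FCFS_EXAMPLE_1 = [(0, 20), (5, 50), (10, 90), (20, 100)]
print(load_profile(FCFS_EXAMPLE_1))  # (2.25, 3)
```

The average comes out as the sum of the turnaround times divided by the length of the schedule, so any policy that lowers average turnaround also lowers average load over the same span.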

Example 2

Job #    Request    Exec    Start    Finish
1        0          10      0        10
2        30         30      30       60
3        40         20      65       85
4        50         5       60       65

• SJN schedule can be depicted this way:

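(X = executing, R = ready, . = not in the system; each column is 5 time units, from t=0 to t=85)

Job 1:  XX...............
Job 2:  ......XXXXXX.....
Job 3:  ........RRRRRXXXX
Job 4:  ..........RRX....

• Load?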

Preemptive SJN

• If a new job arrives with a shorter execution time (CPU

burst length) than currently running process, preempt!

• Could also call it “shortest remaining job next”

• Let’s redo previous example allowing preemption

– Job #1 is unaffected.

– Job #2 would have run from 30 to 60, but …

Job #    Request    Exec    Start    Finish
1        0          10      0        10
2        30         30
3        40         20
4        50         5

– Does preemption reduce average turnaround time? Load?
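One way to check an answer to the question above is to simulate shortest-remaining-time in unit steps. This is a sketch with assumed names (`shortest_remaining_time`, `EXAMPLE_2`). The tie-breaking rule is also an assumption: a new job preempts only if its burst is strictly shorter than what remains of the running job.

```python
def shortest_remaining_time(jobs):
    """Preemptive SJN, simulated one time unit at a time.

    jobs: list of (request_time, exec_time). Returns the finish time of each job.
    """
    remaining = [exec_time for _, exec_time in jobs]
    finish = [None] * len(jobs)
    t = 0
    while any(r > 0 for r in remaining):
        ready = [i for i, (request, _) in enumerate(jobs)
                 if request <= t and remaining[i] > 0]
        if ready:
            # Ties go to the lower-numbered (earlier) job. In this example
            # that keeps job 2 running when job 3 arrives with an equal burst.
            i = min(ready, key=lambda j: (remaining[j], j))
            remaining[i] -= 1
            if remaining[i] == 0:
                finish[i] = t + 1
        t += 1   # if nothing is ready, the CPU idles for this unit
    return finish


EXAMPLE_2 = [(0, 10), (30, 30), (40, 20), (50, 5)]
finish = shortest_remaining_time(EXAMPLE_2)
turnaround = [f - request for f, (request, _) in zip(finish, EXAMPLE_2)]
print(finish)                               # [10, 65, 85, 55]
print(sum(turnaround) / len(turnaround))    # 23.75
```

Without preemption the same jobs finish at 10, 60, 85 and 65, for an average turnaround of 25. Here job 4 interrupts job 2 at t=50, which helps job 4 by 10 units and costs job 2 only 5.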

Estimating time

• Some scheduling algorithms like SJN need a job’s

expected CPU time

• We’re interested in scheduling bursts of CPU time, not

literally the entire job.

• OS doesn’t really know in advance how much of a

“burst” will be needed. Instead, we estimate.

• Exponential averaging method. We predict the next

CPU burst will take this long:

p_{n+1} = a t_n + (1 – a) p_n

t_n = actual time of the nth burst, p_n = predicted time of the nth burst

• Formula allows us to weight recent vs. long-term history.

– What if a = 0 or 1?

Estimating time (2)

• p_{n+1} = a t_n + (1 – a) p_n

• Why is it called “exponential”? Becomes clearer if we

substitute all the way back to the first burst.

• p_1 = a t_0 + (1 – a) p_0

• p_2 = a t_1 + (1 – a) p_1

= a t_1 + (1 – a) [a t_0 + (1 – a) p_0]

= a t_1 + (1 – a) a t_0 + (1 – a)^2 p_0

• A general formula for p_{n+1} will eventually contain terms of

the form (1 – a) raised to various powers.

– In practice, we just look at previous actual vs. previous prediction

• Book’s example Figure 6.3: Prediction eventually

converges to correct recent behavior.
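The recurrence is short enough to try directly. In this sketch the function names and the burst lengths are made up for illustration. `expanded_p2` spells out the substituted form of p_2 from the slide, so the two can be compared.

```python
def predictions(bursts, a, p0):
    """Return [p_0, p_1, ..., p_n] using p_{k+1} = a*t_k + (1 - a)*p_k."""
    p = [p0]
    for t in bursts:
        p.append(a * t + (1 - a) * p[-1])
    return p


def expanded_p2(t0, t1, a, p0):
    """p_2 written out: a*t_1 + (1 - a)*a*t_0 + (1 - a)^2 * p_0."""
    return a * t1 + (1 - a) * a * t0 + (1 - a) ** 2 * p0


bursts = [6, 4, 6, 4, 13, 13, 13]          # hypothetical actual burst lengths
print(predictions(bursts, 0.5, 10))        # [10, 8.0, 6.0, 6.0, 5.0, 9.0, 11.0, 12.0]
print(predictions(bursts, 0, 10)[-1])      # 10 : a = 0 ignores all history
print(predictions(bursts, 1, 10)[-1])      # 13 : a = 1 trusts only the last burst
```

With a = 0.5 the prediction climbs toward 13 once the bursts settle at 13, and each older burst counts half as much as the one after it.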

Priority scheduling

• SJN is a special case of a whole class of scheduling

algorithms that assign priorities to jobs.

• Each job has a priority value:

– Convention: low number = “high” priority

• SJN: priority = next predicted burst time

• Starvation: Some “low priority” jobs may never execute

– How could this happen?

• Aging: modify SJN so that while a job waits, it gradually

“increases” its priority so it won’t starve.

Round robin

• Each job takes a short turn at the CPU

• Commonly used, easy for OS to handle

• Time quantum typically 10-100 ms – it’s constant

• Choice of time quantum has a minor impact on

turnaround time (Figure 6.5)

– Can re-work an earlier example

• Questions to think about:

• If there are N jobs, what is the maximum wait time before

you can start executing?

• What happens if the time quantum is very large?

• What happens if the time quantum is very short?
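A small simulator makes it easy to experiment with the quantum. This sketch re-works Example 1 from the FCFS discussion. The function name `round_robin` is an assumption, and so is one queueing convention: a job arriving during a time slice joins the queue ahead of the job that was just preempted.

```python
from collections import deque


def round_robin(jobs, quantum):
    """jobs: list of (request_time, exec_time). Returns finish time per job."""
    order = sorted(range(len(jobs)), key=lambda i: (jobs[i][0], i))
    remaining = [exec_time for _, exec_time in jobs]
    finish = [None] * len(jobs)
    queue = deque()
    arrived = 0          # how many jobs (in arrival order) have been admitted
    t = 0

    def admit(now):
        nonlocal arrived
        while arrived < len(order) and jobs[order[arrived]][0] <= now:
            queue.append(order[arrived])
            arrived += 1

    admit(t)
    while queue or arrived < len(order):
        if not queue:                       # CPU idle: jump to next arrival
            t = jobs[order[arrived]][0]
            admit(t)
            continue
        i = queue.popleft()
        run = min(quantum, remaining[i])
        t += run
        remaining[i] -= run
        admit(t)                            # newcomers go ahead of job i
        if remaining[i] > 0:
            queue.append(i)                 # back of the line
        else:
            finish[i] = t
    return finish


EXAMPLE_1 = [(0, 20), (5, 30), (10, 40), (20, 10)]
print(round_robin(EXAMPLE_1, 10))     # [40, 80, 100, 50]
print(round_robin(EXAMPLE_1, 1000))   # [20, 50, 90, 100], same as FCFS
```

A very large quantum degenerates into FCFS. A very short one behaves like processor sharing, but this simulator ignores context-switch cost, which dominates on a real system when the quantum is tiny.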

Implementation issues

• Multi-level ready queue

• Threads

• Multi-processor scheduling

Multi-level queue

• We can assign jobs to different queues based on their

purpose or priority

• Foreground / interactive jobs may deserve high priority to

please the user

– Also: real-time tasks

• Background / routine tasks can be given lower priority

• Each queue can have its own scheduling regime, e.g.

round robin instead of SJN

– Interactive jobs may have unpredictable burst times

• Key issue: need to schedule among the queues

themselves. How?

Scheduling among queues

• Classify jobs according to purpose, which determines priority

• Priority based queue scheduling

– Can’t run any Priority 2 job until all Priority 1 jobs done.

– While running Priority 2 job, can preempt if a Priority 1 job

arrives.

– Starvation

• Round robin with different time quantum for each queue

• Time share for each queue

– Decreasing % of time for lower priorities

• Or… Multi-level feedback queue (pp. 275-277)

– All jobs enter at Priority 0. Given short time quantum.

– If not done, enter queue for Priority 1 jobs. Longer quantum next

time.

Thread scheduling

• The OS schedules “actual” kernel-level threads

• The thread library must handle user threads

– One-to-one model – easy, each user thread is already a kernel

thread. Direct system call

– Many-to-many or many-to-one models

• Thread library has 1 or a small number of kernel threads

available.

• Thread library must decide when user thread should run on a

true kernel thread.

• Programmer can set a priority for thread library to consider.

In other words, threads of the same process are competing

among themselves.

Multi-processing

• More issues to address, more complex overall

• Homogeneous system = identical processors, job can

run on any of them

• Asymmetric approach = allocate 1 processor for the OS,

all others for user tasks

– This “master server” makes decisions about what jobs run on the

other processors

• Symmetric approach (SMP) = 1 scheduler for each

processor, usually separate ready queue for each (but

could have a common queue for all)

• Load balancing: periodically see if we should “pull” or

“push” jobs

Affinity

• When switched out, a job may want to return next time to

the same processor as before

– Why desirable?

– An affinity policy may be “soft” or “hard”.

– Soft = OS will try but not guarantee.

Why might an OS prefer to migrate a process to a different

processor?

• Generalized concept: processor set

– For each process, maintain a list of processors it may be run on

• Memory system can exploit affinity, allocating more

memory that is closer to the favorite CPU. (Fig. 6.9)

Multicore processor

• Conceptually similar to multiprocessors

– Place multiple “processor cores” on same chip

– Faster, consume less power

– OS treats each core like a unique CPU

• However, the cores often share cache memory

– Leads to more “cache misses”: jobs spend more time stalled

waiting for instructions or data to arrive

– OS can allocate 2 threads to the same core, to increase

processor utilization

– Fig. 6.11 shows idealized situation. What happens in general?

Real-time Scheduling

• Real-time scheduling

– Earliest Deadline First

– Rate Monotonic

• What is this about?

– Primary goal is to avoid missing deadlines. Other goals may

include having response times that are low and consistent.

– We’re assuming jobs are periodic, and the deadline of a job is

the end of a period

Real-time systems

• Specialized operating system

• All jobs potentially have a deadline

– Correctness of operation depends on meeting deadlines, in

addition to correct algorithm

– Often, jobs are periodic; some may be aperiodic/sporadic

• Hard real-time = missing a deadline is not acceptable

• Soft real-time = deadline miss not end of world, but try to

minimize

– Number of acceptable deadline misses is a design parameter

– We try to measure Quality of Service (QoS)

– Examples?

• Used in defense, factories, communications, multimedia;

embedded in appliances

Features

• A real-time system may be used to control specific

device

– Opening bomb bay door

– When to release chocolate into vat

• Host device typically very small and lacks features of

PC, greatly simplifying OS design

– Single user or no user

– Little or no memory hierarchy

– Simple instruction set (or not!)

– No disk drive, monitor

– Cheap to manufacture, mass produce

Scheduling

• Most important issue in real-time systems is CPU

scheduling

• System needs to know WCET (worst-case execution time) of jobs

• Jobs are given priority based on their timing/deadline

needs

• Jobs may be pre-empted

• Kernel jobs (implemented system calls) contain many

possible preemption points at which they may be safely

suspended

• Want to minimize latency

– System needs to respond quickly to external event, such as

change in temperature

– Interrupt must have minimum overhead – how to measure it?

EDF

• Given a set of jobs

– Need to know period and execution time of each

– Each job contributes to the CPU’s utilization: execution time

divided by the period

– If the total utilization of all jobs > 1, no schedule possible!

• At each scheduling checkpoint, choose the job with the

earliest deadline.

– A scheduling checkpoint occurs at t = 0, when a job begins

period or is finished, or when a new job arrives into the system

– If no new jobs enter the system, EDF is non-preemptive

– Sometimes the CPU is idle

• Need to compute schedule one dynamic job at a time

until you reach the LCM of the job periods

– Can predict deadline miss, if any

EDF example

• Suppose we have 2 jobs, A and B, with periods 10 and

15, and execution times 5 and 6.

• At t = 0, we schedule A because its deadline is earlier

(10 < 15).

• At t = 5, A is finished. We can now schedule B.

• At t = 11, B is finished. A has already started a new

period, we can schedule it immediately.

• At t = 16, A is finished. B already started a new period,

so schedule it.

• At t = 22, B is finished. Schedule A.

• At t = 27, A is finished, and CPU is idle until t = 30.
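The walk-through above can be reproduced with a unit-step simulator. This is a sketch under stated assumptions: every job is released at the start of each of its periods, its deadline is the end of that period, and when two deadlines tie the job already running keeps the CPU. The function name `edf` and its return format are illustrative.

```python
def edf(jobs, horizon):
    """Earliest Deadline First in 1-unit time slots.

    jobs: dict mapping name -> (period, exec_time).
    horizon: number of slots to simulate (use a multiple of every period).
    Returns (timeline, misses); '.' in the timeline marks an idle slot.
    """
    remaining = {name: 0 for name in jobs}
    deadline = {name: 0 for name in jobs}
    timeline, misses, current = [], [], None
    for t in range(horizon):
        for name, (period, exec_time) in jobs.items():
            if t % period == 0:                     # a new period begins
                if remaining[name] > 0:
                    misses.append((name, t))        # old instance unfinished
                remaining[name] = exec_time
                deadline[name] = t + period
        ready = [n for n in jobs if remaining[n] > 0]
        if not ready:
            timeline.append('.')
            current = None
            continue
        # Earliest deadline wins; on a tie, prefer the job already running.
        current = min(ready, key=lambda n: (deadline[n], n != current, n))
        remaining[current] -= 1
        timeline.append(current)
    misses += [(n, horizon) for n in jobs if remaining[n] > 0]
    return ''.join(timeline), misses


timeline, misses = edf({'A': (10, 5), 'B': (15, 6)}, 30)
print(timeline)   # AAAAABBBBBBAAAAABBBBBBAAAAA...
print(misses)     # []
```

Running it over the LCM of the periods (30 here) is enough, because the schedule then repeats. The three idle slots at the end match the idle time from t = 27 to t = 30.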

EDF: be careful

• At certain scheduling checkpoints, you need to schedule

the job with the earliest deadline.

– As long as that job has started its period.

– Do each cycle iteratively until the LCM of the job periods.

• Checkpoints include

– t=0

– Whenever a job is finished executing

– Whenever a job begins its period

(This condition is important when we have maximum utilization.)

• Example with 2 jobs

– Job A has period 6 and execution time 3.

– Job B has period 14 and execution time 7.

– U = 1. We should be able to schedule these jobs with EDF.

Example: EDF

• Wrong way: ignoring beginning of job periods

– At t = 0, we see jobs A (period 0-6) and B (period 0-14)

Since A has sooner deadline, schedule A for its 3 cycles.

– At t = 3, we see jobs A (period 6-12) and B (period 0-14)

Since A hasn’t started its period, our only choice is B, for its 7

cycles.

– At t = 10, we have job A (period 6-12) and B (period 14-28)

A has sooner deadline. Schedule A for its 3 cycles.

– At t = 13, A is finished but it missed its deadline. We don’t want

this to happen!

continued

• Job A = (per 6, exec 3) Job B = (per 14, exec 7)

• Correct EDF schedule that takes into account the start of

a job period as another scheduling checkpoint

(Time slots 1 to 42; only the last digit of each slot number is shown.)

t:  1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
    A A A B B B A A A B B B B A A A B B A A A

t:  2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2
    B B B B B A A A B A A A B B B A A A B B B

• Notice:

– At t = 12 and t = 24, we don’t preempt job B, because B’s

deadline is sooner. In the other cases when A’s period begins, A

takes the higher priority.

RM

• Most often used because it’s easy

• Inherently preemptive

• Assign each job a fixed priority based on its period

– The shorter the period, the more often this job must execute, the

more deadlines it has, and so the higher the priority

• Determine in advance the schedule of the highest priority

job

– Continue for other jobs in descending order of priority

– Be sure not to “schedule” a job before its period begins

• Less tedious than EDF to compute entire schedule

– For highest priority job, you know exactly when it will execute

– Other jobs may be preempted by higher priority jobs that were

scheduled first

RM (2)

• Sometimes not possible to find a schedule

– Our ability to schedule is more limited than EDF.

• There is a simple mathematical check to see if a RM

schedule is possible:

– We can schedule if the total utilization U ≤ n (2^(1/n) – 1)

Proved by Liu and Layland in 1973.

– If n (2^(1/n) – 1) < U ≤ 1, the test is inconclusive. Must compute

the schedule to find out.

– Ex. If n = 2, we are guaranteed to find a RM schedule if U <

82%, but for 90% it gets risky.

– Large experiments using random job parameters show that RM

is reliable up to about 88% utilization.

n        1      2      3      4      5      6      7      8      ∞
P(RM)    1.000  0.828  0.780  0.757  0.743  0.735  0.729  0.724  0.693
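The bound values follow directly from n (2^(1/n) – 1), and the limit as n grows is ln 2. A short sketch follows; the function names `rm_bound` and `rm_test` and the three verdict strings are assumptions for illustration.

```python
import math


def rm_bound(n):
    """Liu and Layland utilization bound for n jobs under RM."""
    return n * (2 ** (1 / n) - 1)


def rm_test(jobs):
    """jobs: list of (period, exec_time). Returns a verdict string."""
    u = sum(exec_time / period for period, exec_time in jobs)
    if u > 1:
        return "impossible"        # no scheduler can fit more than 100%
    if u <= rm_bound(len(jobs)):
        return "guaranteed"
    return "inconclusive"          # must compute the schedule to find out


print([round(rm_bound(n), 3) for n in range(1, 9)])
# [1.0, 0.828, 0.78, 0.757, 0.743, 0.735, 0.729, 0.724]
print(round(math.log(2), 3))            # 0.693
print(rm_test([(2, 1), (3, 1)]))        # inconclusive (U is about 0.833)
```

The test is sufficient but not necessary. A job set in the inconclusive range may still be schedulable, which is why the schedule has to be computed in that case.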

RM Example

• Suppose we have 2 jobs, C and D, with periods of 2 and

3, both with execution time 1.

• U = 1/2 + 1/3 > 82%, so RM is risky. Let’s try it…

• Schedule the more frequent job first.

t:  1 2 3 4 5 6 7 8 9 0 1 2 3
    C   C   C   C   C   C   C

• Then schedule job D.

t:  1 2 3 4 5 6 7 8 9 0 1 2 3
    C D C D C   C D C D C   C

• Looks okay!

RM Example 2

• Let’s look at earlier set of tasks, A and B, with periods of

10 and 15, and execution times of 5 and 6.

• U = 5/10 + 6/15 = 0.9, also risky.

• Schedule task A first.

123456789012345678901234567890
AAAAA     AAAAA     AAAAA

• Schedule task B into available spaces.

123456789012345678901234567890
AAAAABBBBBAAAAAB    AAAAA

– B’s sixth unit lands in slot 16, after its first deadline at t = 15.

Comparison

• Consider this set of jobs

Job #    Period    Execution time
1        10        3
2        12        4
3        15        5

• What is the total utilization ratio? Are EDF and RM

schedules feasible?

• Handout

RM vs. EDF

• EDF

– Job’s priority is dynamic, hard to predict in advance

– Too democratic / egalitarian? Maybe we are trying to execute

too many jobs.

• RM

– Fixed priority is often desirable

– Higher priority job will have better response times overall, not

bothered by a lower priority job that luckily has an upcoming

deadline.

– RM cannot handle utilization up to 1 unless periods are in sync,

as in 1 : n_1 : n_1 n_2 : n_1 n_2 n_3 : …

(Analogy: Telling time is easy until you get to months/years.)

RM example

• Let’s return to previous example, this time using RM.

– Job A = (per 6, exec 3) Job B = (per 14, exec 7)

– Hyperperiod is 42

– First, we must schedule job A, because it has shorter period.

t:  1  2  3  4  5  6  7  8  9 10 11 12 13 14 15 …
    A  A  A           A  A  A           A  A  A

– Next, schedule job B.

t:  1  2  3  4  5  6  7  8  9 10 11 12 13 14 15 …
    A  A  A  B  B  B  A  A  A  B  B  B  A  A  A  B

– Uh-oh! During B’s period 0-14, it is only able to execute for 6

cycles. Deadline miss: this job set cannot be scheduled with RM. But

it could be if either job’s execution time were smaller, reducing U.
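The same conclusion falls out of a fixed-priority simulation. The sketch below makes two assumptions: jobs are released at the start of every period, and a deadline miss is recorded when a new period begins with work still outstanding. The function name `rate_monotonic` is illustrative.

```python
def rate_monotonic(jobs, horizon):
    """Rate Monotonic in 1-unit time slots.

    jobs: dict mapping name -> (period, exec_time).
    horizon: number of slots to simulate (use a multiple of every period).
    Returns (timeline, misses); '.' in the timeline marks an idle slot.
    """
    # Fixed priorities: the shorter the period, the higher the priority.
    by_priority = sorted(jobs, key=lambda n: (jobs[n][0], n))
    remaining = {n: 0 for n in jobs}
    timeline, misses = [], []
    for t in range(horizon):
        for n, (period, exec_time) in jobs.items():
            if t % period == 0:                 # a new period begins
                if remaining[n] > 0:
                    misses.append((n, t))       # previous instance unfinished
                remaining[n] = exec_time
        running = next((n for n in by_priority if remaining[n] > 0), None)
        if running is None:
            timeline.append('.')
        else:
            remaining[running] -= 1
            timeline.append(running)
    misses += [(n, horizon) for n in jobs if remaining[n] > 0]
    return ''.join(timeline), misses


timeline, misses = rate_monotonic({'A': (6, 3), 'B': (14, 7)}, 42)
print(timeline[:15])   # AAABBBAAABBBAAA
print(misses[0])       # ('B', 14)
```

By t = 14, B has run only in slots 4-6 and 10-12, so it has done 6 of its 7 units. The same function reports no misses for jobs C and D (periods 2 and 3, execution time 1), and a miss for B at t = 15 in the A/B set with periods 10 and 15.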

RM Utilization bound

• Liu and Layland (1973): “Scheduling Algorithms for

Multiprogramming in a Hard Real-Time Environment”

– They first looked at the case of 2 jobs. What is the maximum

CPU utilization that RM will always work? Express U as a

function of 1 of the job’s execution time, assuming the other job

will fully utilize the CPU during its period.

• We have 2 jobs

– Job j1 has period T1 = 8

Job j2 has period T2 = 12

– Let’s see what execution times C1 and C2 we can have, and

what effect this has on the CPU utilization.

– During one of j2’s periods, how many times will j1 start?

In general: ceil(T2/T1). In our case, ceil(12/8) = 2.

– They derive formulas to determine C2 and U, once we decide on

a value of C1.

continued

• We have job j1 (T1 = 8) and job j2 (T2 = 12)

• Suppose C1 = 2.

– C2 = T2 – C1 * (number of times j1 starts)

= T2 – C1 * ceil (T2 / T1)

= 12 – 2 ceil (12 / 8) = 8.

– We can compute U = 2/8 + 8/12 = 11/12.

• Suppose C1 = 4

– C2 = 4

– U = 4/8 + 4/12 = 5/6

– The CPU utilization is actually lower as we increase the

execution time of j1.

• … If the last execution of j1 spills over into the next

period of j2, the opposite trend occurs.

Formula

• Eventually, Liu and Layland derive this general formula

for maximum utilization for 2 jobs:

U = 1 – x(1 – x)/(W + x)

where W = floor(T2/T1)

and x = T2/T1 – floor(T2/T1)

• We want to minimize U: to find at what level we can

guarantee schedulability. In this case W = 1, so

U = 1 – x(1 – x) / (1 + x)

• Setting the derivative equal to 0, we get x = √2 – 1, and

U(√2 – 1) = 2(√2 – 1) = about 0.83

• Result can be generalized to n jobs: U = n(2^(1/n) – 1)
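The minimization for W = 1 can be checked numerically without any calculus. This sketch does a brute-force search on a grid over x in [0, 1]. The function names and the grid size are arbitrary choices.

```python
import math


def max_utilization(x, w=1):
    """U = 1 - x(1 - x)/(W + x), the two-job expression above."""
    return 1 - x * (1 - x) / (w + x)


def minimize_on_grid(steps=100_000):
    """Return (x, U) for the grid point in [0, 1] with the smallest U (W = 1)."""
    xs = (i / steps for i in range(steps + 1))
    x_best = min(xs, key=max_utilization)
    return x_best, max_utilization(x_best)


x_best, u_best = minimize_on_grid()
print(round(x_best, 4), round(math.sqrt(2) - 1, 4))        # 0.4142 0.4142
print(round(u_best, 4), round(2 * (math.sqrt(2) - 1), 4))  # 0.8284 0.8284
```

The grid minimum agrees with x = √2 – 1 and U = 2(√2 – 1), which is the n = 2 case of n(2^(1/n) – 1).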

CS 346 – Chapter 7

• Deadlock

– Properties

– Analysis: directed graph

• Handle

– Prevent

– Avoid

• Safe states and the Banker’s algorithm

– Detect

– Recover

Origins of deadlock

• System contains resources

• Processes compete for resources:

– request, acquire / use, release

• Deadlock occurs on a set of processes when each one is

waiting for some event (e.g. the release of a resource)

that can only be triggered by another deadlocked

process.

– e.g. P1 possesses the keyboard, and P2 has the printer. P1

requests the printer and goes to sleep waiting. P2 requests the

keyboard and goes to sleep waiting.

– Sometimes hard to detect because it may depend on the order in

which resources are requested/allocated

Necessary conditions

4 conditions necessary for deadlock:

• Mutual exclusion – when a resource is held, the process

has exclusive access to it

• Hold and wait – processes each hold 1+ resource while

seeking more

• No preemption – a process will not release a resource

unless it’s finished using it

• Circular wait

• The first 3 conditions are routine, so it’s the circular wait

that is usually the big problem.

– Model using a directed graph, and look for cycle

Directed graph

• A resource allocation graph is a formal way to show we

have deadlock

• Vertices include processes and resources

• Directed edges

– (P → R) means that process requests a resource

– (R → P) means that resource is allocated to process

• If a resource has multiple instances

– Multiple processes may request or be allocated the resource

– Intuitive, but make sure you don’t over-allocate

– e.g. Figure 7.2: Resource R2 has 2 instances which are both

allocated. But process P3 also wants some of R2. The “out”

degree of R2 is 2 and “in” degree is 1.

Examples

• R2 has 2 instances.

– We can have these edges: P1 → R2, P2 → R2, P3 → R2.

– What does this situation mean? What should happen next?

• Suppose R1 and R2 have 1 instance each.

– Edges: R1 → P1, R2 → P2, P1 → R2

– Describe this situation.

– Now, add this edge: P2 → R1

– Deadlock?

• Fortunately, not all cycles imply a deadlock.

– There may be sufficient instances to honor request

– Fig 7.3 shows a cycle. P1 waits for R1 and P3 waits for R2. But

either of these 2 resources can be released by processes that

are not in the cycle…. as long as they don’t run forever.

How OS handles

• Ostrich method – pretend it will never happen. Ignore

the issue. Let the programmer worry about it.

– Good idea if deadlock is rare.

• Dynamically prevent deadlock from ever occurring

– Allow up to 3 of the 4 necessary conditions to occur.

– Prevent certain requests from being made.

• A priori avoidance

– Require advance warning about requests, so that deadlock can

be avoided.

– Some requests are delayed

• Detection

– Allow conditions that create deadlock, and deal with it as it

occurs.

– Must be able to detect!

Prevention

• “An ounce of prevention is worth a pound of cure”:

Benjamin Franklin

• Take a look at each of the 4 necessary conditions. Don’t

allow it to be the 4th nail in the coffin.

1. Mutual exclusion

– Not much we can do here. Some resources must be exclusive.

– Which resources are sharable?

2. Hold & wait

– Could require a process to make all its requests at the

beginning of its execution.

– How does this help?

– Resource utilization; and starvation?

Prevention (2)

3. No resource preemption

– Well, we do want to allow some preemption

– If you make a resource request that can’t be fulfilled at the

moment, OS can require you to release everything you have.

(release = preempting the resource)

– If you make a resource request, and it’s held by a sleeping

process, OS can let you steal it for a while.

4. Circular wait

– System ensures the request doesn’t complete a cycle

– Total ordering technique: Assign a whole number to each

resource. Process must request resources in numerical order, or

at least not request a lower-numbered resource when it holds a higher

one.

– Fig. 7.2: P3 has resource #3 but also requests #2. OS could

reject this request.

Avoidance

• We need a priori information to avoid future deadlock.

• What information? We could require processes to

declare up front the maximum # of resources of each

type it will ever need.

• During execution: let’s define a resource-allocation

state, telling us:

– # of resources available (static)

– Maximum needs of each process (static)

– # allocated to each process (dynamic)

Safe state

• To be in a safe state, there must exist a safe sequence.

• A safe sequence is a list of processes [ P1, P2, … Pn ]

– for each P_i, we can satisfy P_i’s requests given whatever

resources are currently available or currently held by the

processes numbered lower than i (i.e. Pj where j < i) by letting

them finish.

– For example, all of P2’s possible requests can be met by either

what is currently available or by what is held by P1.

– If P3 needs a resource held by P2, can wait until P2 done, etc.

• Safe state = a safe sequence including all processes.

– Deadlock occurs only in an unsafe state.

• The system needs to examine each request and ensure

that the allocation will preserve the safe state.

Example

• Suppose we have 12 instances of some resource.

• 3 processes have these a priori known needs

Process #    Max needs    Current use
1            10           5
2            4            2
3            9            2

• We need to find some safe sequence of all 3 processes

• At present, 12 – (5 + 2 + 2) = 3 instances available.

• Is [ 1, 2, 3 ] a safe sequence?
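Checking a proposed sequence is mechanical: walk through it, and at each step ask whether the process's remaining need fits in what is available once everyone before it has finished. A sketch for a single resource type follows; the function name `is_safe_sequence` and the dictionary layout are assumptions.

```python
def is_safe_sequence(total, max_need, allocated, sequence):
    """Single resource type.

    total: number of instances in the system.
    max_need, allocated: dicts mapping process number -> count.
    sequence: proposed order in which the processes finish.
    """
    available = total - sum(allocated.values())
    for p in sequence:
        still_needed = max_need[p] - allocated[p]
        if still_needed > available:
            return False              # p could be stuck waiting
        available += allocated[p]     # p finishes and releases what it held
    return True


MAX_NEED = {1: 10, 2: 4, 3: 9}
ALLOCATED = {1: 5, 2: 2, 3: 2}

print(is_safe_sequence(12, MAX_NEED, ALLOCATED, [1, 2, 3]))  # False
print(is_safe_sequence(12, MAX_NEED, ALLOCATED, [2, 1, 3]))  # True
```

[1, 2, 3] fails at the first step because process 1 may still ask for 5 instances and only 3 are free. Starting with process 2 works: it needs at most 2 more, then returns 4 in total, leaving 5 free for process 1.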

Banker’s algorithm

• General enough to handle multiple instances

• Principles

– No customer can borrow more money than is in the bank

– All customers given maximum credit limit at outset

– Can’t go over your limit!

– Sum of all loans never exceeds bank’s capital.

• Good news: customers’ aggregate credit limit may be

higher than bank’s assets

• Safe state: Bank has enough “money” to service request

of 1 customer.

• Algorithm: satisfy a request only if you stay safe

– Identify which job has smallest remaining requests, and make

sure we always have enough dough

Example

• Consider 10 devices of the same type

• Processes 1-3 need up to 4, 5, 8 of these devices,

respectively

• Are these states safe?

State 1:

Job    # allocated    Max needed
1      0              4
2      2              5
3      4              8

State 2:

Job    # allocated    Max needed
1      2              4
2      3              5
3      4              8
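The safety check at the heart of the Banker's algorithm can be sketched for one resource type as a search for any safe sequence. The function name `find_safe_sequence` and the list-based layout are illustrative, and the two test states are the ones tabulated above.

```python
def find_safe_sequence(total, allocated, max_need):
    """Single resource type; lists are indexed by job (job 1 is index 0).

    Returns a safe sequence of job numbers, or None if the state is unsafe.
    """
    available = total - sum(allocated)
    unfinished = set(range(len(allocated)))
    sequence = []
    while unfinished:
        # Jobs whose remaining need fits in what is available right now.
        runnable = [i for i in sorted(unfinished)
                    if max_need[i] - allocated[i] <= available]
        if not runnable:
            return None               # nobody is guaranteed to finish
        i = runnable[0]
        available += allocated[i]     # job i finishes and returns its devices
        unfinished.remove(i)
        sequence.append(i + 1)
    return sequence


print(find_safe_sequence(10, [0, 2, 4], [4, 5, 8]))  # [1, 2, 3]
print(find_safe_sequence(10, [2, 3, 4], [4, 5, 8]))  # None
```

In the second state only 1 device is free, but every job may still need at least 2 more, so no job is guaranteed to finish. To decide on a request, the algorithm would tentatively grant it and run this check on the resulting state.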

Handling deadlock

• Continually employ a detection algorithm

– Search for cycle

– Can do it occasionally

• When deadlock detected, perform recovery

• Recover by killing

– Kill 1 process at a time until deadlock cycle gone

– Kill which process? Consider: priority, how many resources it

has, how close it is to completion.

• Recover by resource preemption

– Need to restart that job in near future.

– Possibility for starvation if the same process is selected over and

over.

Detection algorithm

• Start with allocation graph, and “reduce it”

Repeat until no more changes:

• Find a process using a resource & not waiting for one.

Remove edge: process will eventually finish.

• Can now re-allocate this resource to another process, if

needed.

• Also can perform other resource allocations for

resources not fully allocated.

• If there are any edges left, we have deadlock.

Example

• 3 processes & 3 resources

• Edges:

– (R1 → P1)

– (P1 → R2)

– (R2 → P2)

– (P2 → R3)

– (R3 → P3)

• Can this graph be reduced to the point that it has no

edges?
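The reduction can be sketched for the simple case where every resource has exactly one instance. This is an assumption that fits the example above but not the general multi-instance case. The function name `has_deadlock` and the edge encoding are illustrative.

```python
def has_deadlock(assigned, requests):
    """Reduce a resource allocation graph with single-instance resources.

    assigned: dict resource -> process holding it   (edges R -> P)
    requests: set of (process, resource) pairs      (edges P -> R)
    Returns True if some edges cannot be removed.
    """
    assigned = dict(assigned)         # work on copies
    requests = set(requests)
    processes = set(assigned.values()) | {p for p, _ in requests}
    changed = True
    while changed:
        changed = False
        # Grant any request whose resource is currently free.
        for p, r in sorted(requests):
            if r not in assigned:
                assigned[r] = p
                requests.discard((p, r))
                changed = True
        # A process that is not waiting will eventually finish:
        # remove its edges by releasing everything it holds.
        waiting = {p for p, _ in requests}
        for p in sorted(processes - waiting):
            held = [r for r, owner in assigned.items() if owner == p]
            for r in held:
                del assigned[r]
                changed = True
    return bool(assigned) or bool(requests)


chain = has_deadlock({'R1': 'P1', 'R2': 'P2', 'R3': 'P3'},
                     {('P1', 'R2'), ('P2', 'R3')})
cycle = has_deadlock({'R1': 'P1', 'R2': 'P2'},
                     {('P1', 'R2'), ('P2', 'R1')})
print(chain, cycle)   # False True
```

In the chain, P3 is not waiting for anything, so it finishes and frees R3. That unblocks P2, and then P1, and the graph reduces to no edges. In the two-process cycle nobody can go first, so edges remain.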

CS 346 – Chapter 8

• Main memory

– Addressing

– Swapping

– Allocation and fragmentation

– Paging

– Segmentation

• Commitment

– Please finish chapter 8

Addresses

• CPU/instructions can only access registers and main

memory locations

– Stuff on disk must be loaded into main memory

• Each process given range of legal memory addresses

– Base and limit registers

– Accessible only to OS

– Every address request compared against these limits

• When is address of an object determined?

– Compile time: hard-coded by programmer

– Load time: compiler generates a relative address

– Execute time: if address may vary during execution because the

process moves. (most flexible)

Addresses (2)

• Logical vs. physical address

– Logical (aka virtual): The address as known to the CPU and

source code

– Physical = the real location in RAM

– How could logical and physical address differ? In case of

execution-time binding. i.e. if the process location could move

during execution

• Relocation register

– Specifies what constant offset to add to logical address to obtain

physical address

– CPU / program never needs to worry about the “real” address, or

that addresses of things may change. It can pretend its address

start at 0.

Swapping

• A process may need to go back to disk before finishing.

– Why?

• Consequence of scheduling (context switch)

• Maintain a queue of processes waiting to be loaded from

disk

• Actual transfer time is relatively huge

– When loading a program initially, we might not want to load the

whole thing

• Another question – what to do if we’re swapped out while

waiting for I/O.

– Don’t swap if waiting for input; or

– Put input into buffer. Empty buffer next time process back in

memory.

Allocation

• Simplest technique is to define fixed-size partitions

• Some partitions dedicated to OS; rest for user processes

• Variable-size partitions also possible, but must maintain

starting address of each

• Holes to fill

• How to dynamically fill hole with a process:

– First fit: find the first hole big enough for process

– Best fit: find smallest one big enough

– Worst fit: fit into largest hole, in order to create largest possible remaining hole

• Internal & external fragmentation
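The three hole-filling strategies above can be compared side by side. This is a sketch under assumptions: holes are modeled as hypothetical `(start, size)` tuples, and each function returns the index of the chosen hole, or `None` if nothing fits.

```python
from typing import List, Optional, Tuple

Hole = Tuple[int, int]  # (start address, size)


def first_fit(holes: List[Hole], size: int) -> Optional[int]:
    """Index of the first hole big enough for the request."""
    for i, (_, hole_size) in enumerate(holes):
        if hole_size >= size:
            return i
    return None


def best_fit(holes: List[Hole], size: int) -> Optional[int]:
    """Index of the smallest hole that is still big enough."""
    fits = [i for i, (_, s) in enumerate(holes) if s >= size]
    return min(fits, key=lambda i: holes[i][1], default=None)


def worst_fit(holes: List[Hole], size: int) -> Optional[int]:
    """Index of the largest hole, leaving the largest leftover hole."""
    fits = [i for i, (_, s) in enumerate(holes) if s >= size]
    return max(fits, key=lambda i: holes[i][1], default=None)


holes = [(0, 100), (200, 300), (800, 200), (1200, 600)]
print(first_fit(holes, 150), best_fit(holes, 150), worst_fit(holes, 150))
# → 1 2 3
```

With a request of 150, the leftover pieces are 150 (first fit), 50 (best fit), and 450 (worst fit). Small leftovers that no process can use are external fragmentation.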

Paging

• Allows for noncontiguous process memory space

• Physical memory consists of “frames”

• Logical memory consists of “pages”

– Page size = frame size

• Every address referenced by CPU can be resolved:

– Page number

– Offset

– How to do it? The page/frame size is a power of 2, which determines the number of offset bits in the address.

• Look up page number in the page table to find correct frame
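Because the page size is a power of 2, splitting an address into page number and offset is just a shift and a mask. The sketch below assumes a 4 KB page size and models the page table as a plain dictionary. Both are illustrative choices, not anything fixed by the slides.

```python
PAGE_SIZE = 4096                          # assumed 4 KB pages
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 bits for a 4 KB page


def split(address: int):
    """Return (page number, offset) for a logical address."""
    return address >> OFFSET_BITS, address & (PAGE_SIZE - 1)


def to_physical(address: int, page_table: dict) -> int:
    """Replace the page number with its frame number; keep the offset."""
    page, offset = split(address)
    frame = page_table[page]
    return (frame << OFFSET_BITS) | offset


page_table = {0: 5, 1: 2}                     # hypothetical page -> frame map
print(split(0x1234))                          # → (1, 564)
print(hex(to_physical(0x1234, page_table)))   # → 0x2234
```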

Example

• Suppose RAM = 256 MB, page/frame size is 4 KB, and our logical addresses are 32 bits.

– How many bits for the page offset?

– How many bits for the logical/virtual page number?

– How many bits for the physical page number?

– Note that the page offsets (logical & physical) will match.

• A program’s data begins at 0x1001 0000, and text begins at 0x0040 0000. If they are each 1 page, what is the highest logical address of each page?

• What physical page do they map to?

• How large is the page table?
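One way to check the arithmetic for this example is to compute it directly. The sketch assumes a single-level page table with one entry per virtual page. The size of the table in bytes depends on the entry size, which the example does not give, so only the entry count is computed. Which physical page each logical page maps to cannot be derived from these numbers; it is whatever the OS recorded in the page table.

```python
RAM_BYTES = 256 * 2**20    # 256 MB
PAGE_BYTES = 4 * 2**10     # 4 KB
LOGICAL_BITS = 32

offset_bits = PAGE_BYTES.bit_length() - 1    # log2(4 KB)
vpn_bits = LOGICAL_BITS - offset_bits        # virtual page number bits
physical_bits = RAM_BYTES.bit_length() - 1   # log2(256 MB)
frame_bits = physical_bits - offset_bits     # physical page number bits


def last_address_in_page(start: int) -> int:
    """Highest address in the page that begins at a page-aligned start."""
    return start | (PAGE_BYTES - 1)


print(offset_bits, vpn_bits, frame_bits)        # → 12 20 16
print(hex(last_address_in_page(0x10010000)))    # → 0x10010fff
print(hex(last_address_in_page(0x00400000)))    # → 0x400fff
print(2**vpn_bits)                              # → 1048576 entries
```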

Page table

• HW representation

– Several registers

– Store in RAM, with pointer as a register

– TLB (“translation look-aside buffer”): functions as a “page table cache”. Should store info about most commonly occurring pages.

• How does a memory access work?

– First, inspect address to see if datum should be in cache.

– If not, inspect address to see if TLB knows physical address

– If no TLB tag match, look up logical/virtual page number in the page table (thus requiring another memory access)

– Finally, in the worst case, we have to go out to disk.
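The translation part of this lookup order (TLB first, then page table, then disk) can be sketched as follows. This is a simplification under assumptions: the TLB and page table are plain dictionaries, the TLB never evicts entries, the data cache is left out, and `PageFault` is a hypothetical exception standing in for the trap that sends the OS to disk.

```python
class PageFault(Exception):
    """Raised when the page is in neither the TLB nor the page table."""


def lookup_frame(page: int, tlb: dict, page_table: dict):
    """Return (frame, where it was found) for a virtual page number."""
    if page in tlb:
        return tlb[page], "tlb"
    if page in page_table:        # costs an extra memory access
        frame = page_table[page]
        tlb[page] = frame         # remember it for next time
        return frame, "page table"
    raise PageFault(page)         # worst case: go out to disk


tlb = {}
page_table = {7: 3}
print(lookup_frame(7, tlb, page_table))  # → (3, 'page table')
print(lookup_frame(7, tlb, page_table))  # → (3, 'tlb')
```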

Protection, etc.

• HW must ensure that accesses to TLB or page table are legitimate

– No one should be able to access frame belonging to another process

• Valid bit: does the process have permission to access this frame?

– e.g. might no longer belong to this process

• Protection bit: is this physical page frame read-only?

• Paging supports shared memory. Example?

• Paging can cause internal fragmentation. How?

• Sometimes we can make page table more concise by storing just the bounds of the pages instead of each one.
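The valid and protection bits can be pictured as extra fields on each page table entry. The sketch below is an assumption about layout, not a real hardware format: `PageTableEntry` and `check_access` are hypothetical names, and a real MMU raises a trap rather than a Python exception.

```python
from dataclasses import dataclass


@dataclass
class PageTableEntry:
    frame: int
    valid: bool = True        # may the process use this entry at all?
    read_only: bool = False   # protection bit


def check_access(entry: PageTableEntry, write: bool) -> int:
    """Return the frame number if the access is allowed."""
    if not entry.valid:
        raise PermissionError("invalid page for this process")
    if write and entry.read_only:
        raise PermissionError("write to read-only page")
    return entry.frame


text_page = PageTableEntry(frame=12, read_only=True)
print(check_access(text_page, write=False))  # → 12
```

Read-only entries are also what make sharing practical: two processes can map the same frame of program text without being able to modify it.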

Page table design

• How to deal with huge number of pages

• Hierarchical or 2-level page table

– In other words, we “page” the page table.

– Split up the address into 3 parts: “outer page”, “inner page”, and then the offset.

– The outer page number tells you where to find the appropriate part of the (inner) page table. See Figure 8.15.

– Not practical for 64-bit addressing! Why not?

• Hashed page table

– Look up virtual page number in a hash table.

– The contents of the cell might be a linked list: search for match.

• Inverted page table

– A table that stores only the physical pages, and then tells you which logical page maps to each. Any disadvantage?
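A rough sketch of the 2-level lookup described above, assuming a 32-bit address split into 10 outer bits, 10 inner bits, and a 12-bit offset. The split and the nested-dictionary tables are illustrative assumptions; the slides do not fix the field widths.

```python
OFFSET_BITS = 12   # assumed 4 KB pages
INNER_BITS = 10    # assumed width of the inner page number


def split(address: int):
    """Return (outer page, inner page, offset) for a 32-bit address."""
    offset = address & ((1 << OFFSET_BITS) - 1)
    inner = (address >> OFFSET_BITS) & ((1 << INNER_BITS) - 1)
    outer = address >> (OFFSET_BITS + INNER_BITS)
    return outer, inner, offset


def translate(address: int, outer_table: dict) -> int:
    """Walk outer table, then inner table, then reattach the offset."""
    outer, inner, offset = split(address)
    inner_table = outer_table[outer]   # which piece of the page table
    frame = inner_table[inner]         # which frame within that piece
    return (frame << OFFSET_BITS) | offset


outer_table = {1: {3: 0x2A}}           # hypothetical mapping
print(split(0x00403ABC))               # → (1, 3, 2748)
print(hex(translate(0x00403ABC, outer_table)))  # → 0x2aabc
```

Only the inner tables that are actually in use need to exist, which is the space saving over one flat table.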

Segmentation

• Alternative to paging

• More intuitive way to lay out main memory…

– Segments do not have to be contiguous in memory