P1-1: Intro Multivariate


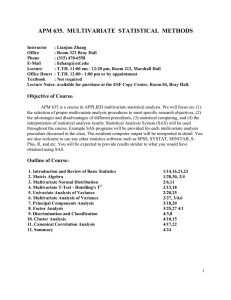

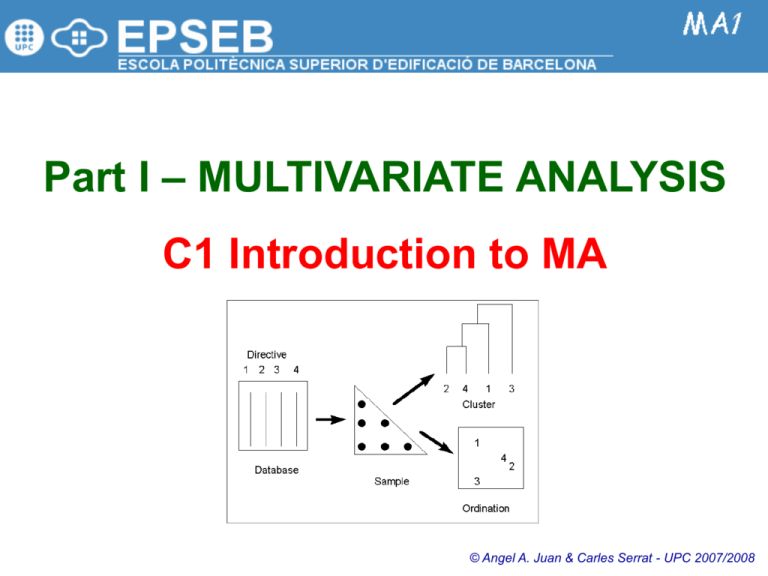

Part I – MULTIVARIATE ANALYSIS, C1: Introduction to MA

© Angel A. Juan & Carles Serrat - UPC 2007/2008

1.1.1: Why Multivariate Statistics?

Variables are roughly dichotomized into two major types. Independent variables (IVs) are the differing conditions to which you expose your subjects, or characteristics the subjects themselves bring into the research situation. IVs are usually considered predictor or causal variables because they predict or cause the dependent variables (DVs), which are the response or outcome variables.

Multivariate statistics are increasingly popular techniques for analyzing complicated data sets. They provide analysis when there are many IVs and/or many DVs, all correlated with one another to varying degrees.

Multivariate statistics are an extension of univariate and bivariate statistics. They are the general case, of which univariate and bivariate statistics are special cases.

In an experiment, the researcher controls the levels of at least one IV to which a subject is exposed. In nonexperimental (correlational or survey) research, the researcher does not manipulate the levels of the IV(s). Whether results can be attributed to a cause depends crucially on this experimental-nonexperimental distinction.

The trick in multivariate statistics is not the computation, which is easily done by computer. The trick is to select reliable and valid measurements, choose the appropriate program, use it correctly, and know how to interpret the output.

1.1.2: Some Definitions (1/2)

Continuous (interval or quantitative) variables are measured on a scale that changes values smoothly rather than in steps.
They take on any value within the range of the scale, and the size of the number reflects the amount of the variable (e.g., time, annual income, age, temperature, distance, grade point average).

Discrete (nominal, categorical, or qualitative) variables take on a finite and usually small number of values, and there is no smooth transition from one value or category to the next (e.g., time as displayed by a digital clock, continents, categories of religious affiliation, type of community).

Discrete variables composed of qualitatively different categories are sometimes analyzed after being converted into a number of dichotomous (two-level) variables, called dummy variables.

Samples are measured in order to make generalizations about populations. Ideally, samples are selected, usually by some random process, so that they represent the population of interest. In real life, however, populations are frequently best identified in terms of samples rather than vice versa: the population is the group from which you were able to sample randomly.

1.1.2: Some Definitions (2/2)

Descriptive statistics describe samples of subjects in terms of variables or combinations of variables. Inferential statistics test hypotheses about differences in populations on the basis of measurements made on samples of subjects.

Orthogonality is perfect nonassociation between variables. If two variables are orthogonal, knowing the value of one gives no clue as to the value of the other; the correlation between them is zero.

Multivariate analyses combine variables to do useful work, such as predicting scores or predicting group membership. In most cases the combination formed is a linear combination.

A general rule is to get the best solution with the fewest variables.
If there are too many variables relative to the sample size, the solution fits the sample extremely well but may not generalize to the population, a condition known as overfitting. A few reliable variables give a more meaningful solution (model) than a large number of unreliable variables.

The difference between the predicted and obtained values is known as the residual and is a measure of the error of prediction.
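The ideas of a linear combination of IVs and of a residual can be made concrete with a small sketch. This is a minimal illustration, not the method of the original course materials. It assumes that the weights of the linear combination are chosen by ordinary least squares, and it uses only the Python standard library: it solves the normal equations by Gauss-Jordan elimination rather than calling a statistics package. The function names `fit_linear_combination`, `predict`, and `residuals`, as well as the data, are hypothetical.

```python
from typing import List, Sequence


def fit_linear_combination(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Weights [b0, b1, ..., bk] so that y is predicted by b0 + b1*x1 + ... + bk*xk.

    Assumption: weights are chosen by ordinary least squares, found by solving
    the normal equations (A^T A) b = A^T y with Gauss-Jordan elimination, where
    A is X with a leading column of ones for the intercept.
    """
    A = [[1.0, *row] for row in X]  # design matrix with an intercept column
    p = len(A[0])
    # Augmented system [A^T A | A^T y].
    M = [
        [sum(r[i] * r[j] for r in A) for j in range(p)]
        + [sum(r[i] * yi for r, yi in zip(A, y))]
        for i in range(p)
    ]
    for col in range(p):
        # Partial pivoting: bring the row with the largest |entry| into position.
        pivot = max(range(col, p), key=lambda k: abs(M[k][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("IVs are linearly dependent; A^T A is singular")
        M[col], M[pivot] = M[pivot], M[col]
        lead = M[col][col]
        M[col] = [v / lead for v in M[col]]
        # Eliminate this column from every other row.
        for k in range(p):
            if k != col:
                factor = M[k][col]
                M[k] = [a - factor * c for a, c in zip(M[k], M[col])]
    return [M[i][p] for i in range(p)]


def predict(b: Sequence[float], row: Sequence[float]) -> float:
    """Predicted DV score: the linear combination b0 + b1*x1 + ... + bk*xk."""
    return b[0] + sum(bj * xj for bj, xj in zip(b[1:], row))


def residuals(b: Sequence[float], X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Residual for each subject: obtained value minus predicted value."""
    return [yi - predict(b, row) for row, yi in zip(X, y)]


if __name__ == "__main__":
    # Hypothetical data: two IVs (x1, x2) and one DV per subject.
    X = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 5), (6, 2)]
    y = [4.1, 6.9, 7.2, 9.8, 11.1, 14.0]
    b = fit_linear_combination(X, y)
    print("weights:", [round(v, 3) for v in b])
    print("residuals:", [round(v, 3) for v in residuals(b, X, y)])
```

When the model includes an intercept, least-squares residuals always sum to zero. Their size, for example the sum of squared residuals, is therefore the useful measure of prediction error, not their sum. Adding IVs can never increase the in-sample sum of squared residuals. A near-perfect fit to the sample is therefore not, by itself, evidence that the model will generalize to the population.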