
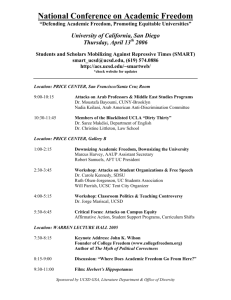

The OptIPuter Project: Eliminating Bandwidth as a Barrier to Collaboration and Analysis

DARPA Microsystems Technology Office
Arlington, VA
December 13, 2002

Dr. Larry Smarr
Director, California Institute for Telecommunications and Information Technologies
Harry E. Gruber Professor, Dept. of Computer Science and Engineering
Jacobs School of Engineering, UCSD

Abstract

The OptIPuter is a radical distributed visualization, teleimmersion, data mining, and computing architecture. The National Science Foundation recently awarded a six-campus research consortium a five-year large Information Technology Research grant to construct working prototypes of the OptIPuter on campus, regional, national, and international scales. The OptIPuter project is driven by applications leadership from two scientific communities, the National Science Foundation's EarthScope and the National Institutes of Health's Biomedical Informatics Research Network (BIRN), both of which are beginning to produce a flood of large 3D data objects (e.g., 3D brain images or SAR terrain datasets) which are stored in distributed federated data repositories. Essentially, the OptIPuter is a "virtual metacomputer" in which the individual "processors" are widely distributed Linux PC clusters; the "backplane" is provided by Internet Protocol (IP) delivered over multiple dedicated 1-10 Gbps optical wavelengths; and the "mass storage systems" are large distributed scientific data repositories, fed by scientific instruments as OptIPuter peripheral devices, operated in near real-time. Collaboration, visualization, and teleimmersion tools are provided on tiled mono or stereo super-high definition screens directly connected to the OptIPuter to enable distributed analysis and decision making.
The OptIPuter project aims at the re-optimization of the entire Grid stack of software abstractions, learning how, as George Gilder suggests, to "waste" bandwidth and storage in order to conserve increasingly "scarce" high-end computing and people time in this new world of inverted values.

The Move to Data-Intensive Science & Engineering: e-Science Community Resources
[Figure: ALMA, LHC, Sloan Digital Sky Survey, ATLAS]

A LambdaGrid Will Be the Backbone for an e-Science Network
[Figure: layered stack (Apps, Middleware, Clusters, Dynamically Allocated Lightpaths, Switch Fabrics, Physical Monitoring) alongside a Control Plane]
Source: Joe Mambretti, NU

Just Like in Computing: Different FLOPS for Different Folks
A -> Need Full Internet Routing
B -> Need VPN Services On/And Full Internet Routing
C -> Need Very Fat Pipes, Limited Multiple Virtual Organizations
[Figure: bandwidth consumed versus number of users, with regions A, B, and C and markers for DSL and GigE LAN]
Source: Cees de Laat

OptIPuter NSF Proposal Partnered with National Experts and Infrastructure
[Figure: network map with labels Asia Pacific, Vancouver, Seattle, Portland, CA*net4, Pacific Light Rail, Chicago (UIC, NU), San Francisco, SURFnet, CERN, PSC, NYC, NCSA, USC, Los Angeles, UCI, UCSD, SDSU, San Diego (SDSC), Atlanta, AMPATH]
Source: Tom DeFanti and Maxine Brown, UIC

The OptIPuter is an Experimental Network Research Project
• Driven by Large Neuroscience and Earth Science Data
• Multiple Lambdas Linking Clusters and Storage
– LambdaGrid Software Stack
– Integration with PC Clusters
– Interactive Collaborative Volume Visualization
– Lambda Peer to Peer Storage With Optimized Storewidth
– Enhance Security Mechanisms
– Rethink TCP/IP Protocols
• NSF Large Information Technology Research Proposal
– UCSD and UIC Lead Campuses, Larry Smarr PI
– USC, UCI, SDSU, NW Partnering Campuses
– Industrial Partners: IBM, Telcordia/SAIC, Chiaro Networks
– $13.5 Million Over Five Years

The OptIPuter Frontier Advisory Board
• Optical Component Research
– Shaya Fainman, UCSD
– Sadik Esener, UCSD
– Alan Willner, USC
– Frank Shi, UCI
– Joe Ford, UCSD
• Optical Networking Systems
– Dan Blumenthal, UCSB
– George Papen, UCSD
– Joe Mambretti, Northwestern University
– Steve Wallach, Chiaro Networks, Ltd.
– George Clapp, Telcordia/SAIC
– Tom West, CENIC
• Data and Storage
– Yannis Papakonstantinou, UCSD
– Paul Siegel, UCSD
• Clusters, Grid, and Computing
– Alan Benner, IBM eServer Group, Systems Architecture and Performance department
– Fran Berman, SDSC director
– Ian Foster, Argonne National Laboratory
• Generalists
– Franz Birkner, FXB Ventures and San Diego Telecom Council
– Forest Baskett, Venture Partner with New Enterprise Associates
– Mohan Trivedi, UCSD
First Meeting February 6-7, 2003

The First OptIPuter Workshop on Optical Switch Products
• Hosted by Calit2 @ UCSD
– October 25, 2002
– Organized by Maxine Brown (UIC) and Greg Hidley (UCSD)
– Full Day Open Presentations by Vendors and OptIPuter Team
• Examined Variety of Technology Offerings:
– OEOEO: TeraBurst Networks
– OEO: Chiaro Networks
– OOO: Glimmerglass, Calient, IMMI

OptIPuter Inspiration: Node of a 2009 PetaFLOPS Supercomputer
[Figure: node block diagram with labels highly interleaved DRAM (16 GB); 5 Terabits/s multi-lambda optical network; crossbar; coherence; 2nd-level caches, 8 MB each; 640 GB/s; 24 bytes wide, 240 GB/s links; VLIW/RISC cores, 40 GFLOPS at 10 GHz]
Updated From Steve Wallach, Supercomputing 2000 Keynote

Global Architecture of a 2009 COTS PetaFLOPS System
[Figure: 64 multi-die multi-processor boxes arranged around an all-optical switch; labels 10 meters = 50 nanosec delay; 128 Die/Box; 4 CPU/Die; I/O; LAN/WAN; Systems Become GRID Enabled]
Source: Steve Wallach, Supercomputing 2000 Keynote

Convergence of Networking Fabrics
• Today's Computer Room
– Router For External Communications (WAN)
– Ethernet Switch For Internal Networking (LAN)
– Fibre Channel For Internal Networked Storage (SAN)
• Tomorrow's Grid Room
– A Unified Architecture Of LAN/WAN/SAN Switching
– More Cost Effective
– One Network Element vs. Many
– One Sphere of Scalability
– ALL Resources are GRID Enabled
– Layer 3 Switching and Addressing Throughout
Source: Steve Wallach, Chiaro Networks

The OptIPuter Philosophy
Bandwidth is getting cheaper faster than storage. Storage is getting cheaper faster than computing. Exponentials are crossing.
"A global economy designed to waste transistors, power, and silicon area, and conserve bandwidth above all, is breaking apart and reorganizing itself to waste bandwidth and conserve power, silicon area, and transistors."
George Gilder, Telecosm (2000)

From SuperComputers to SuperNetworks: Changing the Grid Design Point
• The TeraGrid is Optimized for Computing
– 1024 IA-64 Nodes Linux Cluster
– Assume 1 GigE per Node = 1 Terabit/s I/O
– Grid Optical Connection 4x10Gig Lambdas = 40 Gigabit/s
– Optical Connections are Only 4% Bisection Bandwidth (40 Gb/s out of roughly 1,000 Gb/s)
• The OptIPuter is Optimized for Bandwidth
– 32 IA-64 Node Linux Cluster
– Assume 1 GigE per Processor = 32 Gigabit/s I/O
– Grid Optical Connection 4x10GigE = 40 Gigabit/s
– Optical Connections are Over 100% Bisection Bandwidth (40 Gb/s against 32 Gb/s, about 125%)

Data Intensive Scientific Applications Require Experimental Optical Networks
• Large Data Challenges in Neuro and Earth Sciences
– Each Data Object is 3D and Gigabytes
– Data are Generated and Stored in Distributed Archives
– Research is Carried Out on Federated Repository
• Requirements
– Computing Requirements: PC Clusters
– Communications: Dedicated Lambdas Over Fiber
– Data: Large Peer-to-Peer Lambda Attached Storage
– Visualization: Collaborative Volume Algorithms
• Response
– OptIPuter Research Project

The
Biomedical Informatics Research Network: a Multi-Scale Brain Imaging Federated Repository
BIRN Test-beds: Multiscale Mouse Models of Disease, Human Brain Morphometrics, and FIRST BIRN (10 site project for fMRIs of Schizophrenics)
NIH Plans to Expand to Other Organs and Many Laboratories

Microscopy Imaging of Neural Tissue
Image credits: Marketta Bobik; Francisco Capani & Eric Bushong
• Confocal image of a sagittal section through rat cortex triple labeled for glial fibrillary acidic protein (blue), neurofilaments (green) and actin (red)
• Projection of a series of optical sections through a Purkinje neuron revealing both the overall morphology (red) and the dendritic spines (green)
http://ncmir.ucsd.edu/gallery.html

Interactive Visual Analysis of Large Datasets: East Pacific Rise Seafloor Topography
Scripps Institution of Oceanography Visualization Center
http://siovizcenter.ucsd.edu/library/gallery/shoot1/index.shtml

Tidal Wave Threat Analysis Using Lake Tahoe Bathymetry
Graham Kent, SIO
Scripps Institution of Oceanography Visualization Center
http://siovizcenter.ucsd.edu/library/gallery/shoot1/index.shtml

SIO Uses the Visualization Center to Teach a Wide Variety of Graduate Classes
• Geodesy
• Gravity and Geomagnetism
• Planetary Physics
• Radar and Sonar Interferometry
• Seismology
• Tectonics
• Time Series Analysis
Deborah Kilb & Frank Vernon, SIO

Multiple Interactive Views of Seismic Epicenter and Topography Databases
http://siovizcenter.ucsd.edu/library/gallery/shoot2/index.shtml

NSF's EarthScope Rollout Over 14 Years Starting With Existing Broadband Stations

Metro Optically Linked Visualization Walls with Industrial Partners Set Stage for Federal Grant
• Driven by SensorNets Data
– Real Time Seismic
– Environmental Monitoring
– Distributed Collaboration
– Emergency Response
• Linked UCSD and SDSU
– Dedication March 4, 2002
[Figure: Linking Control Rooms at UCSD and SDSU over 44 Miles of Cox Fiber]
Partners: Cox, Panoram, SAIC, SGI, IBM, TeraBurst Networks, SD Telecom Council

Extending the Optical Grid to Oil
and Gas Research
• Society of Exploration Geophysicists in Salt Lake City, Oct. 6-11, 2002
• Optically Linked Visualization Walls
– 80 Miles of Fiber from BP Visualization Lab from Univ. of Colorado
– OC-48 Both Ways
– Interactive Collaborative Visualization of Seismic Cubes & Reservoir Models
– SGI, TeraBurst Industrial Partners
• Organized by SDSU and Cal-(IT)2
Source: Eric Frost, SDSU

The UCSD OptIPuter Deployment: The OptIPuter Experimental UCSD Campus Optical Network
[Figure: campus fiber map with labels To CENIC; Phase I, Fall 02; Phase II, 2003; Collocation point; Production Router (Planned); SDSC; SDSC Annex; JSOE (Engineering); CRCA (Arts); SOM (Medicine); Chemistry; Phys. Sci, Keck; Preuss High School; 6th College; Undergrad College; Node M; Collocation; Chiaro Router (Installed Nov 18, 2002); SIO (Earth Sciences); scale bar ½ Mile]
• Roughly $0.20 / Strand-Foot
• UCSD New Cost Sharing: Roughly $250k of Dedicated Fiber
Source: Phil Papadopoulos, SDSC; Greg Hidley, Cal-(IT)2

OptIPuter LambdaGrid Enabled by Chiaro Networking Router
www.calit2.net/news/2002/11-18-chiaro.html
[Figure: four sites (Medical Imaging and Microscopy; Chemistry, Engineering, Arts; San Diego Supercomputer Center; Scripps Institution of Oceanography), each behind a switch, connected through the Chiaro Enstara]
• Cluster – Disk
• Disk – Disk
• Viz – Disk
• DB – Cluster
• Cluster – Cluster
Image Source: Phil Papadopoulos, SDSC

We Chose OptIPuter for Fast Switching and Scalability
[Figure: switching technologies placed by port count (Large Port Count, Small Port Count) and speed (λ Switching Speeds in ms, Packet Switching Speeds in ns); technologies shown: Chiaro Optical Phased Array, MEMS, Electrical Fabrics, Bubble, Lithium Niobate]

Optical Phased Array: Multiple Parallel Optical Waveguides
[Figure: Input Optical Fiber feeding GaAs Waveguides WG #1 through WG #128, leading to Output Fibers]

Chiaro Has a Scalable, Fully Fault Tolerant Architecture
[Figure: four Network Proc. Line Cards around the Chiaro OPA Fabric with Global Arbitration; optical and electrical paths marked]
• Significant Technical Innovation
– OPA Fabric Enables Large Port Count
– Global Arbitration Provides Guaranteed Performance
– Fault-Tolerant Control System Provides Non-stop Performance
• Smart Line Cards
– ASICs With Programmable Network Processors
– Software Downloads For Features And Standards Evolution

Planned Chicago Metro Lambda Switching OptIPuter Laboratory
[Figure: StarLight at the center with links labeled Int'l GE, 10GE; Metro GE, 10GE; Nat'l GE, 10GE; 16x1 GE; 16x10 GE; 10x1 GE + 1x10GE; endpoints 16-Processor McKinley at University of Illinois at Chicago and 16-Processor Montecito/Chivano at Northwestern]
Internationals: Canada, Holland, CERN, GTRN, AmPATH, Asia…
Nationals: Illinois, California, Wisconsin, Indiana, Abilene, FedNets, Washington, Pennsylvania…
Source: Tom DeFanti, UIC

OptIPuter Software Research
• Near-term: Build Software To Support Advancement Of Applications With Traditional Models
– High Speed IP Protocol Variations (RBUDP, SABUL, …)
– Switch Control Software For DWDM Management And Dynamic Setup
– Distributed Configuration Management For OptIPuter Systems
• Long-Term Goals To Develop:
– System Model Which Supports Grid, Single System, And Multi-System Views
– Architectures Which Can: Harness High Speed DWDM; Present To The Applications And Protocols
– New Communication Abstractions Which Make Lambda-Based Communication Easily Usable
– New Communication & Data Services Which Exploit The Underlying Communication Abstractions
– Underlying Data Movement & Management Protocols Supporting These Services
– "Killer App" Drivers And Demonstrations Which Leverage This Capability Into The Wireless Internet
Source: Andrew Chien, UCSD

OptIPuter System Opportunities
• What's The Right View Of The System?
• Grid View
– Federation Of Systems
– Autonomously Managed, Separate Security, No Implied Trust Relationships, No Transitive Trust
– High Overhead: Administrative And Performance
– Web Services And Grid Services View
• Single System View
– More Static Federation Of Systems
– A Single Trusted Administrative Control, Implied Trust Relationships, Transitive Trust Relationships
– But This Is Not Quite A Closed System Box
– High Performance
– Securing A Basic System And Its Capabilities
– Communication, Data, Operating System Coordination Issues
• Multi-System View
– Can We Create Single System Views Out Of Grid System Views?
– Delivering The Performance; Boundaries On Trust
Source: Andrew Chien, UCSD

OptIPuter Communication Challenges
• Terminating A Terabit Link In An Application System
– --> Not A Router
• Parallel Termination With Commodity Components
– N 10GigE Links -> N Clustered Machines (Low Cost)
– Community-Based Communication
• What Are:
– Efficient Protocols to Move Data in Local, Metropolitan, Wide Area?
  – High Bandwidth, Low Startup
  – Dedicated Channels, Shared Endpoints
– Good Parallel Abstractions For Communication?
  – Coordinate Management And Use Of Endpoints And Channels
  – Convenient For Application, Storage System
– Secure Models For "Single System View"
  – Enabled By "Lambda" Private Channels
  – Exploit Flexible Dispersion Of Data And Computation
Source: Andrew Chien, UCSD

OptIPuter Storage Challenges
• DWDM Enables Uniform Performance View Of Storage
– How To Exploit Capability?
– Other Challenges Remain: Security, Coherence, Parallelism
– "Storage Is a Network Device"
• Grid View: High-Level Storage Federation
– GridFTP (Distributed File Sharing)
– NAS: File System Protocols
– Access-control and Security in Protocol
– Performance?
• Single-System View: Low-Level Storage Federation
– Secure Single System View
– SAN: Block Level Disk and Controller Protocols
– High Performance
– Security? Access Control?
• Secure Distributed Storage: Threshold Cryptography Based Distribution
– PASIS Style
– Distributed Shared Secrets
– Lambdas Minimize Performance Penalty
Source: Andrew Chien, UCSD

OptIPuter is Exploring Quanta as a High Performance Middleware
• Quanta is a high performance networking toolkit / API.
• Reliable Blast UDP:
– Assumes you are running over an over-provisioned or dedicated network.
– Excellent for photonic networks; don't try this on the commodity Internet.
– It is FAST!
– It is very predictable.
– We give you a prediction equation to predict performance. This is useful for the application.
– It is most suited for transferring very large payloads.
– At higher data rates the processor is 100% loaded, so dual processors are needed for your application to move data and do useful work at the same time.
Source: Jason Leigh, UIC

TeraVision Over WAN: Greece to Chicago Throughput
[Figure: throughput chart]
TCP Performance Over WAN Is Poor; Windows Performance Is Lower Than Linux; Synchronization Reduces Frame Rate.

Reliable Blast UDP (RBUDP)
• At iGrid 2002, all applications which were able to make the most effective use of the 10G link from Chicago to Amsterdam used UDP.
• RBUDP[1], SABUL[2] and Tsunami[3] are all similar protocols that use UDP for bulk data transfer, all of which are based on NETBLT (RFC 969).
• RBUDP has fewer memory copies and a prediction function to let applications know what kind of performance to expect.
– [1] J. Leigh, O. Yu, D. Schonfeld, R. Ansari, et al., Adaptive Networking for Tele-Immersion, Proc. Immersive Projection Technology/Eurographics Virtual Environments Workshop (IPT/EGVE), May 16-18, Stuttgart, Germany, 2001.
– [2] Sivakumar Harinath, Data Management Support for Distributed Data Mining of Large Datasets over High Speed Wide Area Networks, PhD thesis, University of Illinois at Chicago, 2002.
– [3] http://www.indiana.edu/~anml/anmlresearch.html
Source: Jason Leigh, UIC

Visualization at Near Photographic Resolution: The OptIPanel Version I
• 5x3 Grid of 1280x1024 Pixel LCD Panels Driven by 16-PC Cluster
• Resolution = 6400x3072 Pixels, or ~3000x1500 pixels in Autostereo
Source: Tom DeFanti, EVL, UIC

NTT Super High Definition Video (NTT 4Kx2K = 8 Megapixels) Over Internet2
• Applications: Astronomy, Mathematics, Entertainment
• SHD = 4xHDTV = 16xDVD
[Figure: link between StarLight in Chicago and USC in Los Angeles]
www.ntt.co.jp/news/news02e/0211/021113.html

The Continuum at EVL and TRECC: OptIPuter Amplified Work Environment
[Figure: room photo with labels Passive stereo display; AccessGrid; Digital white board; Tiled display]
Source: Tom DeFanti, Electronic Visualization Lab, UIC

OptIPuter Transforms Individual Laboratory Visualization, Computation, & Analysis Facilities
Fast polygon and volume rendering with stereographics + GeoWall = 3D APPLICATIONS:
• Earth Science
• Underground Earth Science
• Anatomy
• Neuroscience
Image credits: GeoFusion GeoMatrix Toolkit; Rob Mellors and Eric Frost, SDSU; SDSC Volume Explorer; Visible Human Project, NLM, Brooks AFB; Dave Nadeau, SDSC, BIRN
The Preuss School UCSD OptIPuter Facility

Providing a 21st Century Internet Grid Infrastructure
[Figure: Wireless Sensor Nets and Personal Communicators at the edge; Routers; Tightly Coupled Optically-Connected OptIPuter Core; Routers; Loosely Coupled Peer-to-Peer Computing & Storage]
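
The OptIPanel resolution quoted on the "Visualization at Near Photographic Resolution" slide follows from simple tiling arithmetic. As a rough check, the Python sketch below multiplies out the 5x3 grid of 1280x1024 panels and compares the resulting pixel count with the 4Kx2K = 8 megapixel figure from the NTT slide. The function name `tiled_resolution` and the assumption that panels butt together with no bezel gap are illustrative choices, not something the slides specify.

```python
def tiled_resolution(cols, rows, panel_w, panel_h):
    """Return (width, height) in pixels of a wall of identical panels.

    Assumes the panels butt together with no bezel compensation
    (an illustrative simplification, not stated in the slides).
    """
    return cols * panel_w, rows * panel_h


# OptIPanel Version I figures taken from the slide:
# a 5x3 grid of 1280x1024 LCD panels.
width, height = tiled_resolution(cols=5, rows=3, panel_w=1280, panel_h=1024)
total_megapixels = width * height / 1e6

# NTT SHD figure as quoted on the slide: 4Kx2K = 8 megapixels.
shd_megapixels = 8.0

print(f"OptIPanel wall: {width}x{height} ({total_megapixels:.1f} megapixels)")
print(f"Ratio to one SHD frame: {total_megapixels / shd_megapixels:.1f}x")
```

Under these assumptions the wall works out to 6400x3072 pixels, which matches the resolution stated on the slide; the comparison with SHD is only a pixel-count ratio and says nothing about frame rate or bandwidth.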